JOURNAL OF LATEX CLASS FILES, VOL. 14, NO. 8, AUGUST 2015

of techniques and more focused on application scenarios in the e-commerce domain. Unlike these surveys, our work tries to identify the challenges encountered in previous studies and extensively discusses existing solutions in a comprehensive and well-organized manner. Specifically, we categorize the methods for complex KBQA into two mainstream approaches based on their working mechanisms. We decompose the overall procedure of the two approaches into a series of functional modules and analyze the challenges in each module. This organization is particularly helpful for readers to understand the potential challenges and solutions for complex KBQA. It is worth noting that this survey is an extended version of the short survey [18]. Compared with that work, this survey has several major differences: (1) This paper introduces the traditional approaches, preliminary knowledge, and evaluation protocol of KBQA from multiple aspects and in a more concrete way, which goes far beyond the scope of the short survey; (2) Based on the two mainstream categories of KBQA methods, this paper provides a more comprehensive discussion of their similarities and differences regarding core modules and working mechanisms; (3) To give a better view of existing solutions to various challenges, this paper includes additional subsections summarizing the technical contributions of complex KBQA methods; (4) This paper gives a more thorough outlook on several promising research directions and unaddressed challenges.

The remainder of this survey is organized as follows. We first introduce the task formulation and preliminary knowledge about the task in Section 2. After that, we introduce multiple available datasets and how they are constructed in Section 3. Next, we introduce the two mainstream categories of methods for complex KBQA in Section 4. Then, following this categorization, we identify typical challenges and their solutions in Section 5. We then discuss several recent trends in Section 6. Finally, we conclude and summarize the contributions of this survey in Section 7.

2 BACKGROUND

In this section, we first briefly introduce KBs and the task formulation of KBQA, and then describe the traditional approaches for KBQA systems and their general evaluation protocol.

2.1 Knowledge Base

As mentioned earlier, a KB usually stores facts in the form of triples, which are designed to model relationships between entities. Take Freebase [19] as an example. Each entity in Freebase has a unique ID (referred to as a mid) and one or more types, and it uses properties from these types to provide facts [3]. For example, the Freebase entity for the person Jeff Probst has the mid “m.02pbp9”¹ and the type “people.marriage”, which allows the entity to have a fact with “people.marriage.spouse” as the property and “m.0j6d0bg” (psychotherapist Shelley Wright) as the value. Freebase incorporates compound value types (CVTs) to represent n-ary (n > 2) relational facts [1] such as “Jeff Probst was married to Shelley Wright in 1996”, where three entities, namely “Jeff Probst”, “Shelley Wright” and “1996”, are involved in a single statement. Unlike entities, which can be aligned with real-world objects or concepts, CVTs are artificially created to represent such n-ary facts.

1. PREFIX: <http://rdf.freebase.com/ns/>
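To make the CVT idea concrete, here is a minimal Python sketch that stores the marriage fact above as plain triples and recovers the n-ary record by collecting the outgoing edges of the CVT node. The mids and the property “people.marriage.spouse” come from the text; the CVT id `cvt_001` and the properties `people.person.spouse_s` and `people.marriage.from` are assumptions chosen for illustration, not a claim about Freebase's exact schema.

```python
# Toy Freebase-style triples. "m.02pbp9" (Jeff Probst), "m.0j6d0bg"
# (Shelley Wright) and "people.marriage.spouse" come from the text; the CVT
# id "cvt_001" and the properties "people.person.spouse_s" and
# "people.marriage.from" are hypothetical, used only for illustration.
TRIPLES = [
    ("m.02pbp9", "people.person.spouse_s", "cvt_001"),
    ("cvt_001", "people.marriage.spouse", "m.02pbp9"),
    ("cvt_001", "people.marriage.spouse", "m.0j6d0bg"),
    ("cvt_001", "people.marriage.from", "1996"),
]


def expand_cvt(triples, cvt):
    """Collect every outgoing edge of a CVT node into one n-ary record."""
    record = {}
    for subj, rel, obj in triples:
        if subj == cvt:
            # A property may carry several values (e.g. both spouses).
            record.setdefault(rel, []).append(obj)
    return record


if __name__ == "__main__":
    print(expand_cvt(TRIPLES, "cvt_001"))
```

Because both spouses and the date hang off the same CVT node, reading that node back yields the whole statement at once, which a single binary triple cannot express.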

In practice, large-scale open KBs (e.g., Freebase and DBPedia) are published in the Resource Description Framework (RDF) format to support structured queries [3], [20]. To facilitate access to such large-scale KBs, the query language SPARQL is frequently used to retrieve and manipulate the data stored in them [3]. Figure 2 shows an executable SPARQL query that retrieves the spouses of the entity “Jeff Probst”.
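Conceptually, a SPARQL query of this kind is a set of triple patterns containing variables, all of which must match the KB simultaneously. The Python sketch below is a toy evaluator for such conjunctive patterns over an in-memory triple list. The CVT id `cvt_001` and all properties other than `people.marriage.spouse` are hypothetical. It is not a SPARQL engine: it ignores OPTIONAL, UNION, and most of the language, and the query in the comment only approximates the general shape of such a query, not necessarily the one in Figure 2.

```python
# Toy KB; the CVT id and the properties other than people.marriage.spouse
# are hypothetical, chosen only to illustrate pattern matching.
TRIPLES = [
    ("m.02pbp9", "people.person.spouse_s", "cvt_001"),
    ("cvt_001", "people.marriage.spouse", "m.02pbp9"),
    ("cvt_001", "people.marriage.spouse", "m.0j6d0bg"),
    ("cvt_001", "people.marriage.from", "1996"),
]


def is_var(term):
    """SPARQL-style variables start with '?'."""
    return term.startswith("?")


def match(pattern, triple, binding):
    """Extend `binding` so that `pattern` equals `triple`, or return None."""
    new = dict(binding)
    for p, t in zip(pattern, triple):
        if is_var(p):
            # setdefault binds a fresh variable; a conflicting value fails.
            if new.setdefault(p, t) != t:
                return None
        elif p != t:
            return None
    return new


def evaluate(patterns, triples):
    """Return all variable bindings that satisfy every pattern (a join)."""
    bindings = [{}]
    for pattern in patterns:
        extended = []
        for b in bindings:
            for t in triples:
                nb = match(pattern, t, b)
                if nb is not None:
                    extended.append(nb)
        bindings = extended
    return bindings


# Roughly the shape of:
#   SELECT DISTINCT ?x WHERE {
#     ns:m.02pbp9 ns:people.person.spouse_s ?y .
#     ?y ns:people.marriage.spouse ?x .
#     FILTER (?x != ns:m.02pbp9)
#   }
SPOUSE_QUERY = [
    ("m.02pbp9", "people.person.spouse_s", "?y"),
    ("?y", "people.marriage.spouse", "?x"),
]
spouses = {b["?x"] for b in evaluate(SPOUSE_QUERY, TRIPLES)
           if b["?x"] != "m.02pbp9"}  # plays the role of the FILTER clause
```

The final filter matters here: in this toy data the CVT lists both spouses, so the topic entity itself must be excluded from the answers.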

Different KBs are designed for different purposes and have varying properties owing to their different schema designs. For example, Freebase was created mainly by community members and harvested from many sources, including Wikipedia. YAGO [21] takes Wikipedia and WordNet [22] as its knowledge sources and covers a taxonomy of more general concepts. WikiData [3] is a multilingual KB that integrates multiple KB resources with high coverage and quality. A more comprehensive comparison of open KBs can be found in [23].

2.2 Task Formulation

Formally, we denote a KB as $\mathcal{G} = \{\langle e, r, e' \rangle \mid e, e' \in \mathcal{E}, r \in \mathcal{R}\}$, where $\langle e, r, e' \rangle$ denotes that relation $r$ exists between subject $e$ and object $e'$, and $\mathcal{E}$ and $\mathcal{R}$ denote the entity set and relation set, respectively.
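The definition can be mirrored directly in code. The Python sketch below, assuming a hypothetical three-fact KB with invented entity and relation names, represents the KB G as a set of triples and derives the smallest entity set E and relation set R consistent with it.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """One fact <e, r, e'>: relation r holds between subject e and object e'."""
    subj: str
    rel: str
    obj: str


def entity_and_relation_sets(kb):
    """Derive the smallest entity set E and relation set R implied by KB G."""
    entities = {t.subj for t in kb} | {t.obj for t in kb}
    relations = {t.rel for t in kb}
    return entities, relations


# A hypothetical three-fact KB, used only for illustration.
G = frozenset({
    Triple("Jeff_Probst", "spouse", "Shelley_Wright"),
    Triple("Jeff_Probst", "profession", "TV_host"),
    Triple("Shelley_Wright", "profession", "Psychotherapist"),
})
E, R = entity_and_relation_sets(G)
```

Every triple in G then satisfies the membership conditions of the definition by construction.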

Given the available KB $\mathcal{G}$, this task aims to answer a natural language question $q = \{w_1, w_2, \ldots, w_m\}$, given as a sequence of tokens; we denote the predicted answers as $\tilde{\mathcal{A}}_q$. Specifically, existing studies assume that the correct answers $\mathcal{A}_q$ are drawn from the entity set $\mathcal{E}$ of the KB. Unlike the answers of the simple KBQA task, which are entities directly connected to the topic entity (with $\mathcal{A}_q \subset \mathcal{E}$), the answers of the complex KBQA task are entities multiple hops away from the topic entities, or even aggregations over such entities. Generally, a KBQA system is trained on a dataset $\mathcal{D} = \{(q, \mathcal{A}_q)\}$.
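The difference between simple and complex questions is easy to see on a toy KB. In the hypothetical Python sketch below (all entity and relation names are invented), a simple question needs one hop from the topic entity, a multi-hop question chains two hops, and an aggregation question returns a count that is not itself an entity in E. The small list `D` only illustrates the (q, A_q) pair format of a training dataset.

```python
from collections import defaultdict

# Hypothetical toy KB of (subject, relation, object) triples.
TRIPLES = [
    ("Film_A", "directed_by", "Director_X"),
    ("Film_B", "directed_by", "Director_X"),
    ("Director_X", "born_in", "City_Y"),
    ("Film_A", "starring", "Actor_Z"),
]

# Invented training pairs (q, A_q) in the format of the dataset D.
D = [
    ("Who directed Film_A?", {"Director_X"}),
    ("Where was the director of Film_A born?", {"City_Y"}),
]


def build_index(triples):
    """Map (subject, relation) to the list of its objects."""
    index = defaultdict(list)
    for s, r, o in triples:
        index[(s, r)].append(o)
    return index


def follow(index, entities, relation):
    """One hop: every object reachable from `entities` via `relation`."""
    return {o for e in entities for o in index.get((e, relation), [])}


def count_subjects(triples, relation, obj):
    """Aggregation: number of distinct subjects linked to `obj` by `relation`."""
    return len({s for s, r, o in triples if r == relation and o == obj})


INDEX = build_index(TRIPLES)
# Simple question: one hop from the topic entity Film_A.
simple = follow(INDEX, {"Film_A"}, "directed_by")
# Complex question: two hops, Film_A -> director -> birthplace.
multi_hop = follow(INDEX, follow(INDEX, {"Film_A"}, "directed_by"), "born_in")
# Complex question with aggregation: "How many films did Director_X direct?"
film_count = count_subjects(TRIPLES, "directed_by", "Director_X")
```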

2.3 Traditional Approaches

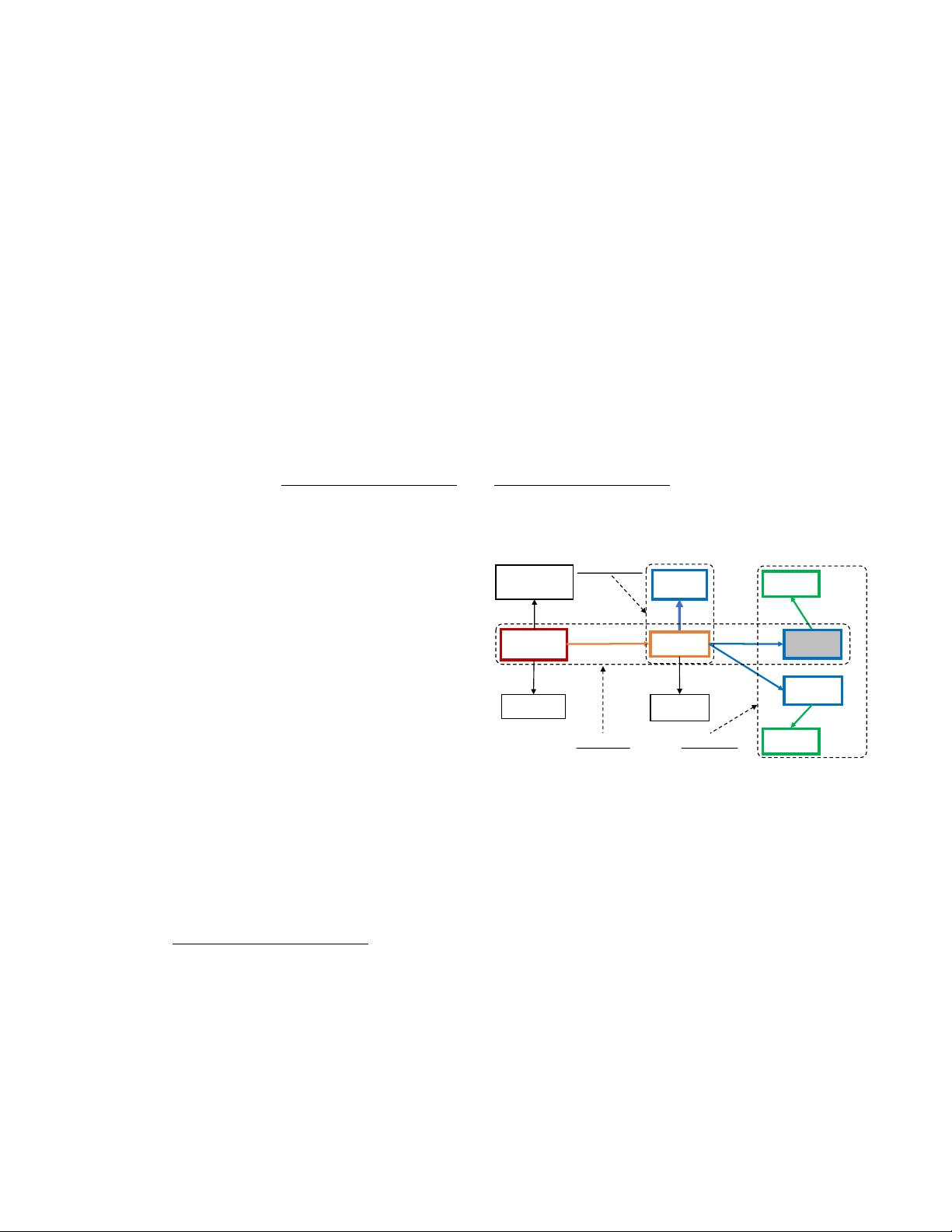

Typical KBQA systems for simple questions follow the pipeline framework displayed in Figure 2. The first step is to identify the topic entity $e_q$ of question $q$, which links the question to its related entities in the KB. In this step, named entity recognition, disambiguation, and linking are performed, usually with off-the-shelf entity linking tools such as S-MART [24], DBpedia Spotlight [25], and AIDA [26]. Subsequently, an answer prediction module takes $q$ as input and predicts the answers $\tilde{\mathcal{A}}_q$.
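The two-stage pipeline can be sketched in a few lines of Python. Everything here is a hypothetical stand-in: a lexicon lookup replaces a real entity linker such as those cited above, and a word-overlap score between the question and relation names replaces a trained answer prediction module. Candidates are restricted to the one-hop neighborhood of the topic entity, as is usual for simple questions. The relation names and the object `tv_presenter` are invented for illustration.

```python
import re

# Hypothetical surface-form lexicon standing in for a real entity linker.
LEXICON = {"jeff probst": "m.02pbp9"}

# Toy KB (subject, relation, object); relation names are illustrative only.
TRIPLES = [
    ("m.02pbp9", "people.person.spouse", "m.0j6d0bg"),
    ("m.02pbp9", "people.person.profession", "tv_presenter"),
    ("m.0j6d0bg", "people.person.profession", "psychotherapist"),
]


def link_topic_entity(question):
    """Step 1: naive entity linking, preferring the longest matched name."""
    q = question.lower()
    matches = [name for name in LEXICON if name in q]
    if not matches:
        return None
    return LEXICON[max(matches, key=len)]


def tokens(text):
    """Lower-cased alphabetic tokens, so 'people.person.spouse' -> 3 words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def predict_answers(question, topic, triples):
    """Step 2: rank one-hop neighbours of `topic` by question/relation
    word overlap (a hypothetical scorer) and keep the top-scoring ones."""
    q_tokens = tokens(question)
    best_score, answers = 0, set()
    for s, r, o in triples:
        if s != topic:
            continue  # only the topic entity's neighbourhood is considered
        score = len(q_tokens & tokens(r))
        if score > best_score:
            best_score, answers = score, {o}
        elif score == best_score and score > 0:
            answers.add(o)
    return answers


def answer(question):
    """Full pipeline: entity linking followed by answer prediction."""
    topic = link_topic_entity(question)
    return set() if topic is None else predict_answers(question, topic, TRIPLES)
```

Swapping either function for a learned model leaves the overall structure unchanged, which is what makes the pipeline modular.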

For the simple KBQA task, the answers are usually located within the neighborhood of the topic entities, and various features and methods have been proposed to rank these candidate entities. Since the answer prediction module usually takes a question together with its detected topic entities as input and outputs the predicted answers, existing relation extraction tools such as OpenNRE [27] can also be used directly as the answer prediction module; however, they may suffer from domain inconsistency and thus perform poorly. Therefore, this module is usually designed and trained using questions and their ground-truth answers. Most of the effort
