Curriculum Learning for Reinforcement Learning Domains:

A Framework and Survey

Sanmit Narvekar sanmit@cs.utexas.edu

Department of Computer Science

University of Texas at Austin

Austin, TX 78712, USA

Bei Peng bei.peng@cs.ox.ac.uk

Department of Computer Science

University of Oxford

Matteo Leonetti m.leonetti@leeds.ac.uk

School of Computing

University of Leeds

Jivko Sinapov jivko.sinapov@tufts.edu

Department of Computer Science

Tufts University

Matthew E. Taylor matthew.e.taylor@wsu.edu

School of Electrical Engineering and Computer Science

Washington State University

Peter Stone pstone@cs.utexas.edu

Department of Computer Science

University of Texas at Austin

Abstract

Reinforcement learning (RL) is a popular paradigm for addressing sequential decision tasks

in which the agent has only limited environmental feedback. Despite many advances over

the past three decades, learning in many domains still requires a large amount of

interaction with the environment, which can be prohibitively expensive in realistic scenarios.

To address this problem, transfer learning has been applied to reinforcement learning such

that experience gained in one task can be leveraged when starting to learn the next, harder

task. More recently, several lines of research have explored how tasks, or data samples

themselves, can be sequenced into a curriculum for the purpose of learning a problem that

may otherwise be too difficult to learn from scratch. In this article, we present a framework

for curriculum learning (CL) in reinforcement learning, and use it to survey and classify

existing CL methods in terms of their assumptions, capabilities, and goals. Finally, we

use our framework to find open problems and suggest directions for future RL curriculum

learning research.

Keywords: Curriculum Learning, Reinforcement Learning, Transfer Learning

1. Introduction

Curricula are ubiquitous throughout early human development, formal education, and

lifelong learning all the way to adulthood. Whether learning to play a sport, or learning to

become an expert in mathematics, the training process is organized and structured so as

© 2020 Sanmit Narvekar, Bei Peng, Matteo Leonetti, Jivko Sinapov, Matthew E. Taylor, and Peter Stone.

License: CC-BY 4.0, see https://creativecommons.org/licenses/by/4.0/.

arXiv:2003.04960v1 [cs.LG] 10 Mar 2020

Narvekar, Peng, Leonetti, Sinapov, Taylor, and Stone

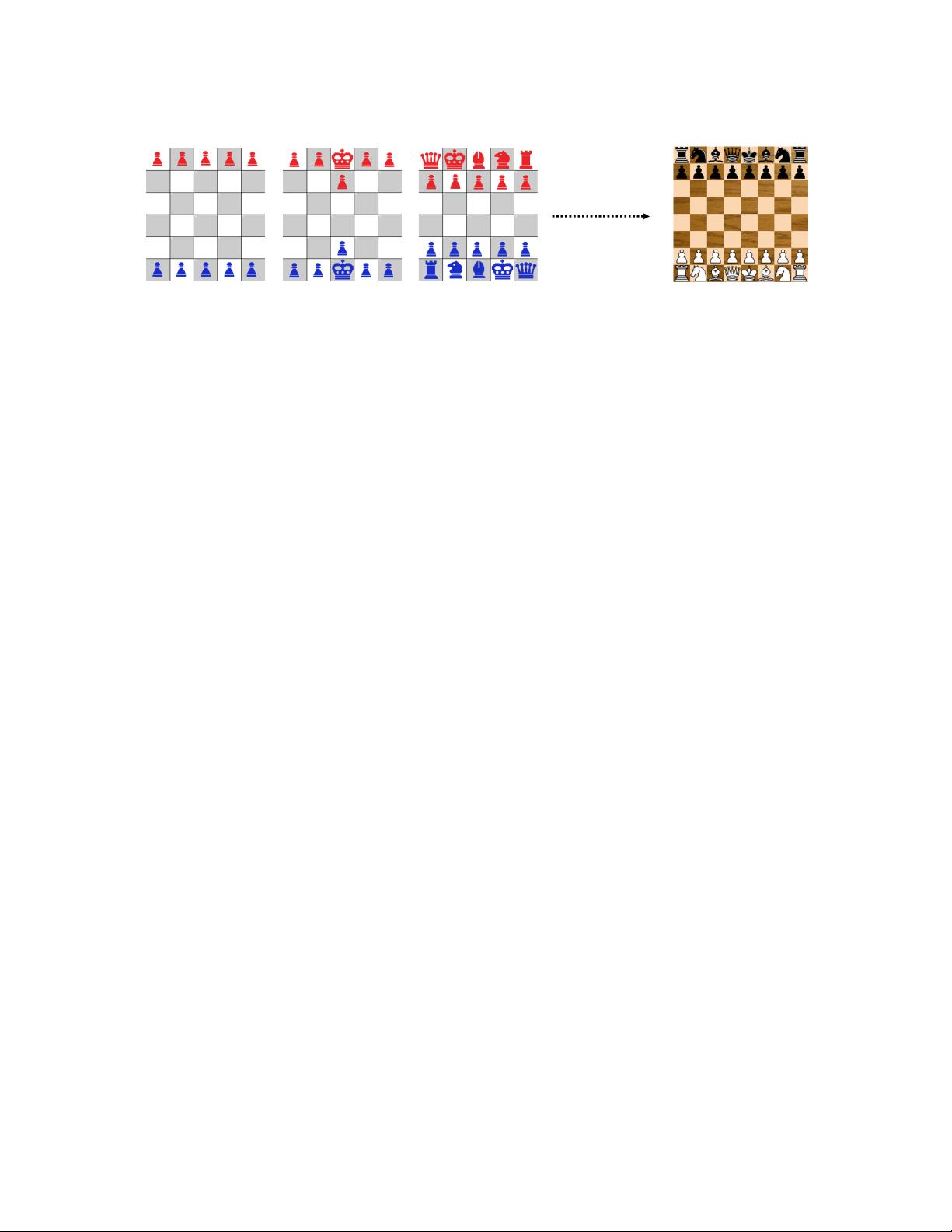

Figure 1: Different subgames in the game of Quick Chess, which are used to form a

curriculum for learning the full game of Chess.

to present new concepts and tasks in a sequence that leverages what has previously been

learned. In a variety of human learning domains, the quality of the curricula has been shown

to be crucial in achieving success. Curricula are also present in animal training, where the

practice is commonly referred to as shaping (Skinner, 1958; Peterson, 2004).

As a motivating example, consider the game of Quick Chess (shown in Figure 1), a game

designed to introduce children to the full game of chess, by using a sequence of progressively

more difficult “subgames.” For example, the first subgame is played on a 5x5 board with

only pawns, where the player learns how pawns move, get promoted, and take other pieces.

Next, in the second subgame, the king piece is added, which introduces a new objective:

keeping the king alive. In each successive subgame, new elements are introduced (such as

new pieces, a larger board, or different configurations) that require learning new skills and

building upon knowledge learned in previous games. The final game is the full game of

chess.

The idea of using such curricula to train artificial agents dates back to the early 1990s,

where the first known applications were to grammar learning (Elman, 1993; Rohde and

Plaut, 1999), robotics control problems (Sanger, 1994), and classification problems (Bengio

et al., 2009). Results showed that the order of training examples matters and that

generally, incremental learning algorithms can benefit when training examples are ordered in

increasing difficulty. The main conclusion from these and subsequent works in curriculum

learning is that starting small and simple and gradually increasing the difficulty of the task

can lead to faster convergence as well as increased performance on a task.

Recently, research in reinforcement learning (RL) (Sutton and Barto, 1998) has been

exploring how agents can leverage transfer learning (Lazaric et al., 2008; Taylor and Stone,

2009) to re-use knowledge learned from a source task when attempting to learn a subsequent

target task. As knowledge is transferred from one task to the next, the sequence of tasks

induces a curriculum, which has been shown to improve performance on a difficult problem

and/or reduce the time it takes to converge to an optimal policy.

Many groups have been studying how such a curriculum can be generated automatically

to train reinforcement learning agents, and many approaches to do so now exist. However,

what exactly constitutes a curriculum and what precisely qualifies an approach as being

an example of curriculum learning is not clearly and consistently defined in the literature.

There are many ways of defining a curriculum: for example, the most common way is as an

ordering of tasks. At a more fundamental level, a curriculum can also be defined as an

ordering of individual experience samples. In addition, a curriculum does not necessarily have

to be a simple linear sequence. One task can build upon knowledge gained from multiple

source tasks, just as courses in human education can build off of multiple prerequisites.

Methods for curriculum generation have separately been introduced for areas such as

robotics, multi-agent systems, human-computer and human-robot interaction, and

intrinsically motivated learning. This body of work, however, is largely disconnected. In addition,

many landmark results in reinforcement learning, from TD-Gammon (Tesauro, 1995) to

AlphaGo (Silver et al., 2016) have implicitly used curricula to guide training. In some

domains, researchers have successfully used methodologies that align with our definition of

curriculum learning without explicitly describing it that way (e.g., self-play). Given the

many landmark results that have utilized ideas from curriculum learning, we think it is

very likely that future landmark results will also rely on curricula, perhaps more so than

researchers currently expect. Thus, having a common basis for discussion of ideas in this

area is likely to be useful for future AI challenges.

Overview

The goal of this article is to provide a systematic overview of curriculum learning (CL) in

RL settings and to provide an over-arching framework to formalize the problem. We aim to

define classification criteria for computational models of curriculum learning for RL agents,

that describe the curriculum learning research landscape over a broad range of frameworks

and settings. The questions we address in this survey include:

• What is a curriculum, and how can it be represented for reinforcement learning tasks?

At the most basic level, a curriculum can be thought of as an ordering over experience

samples. However, it can also be represented at the task level, where a set of tasks

can be organized into a sequence or a directed acyclic graph that specifies the order

in which they should be learned. We address this question in detail in Section 3.1.

• What is the problem of curriculum learning, and how should we evaluate curriculum

learning methods? We formalize this problem in Section 3.2 as consisting of three

parts, and extend metrics commonly used in transfer learning (introduced in Section

2) to the curriculum setting to facilitate evaluation in Section 3.3.

• How can tasks be constructed for use in a curriculum? The quality of a curriculum

is dependent on the quality of tasks available to select from. Tasks can either be

generated in advance, or dynamically and on-the-fly with the curriculum. Section 4.1

surveys works that examine how to automatically generate good intermediate tasks.

• How can tasks or experience samples be sequenced into a curriculum? In practice,

most curricula for RL agents have been manually generated for each problem.

However, in recent years, automated methods for generating curricula have been proposed.

Each makes different assumptions about the tasks and transfer methodology used. In

Section 4.2, we survey these different automated approaches, as well as describe how

humans have approached curriculum generation for RL agents.

• How can an agent transfer knowledge between tasks as it learns through a curriculum?

Curriculum learning approaches make use of transfer learning methods when

moving from one task to another. Since the tasks in the curriculum can vary in

state/action space, transition function, or reward function, it’s important to transfer

relevant and reusable information from each task, and effectively combine information

from multiple tasks. Methods to do this are enumerated and discussed in Section 4.3.
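
The first question above notes that a task-level curriculum can be a sequence or a directed acyclic graph. As a minimal sketch of that representation (not the authors' implementation), the snippet below stores each task's source tasks in a dictionary and derives one valid training order with Python's standard `graphlib`. The task names are hypothetical, loosely inspired by the Quick Chess example.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks: each key maps to the set of source tasks it builds on.
prerequisites = {
    "pawns_5x5": set(),
    "pawns_and_king_5x5": {"pawns_5x5"},
    "rooks_5x5": {"pawns_5x5"},
    "full_chess": {"pawns_and_king_5x5", "rooks_5x5"},
}


def linearize(curriculum_dag):
    """Return one training order in which every task follows its source tasks."""
    # TopologicalSorter raises graphlib.CycleError if the graph is not acyclic.
    return list(TopologicalSorter(curriculum_dag).static_order())


order = linearize(prerequisites)
print(order)
```

A linear sequence is the special case in which every task has at most one source task, so the same structure covers both representations.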

The next section provides background in reinforcement learning and transfer learning. In

Section 3, we define the curriculum learning problem, evaluation metrics, and the dimensions

along which we will classify curriculum learning approaches. Section 4, which comprises

the core of the survey, provides a detailed overview of the existing state of the art in

curriculum learning in RL, with each subsection considering a different component of the

overall problem. Section 5 discusses paradigms related to curriculum learning for RL, such

as curriculum learning for supervised learning and for human education. Finally, in Section

6, we identify gaps in the existing literature, outline the limitations of existing CL methods

and frameworks, and provide a list of open problems.

2. Background

In this section, we provide background on Reinforcement Learning (RL) and Transfer

Learning (TL).

2.1 Reinforcement Learning

Reinforcement learning considers the problem of how an agent should act in its environment

over time, so as to maximize some scalar reward signal. We can formalize the interaction

of an agent with its environment (also called a task) as a Markov Decision Process (MDP).

In this article, we restrict our attention to episodic MDPs (see footnote 1):

Definition 1 An episodic MDP $M$ is a 4-tuple $(S, A, p, r)$, where $S$ is the set of states, $A$ is

the set of actions, $p(s'|s, a)$ is a transition function that gives the probability of transitioning

to state $s'$ after taking action $a$ in state $s$, and $r(s, a, s')$ is a reward function that gives the

immediate reward for taking action $a$ in state $s$ and transitioning to state $s'$.
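
To make the 4-tuple concrete, here is a minimal sketch of one way to hold $(S, A, p, r)$ in code. The class name `EpisodicMDP`, the explicit `terminal` set, and the toy chain task built by `make_chain` are all assumptions added for illustration; Definition 1 itself does not prescribe them.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Hashable, Tuple

State = Hashable
Action = Hashable


@dataclass(frozen=True)
class EpisodicMDP:
    states: Tuple[State, ...]                          # S
    actions: Tuple[Action, ...]                        # A
    p: Callable[[State, Action], Dict[State, float]]   # p(s, a) -> {s': probability}
    r: Callable[[State, Action, State], float]         # r(s, a, s')
    terminal: frozenset                                # assumption: states that end an episode


def make_chain(n: int = 4, slip: float = 0.1) -> EpisodicMDP:
    """Hypothetical chain task: walk from state 0 to the goal state n - 1."""
    goal = n - 1

    def p(s, a):
        move = 1 if a == "right" else -1
        target = min(max(s + move, 0), goal)
        if target == s:  # bumping into a wall leaves the state unchanged
            return {s: 1.0}
        return {target: 1.0 - slip, s: slip}

    def r(s, a, s_next):
        return 1.0 if s_next == goal and s != goal else 0.0

    return EpisodicMDP(tuple(range(n)), ("left", "right"), p, r, frozenset({goal}))


mdp = make_chain()
print(mdp.p(2, "right"))
```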

We consider time in discrete time steps. At each time step $t$, the agent observes its state

and chooses an action according to its policy $\pi(a|s)$. The goal of the agent is to learn an

optimal policy $\pi^*$, which maximizes the expected return $G_t$ (the cumulative sum of rewards

$R$) until the episode ends at timestep $T$:

$$G_t = \sum_{i=1}^{T-t} R_{t+i} \tag{1}$$
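
As a small illustration of Equation 1, the sketch below computes the undiscounted return for every time step of one finished episode, using the equivalent backward recursion $G_t = R_{t+1} + G_{t+1}$ with $G_T = 0$. The function name `returns` and the list-based reward format are assumptions made for this example.

```python
def returns(rewards):
    """Undiscounted returns for one episode.

    rewards[k] holds R_{k+1}, so len(rewards) == T.
    The result has T + 1 entries, where result[t] is G_t and result[T] is 0.
    """
    G = [0.0] * (len(rewards) + 1)
    # Sweep backwards so each G_t reuses the already computed G_{t+1}.
    for t in range(len(rewards) - 1, -1, -1):
        G[t] = rewards[t] + G[t + 1]
    return G


print(returns([1.0, 2.0, 3.0]))  # [6.0, 5.0, 3.0, 0.0]
```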

Footnote 1: In continuing tasks, a discount factor $\gamma$ is often included. For simplicity, and due to the fact that

tasks typically terminate in curriculum learning settings, we present the undiscounted case. But unless

otherwise noted, our definitions and discussions can easily apply to the discounted case as well.

There are three main classes of methods to learn $\pi^*$: value function approaches, policy

search approaches, and actor-critic methods. In value function approaches, a value $v_\pi(s)$ is

first learned for each state $s$, representing the expected return achievable from $s$ by following

policy $\pi$. Through policy evaluation and policy improvement, this value function is used

to derive a policy better than $\pi$, until convergence towards an optimal policy. Using a

value function in this process requires a model of the reward and transition functions of

the environment. If the model is not known, one option is to learn an action-value function

instead, $q_\pi(s, a)$, which gives the expected return for taking action $a$ in state $s$ and following

$\pi$ after:

$$q_\pi(s, a) = \sum_{s'} p(s'|s, a)\,[r(s, a, s') + q_\pi(s', a')], \quad \text{where } a' \sim \pi(\cdot|s') \tag{2}$$
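
The fixed point of Equation 2 can be found numerically when the model is available. The sketch below repeatedly applies the right-hand side of Equation 2 as an update, replacing the sampled $a'$ with its expectation under $\pi$, on a hypothetical three-state chain with reward $-1$ per step and a uniformly random policy. All names (`evaluate_q`, `p`, `r`, `pi`) and the toy task are assumptions for illustration only.

```python
STATES = (0, 1, 2)
ACTIONS = ("left", "right")
TERMINAL = {2}


def p(s, a):
    """Deterministic toy dynamics on the chain 0 - 1 - 2."""
    s_next = max(s - 1, 0) if a == "left" else s + 1
    return {s_next: 1.0}


def r(s, a, s_next):
    return -1.0  # every step costs one unit


def pi(a, s):
    return 0.5  # uniformly random policy over two actions


def evaluate_q(tol=1e-10, max_sweeps=10_000):
    """Iterate the right-hand side of Equation 2 until q stops changing."""
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}  # q stays 0 at terminal states
    for _ in range(max_sweeps):
        delta = 0.0
        for s in STATES:
            if s in TERMINAL:
                continue
            for a in ACTIONS:
                new = sum(
                    prob * (r(s, a, s2) + sum(pi(a2, s2) * q[(s2, a2)] for a2 in ACTIONS))
                    for s2, prob in p(s, a).items()
                )
                delta = max(delta, abs(new - q[(s, a)]))
                q[(s, a)] = new
        if delta < tol:
            break
    return q


q_pi = evaluate_q()
print(q_pi)
```

Because the task is undiscounted, this iteration only converges when the policy reaches a terminal state with probability one, which holds for the random walk used here.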

The action-value function can be iteratively improved towards the optimal action-value

function $q^*$ with on-policy methods such as SARSA (Sutton and Barto, 1998). The optimal

action-value function can also be learned directly with off-policy methods such as Q-learning

(Watkins and Dayan, 1992). An optimal policy can then be obtained by choosing action

$\operatorname{argmax}_a q^*(s, a)$ in each state. If the state space is large or continuous, the (action-)value

function can instead be approximated as a function of state features $\phi(s)$ and a weight

vector $\theta$.
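
For readers who want to see the off-policy case in code, here is a minimal tabular Q-learning sketch in the undiscounted episodic setting used in this section. The three-state chain task, the hyperparameters, and every function name are assumptions chosen for illustration; the greedy policy is read off at the end with an argmax over actions, as described above.

```python
import random

ACTIONS = ("left", "right")
GOAL = 2  # terminal state of a hypothetical chain 0 - 1 - 2


def step(s, a):
    """Deterministic toy dynamics with reward -1 per step."""
    s_next = max(s - 1, 0) if a == "left" else s + 1
    return s_next, -1.0, s_next == GOAL


def greedy(q, s):
    return max(ACTIONS, key=lambda a: q[(s, a)])


def q_learning(episodes=500, alpha=0.5, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(GOAL + 1) for a in ACTIONS}
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # Epsilon-greedy behaviour policy.
            a = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(q, s)
            s_next, reward, done = step(s, a)
            # Off-policy target: bootstrap from the best next action (no discount).
            best_next = 0.0 if done else max(q[(s_next, b)] for b in ACTIONS)
            q[(s, a)] += alpha * (reward + best_next - q[(s, a)])
            s = s_next
    return q


q = q_learning()
policy = {s: greedy(q, s) for s in range(GOAL)}
print(policy)
```

SARSA would differ only in the target: it bootstraps from the action actually selected in `s_next` instead of the maximizing one.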

In contrast, policy search methods directly search for or learn a parameterized policy

$\pi_\theta(a|s)$, without using an intermediary value function. Typically, the parameter $\theta$ is modified

using search or optimization techniques to maximize some performance measure $J(\theta)$.

For example, in the episodic case, $J(\theta)$ could correspond to the expected value of the policy

parameterized by $\theta$ from the starting state $s_0$: $v_{\pi_\theta}(s_0)$.
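
A very small instance of policy search is hill climbing on a single parameter. In the sketch below, the policy chooses "right" with probability $\sigma(\theta)$ in every state of a hypothetical chain 0 - 1 - 2 with reward $-1$ per step, and $J(\theta)$ is the exact start-state value, which for this particular toy task works out to $-(1 + p)/p^2$ with $p = \sigma(\theta)$. The task, the closed form, and the function names are assumptions for illustration; practical methods usually estimate $J(\theta)$ or its gradient from sampled episodes.

```python
import math


def prob_right(theta):
    """Probability that the parameterized policy moves right (logistic in theta)."""
    return 1.0 / (1.0 + math.exp(-theta))


def J(theta):
    """Exact start-state value v(s_0) on the toy chain, valid only for this task."""
    pr = prob_right(theta)
    return -(1.0 + pr) / pr ** 2


def hill_climb(theta=0.0, step=0.5, iterations=20):
    """Keep whichever of theta - step, theta, theta + step scores best under J."""
    for _ in range(iterations):
        theta = max((theta - step, theta, theta + step), key=J)
    return theta


theta_star = hill_climb()
print(theta_star, J(theta_star))
```

No value function is learned here: the search only compares performance scores of candidate parameters.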

A third class of methods, actor-critic methods, maintain a parameterized representation

of both the current policy and value function, and compute the optimal policy updates with

respect to the estimated values. An example of actor-critic methods is Deterministic Policy

Gradient (Silver et al., 2014).

2.2 Transfer Learning

In the standard reinforcement learning setting, an agent usually starts with a random policy,

and directly attempts to learn an optimal policy for the target task. When the target task is

difficult, for example due to adversaries, poor state representation, or sparse reward signals,

learning can be very slow.

Transfer learning is one class of methods and area of research that seeks to speed up

training of RL agents. The idea behind transfer learning is that instead of learning on the

target task tabula rasa, the agent can first train on one or more source task MDPs, and

transfer the knowledge acquired to aid in solving the target. This knowledge can take the

form of samples (Lazaric et al., 2008; Lazaric and Restelli, 2011), options (Soni and Singh,

2006), policies (Fernández et al., 2010), models (Fachantidis et al., 2013), or value functions

(Taylor and Stone, 2005). As an example, in value function transfer (Taylor et al., 2007),

the parameters of an action-value function $q_{source}(s, a)$ learned in a source task are used to

initialize the action-value function in the target task $q_{target}(s, a)$. This biases exploration

and action selection in the target task based on experience acquired in the source task.
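
The idea of value function transfer can be sketched for the tabular case as follows. This is a simplified illustration, not the method of Taylor et al. (2007), which operates on the parameters of function approximators. The helper `transfer_q`, the mapping functions, and the source values are hypothetical.

```python
def transfer_q(q_source, target_states, target_actions, state_map, action_map, default=0.0):
    """Initialize a tabular target q-function from a source q-function.

    state_map and action_map send target states and actions to their
    source-task counterparts; unmapped pairs fall back to `default`.
    """
    return {
        (s, a): q_source.get((state_map(s), action_map(a)), default)
        for s in target_states
        for a in target_actions
    }


# Hypothetical source values learned on a short chain with goal state 2.
q_source = {(0, "left"): -3.0, (0, "right"): -2.0, (1, "left"): -3.0, (1, "right"): -1.0}

# Target task: a longer chain with goal state 4; states are aligned by distance to goal.
q_target = transfer_q(
    q_source,
    target_states=range(4),
    target_actions=("left", "right"),
    state_map=lambda s: max(0, s - 2),
    action_map=lambda a: a,
)
print(q_target)
```

Even before any learning in the target task, a greedy agent starting from `q_target` already prefers "right" in every state, which is the exploration bias described above.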

Some of these methods assume that the source and target MDPs either share state and

action spaces, or that a task mapping (Taylor et al., 2007) is available to map states and

actions in the target task to known states and actions in the source. Such mappings can
