Analyzing Multi-Head Self-Attention: Specialized Heads Do the Heavy Lifting, the Rest Can Be Pruned

Elena Voita¹,²
David Talbot¹
Fedor Moiseev¹,⁵
Rico Sennrich³,⁴
Ivan Titov³,²

¹Yandex, Russia
²University of Amsterdam, Netherlands
³University of Edinburgh, Scotland
⁴University of Zurich, Switzerland
⁵Moscow Institute of Physics and Technology, Russia

{lena-voita, talbot, femoiseev}@yandex-team.ru
rico.sennrich@ed.ac.uk ititov@inf.ed.ac.uk

Abstract

Multi-head self-attention is a key component of the Transformer, a state-of-the-art architecture for neural machine translation. In this work we evaluate the contribution made by individual attention heads in the encoder to the overall performance of the model and analyze the roles they play. We find that the most important and confident heads play consistent and often linguistically interpretable roles. When pruning heads using a method based on stochastic gates and a differentiable relaxation of the $L_0$ penalty, we observe that specialized heads are the last to be pruned. Our novel pruning method removes the vast majority of heads without seriously affecting performance. For example, on the English-Russian WMT dataset, pruning 38 out of 48 encoder heads results in a drop of only 0.15 BLEU.¹
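The abstract names stochastic gates with a differentiable relaxation of the $L_0$ penalty. The following is a minimal sketch of such a gate, assuming the Hard Concrete distribution of Louizos et al. (2018) as the relaxation and one learnable scalar `log_alpha` per head. The constants `BETA`, `GAMMA` and `ZETA` are the defaults suggested by Louizos et al. and are an assumption here, not values taken from this paper; all function names are hypothetical.

```python
import math
import random

# Temperature and stretch interval (assumed defaults from Louizos et al., 2018).
BETA = 2.0 / 3.0
GAMMA = -0.1
ZETA = 1.1


def sample_gate(log_alpha, rng=random):
    """Draw one stochastic gate value in [0, 1] from a Hard Concrete distribution."""
    u = rng.uniform(1e-6, 1.0 - 1e-6)  # keep u away from 0 and 1 to avoid log(0)
    logit = (math.log(u) - math.log(1.0 - u) + log_alpha) / BETA
    s = 1.0 / (1.0 + math.exp(-logit))
    s_stretched = s * (ZETA - GAMMA) + GAMMA  # stretch (0, 1) to (GAMMA, ZETA)
    return min(1.0, max(0.0, s_stretched))    # clip so exact 0 and 1 can occur


def prob_nonzero(log_alpha):
    """P(gate != 0): differentiable surrogate for one head's L0 contribution."""
    return 1.0 / (1.0 + math.exp(-(log_alpha - BETA * math.log(-GAMMA / ZETA))))


def expected_l0(log_alphas):
    """Expected number of open gates, i.e. the relaxed L0 penalty over all heads."""
    return sum(prob_nonzero(a) for a in log_alphas)


def deterministic_gate(log_alpha):
    """Noise-free gate for evaluation: stretched, clipped sigmoid of log_alpha."""
    s = 1.0 / (1.0 + math.exp(-log_alpha))
    return min(1.0, max(0.0, s * (ZETA - GAMMA) + GAMMA))
```

Under these assumptions, fine-tuning would add `lam * expected_l0(log_alphas)` to the translation loss, where `lam` is a hypothetical coefficient trading quality against the number of open heads; heads whose `deterministic_gate` is 0 could then be removed. Hard clipping is what lets a gate reach exactly 0, which a plain sigmoid gate never does.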

1 Introduction

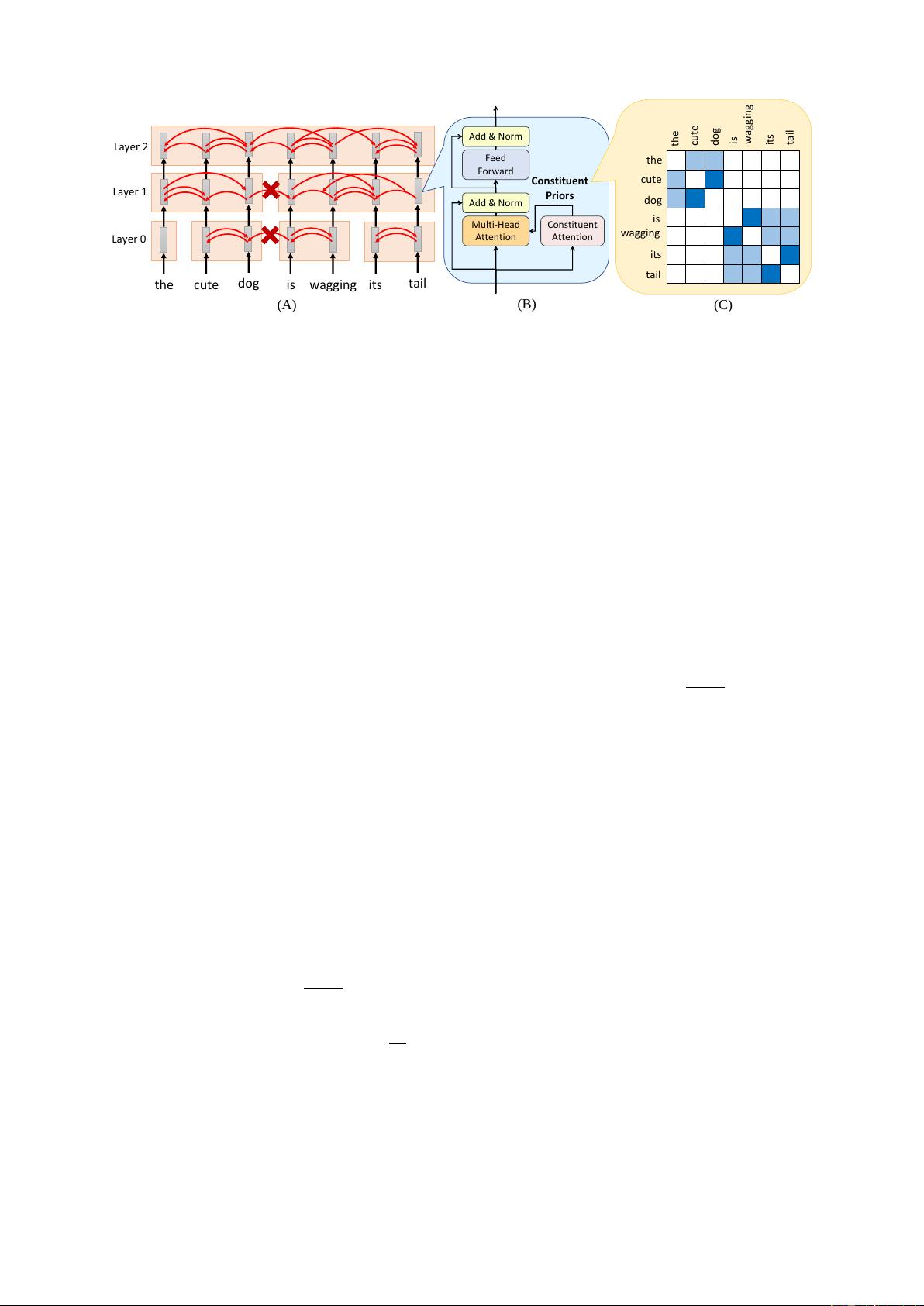

The Transformer (Vaswani et al., 2017) has become the dominant modeling paradigm in neural machine translation. It follows the encoder-decoder framework using stacked multi-head self-attention and fully connected layers. Multi-head attention was shown to make more efficient use of the model's capacity: performance of the model with 8 heads is almost 1 BLEU point higher than that of a model of the same size with single-head attention (Vaswani et al., 2017). The Transformer achieved state-of-the-art results in recent shared translation tasks (Bojar et al., 2018; Niehues et al., 2018). Despite the model's widespread adoption and recent attempts to investigate the kinds of information learned by the model's encoder (Raganato and Tiedemann, 2018), the analysis of multi-head attention and its importance for translation remains challenging. Previous analysis of multi-head attention considered the average of attention weights over all heads at a given position or focused only on the maximum attention weights (Voita et al., 2018; Tang et al., 2018), but neither method explicitly takes into account the varying importance of different heads. Moreover, both approaches obscure the roles played by individual heads, which, as we show, influence the generated translations to differing extents. We attempt to answer the following questions:

• To what extent does translation quality depend on individual encoder heads?
• Do individual encoder heads play consistent and interpretable roles? If so, which are the most important ones for translation quality?
• Which types of model attention (encoder self-attention, decoder self-attention or decoder-encoder attention) are most sensitive to the number of attention heads, and on which layers?
• Can we significantly reduce the number of attention heads while preserving translation quality?

¹We release code at https://github.com/lena-voita/the-story-of-heads.
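Earlier analyses summarized attention by averaging weights over heads or by taking maximum weights. The toy sketch below, with hypothetical helper names and made-up attention matrices, shows how averaging can hide what individual heads do: a previous-token head and a next-token head average to evenly split weights. It also includes a gated concatenation of head outputs, assuming (as a simplification) that removing a head amounts to scaling its output by a gate of 0 before the output projection.

```python
from typing import List

# Attention weights for one head: rows are query positions, columns are key positions.
Matrix = List[List[float]]


def average_over_heads(per_head: List[Matrix]) -> Matrix:
    """Element-wise mean of the attention matrices of all heads."""
    n_heads = len(per_head)
    rows, cols = len(per_head[0]), len(per_head[0][0])
    return [[sum(h[i][j] for h in per_head) / n_heads for j in range(cols)]
            for i in range(rows)]


def argmax_targets(attn: Matrix) -> List[int]:
    """Key position with the largest weight for each query (first one on ties)."""
    return [max(range(len(row)), key=row.__getitem__) for row in attn]


def gated_concat(head_outputs: List[List[float]], gates: List[float]) -> List[float]:
    """Concatenate per-head output vectors, each scaled by a scalar gate.

    A gate of 0 removes that head's contribution before the output projection,
    which is one simple way to simulate pruning the head (hypothetical helper).
    """
    out: List[float] = []
    for vec, gate in zip(head_outputs, gates):
        out.extend(gate * x for x in vec)
    return out


# Toy 3-token example: one previous-token head and one next-token head.
# Position 0 has no predecessor, so the previous-token head attends to itself there;
# likewise the next-token head at the last position.
prev_token = [[1.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
next_token = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.0, 0.0, 1.0]]

avg = average_over_heads([prev_token, next_token])
print(avg)                  # [[0.5, 0.5, 0.0], [0.5, 0.0, 0.5], [0.0, 0.5, 0.5]]
print(argmax_targets(avg))  # [0, 0, 1]: ties go to the earlier key, mimicking only the previous-token head
```

Neither summary reveals that two distinct, sharply focused heads are present, which is why the analysis here looks at heads individually.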

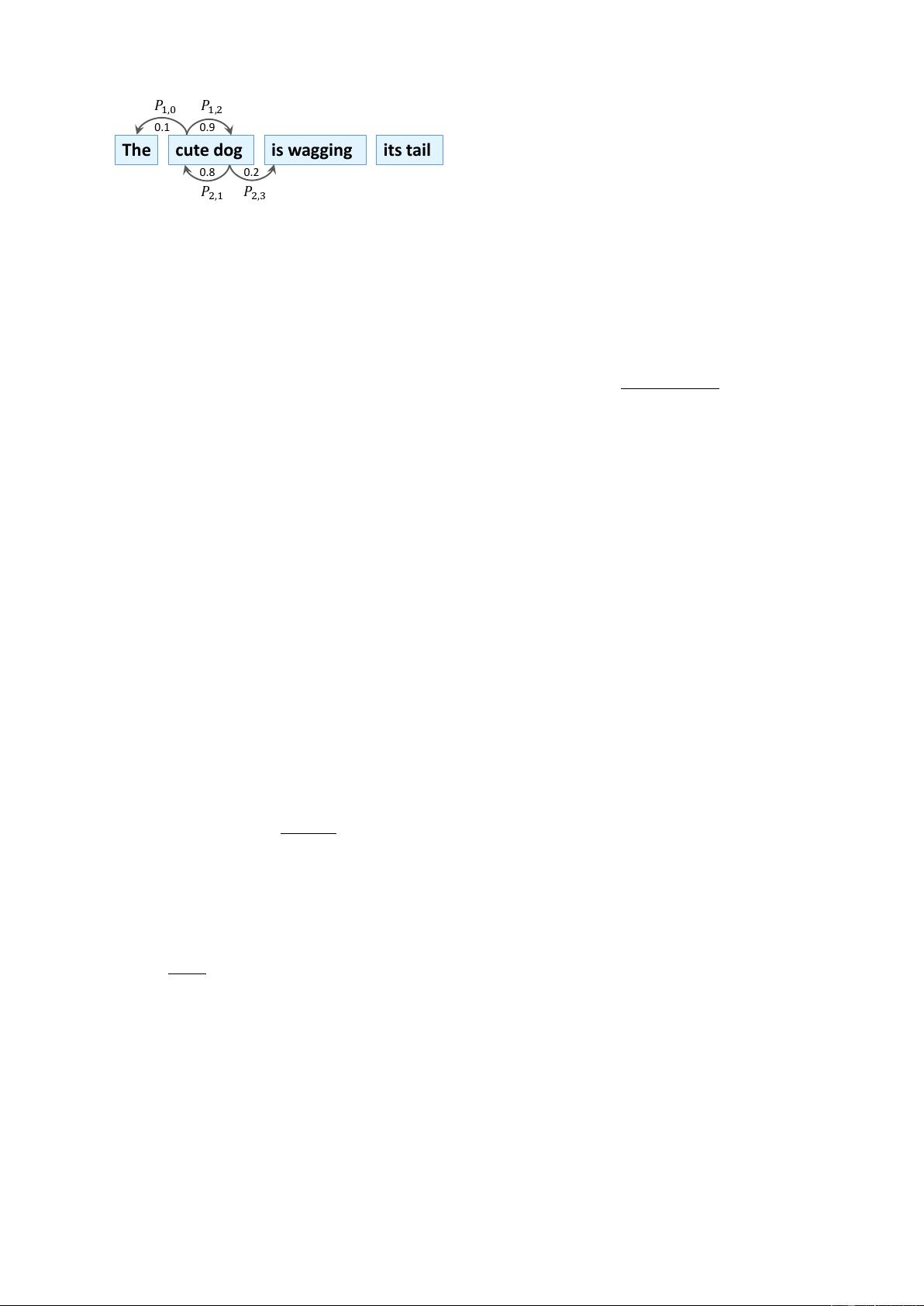

We start by identifying the most important heads in each encoder layer using layer-wise relevance propagation (Ding et al., 2017). For heads judged to be important, we then attempt to characterize the roles they perform. We observe the following types of roles: positional (heads attending to an adjacent token), syntactic (heads attending to tokens in a specific syntactic dependency relation) and attention to rare words (heads pointing to the least frequent tokens in the sentence).
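The positional and rare-word roles above can be operationalized roughly as follows. This sketch, with hypothetical function names, computes a head's confidence (read here as the mean of its maximum attention weight, one natural interpretation of "confident" heads), checks how often its top weight falls on an adjacent token, and measures how often it points to the sentence's rarest token. The 0.9 threshold in `is_positional` and the frequency table `freq` are assumptions for illustration; syntactic heads are omitted because they require dependency parses.

```python
from collections import Counter
from typing import List, Sequence

# Attention weights for one head on one sentence: rows = queries, columns = keys.
Matrix = List[List[float]]


def top_key(row: List[float]) -> int:
    """Index of the key receiving the largest weight (first one on ties)."""
    return max(range(len(row)), key=row.__getitem__)


def confidence(attn: Matrix) -> float:
    """Mean over query positions of the head's maximum attention weight."""
    return sum(max(row) for row in attn) / len(attn)


def relative_position_share(attn: Matrix, offset: int) -> float:
    """Fraction of queries i whose top-weighted key is at position i + offset."""
    hits, total = 0, 0
    for i, row in enumerate(attn):
        if not 0 <= i + offset < len(row):
            continue  # no adjacent token at the sentence edge; skip this query
        total += 1
        hits += int(top_key(row) == i + offset)
    return hits / total if total else 0.0


def is_positional(attn: Matrix, threshold: float = 0.9) -> bool:
    """Hypothetical rule: positional if offset -1 or +1 wins at least `threshold` of the time."""
    return max(relative_position_share(attn, -1),
               relative_position_share(attn, +1)) >= threshold


def rare_token_share(attn: Matrix, tokens: Sequence[str], freq: Counter) -> float:
    """Fraction of queries whose top-weighted key is the sentence's least frequent token."""
    rarest = min(range(len(tokens)), key=lambda j: freq[tokens[j]])
    return sum(top_key(row) == rarest for row in attn) / len(attn)
```

In practice these per-sentence statistics would be averaged over a held-out set before labeling a head, since a single sentence says little about a head's consistent behavior.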

To understand whether the remaining heads perform vital but less easily defined roles, or are simply redundant to the performance of the model as

arXiv:1905.09418v2 [cs.CL] 7 Jun 2019