没有合适的资源?快使用搜索试试~ 我知道了~

资源推荐

资源详情

资源评论

Machine Learning

1:

81-106,

1986

©

1986

Kluwer

Academic Publishers, Boston

-

Manufactured

in The

Netherlands

Induction

of

Decision Trees

J.R, QUINLAN (munnarilnswitgould.oz!quinlan@seismo,css.gov)

Centre

for

Advanced Computing Sciences,

New

South

Wales

Institute

of

Technology,

Sydney

2007,

Australia

(Received

August

1,

1985)

Key

words:

classification,

induction, decision trees,

information

theory,

knowledge

acquisition,

expert

systems

Abstract.

The

technology

for

building

knowledge-based

systems

by

inductive

inference

from

examples

has

been

demonstrated

successfully

in

several practical applications. This paper summarizes

an

approach

to

synthesizing

decision trees that

has

been used

in a

variety

of

systems,

and it

describes

one

such

system,

ID3,

in

detail. Results from recent studies show

ways

in

which

the

methodology

can be

modified

to

deal

with

information that

is

noisy

and/or

incomplete.

A

reported shortcoming

of the

basic algorithm

is

discussed

and two

means

of

overcoming

it are

compared.

The

paper concludes

with

illustrations

of

current

research directions.

1.

Introduction

Since

artificial

intelligence

first

achieved recognition

as a

discipline

in the mid

1950's,

machine

learning

has

been

a

central research

area.

Two

reasons

can be

given

for

this

prominence.

The

ability

to

learn

is a

hallmark

of

intelligent behavior,

so any

attempt

to

understand intelligence

as a

phenomenon must include

an

understanding

of

learn-

ing.

More

concretely, learning provides

a

potential

methodology

for

building high-

performance systems.

Research

on

learning

is

made

up of

diverse subfields.

At one

extreme there

are

adaptive systems that monitor their

own

performance

and

attempt

to

improve

it by

adjusting

internal parameters. This approach, characteristic

of a

large proportion

of

the

early learning work, produced self-improving programs

for

playing games

(Samuel, 1967), balancing poles (Michie, 1982), solving problems (Quinlan, 1969)

and

many other domains.

A

quite

different

approach sees learning

as the

acquisition

of

structured knowledge

in the

form

of

concepts (Hunt, 1962; Winston, 1975),

discrimination nets (Feigenbaum

and

Simon,

1963),

or

production rules (Buchanan,

1978).

The

practical

importance

of

machine learning

of

this

latter

kind

has

been underlin-

82

J.R.

QUINLAN

ed by the

advent

of

knowledge-based expert systems.

As

their name suggests, these

systems

are

powered

by

knowledge that

is

represented

explicitly

rather than being

im-

plicit

in

algorithms.

The

knowledge needed

to

drive

the

pioneering expert systems

was

codified

through

protracted

interaction between

a

domain specialist

and a

knowledge

engineer. While

the

typical

rate

of

knowledge elucidation

by

this method

is a few

rules

per man

day,

an

expert system

for a

complex task

may

require hundreds

or

even

thousands

of

such rules.

It is

obvious that

the

interview approach

to

knowledge

ac-

quisition cannot keep

pace

with

the

burgeoning demand

for

expert systems; Feigen-

baum

(1981) terms this

the

'bottleneck' problem. This perception

has

stimulated

the

investigation

of

machine learning methods

as a

means

of

explicating knowledge

(Michie,

1983).

This paper focusses

on one

microcosm

of

machine learning

and on a

family

of

learning

systems that have been used

to

build knowledge-based systems

of a

simple

kind.

Section

2

outlines

the

features

of

this

family

and

introduces

its

members.

All

these systems

address

the

same task

of

inducing decision trees from examples. After

a

more complete specification

of

this task,

one

system (ID3)

is

described

in

detail

in

Section

4.

Sections

5 and 6

present extensions

to ID3

that enable

it to

cope

with

noisy

and

incomplete information.

A

review

of a

central facet

of the

induction algorithm

reveals

possible

improvements

that

are set out in

Section

7. The

paper

concludes with

two

novel initiatives that give some idea

of the

directions

in

which

the

family

may

grow.

2. The

TDIDT family

of

learning systems

Carbonell, Michalski

and

Mitchell (1983) identify

three

principal dimensions along

which

machine learning systems

can be

classified:

• the

underlying learning strategies

used;

• the

representation

of

knowledge acquired

by the

system;

and

• the

application domain

of the

system.

This

paper

is

concerned

with

a

family

of

learning systems

that

have

strong

common

bonds

in

these dimensions.

Taking these features

in

reverse order,

the

application domain

of

these systems

is

not

limited

to any

particular

area

of

intellectual activity such

as

Chemistry

or

Chess;

they

can be

applied

to any

such

area.

While they

are

thus

general-purpose

systems,

the

applications that they address

all

involve

classification.

The

product

of

learning

is

a

piece

of

procedural knowledge that

can

assign

a

hitherto-unseen object

to one

of

a

specified number

of

disjoint

classes.

Examples

of

classification tasks are:

INDUCTION

OF

DECISION TREES

83

1. the

diagnosis

of a

medical condition

from

symptoms,

in

which

the

classes could

be

either

the

various disease states

or the

possible therapies;

2.

determining

the

game-theoretic value

of a

chess position,

with

the

classes

won for

white, lost

for

white,

and

drawn;

and

3.

deciding

from

atmospheric observations whether

a

severe thunderstorm

is

unlike-

ly,

possible

or

probable.

It

might

appear

that classification tasks

are

only

a

minuscule subset

of

procedural

tasks,

but

even activities such

as

robot

planning

can be

recast

as

classification prob-

lems (Dechter

and

Michie, 1985).

The

members

of

this

family

are

sharply characterized

by

their representation

of

ac-

quired

knowledge

as

decision trees. This

is a

relatively simple knowledge formalism

that

lacks

the

expressive power

of

semantic networks

or

other first-order representa-

tions.

As a

consequence

of

this

simplicity,

the

learning methodologies used

in the

TDIDT

family

are

considerably less complex than those employed

in

systems that

can

express

the

results

of

their learning

in a

more

powerful

language. Nevertheless,

it is

still

possible

to

generate knowledge

in the

form

of

decision trees that

is

capable

of

solving

difficult

problems

of

practical significance.

The

underlying strategy

is

non-incremental learning from examples.

The

systems

are

presented with

a set of

cases

relevant

to a

classification task

and

develop

a

deci-

sion tree from

the top

down, guided

by

frequency information

in the

examples

but

not by the

particular order

in

which

the

examples

are

given. This contrasts

with

in-

cremental

methods such

as

that employed

in

MARVIN (Sammut, 1985),

in

which

a

dialog

is

carried

on

with

an

instructor

to

'debug'

partially correct concepts,

and

that

used

by

Winston (1975),

in

which

examples

are

analyzed

one at a

time, each produc-

ing

a

small change

in the

developing concept;

in

both

of

these systems,

the

order

in

which

examples

are

presented

is

most important.

The

systems described here search

for

patterns

in the

given examples

and so

must

be

able

to

examine

and

re-examine

all

of

them

at

many

stages

during learning. Other well-known programs

that

share

this data-driven

approach

include BACON (Langley, Bradshaw

and

Simon, 1983)

and

INDUCE (Michalski,

1980).

In

summary, then,

the

systems described here develop decision trees

for

classifica-

tion tasks. These trees

are

constructed beginning with

the

root

of the

tree

and

pro-

ceeding down

to its

leaves.

The

family's palindromic name emphasizes that

its

members

carry

out the

Top-Down Induction

of

Decision

Trees.

The

example objects

from

which

a

classification rule

is

developed

are

known only

through

their values

of a set of

properties

or

attributes,

and the

decision trees

in

turn

are

expressed

in

terms

of

these same attributes.

The

examples themselves

can be

assembled

in two

ways. They might come

from

an

existing

database

that forms

a

history

of

observations, such

as

patient

records

in

some

area

of

medicine that have

accumulated

at a

diagnosis

center.

Objects

of

this kind give

a

reliable statistical pic-

ture but, since they

are not

organized

in any

way, they

may be

redundant

or

omit

84

J.R.

QUINLAN

Figure

1, The

TDIDT

family

tree.

uncommon

cases that have

not

been encountered during

the

period

of

record-

keeping.

On the

other hand,

the

objects might

be a

carefully

culled

set of

tutorial

ex-

amples

prepared

by a

domain expert, each

with

some particular relevance

to a

com-

plete

and

correct classification rule.

The

expert might take pains

to

avoid redundancy

and to

include examples

of

rare cases. While

the

family

of

systems

will

deal

with

col-

lections

of

either kind

in a

satisfactory way,

it

should

be

mentioned that earlier

TDIDT systems were designed with

the

'historical

record'

approach

in

mind,

but all

systems described here

are now

often used with tutorial sets (Michie, 1985).

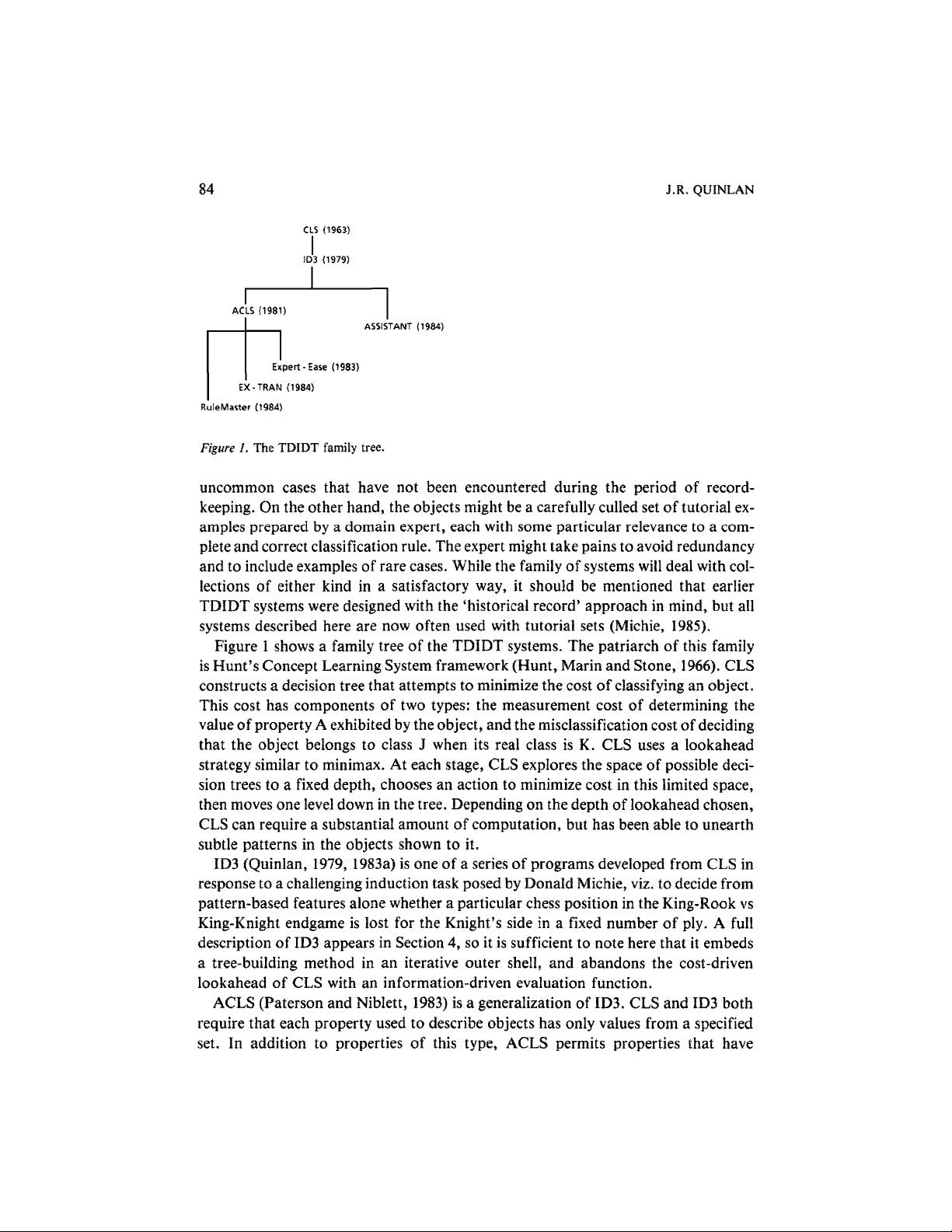

Figure

1

shows

a

family

tree

of the

TDIDT

systems.

The

patriarch

of

this

family

is

Hunt's

Concept

Learning System framework (Hunt, Marin

and

Stone,

1966).

CLS

constructs

a

decision

tree

that attempts

to

minimize

the

cost

of

classifying

an

object.

This cost

has

components

of two

types:

the

measurement cost

of

determining

the

value

of

property

A

exhibited

by the

object,

and the

misclassification cost

of

deciding

that

the

object belongs

to

class

J

when

its

real class

is K. CLS

uses

a

lookahead

strategy

similar

to

minimax.

At

each stage,

CLS

explores

the

space

of

possible deci-

sion

trees

to a

fixed

depth, chooses

an

action

to

minimize cost

in

this limited space,

then

moves

one

level

down

in the

tree. Depending

on the

depth

of

lookahead chosen,

CLS can

require

a

substantial amount

of

computation,

but has

been able

to

unearth

subtle

patterns

in the

objects shown

to it.

ID3

(Quinlan, 1979, 1983a)

is one of a

series

of

programs

developed from

CLS in

response

to a

challenging induction task posed

by

Donald Michie, viz.

to

decide

from

pattern-based features alone whether

a

particular chess position

in the

King-Rook

vs

King-Knight

endgame

is

lost

for the

Knight's side

in a

fixed

number

of

ply.

A

full

description

of ID3

appears

in

Section

4, so it is

sufficient

to

note here that

it

embeds

a

tree-building method

in an

iterative outer shell,

and

abandons

the

cost-driven

lookahead

of CLS

with

an

information-driven

evaluation

function.

ACLS (Paterson

and

Niblett, 1983)

is a

generalization

of

ID3.

CLS and ID3

both

require that each property used

to

describe objects

has

only values from

a

specified

set.

In

addition

to

properties

of

this type, ACLS permits

properties

that have

INDUCTION

OF

DECISION TREES

85

unrestricted

integer values.

The

capacity

to

deal

with

attributes

of

this

kind

has

allow-

ed

ACLS

to be

applied

to

difficult

tasks such

as

image recognition (Shepherd, 1983).

ASSISTANT (Kononenko, Bratko

and

Roskar, 1984) also acknowledges

ID3 as

its

direct ancestor.

It

differs

from

ID3 in

many ways, some

of

which

are

discussed

in

detail

in

later sections. ASSISTANT

further

generalizes

on the

integer-valued

at-

tributes

of

ACLS

by

permitting attributes

with

continuous (real) values. Rather than

insisting

that

the

classes

be

disjoint, ASSISTANT allows them

to

form

a

hierarchy,

so

that

one

class

may be a

finer

division

of

another. ASSISTANT

does

not

form

a

decision tree iteratively

in the

manner

of

ID3,

but

does

include algorithms

for

choos-

ing

a

'good'

training

set

from

the

objects available.

ASSISTANT

has

been used

in

several medical domains

with

promising results.

The

bottom-most three systems

in the

figure

are

commercial derivatives

of

ACLS.

While they

do not

significantly advance

the

underlying theory, they incorporate

many user-friendly innovations

and

utilities

that

expedite

the

task

of

generating

and

using decision

trees.

They

all

have industrial

successes

to

their

credit.

Westinghouse

Electric's

Water

Reactor

Division,

for

example, points

to a

fuel-enrichment applica-

tion

in

which

the

company

was

able

to

boost

revenue

by

'more

than

ten

million

dollars

per

annum' through

the use of one of

them.

1

3. The

induction task

We now

give

a

more precise statement

of the

induction task.

The

basis

is a

universe

of

objects that

are

described

in

terms

of a

collection

of

attributes.

Each attribute

measures

some important feature

of an

object

and

will

be

limited here

to

taking

a

(usually

small)

set of

discrete, mutually

exclusive

values.

For

example,

if the

objects

were

Saturday mornings

and the

classification task involved

the

weather, attributes

might

be

outlook, with values

{sunny,

overcast, rain}

temperature,

with

values {cool, mild, hot}

humidity,

with values {high, normal}

windy,

with values {true, false

}

Taken

together,

the

attributes provide

a

zeroth-order

language

for

characterizing

ob-

jects

in the

universe.

A

particular Saturday morning might

be

described

as

outlook:

overcast

temperature:

cool

humidity:

normal

windy:

false

1

Letter cited

in the

journal

Expert

Systems (January, 1985),

p. 20.

剩余25页未读,继续阅读

资源评论

rexzhao2362011-10-09很经典的东西,全英文,值得阅读

rexzhao2362011-10-09很经典的东西,全英文,值得阅读

suzhuo123456

- 粉丝: 1

- 资源: 3

上传资源 快速赚钱

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜最新资源

资源上传下载、课程学习等过程中有任何疑问或建议,欢迎提出宝贵意见哦~我们会及时处理!

点击此处反馈

安全验证

文档复制为VIP权益,开通VIP直接复制

信息提交成功

信息提交成功