Cloud Computing and Grid Computing 360-Degree Compared

Ian Foster (1,2,3), Yong Zhao (4), Ioan Raicu (1), Shiyong Lu (5)

foster@mcs.anl.gov, yozha@microsoft.com, iraicu@cs.uchicago.edu, shiyong@wayne.edu

1 Department of Computer Science, University of Chicago, Chicago, IL, USA
2 Computation Institute, University of Chicago, Chicago, IL, USA
3 Math & Computer Science Division, Argonne National Laboratory, Argonne, IL, USA
4 Microsoft Corporation, Redmond, WA, USA
5 Department of Computer Science, Wayne State University, Detroit, MI, USA

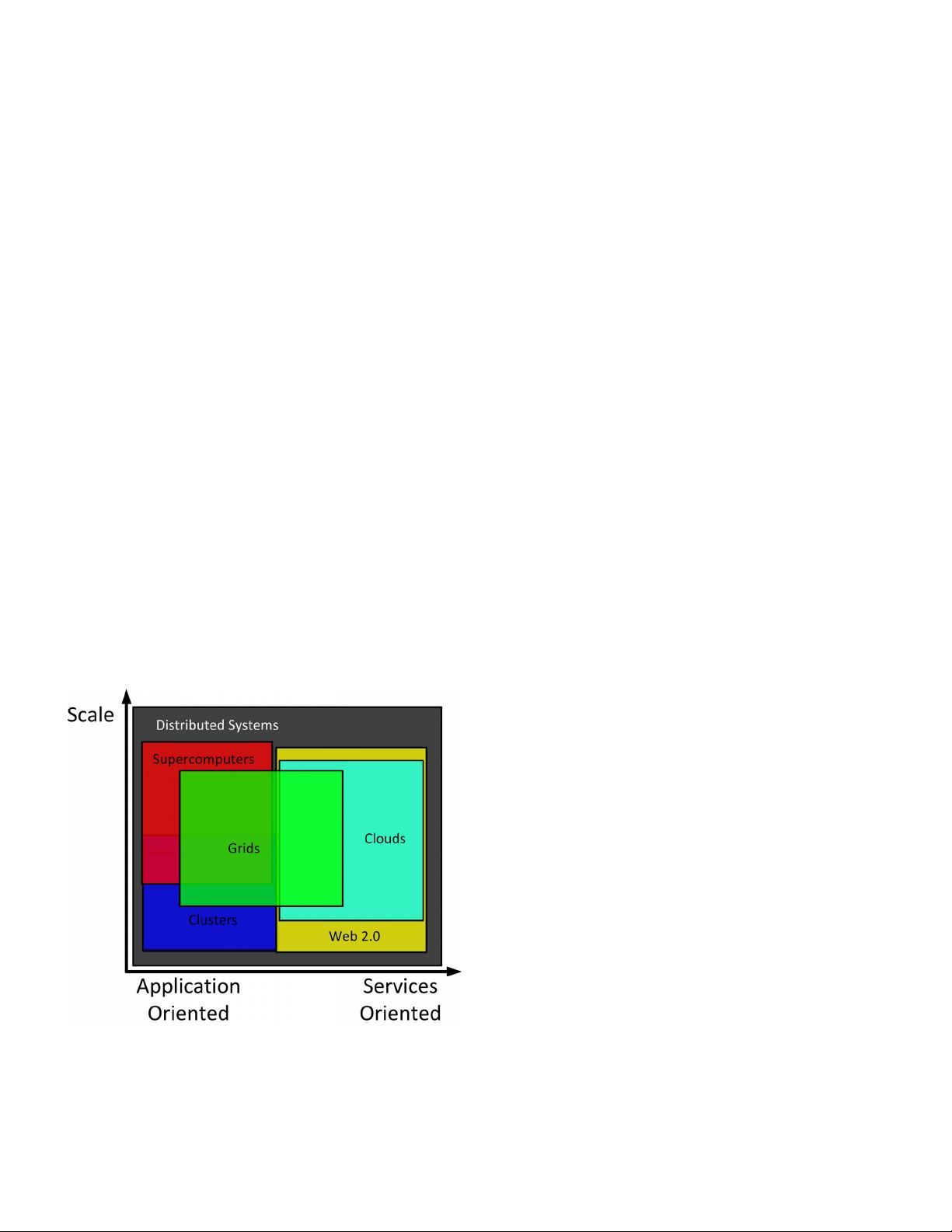

Abstract– Cloud Computing has become another buzzword after Web 2.0. However, there are dozens of different definitions for Cloud Computing, and there seems to be no consensus on what a Cloud is. On the other hand, Cloud Computing is not a completely new concept. It is intricately connected to Grid Computing, a relatively new paradigm that has nonetheless been established for thirteen years, and to other relevant technologies such as utility computing, cluster computing, and distributed systems in general. This paper strives to compare and contrast Cloud Computing with Grid Computing from various angles and to give insights into the essential characteristics of both.

1 100-Mile Overview

Cloud Computing is hinting at a future in which we won’t compute on local computers, but on centralized facilities operated by third-party compute and storage utilities. We certainly won’t miss the shrink-wrapped software to unwrap and install. Needless to say, this is not a new idea. In fact, back in 1961, computing pioneer John McCarthy predicted that “computation may someday be organized as a public utility” and went on to speculate how this might occur.

In the mid-1990s, the term Grid was coined to describe technologies that would allow consumers to obtain computing power on demand. Ian Foster and others posited that by standardizing the protocols used to request computing power, we could spur the creation of a Computing Grid, analogous in form and utility to the electric power grid. Researchers subsequently developed these ideas in many exciting ways, producing, for example, large-scale federated systems (TeraGrid, Open Science Grid, caBIG, EGEE, Earth System Grid) that provide not just computing power, but also data and software, on demand. Standards organizations (e.g., OGF, OASIS) defined relevant standards. More prosaically, the term was also co-opted by industry as a marketing term for clusters. But no viable commercial Grid Computing providers emerged, at least not until recently.

So is “Cloud Computing” just a new name for Grid? In information technology, where technology scales by an order of magnitude every five years and reinvents itself in the process, there is no straightforward answer to such questions.

Yes: the vision is the same, namely to reduce the cost of computing, increase reliability, and increase flexibility by transforming computers from something that we buy and operate ourselves into something that is operated by a third party.

But no: things are different now than they were 10 years ago. We have a new need to analyze massive data, which motivates a greatly increased demand for computing. Having realized the benefits of moving from mainframes to commodity clusters, we find that those clusters are quite expensive to operate. We have low-cost virtualization. And, above all, companies such as Amazon, Google, and Microsoft are spending multiple billions of dollars to create real commercial large-scale systems containing hundreds of thousands of computers. The prospect of needing only a credit card to get on-demand access to 100,000+ computers in tens of data centers distributed throughout the world, resources that can be applied to problems with massive, potentially distributed data, is exciting! So we are operating at a different scale, and operating at these new, more massive scales can demand fundamentally different approaches to tackling problems. It also enables, and indeed is often only applicable to, entirely new problems.

Nevertheless, yes: the problems are mostly the same in Clouds and Grids. There is a common need to be able to manage large facilities; to define methods by which consumers discover, request, and use resources provided by the central facilities; and to implement the often highly parallel computations that execute on those resources. Details differ, but the two communities are struggling with many of the same issues.

1.1 Defining Cloud Computing

There is little consensus on how to define the Cloud [49]. We add yet another definition to the already saturated list of definitions for Cloud Computing:

A large-scale distributed computing paradigm that is driven by economies of scale, in which a pool of abstracted, virtualized, dynamically-scalable, managed computing power, storage, platforms, and services are delivered on demand to external customers over the Internet.

There are a few key points in this definition. First, Cloud Computing is a specialized distributed computing paradigm; it differs from traditional ones in that 1) it is massively scalable, 2) it can be encapsulated as an abstract entity that delivers different levels of services to customers outside the Cloud, 3) it is driven by economies of scale [44], and 4) its services can be dynamically configured (via virtualization or other approaches) and delivered on demand.

Governments, research institutes, and industry leaders are rushing to adopt Cloud Computing to solve the ever-increasing computing and storage problems arising in the Internet Age. There are three main factors contributing to the surge of interest in Cloud Computing: 1) the rapid decrease in hardware cost and increase in computing power and storage capacity, together with the advent of multi-core architectures and modern supercomputers consisting of hundreds of thousands of cores;

Authorized licensed use limited to: Universitat Wien. Downloaded on June 4, 2009 at 11:02 from IEEE Xplore. Restrictions apply.
