discrimination. Here, we discuss the two lines of work most

related to this paper: weakly-supervised object localization

and visualizing the internal representation of CNNs.

Weakly-supervised object localization: There have

been a number of recent works exploring weakly-

supervised object localization using CNNs [1, 16, 2, 15].

Bergamo et al. [1] propose a technique for self-taught object

localization involving masking out image regions to identify

the regions causing the maximal activations in order to

localize objects. Cinbis et al. [2] combine multiple-instance

learning with CNN features to localize objects. Oquab et

al. [15] propose a method for transferring mid-level image

representations and show that some object localization can

be achieved by evaluating the output of CNNs on multiple

overlapping patches. However, the authors do not actually

evaluate the localization ability. Moreover, while these

approaches yield promising results, they are not trained

end-to-end and require multiple forward passes of a network

to localize objects, making them difficult to scale to

real-world datasets. In contrast, our approach is trained

end-to-end and can localize objects in a single forward pass.

The most similar approach to ours is the work based on

global max pooling by Oquab et al. [16]. Instead of global

average pooling, they apply global max pooling to localize

a point on objects. However, their localization is limited to

a point lying within the boundary of the object rather than

determining the full extent of the object. We believe that while

the max and average functions are rather similar, the use

of average pooling encourages the network to identify the

complete extent of the object. The basic intuition is that,

compared to max pooling, the loss for average pooling

benefits when the network identifies all discriminative

regions of an object. This is explained in greater detail

and verified experimentally in Sec. 3.2. Furthermore,

unlike [16], we demonstrate that this localization ability is

generic and can be observed even for problems that the net-

work was not trained on.
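The intuition about max versus average pooling can be illustrated with a toy example. The sketch below is not the experiment of Sec. 3.2; it uses two made-up 4x4 activation maps (`partial` and `full` are hypothetical names and values) and only shows that a max-pooled value ignores how much of the object is activated, while an average-pooled value grows with the activated extent.

```python
import numpy as np

# Hypothetical 4x4 activation maps for a single unit (values are made up).
# "partial": the unit fires on only one discriminative part of the object.
partial = np.zeros((4, 4))
partial[1, 1] = 1.0

# "full": the unit fires on every discriminative part, with the same peak value.
full = np.zeros((4, 4))
full[1:3, 1:3] = 1.0


def global_max_pool(fmap):
    """Return the single largest activation in the map."""
    return float(fmap.max())


def global_avg_pool(fmap):
    """Return the spatial average of the map."""
    return float(fmap.mean())


for name, fmap in [("partial", partial), ("full", full)]:
    print(name, "max:", global_max_pool(fmap), "avg:", global_avg_pool(fmap))
```

In this toy setting the max-pooled value is identical for both maps, so a classifier on top of it gains nothing from covering the whole object, whereas the average-pooled value is four times larger for the map that covers all four cells.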

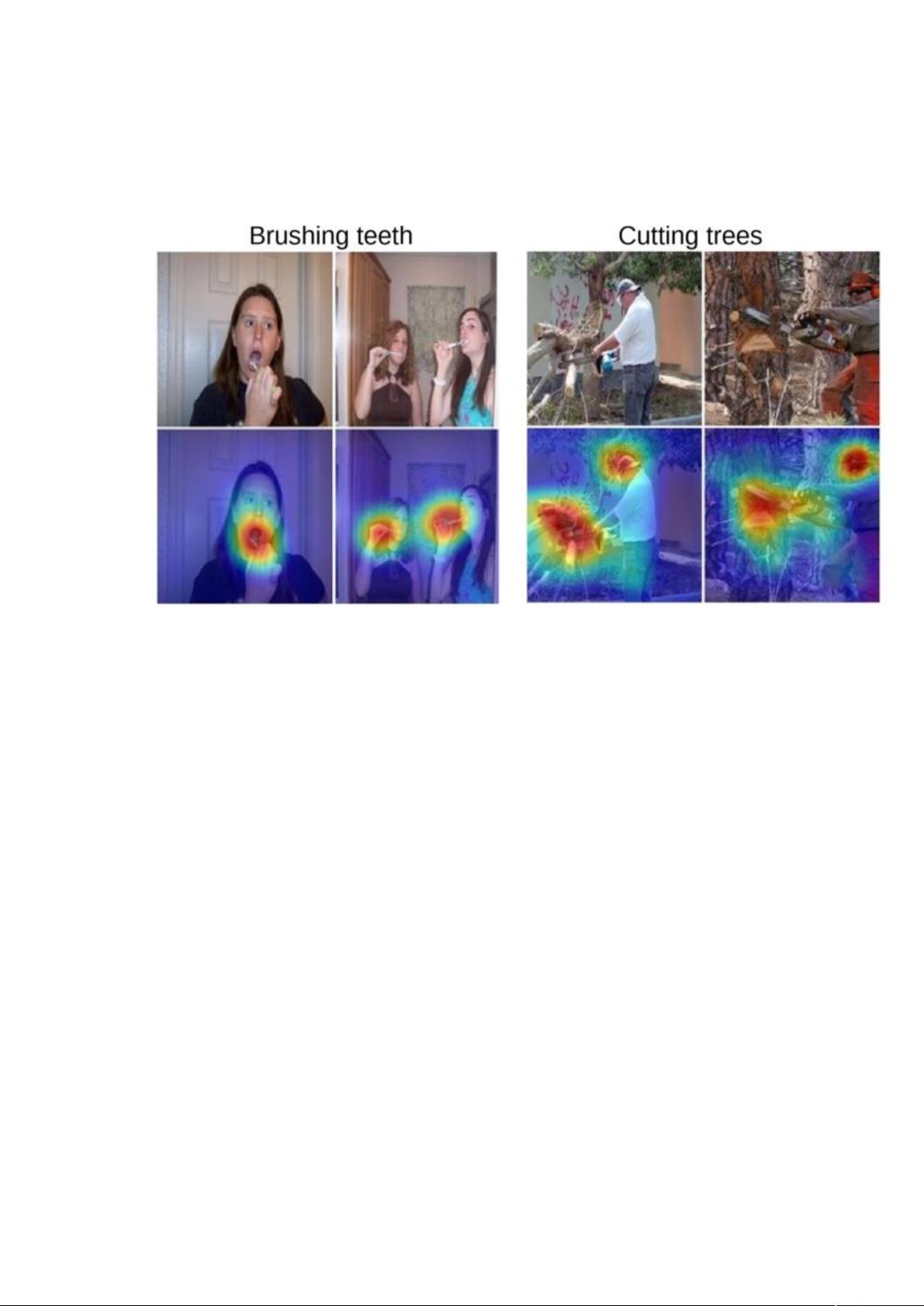

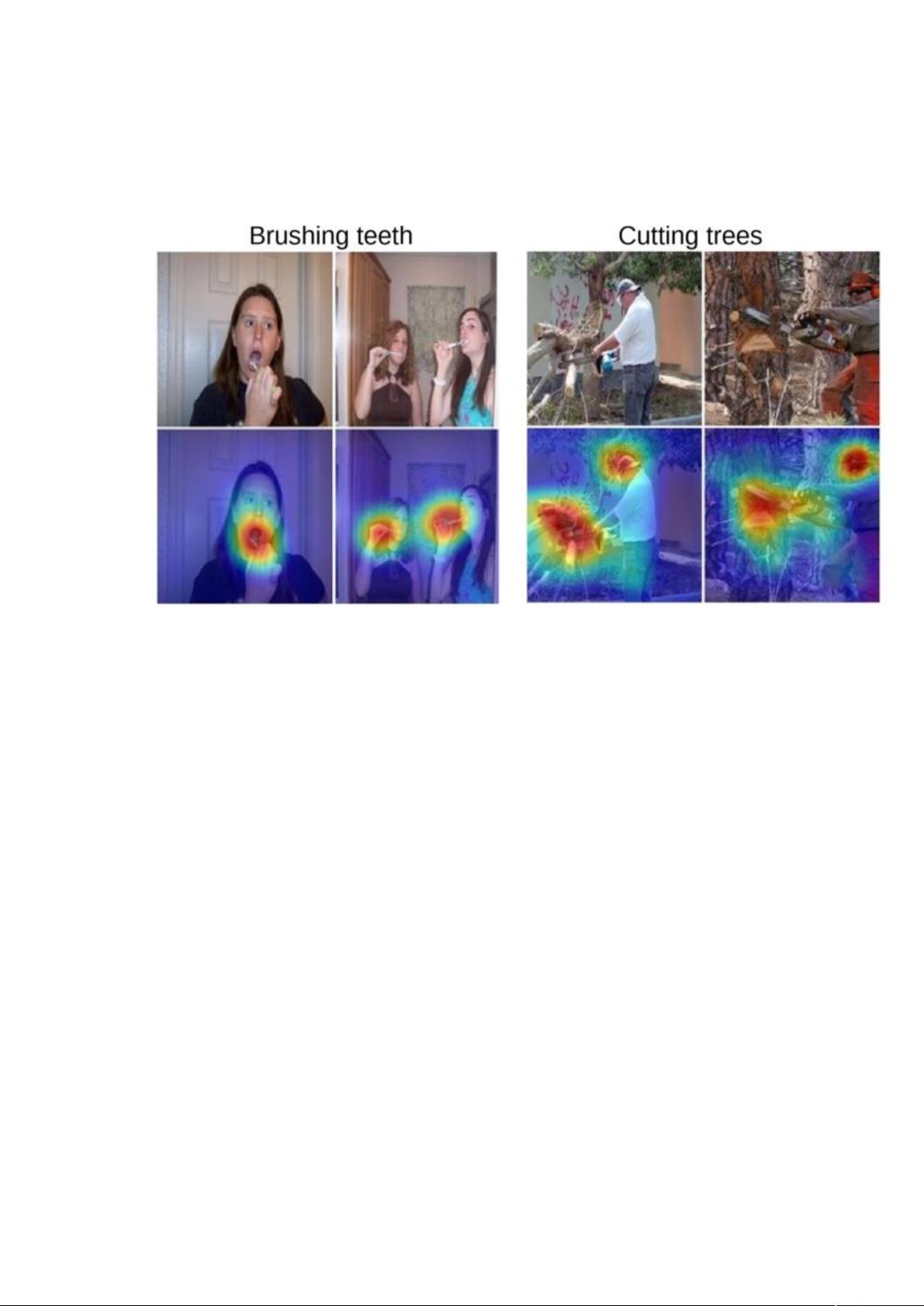

We use the term class activation map to refer to the weighted

activation maps generated for each image, as described in

Section 2. We would like to emphasize that while global

average pooling is not a novel technique that we propose here,

the observation that it can be applied for accurate

discriminative localization is, to the best of our knowledge,

unique to our work. We believe that the simplicity of this

technique makes it portable and applicable to a variety of

computer vision tasks for fast and accurate localization.

Visualizing CNNs: There have been a number of recent

works [29, 14, 4, 33] that visualize the internal

representation learned by CNNs in an attempt to better understand

their properties. Zeiler et al. [29] use deconvolutional

networks to visualize what patterns activate each unit. Zhou et

al. [33] show that CNNs learn object detectors while being

trained to recognize scenes, and demonstrate that the same

network can perform both scene recognition and object

localization in a single forward pass. Both of these works

only analyze the convolutional layers, ignoring the

fully-connected layers, thereby painting an incomplete picture

of the full story. By removing the fully-connected layers while

retaining most of the performance, we are able to understand our

network from the beginning to the end.

Mahendran et al. [14] and Dosovitskiy et al. [4] analyze

the visual encoding of CNNs by inverting deep features

at different layers. While these approaches can invert the

fully-connected layers, they only show what information

is being preserved in the deep features without highlight-

ing the relative importance of this information. Unlike [14]

and [4], our approach can highlight exactly which regions

of an image are important for discrimination. Overall, our

approach provides another glimpse into the soul of CNNs.

2. Class Activation Mapping

In this section, we describe the procedure for generating

class activation maps (CAM) using global average pooling

(GAP) in CNNs. A class activation map for a particular cat-

egory indicates the discriminative image regions used by the

CNN to identify that category (e.g., Fig. 3). The procedure

for generating these maps is illustrated in Fig. 2.

We use a network architecture similar to Network in Net-

work [13] and GoogLeNet [24] - the network largely con-

sists of convolutional layers, and just before the final out-

put layer (softmax in the case of categorization), we per-

form global average pooling on the convolutional feature

maps and use those as features for a fully-connected layer

that produces the desired output (categorical or otherwise).

Given this simple connectivity structure, we can identify

the importance of the image regions by projecting back the

weights of the output layer on to the convolutional feature

maps, a technique we call class activation mapping.

As illustrated in Fig. 2, global average pooling outputs

the spatial average of the feature map of each unit at the

last convolutional layer. A weighted sum of these values is

used to generate the final output. Similarly, we compute a

weighted sum of the feature maps of the last convolutional

layer to obtain our class activation maps. We describe this

more formally below for the case of softmax. The same

technique can be applied to regression and other losses.
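The procedure described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the feature maps and output-layer weights are random stand-ins, and the sizes `K`, `H`, `W`, `C` are assumed values chosen only for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed sizes for illustration: K units, an H x W spatial grid, C classes.
K, H, W, C = 8, 7, 7, 3

# Random stand-ins for the last convolutional layer's activations and for
# the output-layer weights (no bias term).
feature_maps = rng.random((K, H, W))
weights = rng.standard_normal((C, K))

# Global average pooling: one scalar per unit.
pooled = feature_maps.mean(axis=(1, 2))          # shape (K,)

# Class scores: a weighted sum of the pooled values.
scores = weights @ pooled                        # shape (C,)

# Class activation maps: the same weights applied to the unpooled feature maps.
cams = np.einsum("ck,khw->chw", weights, feature_maps)  # shape (C, H, W)

# Map for the highest-scoring class.
top_class = int(np.argmax(scores))
top_cam = cams[top_class]                        # shape (H, W)
```

Because pooling and the weighted sum are both linear, the spatial average of each map in `cams` equals the corresponding entry of `scores`, which is why the map can be read as a per-location contribution to the class score.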

For a given image, let $f_k(x, y)$ represent the activation

of unit $k$ in the last convolutional layer at spatial location

$(x, y)$. Then, for unit $k$, the result of performing global

average pooling, $F_k$, is $\sum_{x,y} f_k(x, y)$. Thus, for a given

class $c$, the input to the softmax, $S_c$, is $\sum_k w_k^c F_k$, where

$w_k^c$ is the weight corresponding to class $c$ for unit $k$. Essentially,

$w_k^c$ indicates the importance of $F_k$ for class $c$. Finally, the

output of the softmax for class $c$, $P_c$, is given by

$\frac{\exp(S_c)}{\sum_c \exp(S_c)}$.
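Substituting the definition of $F_k$ into $S_c$ and exchanging the two finite sums makes the link between the class score and a weighted sum of feature maps explicit. The symbol $M_c(x, y)$ is introduced here only as shorthand for the inner sum; it is a notational assumption of this worked step.

$$
S_c \;=\; \sum_k w_k^c F_k
\;=\; \sum_k w_k^c \sum_{x,y} f_k(x, y)
\;=\; \sum_{x,y} \sum_k w_k^c f_k(x, y)
\;=\; \sum_{x,y} M_c(x, y),
\qquad
M_c(x, y) := \sum_k w_k^c f_k(x, y).
$$

Read this way, each spatial location $(x, y)$ contributes $M_c(x, y)$ to the score of class $c$.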

Here we ignore the bias term: we explicitly set the input


