2 B. Fang, X. Ma, J. Wang et al. / Robotics and Autonomous Systems 131 (2020) 103592

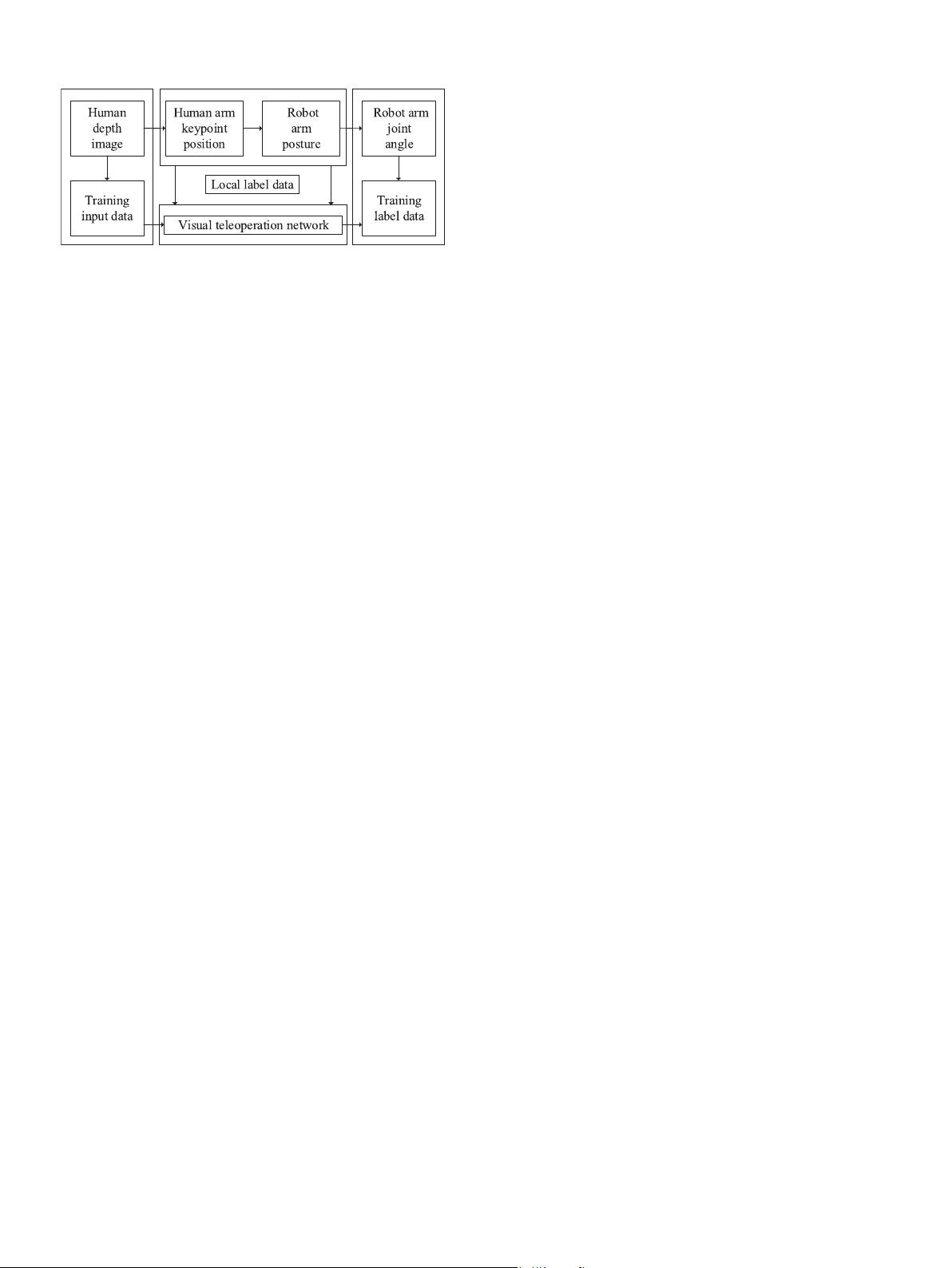

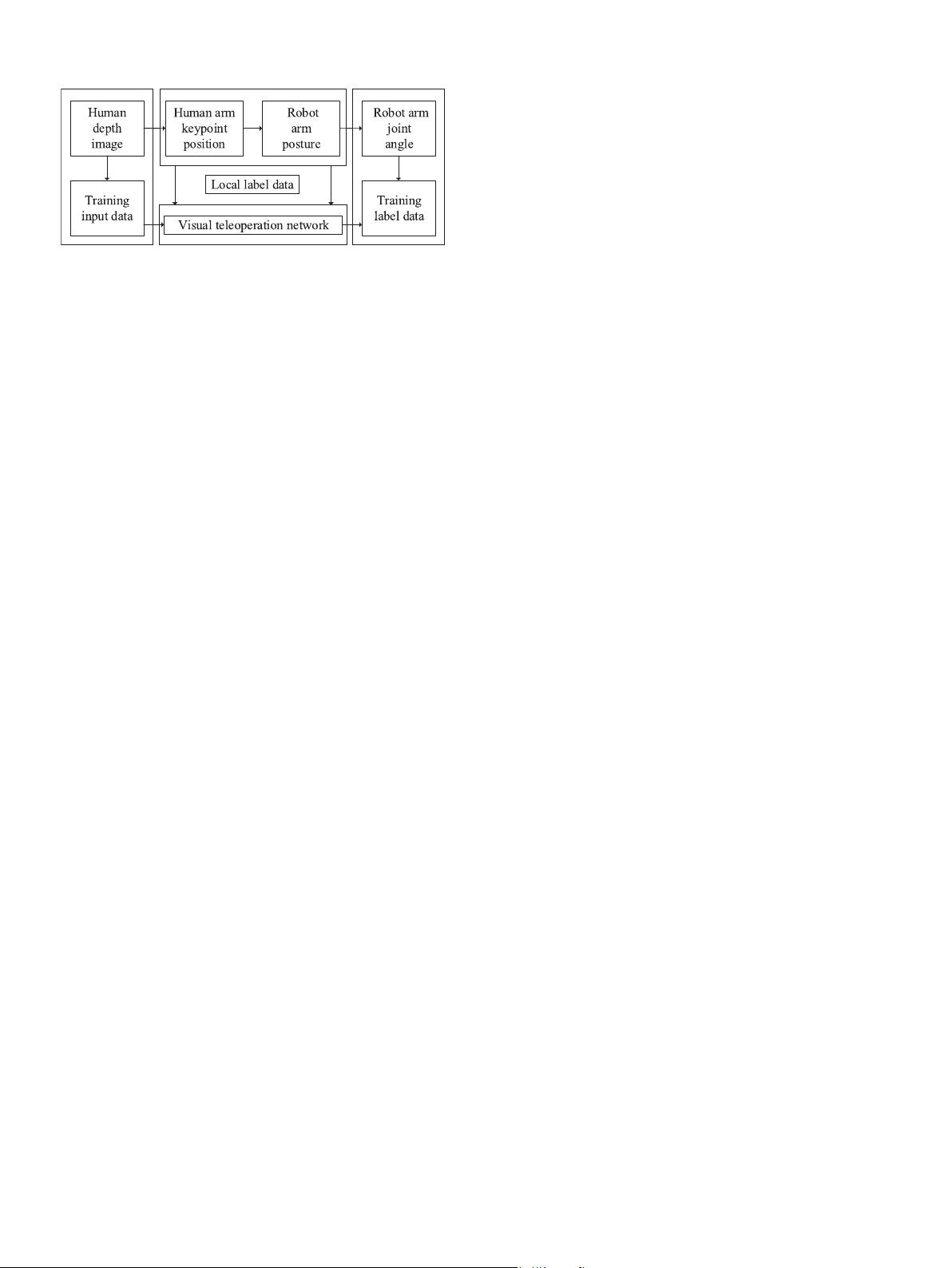

Fig. 1. The procedure of training the visual teleoperation network.

visual teleoperation network is also directly influenced by the quantity and quality of the training dataset. A dataset named UTD-MHAD, which consists of RGB images, depth images and skeleton positions of humans, is provided in [19]. The dataset in [20] includes 3.6 million accurate 3D human poses for training realistic human sensing systems and evaluating human pose estimation algorithms. However, a human–robot posture-consistent dataset containing paired human–robot data still needs to be established to train the multi-stage visual teleoperation network. Therefore, it is meaningful to develop a human–robot posture-consistent mapping for building a training database.

In this paper, a visual teleoperation framework based on deep neural networks is proposed for human–robot posture-consistent teleoperation. A dataset of human–robot posture-consistent data is generated by a novel mapping method that maps human body data to the corresponding robot arm joint angles. A multi-stage visual teleoperation network is trained on this dataset and then used to teleoperate a robot arm. An illustrative experiment is conducted to verify the proposed visual teleoperation scheme. The main contributions of this paper are as follows:

i. A visual teleoperation framework is proposed, featuring a deep neural network structure and a posture mapping method.

ii. A human–robot posture-consistent dataset is established by a data generator that calculates the corresponding robot arm joint angles from human body data.

iii. A multi-stage network structure is proposed to increase flexibility in training and using the visual teleoperation network.

The structure of the visual teleoperation network is introduced in Section 2. In Section 3, a novel human–robot posture-consistent mapping method is designed. Finally, experiments testing the visual teleoperation network are described in Section 4. The procedure for training the visual teleoperation network is shown in Fig. 1.

Notation: Throughout this paper, $\mathbb{R}^n$ is the $n$-dimensional Euclidean space, and $\|\cdot\|_2$ denotes the 2-norm of a vector.

2. Visual teleoperation network

Training a deep neural network to regress robot arm joint angles directly from human body data is challenging, because the underlying mapping is highly nonlinear, which makes learning difficult. To overcome this difficulty, a multi-stage visual teleoperation network, trained with an overall loss that combines losses for each stage, is proposed to generate robot arm joint angles from human body data.

2.1. Network structure

The proposed multi-stage visual teleoperation network consists of three stages: human arm keypoint position estimation, robot arm posture estimation and robot arm joint angle generation. The structure of the multi-stage visual teleoperation network is shown in Fig. 2.

Skeleton point estimation stage: To estimate human arm keypoint positions from a human depth image, the human arm keypoint position estimation stage is designed with a pixel-to-pixel part and a pixel-to-point part. The pixel-to-pixel part uses three kinds of building blocks. The first is a residual block consisting of convolution layers, batch normalization layers and an activation function. The second is a downsampling block, which is a max pooling layer. The third is an upsampling block containing deconvolution layers, batch normalization layers and an activation function. The kernel size of the residual blocks is 3 × 3, and that of the downsampling and upsampling layers is 2 × 2 with stride 2. The pixel-to-point part consists of max pooling layers, fully connected layers, batch normalization and an activation function.
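The downsampling and upsampling blocks described above can be illustrated on a single-channel grid. The sketch below is a minimal pure-Python illustration, not the authors' implementation: `max_pool_2x2` and `deconv_2x2` are hypothetical helper names, batch normalization and the activation function are omitted, and the 2 × 2 deconvolution kernel is an arbitrary example rather than learned weights.

```python
from typing import List

Grid = List[List[float]]


def max_pool_2x2(x: Grid) -> Grid:
    """Downsampling block: 2x2 max pooling with stride 2.

    Odd trailing rows/columns are dropped, as with unpadded pooling.
    """
    h, w = len(x) // 2, len(x[0]) // 2
    return [[max(x[2 * i][2 * j], x[2 * i][2 * j + 1],
                 x[2 * i + 1][2 * j], x[2 * i + 1][2 * j + 1])
             for j in range(w)]
            for i in range(h)]


def deconv_2x2(x: Grid, k: Grid) -> Grid:
    """Core of the upsampling block: single-channel 2x2 transposed
    convolution with stride 2 (no padding, no bias).

    Because kernel size equals stride, each input pixel writes a scaled
    copy of the kernel into its own non-overlapping 2x2 output patch.
    """
    h, w = len(x), len(x[0])
    out = [[0.0] * (2 * w) for _ in range(2 * h)]
    for i in range(h):
        for j in range(w):
            for a in range(2):
                for b in range(2):
                    out[2 * i + a][2 * j + b] += x[i][j] * k[a][b]
    return out


if __name__ == "__main__":
    depth = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
    pooled = max_pool_2x2(depth)
    print(pooled)  # [[6, 8], [14, 16]]
    print(deconv_2x2(pooled, [[1.0, 0.0], [0.0, 1.0]]))
```

Because the kernel size equals the stride, the pooling windows do not overlap and each deconvolution output patch comes from exactly one input pixel, so the two blocks halve and double the spatial resolution, respectively.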

Robot arm posture estimation: Robot arm directional angles are estimated from human arm keypoint positions in the robot arm posture estimation stage, which consists of max pooling layers, fully connected layers, batch normalization and an activation function.
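The fully connected, batch normalization and activation layers listed for this stage (and for the pixel-to-point part and the joint angle generation stage) can be sketched as a single unit applied to a mini-batch. This is an illustration under stated assumptions: the text does not name the activation function, so ReLU is assumed; batch statistics are computed in training mode only; and all helper names and weights are hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def linear(x: Matrix, weight: Matrix, bias: Sequence[float]) -> Matrix:
    """Fully connected layer: y = W x + b per sample (weight is out x in)."""
    return [[sum(w * v for w, v in zip(row, sample)) + b
             for row, b in zip(weight, bias)]
            for sample in x]


def batch_norm(h: Matrix, gamma: Sequence[float], beta: Sequence[float],
               eps: float = 1e-5) -> Matrix:
    """Training-mode batch normalization over the batch dimension."""
    n, d = len(h), len(h[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        col = [h[i][j] for i in range(n)]
        mean = sum(col) / n
        var = sum((c - mean) ** 2 for c in col) / n  # biased batch variance
        for i in range(n):
            out[i][j] = gamma[j] * (h[i][j] - mean) / math.sqrt(var + eps) + beta[j]
    return out


def relu(h: Matrix) -> Matrix:
    """Assumed activation function (not specified in the text)."""
    return [[max(0.0, v) for v in row] for row in h]


def fc_bn_act(x: Matrix, weight: Matrix, bias: Sequence[float],
              gamma: Sequence[float], beta: Sequence[float]) -> Matrix:
    """One fully connected -> batch norm -> activation unit."""
    return relu(batch_norm(linear(x, weight, bias), gamma, beta))


if __name__ == "__main__":
    batch = [[1.0, 2.0], [3.0, 4.0]]  # two hypothetical 2-D inputs
    identity = [[1.0, 0.0], [0.0, 1.0]]
    print(fc_bn_act(batch, identity, [0.0, 0.0], [1.0, 1.0], [0.0, 0.0]))
```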

Robot arm joint angle generation: In this stage, robot arm joint angles are generated from robot arm directional angles by fully connected layers, batch normalization layers and an activation function.

2.2. Loss function

An overall loss function for training the multi-stage visual teleoperation network consists of a human arm keypoint position estimation loss, a robot arm posture estimation loss, a robot arm joint angle generation loss and a physical constraint loss.
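The composition of the overall loss can be sketched as a weighted sum of the four terms. The weights below are hypothetical: this section does not state whether or how the terms are weighted, so unit weights (a plain sum) are the default. The physical constraint loss is taken as a precomputed value because its definition is not given here, and `LossWeights` and `overall_loss` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LossWeights:
    """Hypothetical per-term weights; unit weights give a plain sum."""
    keypoint: float = 1.0  # human arm keypoint position loss L_se
    posture: float = 1.0   # robot arm posture loss L_rp
    joint: float = 1.0     # robot arm joint angle generation loss L_rg
    physical: float = 1.0  # physical constraint loss (definition not shown here)


def overall_loss(l_se: float, l_rp: float, l_rg: float, l_phys: float,
                 w: LossWeights = LossWeights()) -> float:
    """Combine the three stage losses and the physical constraint loss."""
    return (w.keypoint * l_se + w.posture * l_rp
            + w.joint * l_rg + w.physical * l_phys)


if __name__ == "__main__":
    print(overall_loss(1.0, 2.0, 3.0, 0.5))
    print(overall_loss(1.0, 2.0, 3.0, 0.5, LossWeights(physical=0.0)))
```

Keeping the weights explicit makes it straightforward to rebalance stages if one term dominates in magnitude; this is a design suggestion rather than something stated in the paper.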

Skeleton point estimation loss: A mean squared error (MSE) function is adopted as the human arm keypoint position loss $L_{se}$ as follows,

$$L_{se} = \sum_{n=1}^{N} \left\| X_n - \tilde{X}_n \right\|_2 \tag{1}$$

where $X_n = (x_n, y_n, z_n) \in \mathbb{R}^3$ and $\tilde{X}_n = (\tilde{x}_n, \tilde{y}_n, \tilde{z}_n) \in \mathbb{R}^3$ denote the ground-truth and estimated coordinates of the $n$th keypoint of the human arm, respectively, and $N$ denotes the number of keypoints selected on the human arm.
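Equation (1) can be evaluated directly from ground-truth and estimated keypoints. The sketch below assumes keypoints are given as lists of (x, y, z) tuples and reads the norm in Eq. (1) as the 2-norm defined in the Notation, summing per-keypoint distances; the optional `squared` flag is an assumption covering a squared-error variant. The helper name `keypoint_loss` is hypothetical. The same form applies to Eq. (2) with one directional-angle triplet per robot keypoint.

```python
import math
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]


def keypoint_loss(gt: Sequence[Point3], est: Sequence[Point3],
                  squared: bool = False) -> float:
    """Sum over keypoints of ||X_n - X~_n||_2 (or its square if squared=True)."""
    if len(gt) != len(est):
        raise ValueError("ground-truth and estimated keypoint counts differ")
    total = 0.0
    for x, x_hat in zip(gt, est):
        d = math.dist(x, x_hat)  # Euclidean distance (Python 3.8+)
        total += d * d if squared else d
    return total


if __name__ == "__main__":
    gt = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]   # hypothetical ground truth
    est = [(3.0, 4.0, 0.0), (1.0, 1.0, 1.0)]  # hypothetical estimates
    print(keypoint_loss(gt, est))
    print(keypoint_loss(gt, est, squared=True))
```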

Robot arm posture loss: An MSE function is used for the robot arm posture loss $L_{rp}$ as follows,

$$L_{rp} = \sum_{n=1}^{N} \left\| R_n - \tilde{R}_n \right\|_2 \tag{2}$$

where $R_n = (r_{xn}, r_{yn}, r_{zn}) \in \mathbb{R}^3$ and $\tilde{R}_n = (\tilde{r}_{xn}, \tilde{r}_{yn}, \tilde{r}_{zn}) \in \mathbb{R}^3$ are the ground-truth and estimated robot arm directional angles of the $n$th robot keypoint, respectively. Note that here $N$ denotes the number of robot keypoints.

Robot arm joint angle generation loss: The robot arm joint angle generation loss $L_{rg}$, an MSE function supervising the robot arm joint angle generation stage, is given as follows,

$$L_{rg} = \left\| \Theta - \tilde{\Theta} \right\|_2 \tag{3}$$

where $\Theta = (\theta_1, \ldots, \theta_n) \in \mathbb{R}^n$ and $\tilde{\Theta} = (\tilde{\theta}_1, \ldots, \tilde{\theta}_n) \in \mathbb{R}^n$ are the ground-truth robot arm joint angles and estimated robot arm joint
