
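This paper examines the capabilities of large language models (LLMs) in two fundamental types of reasoning: deductive and inductive. It introduces a new evaluation framework, SolverLearner, that isolates the inductive reasoning of LLMs so that it can be studied on its own and compared with deductive reasoning. The results show that LLMs have strong inductive reasoning skills, yet exhibit comparatively weak deductive ability, particularly on "counterfactual" tasks. This finding offers a new perspective on the internal mechanisms of LLMs. Intended audience: researchers and developers in natural language processing, and practitioners who train deep learning models. Use cases and goals: examining how the ability of LLMs to handle deductive and inductive problems differs across settings, and the mechanisms behind those differences. The work can help researchers understand and improve LLM-based AI systems, especially their ability to solve novel or unconventional reasoning challenges.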


Inductive or Deductive? Rethinking the Fundamental Reasoning Abilities of LLMs

Kewei Cheng (1,2), Jingfeng Yang (2), Haoming Jiang (2), Zhengyang Wang (2), Binxuan Huang (2), Ruirui Li (2), Shiyang Li (2), Zheng Li (2), Yifan Gao (2), Xian Li (2), Bing Yin (2), Yizhou Sun (1)

(1) University of California, Los Angeles

(2) Amazon

{chenkewe, jingfe, jhaoming, zhengywa, binxuan, ruirul, syangli, amzzhe, yifangao, xianlee, alexbyin}@amazon.com

yzsun@cs.ucla.edu

Abstract

Reasoning encompasses two typical types: deductive reasoning and inductive reasoning. Despite extensive research into the reasoning capabilities of Large Language Models (LLMs), most studies have failed to rigorously differentiate between inductive and deductive reasoning, leading to a blending of the two. This raises an essential question: In LLM reasoning, which poses a greater challenge, deductive or inductive reasoning? While the deductive reasoning capabilities of LLMs (i.e., their capacity to follow instructions in reasoning tasks) have received considerable attention, their abilities in true inductive reasoning remain largely unexplored. To investigate the true inductive reasoning capabilities of LLMs, we propose a novel framework, SolverLearner. This framework enables LLMs to learn the underlying function (i.e., $y = f_w(x)$) that maps input data points ($x$) to their corresponding output values ($y$), using only in-context examples. By focusing on inductive reasoning and separating it from LLM-based deductive reasoning, we can isolate and investigate inductive reasoning of LLMs in its pure form via SolverLearner. Our observations reveal that LLMs demonstrate remarkable inductive reasoning capabilities through SolverLearner, achieving near-perfect performance with ACC of 1 in most cases. Surprisingly, despite their strong inductive reasoning abilities, LLMs tend to be comparatively weak in deductive reasoning, particularly in tasks involving “counterfactual” reasoning.

1 Introduction

Recent years have witnessed notable progress in Natural Language Processing (NLP) with the development of Large Language Models (LLMs) like GPT-3 (Brown et al., 2020) and ChatGPT (OpenAI, 2023). While these models exhibit impressive reasoning abilities across various tasks, they face challenges in certain domains. For example, a recent study (Wu et al., 2023) has shown that while LLMs excel in conventional tasks (e.g., base-10 arithmetic), they often experience a notable decline in accuracy when dealing with “counterfactual” reasoning tasks that deviate from the conventional cases seen during pre-training (e.g., base-9 arithmetic). It remains unclear whether they are capable of fundamental reasoning, or just approximate retrieval.

In light of this, our paper seeks to investigate the reasoning capabilities of LLMs. Reasoning encompasses two types: deductive reasoning and inductive reasoning, as depicted in Fig. 1. Deductive reasoning starts with a general hypothesis and proceeds to derive specific conclusions about individual instances, while inductive reasoning involves formulating broad generalizations or principles from a set of instance observations. Despite extensive research into the reasoning capabilities of LLMs, most studies have not clearly differentiated between inductive and deductive reasoning. For instance, arithmetic reasoning tasks primarily focus on comprehending and applying mathematical concepts to solve arithmetic problems, aligning more with deductive reasoning. Yet, when in-context learning is employed for arithmetic reasoning tasks, where the model is prompted with a few ⟨input, output⟩ examples, the observed improvements are often attributed to the model's inductive reasoning capacity. This fusion of reasoning types poses a critical question: Which is the more significant limitation in LLM reasoning, deductive or inductive reasoning?

To explore this question, it’s crucial to differen-

tiate between deductive and inductive reasoning.

Current methods that investigate deductive and in-

ductive reasoning often rely on disparate datasets,

making direct comparisons challenging (Xu et al.,

2023a; Tang et al., 2023; Dalvi et al., 2021; Han

et al., 2022; Sinha et al., 2019; Yu et al., 2020). To

overcome this limitation, we have designed a set of

comparative experiments that utilize a consistent

task across different contexts, each emphasizing

arXiv:2408.00114v2 [cs.AI] 7 Aug 2024

(b) Few-shot IO w/ Mapping Function

Q: Assuming that all numbers are in base-8 where

the digits are "01234567", what is 57+27?

A: The result for 57+27 is 106.

Q: Assuming that all numbers are in base-8 where

the digits are "01234567", what is 36+33?

A:

(Output) The result for 36+33 is 71.

(a) Zero-shot

Q: Assuming that all numbers are in

base-8 where the digits are

"01234567", what is 36+33?

A:

(Output) 71

Deductive Reasoning Inductive Reasoning

General Principle Specific Conclusion

The result for 36+33 is 71.

General ➔ Specific

Deductive

Reasoning

(c) Few-shot IO w/o Mapping Function

Q: You are asked to add two numbers, the

base of which is unknown, what is 57+27?

A: The result for 57+27 is 106.

Q: You are asked to add two numbers, the

base of which is unknown, what is 36+33?

A:

(Output) The result for 36+33 is 71.

(d) SolverLearner

Q: You are asked to add two numbers, the base of which is unknown, what is 57+27?

A: The result for 57+27 is 106.

Q: What is the function to map the input to the output?

A:

(Output) def solver(n1: str, n2: str) -> str:\n # Let's analyze the given examples to find

the base\n # 57 + 27 = 106\n # It seems like the base is 8 (octal)\n\n # Convert the

input strings to integers in base 8\n num1 = int(n1, 8)\n num2 = int(n2, 8)\n\n #

Calculate the sum\n result = num1 + num2\n\n # Convert the result back to a string in

base 8\n return oct(result)[2:]

Addition in base 8

Begin from the rightmost digit, perform the addition. If the sum

exceeds 8, subtract 8, record the remainder, and carry over 1

to the next column. Repeat this process from right to left for

each column, and your final result will be the sum in base 8.

The result for 71+44 is 135.

The result for 42+70 is 132.

The result for 50+45 is 115.

The result for 61+55 is 136.

The result for 63+22 is 105.

Specific ➔ Specific Specific ➔ GeneralGeneral + Specific ➔ Specific

Specific Observation General Principle

Addition in base 8

Begin from the rightmost digit, perform the addition. If the sum

exceeds 8, subtract 8, record the remainder, and carry over 1

to the next column. Repeat this process from right to left for

each column, and your final result will be the sum in base 8.

Inductive

Reasoning

Integrate the deductive reasoning with few-shot

examples

Traditional IO prompting for inductive

reasoning

Completely decouple inductive reasoning from deductive reasoningDeductive Reasoning

Deductive Setting (mapping function is provided)

Inductive Setting (mapping function is not provided)

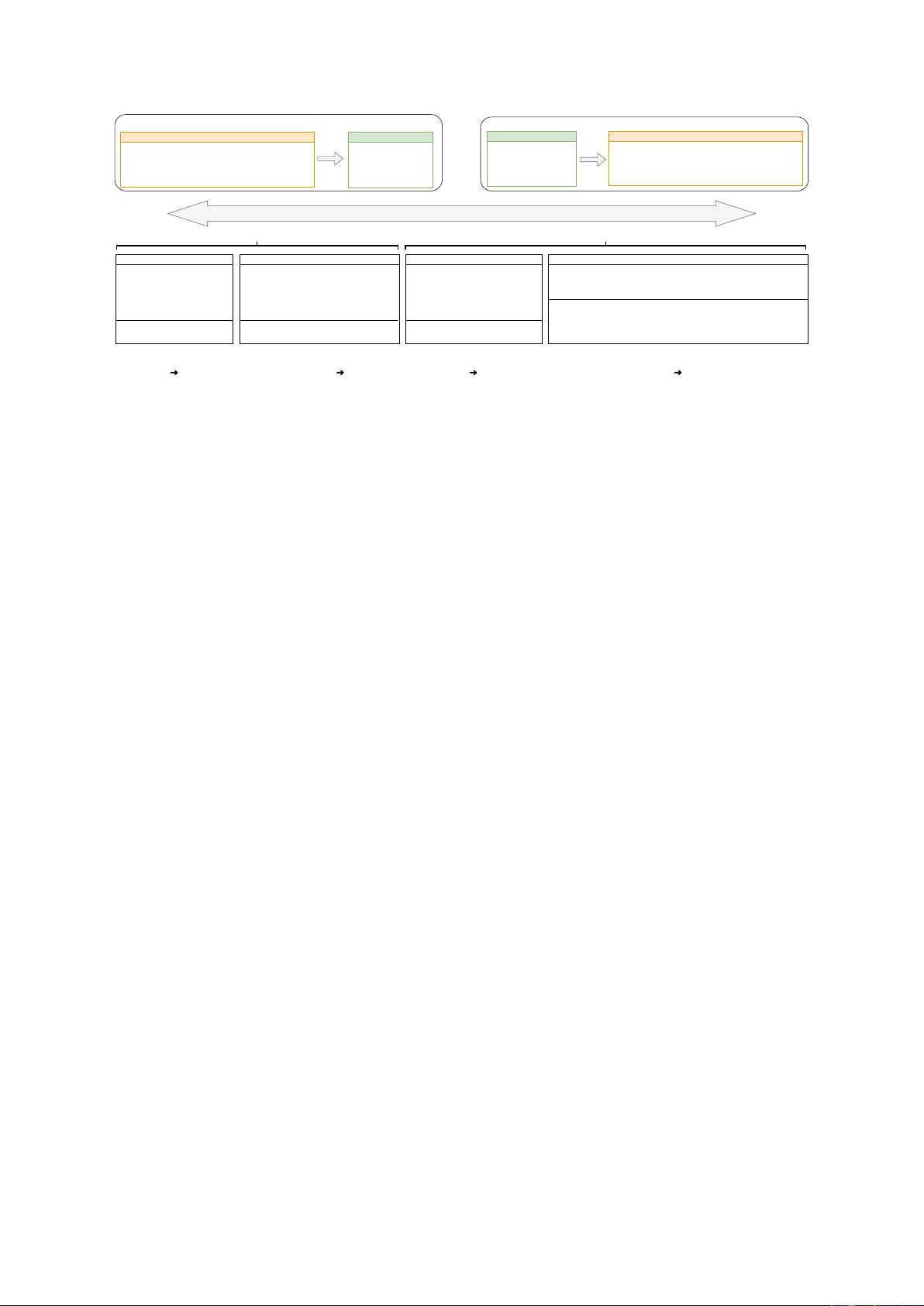

Figure 1: We have designed a set of comparative experiments that utilize a consistent task across different contexts, each

emphasizing either deductive (i.e., methods (a) and (b)) or inductive reasoning (i.e., methods (c) and (d)). As we move from left

to right across the figure, the methods gradually transition their primary focus from deductive reasoning to inductive reasoning.

Specifically, method (a) is designed to demonstrate the LLMs’ deductive reasoning in its pure form. Conversely, method (c)

utilizes Input-Output (IO) prompting strategies, which are prevalent for probing the inductive reasoning skills of LLMs. However,

we can observe that methods (c) cannot fully disentangle inductive reasoning from deductive reasoning as their learning process

directly moves from observations to specific instances, blurring the lines between the two. To exclusively focus on and examine

inductive reasoning, we introduce a novel framework called SolverLearner, positioned at the far right of the spectrum.

either deductive (i.e., methods (a) and (b)) or induc-

tive reasoning (i.e., methods (c) and (d)), as depicted

in Fig 1. For instance, in an arithmetic task, the pro-

ficiency of a LLM in deductive reasoning depends

on its ability to apply a given input-output mapping

function to solve problems when this function is

explicitly provided. Conversely, an LLM’s skill

in inductive reasoning is measured by its ability

to infer these input-output mapping functions (i.e.,

𝑦 = 𝑓

𝑤

(𝑥)

), that maps input data points

(𝑥)

to their

corresponding output values

(𝑦)

, based solely on

in-context examples. The base system often serves

as the input-output mapping function in an arith-

metic task. In line with the aforementioned setup,

we employ four methods to investigate the reason-

ing capacity of LLMs. As we move from left to

right across Fig. 1, the methods gradually transition

their primary focus from deductive reasoning to

inductive reasoning. Method (a), at the far left of

the figure, aims to explore the deductive reasoning

capabilities of LLMs in its pure form, where no in-

context-learning examples are provided (zero-shot

settings). While exploring deductive reasoning in

its pure form appears relatively straightforward in

zero-shot settings, untangling inductive reasoning

poses more significant challenges. Recent studies

have investigated the inductive reasoning abilities

of LLMs (Yang et al., 2022; Gendron et al., 2023;

Xu et al., 2023b), they have primarily used Input-

Output (IO) prompting (Mirchandani et al., 2023),

which involves providing models with a few

〈

in-

put, output

〉

as demonstrations without providing

the underlying mapping function. The models

are then evaluated based on their ability to han-

dle unseen examples, as illustrated in method (c).

These studies often find LLMs facing difficulties

with inductive reasoning. Our research suggests

that the use of IO prompting might not effectively

separate LLMs’ deductive reasoning skills from

their inductive reasoning abilities. This is because

the approach moves directly from observations to

specific instances, obscuring the inductive reason-

ing steps. Consequently, the underperformance in

the context of inductive reasoning tasks may be

attributed to poor deductive reasoning capabilities,

i.e., the ability of LLMs to execute tasks, rather than

being solely indicative of their inductive reasoning

capability.
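The column-by-column rule for base-8 addition quoted in Fig. 1 can be made concrete with a short sketch. This is an illustration only, not code from the paper: the function name `add_in_base` and the `DIGITS` table are assumptions introduced here, and the carry is taken whenever a column sum reaches the base.

```python
DIGITS = "0123456789ABCDEF"  # digit symbols for bases up to 16 (illustrative)

def add_in_base(n1: str, n2: str, base: int = 8) -> str:
    """Add two digit strings column by column, right to left, with carries."""
    width = max(len(n1), len(n2))
    a, b = n1.zfill(width), n2.zfill(width)
    carry = 0
    out = []
    for da, db in zip(reversed(a), reversed(b)):
        total = DIGITS.index(da) + DIGITS.index(db) + carry
        # A column sum of `base` or more produces a carry of 1.
        carry, digit = divmod(total, base)
        out.append(DIGITS[digit])
    if carry:
        out.append(DIGITS[carry])
    return "".join(reversed(out))

print(add_in_base("57", "27"))  # the few-shot example from Fig. 1
print(add_in_base("36", "33"))  # the test query from Fig. 1
```

Applying a stated rule like this one is the deductive step; in the zero-shot setting the LLM itself has to carry it out.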

To disentangle inductive reasoning from deductive reasoning, we propose a novel model, referred to as SolverLearner. Given our primary focus on inductive reasoning, SolverLearner follows a two-step process to segregate the learning of input-output mapping functions from the application of these functions for inference. Specifically, functions are applied through external interpreters, such as code interpreters, to avoid incorporating LLM-based deductive reasoning.

We evaluate the performance of several LLMs across various tasks. LLMs consistently demonstrate remarkable inductive reasoning capabilities through SolverLearner, achieving near-perfect performance with ACC of 1 in most cases. Surprisingly, despite their strong inductive reasoning abilities, LLMs tend to exhibit weaker deductive capabilities, particularly in terms of “counterfactual” reasoning. This finding, though unexpected, aligns with previous research (Wu et al., 2023). In a zero-shot scenario, the ability of an LLM to correctly execute tasks by applying principles (i.e., deductive reasoning) relies heavily on the frequency with which the model was exposed to the tasks during its pre-training phase.

2 Task Definition

Our research is focused on a relatively unexplored question: Which presents a greater challenge to LLMs, deductive reasoning or inductive reasoning? To explore this, we designed a set of comparative experiments that apply a uniform task across various contexts, each emphasizing either deductive or inductive reasoning. The primary distinction between the deductive and inductive settings is whether we explicitly present input-output mappings to the models. Informally, we can describe these mappings as a function $f_w : X \to Y$, where an input $x \in X$ is transformed into an output $y \in Y$. We distinguish between the deductive and inductive settings as follows:

• Deductive setting: we provide the models with direct input-output mappings (i.e., $f_w$).

• Inductive setting: we offer the models a few examples (i.e., $(x, y)$ pairs) while intentionally leaving out input-output mappings (i.e., $f_w$).

For example, consider arithmetic tasks, where the base system is the input-output mapping function. The two approaches on the left side of Fig. 1 (i.e., methods (a) and (b)) follow the deductive setting, illustrating the case where the arithmetic base is explicitly provided. In contrast, the two methods on the right side of Fig. 1 (i.e., methods (c) and (d)) adhere to the inductive setting, in which no arithmetic base is specified and only a few input-output examples are provided for guidance.
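As a rough illustration of the two settings, the sketch below builds one prompt of each kind for the arithmetic task. The wording follows the prompts shown in Fig. 1 (methods (a) and (c)); the helper names `deductive_prompt` and `inductive_prompt` are hypothetical and not taken from the paper.

```python
DIGITS = "0123456789ABCDEF"  # digit symbols for bases up to 16 (illustrative)

def deductive_prompt(base: int, query: str) -> str:
    """Deductive setting: the mapping function (the base) is stated explicitly."""
    return (
        f"Q: Assuming that all numbers are in base-{base} where the digits are "
        f'"{DIGITS[:base]}", what is {query}?\nA:'
    )

def inductive_prompt(examples: list[tuple[str, str]], query: str) -> str:
    """Inductive setting: only (x, y) pairs are shown; the base is withheld."""
    question = ("Q: You are asked to add two numbers, the base of which "
                "is unknown, what is {}?")
    lines = []
    for expr, result in examples:
        lines.append(question.format(expr))
        lines.append(f"A: The result for {expr} is {result}.")
    lines.append(question.format(query))
    lines.append("A:")
    return "\n".join(lines)

print(deductive_prompt(8, "36+33"))
print(inductive_prompt([("57+27", "106")], "36+33"))
```

The only difference between the two prompts is whether $f_w$ (here, the base) appears in the text, which is the distinction the task definition draws.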

3 Our Framework for Inductive Reasoning: SolverLearner

While recent studies have explored the inductive

reasoning abilities of LLMs (Yang et al., 2022; Gen-

dron et al., 2023; Xu et al., 2023b), they have primar-

ily relied on Input-Output (IO) prompting (Mirchan-

dani et al., 2023). This method involves providing

models with a few

〈

input, output

〉

demonstrations

and then evaluating their performance on unseen

examples, as depicted in method (c) in Fig. 1. Our

research suggests that the use of IO prompting and

directly evaluating the final instance performance

might not effectively separate LLMs’ deductive

reasoning skills from their inductive reasoning abil-

ities. This is because the approach moves directly

from observations to specific instances, obscuring

the inductive reasoning steps. To better disentangle

inductive reasoning, we propose a novel framework,

SolverLearner. This framework enables LLMs to

learn the function (i.e.,

𝑦 = 𝑓

𝑤

(𝑥)

), that maps in-

put data points

(𝑥)

to their corresponding output

values

(𝑦)

, using only in-context examples. By

focusing on inductive reasoning and setting aside

LLM-based deductive reasoning, we can isolate and

investigate inductive reasoning of LLMs in its pure

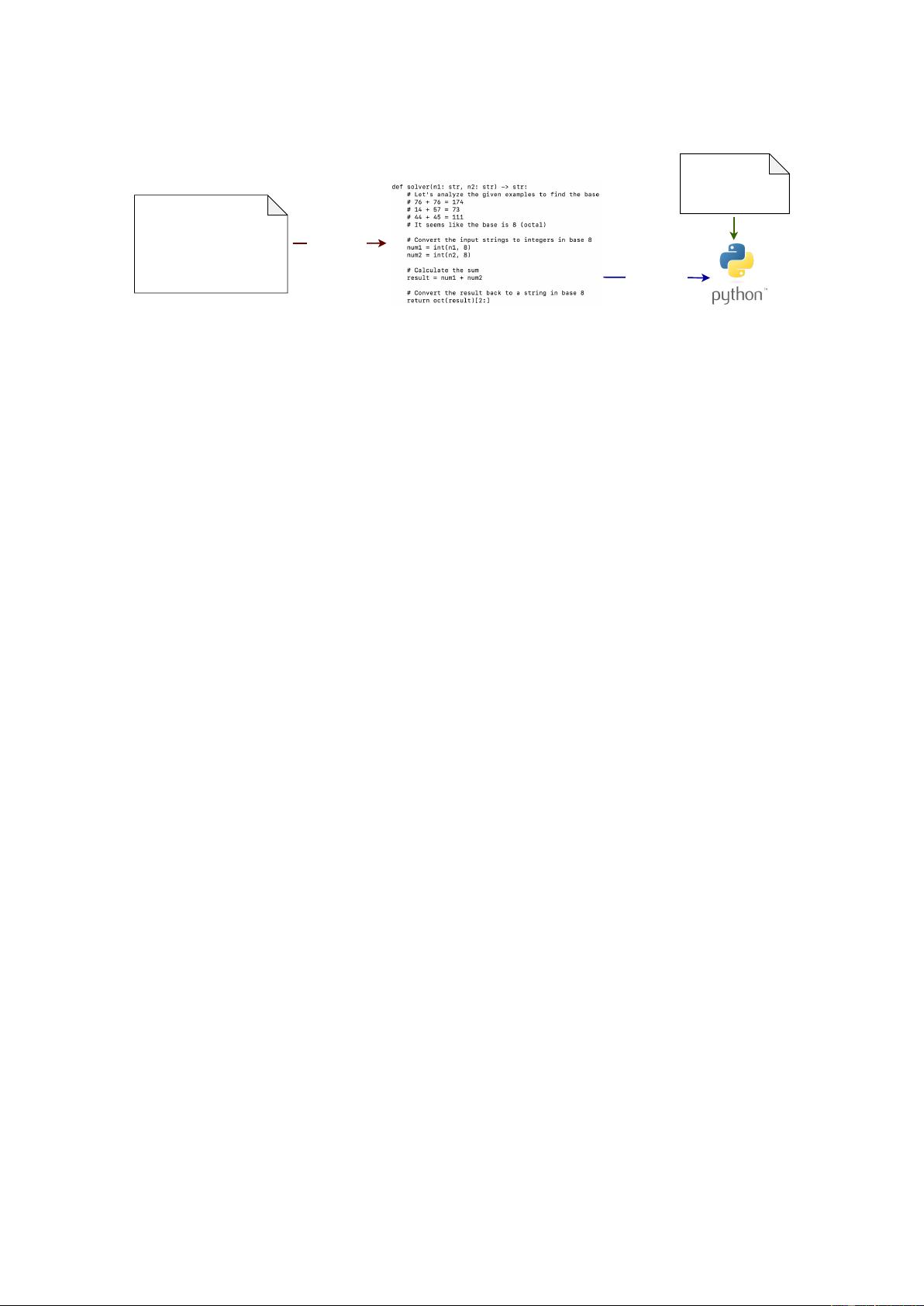

form via SolverLearner. SolverLearner includes

two-stages as illustrated in Fig. 2:

•

Function Proposal: In this initial phase, we

propose a function, that could be used to map

input data points

(𝑥)

to their corresponding output

values

(𝑦)

. This is corresponding to the inductive

reasoning process.

•

Function Execution: In the second phase, the

proposed function is applied through external

code interpreters to solve the test queries for

evaluation purposes. This phase ensures that

the LLM is fully prevented from engaging in

deductive reasoning.

3.1 Framework

In this subsection, we take the arithmetic task as a case study to demonstrate the entire process.

[Figure 2 content] 8-shot examples (The result for 71+44 is 135. The result for 42+70 is 132. The result for 50+45 is 115. The result for 61+55 is 136. The result for 63+22 is 105. The result for 72+62 is 154. The result for 57+27 is 106. The result for 52+76 is 150.) ➔ ① Function Proposal ➔ Python function ➔ ② Function Execution on the test queries (14+57, 44+45, ..., 61+23, 22+77).

Figure 2: An overview of our framework SolverLearner for inductive reasoning. SolverLearner follows a two-step process to segregate the learning of input-output mapping functions from the application of these functions for inference. Specifically, functions are applied through external code interpreters, to avoid incorporating LLM-based deductive reasoning.

Function Proposal: Given the in-context examples, the primary goal of LLMs is to learn a function that can map input data points ($x$) to their corresponding output values ($y$). This process of learning the mapping between inputs and outputs is akin to inductive reasoning, while employing the learned function to address unseen queries aligns with deductive reasoning. In order to separate inductive reasoning from deductive reasoning, the execution of the learned function should be completely detached from LLMs. To achieve this separation, external tools such as code interpreters serve as an efficient way to execute these functions independently. By encapsulating the learned function within Python code, we can effectively detach the duty of deductive reasoning from LLMs, assigning it solely to these external executors. For instance, in the function proposal stage for an arithmetic task, we have:

“You are an expert mathematician and programmer. You are asked to add two numbers, the base of which is unknown. Below are some provided examples: The result for 76+76 is 174.
Please identify the underlying pattern to determine the base being used and implement a solver() function to achieve the goal.
def solver(n1: str, n2: str) -> str:
    # Let’s write a Python program step by step
    # Each input is a number represented as a string.
    # The function computes the sum of these numbers and returns it as a string.”

Function Execution: In the second phase, functions are executed through external code interpreters to solve the test cases for evaluation purposes. These code interpreters act as “oracle” deductive reasoners, fully preventing the LLM from engaging in deductive reasoning. This ensures that the final results reflect only the inductive reasoning capability of the LLM. To further decouple the LLM’s influence in this phase, test cases are generated using a template without involving the LLM. More details can be found in Appendix A.1.3.
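The two stages can be sketched end to end as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `propose_function` is a hypothetical stand-in for the LLM call and simply returns a solver like the one shown in Fig. 1(d), `load_solver` and `accuracy` are names introduced here, and the expected answers for the Fig. 2 test queries were computed for this example in base 8.

```python
from typing import Callable

def propose_function(examples: list[str]) -> str:
    """Stage 1 (stub). A real run would send the few-shot prompt to an LLM;
    here the returned source mirrors the solver shown in Fig. 1(d)."""
    return (
        "def solver(n1: str, n2: str) -> str:\n"
        "    num1 = int(n1, 8)\n"
        "    num2 = int(n2, 8)\n"
        "    return oct(num1 + num2)[2:]\n"
    )

def load_solver(source: str) -> Callable[[str, str], str]:
    """Stage 2a: hand the proposed source to the Python interpreter."""
    namespace: dict = {}
    exec(source, namespace)  # untrusted code: sandbox this in real use
    return namespace["solver"]

def accuracy(solver: Callable[[str, str], str],
             tests: list[tuple[str, str, str]]) -> float:
    """Stage 2b: score the solver on templated test cases, no LLM involved."""
    correct = 0
    for n1, n2, expected in tests:
        try:
            correct += solver(n1, n2) == expected
        except Exception:
            pass  # a crashing solver counts as a wrong answer
    return correct / len(tests)

examples = ["The result for 76+76 is 174."]
solver = load_solver(propose_function(examples))
# Test queries from Fig. 2; expected base-8 sums computed for this sketch.
tests = [("14", "57", "73"), ("44", "45", "111"),
         ("61", "23", "104"), ("22", "77", "121")]
print(accuracy(solver, tests))
```

Because the interpreter, not the LLM, applies the function, any error in the final score can be traced back to the proposed function itself.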

4 Tasks

In this section, we provide a brief overview of the tasks under consideration. Our focus is on investigating the reasoning abilities of LLMs in both deductive and inductive reasoning scenarios. To ensure a robust evaluation, we carefully select tasks that lend themselves well to comparison. Firstly, to prevent LLMs from reciting tasks seen frequently during pre-training, which could artificially inflate performance in deductive reasoning, a significant portion of the tasks falls into the category of “counterfactual reasoning” tasks. Secondly, in the context of inductive reasoning, where only a few in-context examples are available without the mapping function, our objective is to learn the function that maps inputs to outputs based on this restricted dataset. To achieve this, we choose tasks that are well-constrained, ensuring the existence of a single, unique function capable of fitting this limited data. Detailed descriptions of each task and the prompts used can be found in Appendix A.1 and A.2.
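To see what "well-constrained" means for the arithmetic task, one can check which candidate bases are consistent with a given set of examples. The sketch below is an illustration, not part of the paper: `consistent_bases` is a hypothetical helper, and the candidate list is taken from the bases named in the Arithmetic task description.

```python
CANDIDATE_BASES = (8, 9, 10, 11, 16)  # bases studied in the arithmetic task

def consistent_bases(examples: list[tuple[str, str, str]],
                     bases: tuple[int, ...] = CANDIDATE_BASES) -> list[int]:
    """Return every candidate base in which all (a, b, a+b) examples hold."""
    matches = []
    for base in bases:
        try:
            ok = all(int(a, base) + int(b, base) == int(c, base)
                     for a, b, c in examples)
        except ValueError:
            ok = False  # some digit is not valid in this base
        if ok:
            matches.append(base)
    return matches

# An example with a carry pins down the base.
print(consistent_bases([("57", "27", "106")]))
# An example without carries fits every candidate base.
print(consistent_bases([("11", "22", "33")]))
```

Examples that involve a carry rule out all but one base, while carry-free examples leave the function under-determined, which is why the choice of in-context examples matters for uniqueness.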

Arithmetic In this study, we focus on the two-digit addition task previously explored in the work of Wu et al. (2023). We investigate multiple numerical bases, specifically base-8, 9, 10, 11, and 16, where base 10 corresponds to the commonly observed case during pretraining. In the context of deductive reasoning, the base is explicitly provided without any accompanying in-context examples, and the LLM is expected to perform the addition computation by relying on its inherent deductive reasoning abilities. Conversely, in the context of inductive reasoning, instead of explicitly providing the base information to LLMs, we provide LLMs solely with few-shot examples and require them to induce the base through these examples and subsequently generate a function to solve arithmetic problems.
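A templated generator for two-digit addition cases in an arbitrary base might look like the sketch below. The paper's actual template is described in its Appendix A.1.3, which is not reproduced here, so everything in this block (the names `to_base` and `make_cases`, the seeding, the sampling range) is an assumption made for illustration.

```python
import random

DIGITS = "0123456789ABCDEF"  # digit symbols for bases up to 16 (illustrative)

def to_base(value: int, base: int) -> str:
    """Render a non-negative integer as a digit string in the given base."""
    if value == 0:
        return "0"
    out = []
    while value:
        value, remainder = divmod(value, base)
        out.append(DIGITS[remainder])
    return "".join(reversed(out))

def make_cases(base: int, count: int, seed: int = 0) -> list[tuple[str, str, str]]:
    """Sample (n1, n2, n1+n2) triples where n1 and n2 have two digits in `base`."""
    rng = random.Random(seed)
    cases = []
    for _ in range(count):
        a = rng.randrange(base, base * base)  # smallest to largest two-digit value
        b = rng.randrange(base, base * base)
        cases.append((to_base(a, base), to_base(b, base), to_base(a + b, base)))
    return cases

for base in (8, 9, 10, 11, 16):
    n1, n2, total = make_cases(base, count=1)[0]
    print(f"base-{base}: The result for {n1}+{n2} is {total}.")
```

The same generator can supply both the few-shot examples for the inductive setting and the held-out test queries, since the ground truth never depends on an LLM.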

Basic Syntactic Reasoning In this setting, we concentrate on tasks related to syntactic recognition previously explored by Wu et al. (2023). Our objective is to evaluate LLMs using artificially constructed English sentences that vary from the conventional subject-verb-object (SVO) word order. For deductive reasoning, we directly provide the new word order to LLMs without any contextual examples, challenging them to identify the subject, verb, and object within this artificial language. In contrast, for inductive reasoning, we do not give
