
Re-Reading Improves the Reasoning Ability of Large Language Models


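Summary: This paper introduces RE2, a method that strengthens the reasoning ability of large language models (LLMs) by having them read the question twice. Unlike conventional Chain-of-Thought (CoT) prompting, RE2 raises overall performance by improving the model's understanding of the input question. It is validated on multiple datasets and shows good generality and compatibility. Compared with plain CoT prompting, the experiments show that re-reading the question markedly strengthens the model's understanding of, and attention to, the task.

Intended audience: researchers working on natural language processing or large language models.

Use cases and goals: improving LLM performance across reasoning evaluations, such as mathematical reasoning, text comprehension, and complex multi-step reasoning, by using a simple and effective prompting method to raise reasoning accuracy.

Additional notes: RE2 not only improves model performance on its own but can also be combined with many existing LLM improvement strategies, which opens up a wide range of applications.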

Re-Reading Improves Reasoning in Large Language Models

Xiaohan Xu¹*, Chongyang Tao², Tao Shen³, Can Xu², Hongbo Xu¹, Guodong Long³, Jian-guang Lou²

¹ Institute of Information Engineering, CAS, {xuxiaohan,hbxu}@iie.ac.cn

² Microsoft Corporation, {chotao,caxu,jlou}@microsoft.com

³ AAII, School of CS, FEIT, UTS, {tao.shen,guodong.long}@uts.edu.au

Abstract

To enhance the reasoning capabilities of off-the-shelf Large Language Models (LLMs), we introduce a simple, yet general and effective prompting method, RE2, i.e., Re-Reading the question as input. Unlike most thought-eliciting prompting methods, such as Chain-of-Thought (CoT), which aim to elicit the reasoning process in the output, RE2 shifts the focus to the input by processing questions twice, thereby enhancing the understanding process. Consequently, RE2 demonstrates strong generality and compatibility with most thought-eliciting prompting methods, including CoT. Crucially, RE2 facilitates a "bidirectional" encoding in unidirectional decoder-only LLMs because the first pass could provide global information for the second pass. We begin with a preliminary empirical study as the foundation of RE2, illustrating its potential to enable "bidirectional" attention mechanisms. We then evaluate RE2 on extensive reasoning benchmarks across 14 datasets, spanning 112 experiments, to validate its effectiveness and generality. Our findings indicate that, with the exception of a few scenarios on vanilla ChatGPT, RE2 consistently enhances the reasoning performance of LLMs through a simple re-reading strategy. Further analyses reveal RE2’s adaptability, showing how it can be effectively integrated with different LLMs, thought-eliciting prompting, and ensemble strategies.¹

1 Introduction

In the ever-evolving landscape of artificial in-

telligence, large language models (LLMs) have

emerged as a cornerstone of natural language un-

derstanding and generation (Brown et al., 2020;

Touvron et al., 2023a,a; OpenAI, 2023). As these

LLMs have grown in capability, a pivotal challenge

has come to the forefront: imbuing them with the

*

This work was done during internship at Microsoft.

1

Our code is available at

https://github.com/Tebmer/

Rereading-LLM-Reasoning/

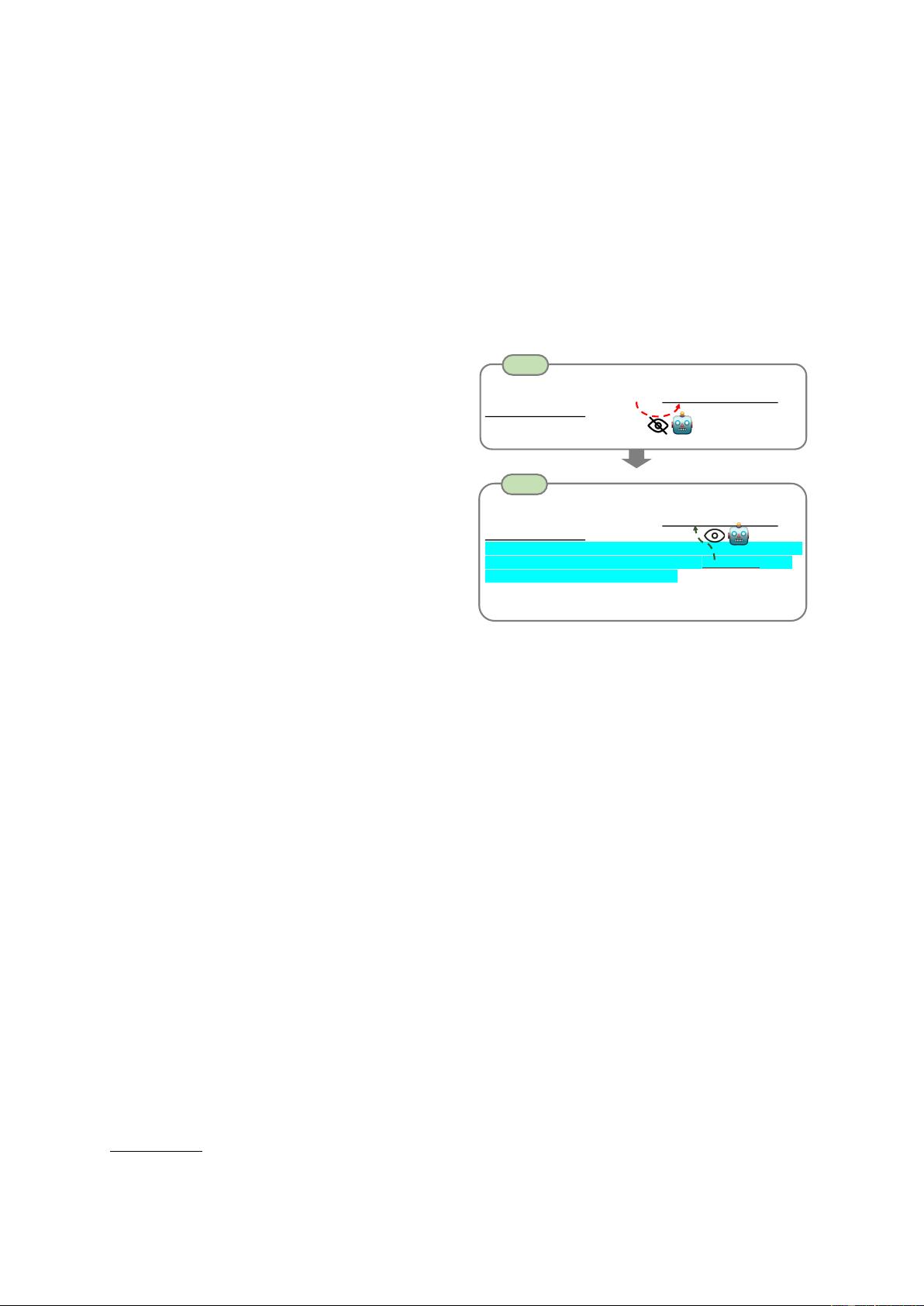

Input

CoT

Input

CoT+RE2

Q: Roger has 5 tennis balls. He buys 2 more cans of tennis

balls. Each can has 3 tennis balls. How many tennis balls

does he have now?

A: Let’s think step by step.

Q: Roger has 5 tennis balls. He buys 2 more cans of tennis

balls. Each can has 3 tennis balls. How many tennis balls

does he have now?

Read the question again: Roger has 5 tennis balls. He buys 2

more cans of tennis balls. Each can has 3 tennis balls. How

many tennis balls does he have now?

A: Let’s think step by step.

Figure 1: Example inputs of CoT prompting versus

CoT prompting with RE2. RE2 is a simple prompting

method that repeats the question as input. Typically,

tokens in the question, such as "tennis balls", cannot

see subsequent tokens in the original setup for LLMs

(the top figure). In contrast, LLMs with RE2 allows

"tennis balls" in the second pass to see the entire ques-

tion containing "How many ...", achieving an effect of a

"bidirectional" understanding (the bottom figure).

ability to reason effectively. The capacity to engage

in sound reasoning is a hallmark of human intelli-

gence, enabling us to infer, deduce, and solve prob-

lems. In LLMs, this skill is paramount for enhanc-

ing their practical utility. Despite their remarkable

capabilities, LLMs often struggle with nuanced rea-

soning (Blair-Stanek et al., 2023; Arkoudas, 2023),

prompting researchers to explore innovative strate-

gies to bolster their reasoning prowess (Wei et al.,

2022b; Gao et al., 2023; Besta et al., 2023).

Existing research on reasoning has predomi-

nantly concentrated on designing diverse thought-

eliciting prompting strategies to elicit reasoning

processes in the output phase, such as Chain-

of-Thought (CoT) (Wei et al., 2022b), Program-

Aided Language Model (PAL) (Gao et al., 2023),

etc. (Yao et al., 2023a; Besta et al., 2023; Wang

et al., 2023a). In contrast, scant attention has been

arXiv:2309.06275v2 [cs.CL] 29 Feb 2024

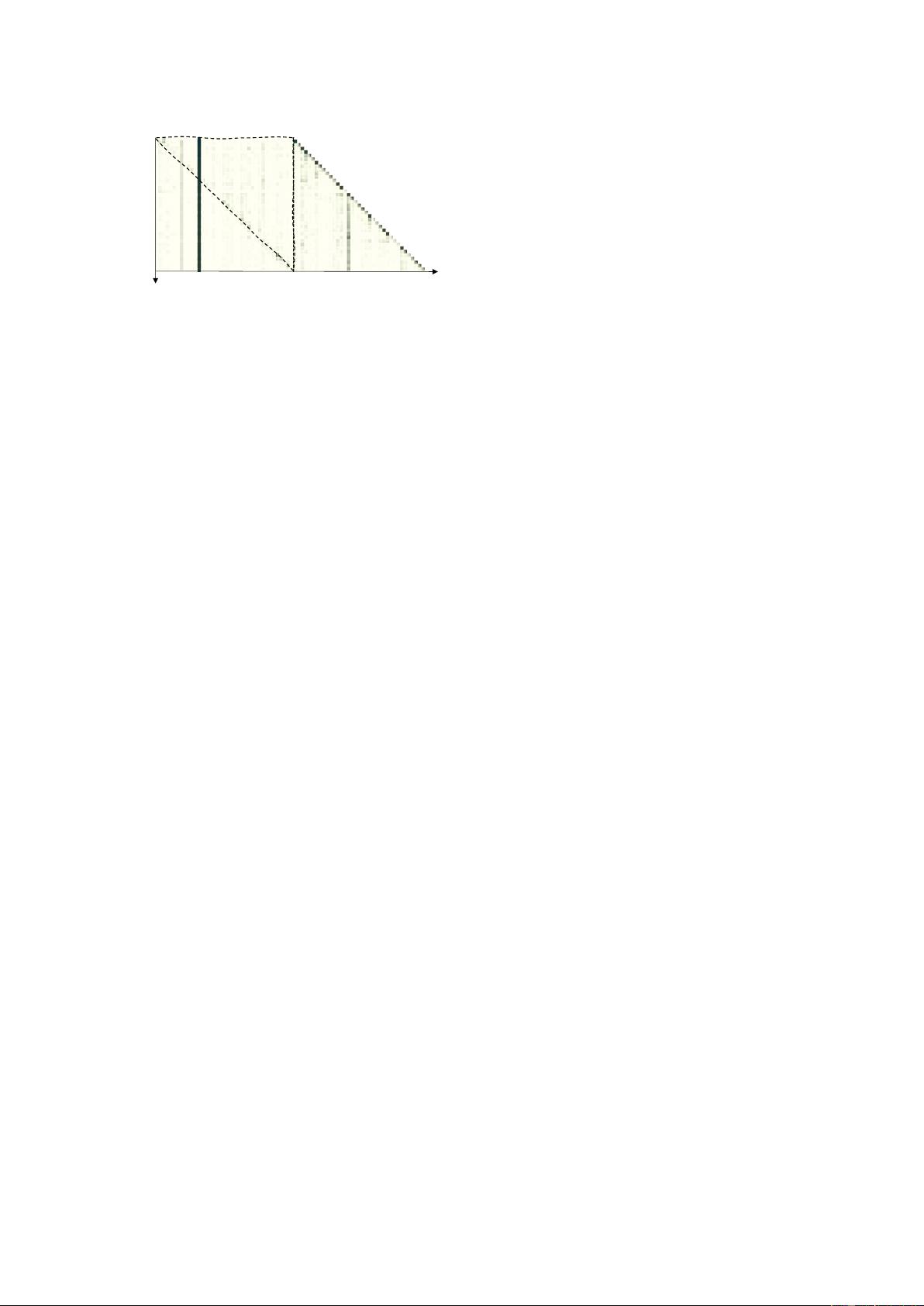

Second

Pass

First Pass Second Pass

Query

Key

Second Pass → (First Pass + Second Pass)

Figure 2: Illustration of the attention distribution of

each token in the second pass attending to the entire

input in LLaMA-2 using the GSM8K dataset. A darker

cell indicates higher attention. The region within the

dashed upper triangle demonstrates that every token in

the second pass has obvious attention to its subsequent

tokens in the first pass. This suggests that re-reading

in LLMs is promising for achieving a "bidirectional"

understanding of the question.

paid to the understanding of the input phase. In fact,

comprehension is the first step before solving the

problem, which is crucially important. However,

in the era of generative AI, most LLMs adopt the

decoder-only LLMs with unidirectional attention,

like GPT-3 (Brown et al., 2020) and LLaMA (Tou-

vron et al., 2023b). Compared with encoder-based

language models that feature bidirectional atten-

tion (Devlin et al., 2019), the unidirectional atten-

tion limits every token’s visibility to only previ-

ous tokens when encoding a question, potentially

impairing the global understanding of the ques-

tion (Du et al., 2022) (the top figure in Figure 1).

Fortunately, many cognitive science studies have re-

vealed that humans tend to re-read questions during

learning and problem-solving to enhance the com-

prehension process (Dowhower, 1987, 1989; Ozek

and Civelek, 2006). Motivated by this, we also

conduct a preliminary empirical study for LLaMA-

2 (Touvron et al., 2023b) by repeating the ques-

tion two times using the GSM8K dataset (Cobbe

et al., 2021). Figure 2 shows that LLaMA-2 with

re-reading is promising to achieve a "bidirectional"

understanding of the question, and further improve

the reasoning performance.

Based on this observation and inspired by the human strategy of re-reading, we present a simple yet effective and general reasoning prompting strategy, RE2, i.e., Re-Reading the question as input (see the illustration in Figure 1). Similar to the human problem-solving process, where the primary task is to comprehend the problem, we focus the design of our prompting strategy on the input phase. Thus, RE2 is orthogonal to and compatible with most thought-eliciting prompting methods in the output phase, such as CoT, PAL, etc. Moreover, instead of processing the input in only a single pass, the repetition of questions enables LLMs to allocate more computational resources to input encoding, akin to "horizontally" increasing the depth of neural networks. Therefore, LLMs with RE2 are likely to gain a deeper understanding of the question and improve reasoning performance. More interestingly, LLMs with RE2 show potential for a "bidirectional" understanding of questions in the context of unidirectional LLMs. This is because every token in the second pass could also attend to its subsequent tokens in the first pass (see the illustrations in Figure 1 and Figure 2).
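The "bidirectional" effect described above can be checked with a toy causal mask. The sketch below is only an illustration under simplifying assumptions: the token list is invented, the "Read the question again:" connector tokens are ignored, and the helper names `causal_mask` and `visible_question_tokens` are hypothetical rather than taken from the paper's code.

```python
def causal_mask(n):
    """Lower-triangular mask: position i may attend to positions 0..i only."""
    return [[j <= i for j in range(n)] for i in range(n)]


def visible_question_tokens(question_tokens, repeats):
    """For each token in the LAST copy of the question, return the sorted
    question-relative positions it can attend to in any copy."""
    n = len(question_tokens)
    total = n * repeats
    mask = causal_mask(total)
    start = n * (repeats - 1)  # first absolute position of the last copy
    visible = []
    for i in range(start, total):
        seen = {j % n for j in range(total) if mask[i][j]}
        visible.append(sorted(seen))
    return visible


# Toy tokenization (an assumption, not the model's real tokenizer).
tokens = ["Roger", "has", "5", "tennis", "balls", "How", "many", "?"]
idx = tokens.index("tennis")

single = visible_question_tokens(tokens, repeats=1)
double = visible_question_tokens(tokens, repeats=2)

print(single[idx])  # "tennis" in a single pass sees only positions 0..3
print(double[idx])  # "tennis" in the second pass sees every question position
```

With one pass, "tennis" never sees "How many"; with two passes, its second occurrence can attend to the whole first copy, which is the region highlighted by the dashed triangle in Figure 2.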

To validate the efficacy and generality of RE2, we conducted extensive experiments spanning arithmetic, commonsense, and symbolic reasoning tasks across 14 datasets and 112 experiments. The results show that, with the exception of certain scenarios on vanilla ChatGPT, our RE2 with a simple re-reading strategy consistently enhances the reasoning performance of LLMs. RE2 exhibits versatility across various LLMs, such as Text-Davinci-003, ChatGPT, LLaMA-2-13B, and LLaMA-2-70B, spanning both instruction fine-tuning (IFT) and non-IFT models. We also explore RE2 in task settings of zero-shot and few-shot, thought-eliciting prompting methods, and the self-consistency setting, highlighting its generality.

2 Methodology

2.1 Vanilla Chain-of-Thought for Reasoning

We begin with a unified formulation to leverage LLMs with CoT prompting to solve reasoning tasks. Formally, given an input $x$ and a target $y$, an LLM $p$ with CoT prompting can be formulated as

$$y \sim \sum_{z \sim p(z \mid C_x)} p(y \mid C_x, z) \cdot p(z \mid C_x), \quad \text{where } C_x = c^{(\mathrm{cot})}(x). \tag{1}$$

In this formulation, $C_x$ denotes the prompted input, and $c^{(\mathrm{cot})}(\cdot)$ represents the template with CoT prompting instructions, such as ‘let’s think step by step’. The rationale is treated as a latent variable, and $z$ denotes a sampled rationale in natural language. Consequently, the LLMs can break down complex tasks into more manageable reasoning steps, treating each step as a component of the overall solution chain. We employ CoT as a baseline to solve reasoning tasks without compromising its generality. In addition to CoT, our proposed simple RE2 can serve as a "plug & play" module adaptable to most other prompting methods (§2.3).
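To make the marginalization in Eq. 1 concrete, here is a minimal numeric sketch. The two rationales and all probabilities are invented toy values, and `marginalize_answers` is a hypothetical helper, not part of the paper; a real LLM would only give samples from these distributions.

```python
from collections import defaultdict


def marginalize_answers(p_rationale, p_answer_given_rationale):
    """Compute p(y | C_x) = sum_z p(y | C_x, z) * p(z | C_x) for toy tables."""
    p_answer = defaultdict(float)
    for z, p_z in p_rationale.items():
        for y, p_y in p_answer_given_rationale[z].items():
            p_answer[y] += p_y * p_z
    return dict(p_answer)


# Toy distribution over two rationales for the tennis-ball question (assumed).
p_z = {"5 + 2*3": 0.6, "5 + 2 + 3": 0.4}
# Toy answer distributions conditioned on each rationale (assumed).
p_y_given_z = {
    "5 + 2*3": {"11": 0.9, "10": 0.1},
    "5 + 2 + 3": {"11": 0.5, "10": 0.5},
}

p_y = marginalize_answers(p_z, p_y_given_z)
print(p_y)
```

Under these toy numbers the answer "11" receives 0.6 · 0.9 + 0.4 · 0.5 = 0.74 of the probability mass, which is the sum over rationales written in Eq. 1.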

2.2 Re-Reading (RE2) Improves Reasoning

Drawing inspiration from the human strategy of re-reading, we introduce this strategy for LLM reasoning, dubbed RE2, to enhance understanding in the input phase. With RE2, the prompting process in Eq. 1 can be readily rephrased as:

$$y \sim \sum_{z \sim p(z \mid C_x)} p(y \mid C_x, z) \cdot p(z \mid C_x), \quad \text{where } C_x = c^{(\mathrm{cot})}(\mathrm{re2}(x)). \tag{2}$$

In this formulation, $\mathrm{re2}(\cdot)$ is the re-reading operation of the input. We don’t seek complex adjustments for LLMs but aim for a general implementation of $\mathrm{re2}(x)$ that is as simple as follows:

RE2 Prompting

    Q: {Input Query}
    Read the question again: {Input Query}
    # Thought-eliciting prompt (e.g., "Let’s think step by step") #

where {Input Query} is a placeholder for the input query, $x$. The remaining part of the prompt could incorporate other thought-eliciting prompts. Intuitively, RE2 offers two advantages to enhance the understanding process: (1) it allocates more computational resources to the input, and (2) it facilitates a "bidirectional" understanding of the question, where the first pass provides global information for the second pass.
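The template above amounts to a few lines of string formatting. The following is a minimal sketch: the function names `re2` and `cot_re2_prompt` are hypothetical, and the exact line layout (including the `A:` prefix) follows the CoT+RE2 example in Figure 1 rather than any released code.

```python
def re2(question: str) -> str:
    """Re-reading operation: state the question, then repeat it once."""
    return f"Q: {question}\nRead the question again: {question}"


def cot_re2_prompt(question: str, trigger: str = "Let's think step by step.") -> str:
    """Wrap the re-read question with a thought-eliciting trigger (CoT by default)."""
    return f"{re2(question)}\nA: {trigger}"


question = (
    "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?"
)
prompt = cot_re2_prompt(question)
print(prompt)
```

The resulting string can be sent to any completion or chat endpoint unchanged, since RE2 only alters the input text.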

2.3 Generality of RE2

Due to RE2’s simplicity and emphasis on the input phase, it can be seamlessly integrated with a wide range of LLMs and algorithms, including few-shot settings, self-consistency, various thought-eliciting prompting strategies, and more. We offer insights into the integration of RE2 with other thought-eliciting prompting strategies as an illustration.

Compared with those thought-eliciting prompting strategies that focus on the output phase, RE2 shifts the emphasis towards understanding the input. Therefore, RE2 exhibits significant compatibility with them, acting as a "plug & play" module. This synergy has the potential to further enhance the reasoning abilities of LLMs. With a specific thought-eliciting prompting, $\tau$, designed to elicit thoughts from the LLMs, Eq. (2) is rewritten as:

$$y \sim \sum_{z \sim p(z \mid C_x)} p(y \mid C_x, z) \cdot p(z \mid C_x), \quad \text{where } C_x = c^{(\tau)}(\mathrm{re2}(x)). \tag{3}$$

Here, $\tau$ denotes a thought-eliciting prompting method other than CoT, such as Plan-and-Solve (Wang et al., 2023a) or Program-Aided Prompt (Gao et al., 2023). We also conducted extensive experiments to validate the generality of RE2 in §3.4.
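Eq. (3) says that the template $c^{(\tau)}$ and the re-reading operation compose independently. A rough sketch of that composition follows; the `TRIGGERS` table and `build_prompt` are hypothetical names, and the Plan-and-Solve and PAL entries are placeholders, not the instructions used by those methods or by the paper.

```python
# Thought-eliciting instructions keyed by method name. Only the CoT string
# appears in the text; the other two are placeholders (assumptions).
TRIGGERS = {
    "cot": "Let's think step by step.",
    "ps": "<plan-and-solve instruction goes here>",
    "pal": "<program-aided instruction goes here>",
}


def re2(question: str) -> str:
    """Re-reading operation re2(x)."""
    return f"Q: {question}\nRead the question again: {question}"


def build_prompt(question: str, tau: str, reread: bool = True) -> str:
    """Apply template c^(tau) to either x (baseline) or re2(x)."""
    body = re2(question) if reread else f"Q: {question}"
    return f"{body}\nA: {TRIGGERS[tau]}"


baseline = build_prompt("What is 2 + 3?", "ps", reread=False)
with_re2 = build_prompt("What is 2 + 3?", "ps", reread=True)
print(with_re2)
```

Switching `tau` changes only the last line of the prompt, while `reread` changes only the question block, which is what makes RE2 a "plug & play" addition.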

3 Experiments

3.1 Benchmarks

We assess RE2 prompting across three key categories of reasoning benchmarks. Details of all datasets are shown in Appendix A.

Arithmetic Reasoning We consider the following seven arithmetic reasoning benchmarks: the GSM8K benchmark of math word problems (Cobbe et al., 2021), the SVAMP dataset of math word problems with varying structures (Patel et al., 2021), the ASDiv dataset of diverse math word problems (Miao et al., 2020), the AQuA dataset of algebraic word problems (Ling et al., 2017), the AddSub dataset (Hosseini et al., 2014) of math word problems on addition and subtraction for third to fifth graders, the MultiArith dataset (Roy and Roth, 2015) of math problems with multiple steps, and the SingleEQ dataset (Roy et al., 2015) of elementary math word problems with a single operation.

Commonsense and Symbolic Reasoning For

commonsense reasoning, we use CSQA (Talmor

et al., 2019), StrategyQA (Geva et al., 2021), and

the ARC (Clark et al., 2018). CSQA dataset con-

sists of questions that necessitate various common-

sense knowledge. The StrategyQA dataset com-

prises questions that demand multi-step reasoning.

The ARC dataset (denoted as ARC-t) is divided

into two sets: a Challenge Set (denoted as ARC-

c), containing questions that both retrieval-based

and word co-occurrence algorithms answered in-

correctly, and an Easy Set (denoted as ARC-e). We

evaluate two symbolic reasoning tasks: date under-

standing (Suzgun et al., 2023a) and Coinflip (Wei

et al., 2022b). Date understanding is a subset of

BigBench datasets (Suzgun et al., 2023a), which

have posed challenges for previous fine-tuning ef-

forts. Coinflip is a dataset of questions on whether

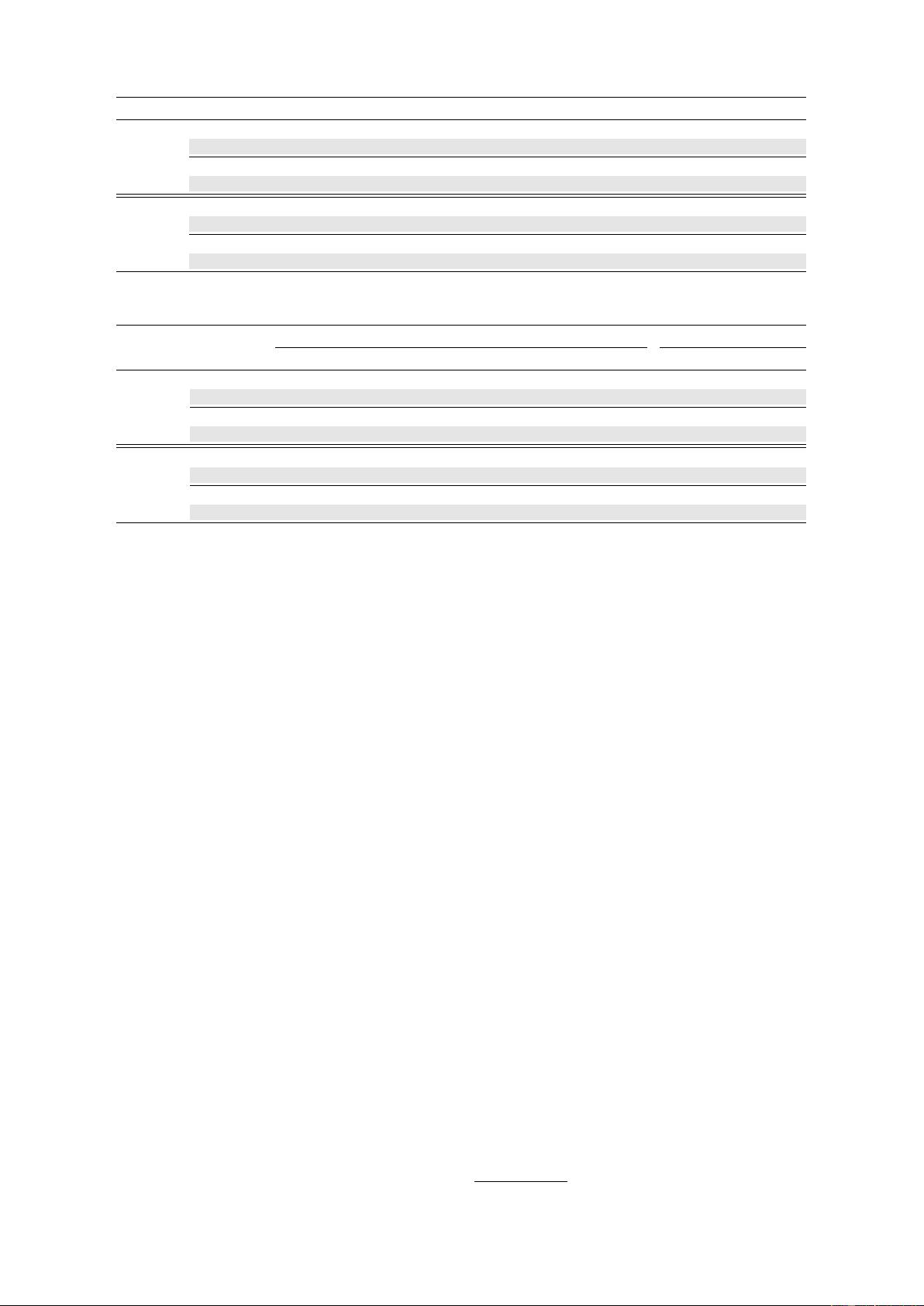

LLMs Methods GSM SVAMP ASDIV AQUA MultiArith SingleEQ AddSub

davinci-003

Vanilla 19.48 67.60 69.00 28.74 31.33 86.22 89.87

Vanilla+RE2 24.79 ↑ 5.31 70.90 ↑ 3.30 71.20 ↑ 2.20 30.31 ↑ 1.57 42.33 ↑ 11.00 87.20 ↑ 0.98 92.15 ↑ 2.28

CoT 58.98 78.30 77.60 40.55 89.33 92.32 91.39

CoT+RE2 61.64 ↑ 2.68 81.00 ↑ 2.70 78.60 ↑ 1.00 44.49 ↑ 3.94 93.33 ↑ 4.00 93.31 ↑ 0.99 91.65 ↑ 0.26

ChatGPT

Vanilla 77.79 81.50

∗

87.00

∗

63.39

∗

97.83

∗

95.28

∗

92.41

∗

Vanilla+RE2 79.45 ↑ 1.66 84.20 ↑ 2.70 88.40 ↑ 1.40 58.27 ↓ 5.12 96.67 ↓ 1.16 94.49 ↓ 0.79 91.65 ↓ 0.76

CoT 78.77 78.70 85.60 55.91 95.50 93.70 88.61

CoT+RE2 80.59 ↑ 1.82 80.00 ↑ 1.30 86.00 ↑ 0.40 59.06 ↑ 3.15 96.50 ↑ 1.00 95.28 ↑ 1.58 89.87 ↑ 1.26

Table 1: Results on arithmetic reasoning benchmarks.

∗

denotes that Vanilla is even superior to CoT prompting.

LLMs Methods

Commonsense Symbolic

CSQA StrategyQA ARC-e ARC-c ARC-t Date Coin

davinci-003

Vanilla 74.20

∗

59.74 84.81 72.01 80.58 40.92 49.80

Vanilla+RE2 76.99 ↑ 2.79 59.91 ↑ 0.17 88.22 ↑ 3.41 75.68 ↑ 3.67 84.07 ↑ 3.49 42.01 ↑ 1.09 52.40 ↑ 2.60

CoT 71.66 67.55 85.69 73.21 81.57 46.07 95.60

CoT+RE2 73.05 ↑ 1.39 66.24 ↓ 1.31 87.84 ↑ 2.15 76.02 ↑ 2.81 83.94 ↑ 2.37 52.57 ↑ 6.50 99.60 ↑ 4.00

ChatGPT

Vanilla 76.66

∗

62.36 94.32

∗

85.41

∗

91.37

∗

47.43

∗

52.00

Vanilla+RE2 78.38 ↑ 1.72 66.99 ↑ 4.63 93.81 ↓ 0.51 83.19 ↓ 2.22 90.30 ↓ 1.07 47.97 ↑ 0.54 57.20 ↑ 5.20

CoT 69.94 67.82 93.35 83.53 90.11 43.63 88.80

CoT+RE2 71.66 ↑ 1.72 69.34 ↑ 1.52 93.14 ↓ 0.21 84.47 ↑ 0.94 90.27 ↑ 0.16 47.15 ↑ 3.52 95.20 ↑ 6.40

Table 2: Results on commonsense and symbolic reasoning benchmarks.

∗

denotes that Vanilla is even superior to

CoT prompting.

a coin is still heads up after it is flipped or not based

on steps given in the questions.

3.2 Language Models and Implementations

Baseline Prompting. In our implementation, we rigorously evaluate the performance of our RE2 method on two baseline prompting methods: Vanilla and CoT. The Vanilla approach aligns with the standard prompting method outlined in (Wei et al., 2022b; Kojima et al., 2022), wherein no specific prompts are employed to elicit thoughts from LLMs. Conversely, the CoT method guides the model through a step-by-step thought process.

RE2 Prompting. We incorporate our RE2 strategy into these baseline methods to assess its impact, denoted as Vanilla+RE2 and CoT+RE2. To avoid the impact of randomness introduced by the demonstrations in a few-shot setting, we mainly assess our method in a zero-shot setting, following (Chen et al., 2023; Wang et al., 2023a; Du et al., 2023). Additionally, for different tasks, we design answer-format instructions in prompts to regulate the structure of the final answer, facilitating precise answer extraction. Detailed information regarding the baseline prompting, RE2 prompting, and answer-format instructions can be found in Appendix B.

Implementations. Our decoding strategy uses greedy decoding with a temperature setting of 0, thus leading to deterministic outputs. For these experiments, we employ two powerful backbones: ChatGPT (gpt-3.5-turbo-0613) (OpenAI, 2022) and davinci-003 (text-davinci-003)², across all prompting methods, including Vanilla, CoT, Vanilla+RE2, and CoT+RE2.
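Answer-format instructions make scoring a matter of pattern matching. The sketch below assumes, purely for illustration, that the instruction asks the model to end with the final answer wrapped as `\boxed{...}`; the actual instructions are in Appendix B, which is not part of this excerpt, and `extract_boxed_answer` and `is_correct` are hypothetical helpers.

```python
import re
from typing import Optional

# Assumed answer format: the final answer appears as \boxed{...}.
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def extract_boxed_answer(response: str) -> Optional[str]:
    """Return the content of the last \\boxed{...} in the response, if any."""
    matches = BOXED.findall(response)
    return matches[-1].strip() if matches else None


def is_correct(response: str, gold: str) -> bool:
    """Compare numerically when both sides parse as numbers, else as strings."""
    pred = extract_boxed_answer(response)
    if pred is None:
        return False
    try:
        return abs(float(pred.replace(",", "")) - float(gold)) < 1e-6
    except ValueError:
        return pred == gold


reply = r"Roger starts with 5 balls and buys 2 * 3 = 6 more, so \boxed{11}."
print(extract_boxed_answer(reply), is_correct(reply, "11"))
```

Taking the last match rather than the first is a design choice: intermediate steps may also contain boxed values, and the final answer is expected at the end of the response.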

3.3 Evaluation Results

Table 1 presents the results on arithmetic reasoning

datasets, and Table 2 on commonsense reasoning

and symbolic reasoning. In almost all scenarios,

LLMs with RE2 achieve consistent improvements

across both LLMs (davinci-003 and ChatGPT) and

prompting methods (Vanilla and CoT). Specifically,

davinci-003 with Vanilla+RE2 shows average im-

provements of 3.81, 2.51, and 1.85 in arithmetic,

commonsense, and symbolic tasks, respectively.

With CoT, davinci-003 generates intermediate rea-

soning steps, significantly enhancing the reasoning

performance of LLMs. By applying RE2, davinci-

003 with CoT+RE2 demonstrates further improve-

ment, with average gains of 2.22, 1.23, and 5.25

in the same categories, respectively. These results

indicate that RE2 can benefit LLMs in directly gen-

erating answers and improve the performance of

CoT leading to correct answers.

When applied to ChatGPT, RE2 exhibits consis-

2

https://platform.openai.com/docs/models/gpt-3-5

1 2 3 4 5

Times of reading

77

78

79

80

81

Accuracy

77.79

78.77

79.45

80.59

79.15

80.89

78.77

80.29

77.56

80.29

1 2 3 4 5

Times of reading

20

30

40

50

60

19.48

58.98

24.79

61.64

18.8

60.12

18.35

58.83

17.06

57.47

ChatGPT Vanilla ChatGPT CoT davinci-003 Vanilla davinci-003 CoT

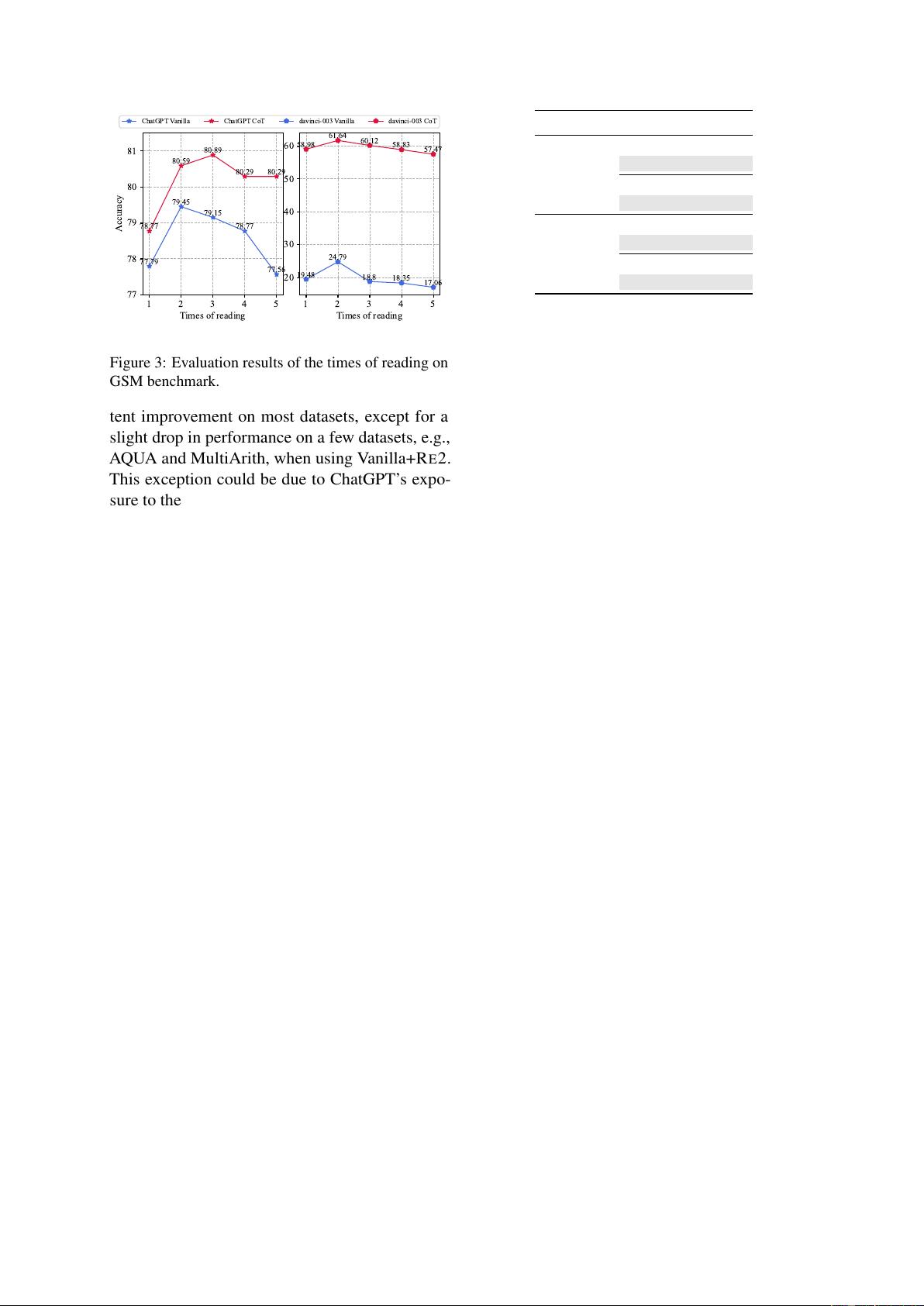

Figure 3: Evaluation results of the times of reading on

GSM benchmark.

tent improvement on most datasets, except for a

slight drop in performance on a few datasets, e.g.,

AQUA and MultiArith, when using Vanilla+RE2.

This exception could be due to ChatGPT’s expo-

sure to these datasets with CoT outputs during in-

struction fine-tuning (IFT) (Chen et al., 2023). On

such datasets, ChatGPT with Vanilla still produces

CoT-like output (see examples in Appendix E) and

even outperforms ChatGPT with CoT (as indicated

by the

∗

results in Tables 1 and 2). Chen et al.

(2023) obtained similar experimental results and

suggested that this occurs because ChatGPT may

have been exposed to these task datasets contain-

ing CoT explanations without explicit prompting.

Therefore, additional explicit instructions, like CoT

or RE2, might disrupt this learned pattern in Chat-

GPT, possibly leading to decreased performance.

Nonetheless, on some datasets like SVAMP, AS-

DIV, CSQA, and Date, RE2 still manages to im-

prove the baseline Vanilla prompting. Moreover, in

datasets where CoT prompting normally surpasses

Vanilla prompting, such as GSM, StrategyQA, and

Coin, RE2 significantly enhances Vanilla prompt-

ing (

↑

4.63 on StrategyQA and

↑

5.20 on the Coin

dataset). Overall, our RE2 method still achieves

improvements in 71% of the experiments on Chat-

GPT. More examples from the experiment results

can be found in Appendix E.
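Two of the summary statistics quoted above can be recomputed directly from the per-dataset deltas in Tables 1 and 2. The sketch below copies those deltas by hand (drops are entered as negative numbers); the variable and function names are hypothetical.

```python
def mean_gain(deltas):
    """Average improvement over a group of datasets, rounded to 2 decimals."""
    return round(sum(deltas) / len(deltas), 2)


# davinci-003, Vanilla+RE2, seven arithmetic datasets (Table 1).
DAVINCI_VANILLA_RE2_ARITH = [5.31, 3.30, 2.20, 1.57, 11.00, 0.98, 2.28]
# davinci-003, CoT+RE2, two symbolic datasets (Table 2: Date, Coin).
DAVINCI_COT_RE2_SYMBOLIC = [6.50, 4.00]

# ChatGPT deltas for Vanilla+RE2 and CoT+RE2 across all 14 dataset columns.
CHATGPT_DELTAS = [
    1.66, 2.70, 1.40, -5.12, -1.16, -0.79, -0.76,  # Table 1, Vanilla+RE2
    1.82, 1.30, 0.40, 3.15, 1.00, 1.58, 1.26,      # Table 1, CoT+RE2
    1.72, 4.63, -0.51, -2.22, -1.07, 0.54, 5.20,   # Table 2, Vanilla+RE2
    1.72, 1.52, -0.21, 0.94, 0.16, 3.52, 6.40,     # Table 2, CoT+RE2
]

improved = sum(d > 0 for d in CHATGPT_DELTAS)
share = improved / len(CHATGPT_DELTAS)

print(mean_gain(DAVINCI_VANILLA_RE2_ARITH))   # arithmetic average gain
print(mean_gain(DAVINCI_COT_RE2_SYMBOLIC))    # symbolic average gain
print(f"{improved}/{len(CHATGPT_DELTAS)} = {share:.0%}")
```

The arithmetic and symbolic averages reproduce the reported 3.81 and 5.25, and 20 improvements out of 28 ChatGPT experiments gives the reported 71%.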

3.4 Discussions

Times of Question Reading We delve deeper into the impact of the times of question re-reading on reasoning performance. Figure 3 illustrates how the performance of two distinct LLMs evolves with the number of times the question is read. An overarching pattern emerges across all models: performance improves until the number of reads reaches 2 or 3, after which it begins to decline with further increases in question re-reading times. The potential reasons for inferior performance when reading the question multiple times are two-fold: i) overly repeating questions may act as demonstrations that encourage LLMs to repeat the question rather than generate the answer, and ii) repeating the question significantly increases the inconsistency of the LLMs between our inference and pretraining/alignment (intuitively, in the learning corpora, a question is usually repeated twice to emphasize the key part, but rarely more). It’s noteworthy that reading the question two times tends to be optimal for accommodating most scenarios in our experiments, which is why we refer to this practice as "re-reading" in our paper.

Compatibility with Thought-Eliciting Prompt Strategies Compared to previous methods attempting to elicit thoughts in the output from LLMs, our RE2 emphasizes the understanding of the input. Therefore, we are intrigued to explore whether RE2 is effective with various thought-eliciting prompting strategies other than CoT. To investigate this, we apply RE2 to two other recently introduced prompting methods, namely, Plan-and-Solve (PS) (Wang et al., 2023a) and Program-Aided Language models (PAL) (Gao et al., 2023). The former devises a plan to divide the entire task into smaller subtasks, and then carries out the subtasks according to the plan, while the latter generates programs as the intermediate reasoning steps. We directly apply our RE2 to these two methods by making a simple alteration to the input: repeating the question. Table 3 presents the evaluation findings on the GSM benchmark. Our observations reveal a consistent trend, akin to what was observed with chain-of-thought prompting. These results suggest that the effectiveness of our RE2 mechanism generally extends across various prompting methodologies.

| LLMs | Methods | GSM |
|---|---|---|
| ChatGPT | PS | 75.59 |
| ChatGPT | PS+RE2 | 76.27 |
| ChatGPT | PAL | 75.59 |
| ChatGPT | PAL+RE2 | 79.38 |
| davinci-003 | PS | 55.65 |
| davinci-003 | PS+RE2 | 58.68 |
| davinci-003 | PAL | 68.61 |
| davinci-003 | PAL+RE2 | 70.20 |

Table 3: Evaluation results of some thought-eliciting promptings beyond CoT with RE2.
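The times-of-reading study generalizes re-reading from two passes to k passes. The excerpt does not show how prompts with three or more reads were worded, so the sketch below simply assumes the same "Read the question again:" connector is repeated; `read_k_times` is a hypothetical helper.

```python
from typing import Optional


def read_k_times(question: str, k: int, trigger: Optional[str] = None) -> str:
    """Build a prompt in which the question appears k times (k=1 is the baseline,
    k=2 is RE2). The repeated connector for k > 2 is an assumption."""
    if k < 1:
        raise ValueError("k must be at least 1")
    lines = [f"Q: {question}"]
    lines += [f"Read the question again: {question}"] * (k - 1)
    if trigger is not None:
        lines.append(f"A: {trigger}")
    return "\n".join(lines)


# Sweep k from 1 to 5, as on the x-axis of the times-of-reading figure.
prompts = {k: read_k_times("What is 2 + 3?", k, "Let's think step by step.")
           for k in range(1, 6)}
print(prompts[3])
```

Each extra read lengthens the prompt by one full copy of the question, so input cost grows linearly in k while, per the results above, accuracy peaks at 2 or 3 reads.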
