### Download the models and place them in the `models` directory

- Link 1: https://github.com/mymagicpower/AIAS/releases/download/apps/denoiser.zip

- Link 2: https://github.com/mymagicpower/AIAS/releases/download/apps/speakerEncoder.zip

- Link 3: https://github.com/mymagicpower/AIAS/releases/download/apps/tacotron2.zip

- Link 4: https://github.com/mymagicpower/AIAS/releases/download/apps/waveGlow.zip


### TTS: text to speech

Note: To prevent anyone's voice from being cloned for illegal purposes, the code only accepts the voice files that ship with the program.

Voice cloning means synthesizing audio that carries the characteristics of a target speaker: given a recording of that speaker, the system combines the pronunciation of the input text with the speaker's voice.

When training a voice cloning model, the target voice is used as the input to the Speaker Encoder, which extracts the speaker's features (voice characteristics) as a Speaker Embedding.

The synthesis model is then trained to reproduce that voice: in addition to the target text, the speaker features are supplied as an extra conditioning input during training.

At inference time, a new target voice is fed to the Speaker Encoder to extract its speaker features. Given the text and this target voice, the system then generates speech in the target voice saying that text.

A team at Google proposed a neural text-to-speech system that can learn the voice characteristics of many different speakers from only a small number of samples and synthesize speech in their voices. Moreover, for speakers never seen during training, it can synthesize their speech from just a few seconds of their audio without retraining; that is, the network has zero-shot learning ability.

Traditional natural-sounding speech synthesis systems need a large amount of high-quality training data, usually hundreds or thousands of minutes per speaker. As a result, such models generally do not generalize and cannot be widely used in complex settings with many different speakers. These networks also perform voice modeling and speech synthesis jointly, in a single model.

SV2TTS separates these two processes: a first network (the speaker encoder) models the speaker's voice characteristics, and a second, high-quality TTS network converts those features into speech.

- SV2TTS paper

[Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis](https://arxiv.org/pdf/1806.04558.pdf)

- Network structure

The network consists of three parts:

#### Voice feature encoder (speaker encoder)

Extracts the speaker's voice features: it embeds the speaker's voice into a fixed-dimensional vector that represents the speaker's latent voice characteristics.

More precisely, the encoder embeds the reference speech signal into a fixed-dimensional vector space, and this embedding serves as a conditioning signal that lets the synthesis network generate spectrograms (Mel spectrograms) with the same voice characteristics.

The key role of the encoder is similarity measurement: different utterances from the same speaker should be as close as possible in the embedding space (small cosine distance), while utterances from different speakers should be as far apart as possible.
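The similarity criterion above can be made concrete with cosine similarity. The following is a minimal illustration, not code from the AIAS SDK: `EmbeddingSimilarity` and its methods are hypothetical names, and embeddings are assumed to be plain `float[]` vectors like the 256-dimensional embedding printed in the example run.

```java
/** Hypothetical helper for comparing speaker embeddings (not part of the AIAS SDK). */
final class EmbeddingSimilarity {
    private EmbeddingSimilarity() {}

    /** Cosine similarity (a . b) / (|a| |b|): 1 = same direction, 0 = orthogonal, -1 = opposite. */
    static double cosine(float[] a, float[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Embeddings must have the same dimension");
        }
        double dot = 0.0;
        double normA = 0.0;
        double normB = 0.0;
        for (int i = 0; i < a.length; i++) {
            dot += (double) a[i] * b[i];
            normA += (double) a[i] * a[i];
            normB += (double) b[i] * b[i];
        }
        if (normA == 0.0 || normB == 0.0) {
            throw new IllegalArgumentException("Cosine similarity is undefined for a zero vector");
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    /** Cosine distance 1 - cos: ideally small for the same speaker, larger for different speakers. */
    static double cosineDistance(float[] a, float[] b) {
        return 1.0 - cosine(a, b);
    }
}
```

A speaker-verification style check would compare `cosine(...)` against a threshold tuned on held-out data; the source does not give such a threshold.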

The encoder should also be robust to noise: it should capture the speaker's latent voice characteristics without being affected by the spoken content or by background noise.

These requirements match those of a speaker verification model, which is trained to be speaker-discriminative, so the encoder can be obtained by transfer learning from speaker verification.

The encoder is a stack of three LSTM layers whose input is a 40-channel log-Mel spectrogram. The output of the top layer at the last frame is L2-normalized, and the result is the embedding of the entire sequence.
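The L2 normalization step can be sketched as follows. This is a minimal illustration that assumes the final-frame LSTM output is already available as a `float[]`; the LSTM itself is not modeled, and `EmbeddingNorm` is a hypothetical name.

```java
/** Hypothetical helper (not the AIAS SDK): scales a vector to unit Euclidean (L2) length. */
final class EmbeddingNorm {
    private EmbeddingNorm() {}

    /** Returns v / ||v||_2, e.g. applied to the top LSTM layer's output at the final frame. */
    static float[] l2Normalize(float[] v) {
        double sumSq = 0.0;
        for (float x : v) {
            sumSq += (double) x * x;
        }
        float[] out = new float[v.length];
        if (sumSq == 0.0) {
            return out; // an all-zero vector has no direction; return zeros
        }
        double norm = Math.sqrt(sumSq);
        for (int i = 0; i < v.length; i++) {
            out[i] = (float) (v[i] / norm);
        }
        return out;
    }
}
```

Because the result has unit length, the cosine similarity between two such embeddings reduces to their dot product.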

At inference time, an input utterance of any length is split into 800 ms windows (overlapping by 50% in the SV2TTS paper), and the encoder produces one output per window. All outputs are then averaged and normalized to obtain the final embedding.

This windowing resembles the framing used in the Short-Time Fourier Transform (STFT).
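The windowing-and-averaging procedure can be sketched as below. It assumes the 50% overlap between consecutive 800 ms windows used in the SV2TTS paper; the frame rate behind `windowFrames` and the `encoder` function are placeholders, and `UtteranceEmbedder` is a hypothetical name rather than the AIAS implementation.

```java
import java.util.Arrays;
import java.util.function.Function;

/** Hypothetical sketch (not the AIAS SDK): utterance embedding from overlapping windows. */
final class UtteranceEmbedder {
    private UtteranceEmbedder() {}

    /**
     * @param frames       log-Mel frames of the utterance, shape [numFrames][numMelChannels]
     * @param windowFrames frames per 800 ms window (e.g. 80 if frames are 10 ms apart; an assumption)
     * @param encoder      stand-in for the LSTM encoder: maps one window to an embedding
     */
    static float[] embed(float[][] frames, int windowFrames, Function<float[][], float[]> encoder) {
        if (frames.length == 0 || windowFrames <= 0) {
            throw new IllegalArgumentException("Need at least one frame and a positive window size");
        }
        int hop = Math.max(1, windowFrames / 2); // 50% overlap between consecutive windows
        // Utterances shorter than one window are encoded once as a whole.
        int lastStart = Math.max(0, frames.length - windowFrames);
        float[] sum = null;
        int count = 0;
        for (int start = 0; start <= lastStart; start += hop) {
            int end = Math.min(frames.length, start + windowFrames);
            float[] e = encoder.apply(Arrays.copyOfRange(frames, start, end));
            if (sum == null) {
                sum = new float[e.length];
            }
            for (int i = 0; i < e.length; i++) {
                sum[i] += e[i];
            }
            count++;
        }
        // Average the per-window outputs, then L2-normalize the mean.
        double sumSq = 0.0;
        for (int i = 0; i < sum.length; i++) {
            sum[i] /= count;
            sumSq += (double) sum[i] * sum[i];
        }
        double norm = Math.sqrt(sumSq);
        if (norm > 0.0) {
            for (int i = 0; i < sum.length; i++) {
                sum[i] = (float) (sum[i] / norm);
            }
        }
        return sum;
    }
}
```

For simplicity, trailing frames that do not fill a complete window after the last hop are ignored; a real implementation may pad or include a final partial window instead.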

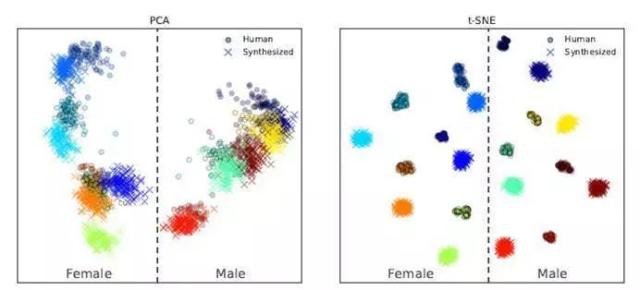

The embedding vectors are visualized as follows:

Different speakers form distinct clusters in the embedding space and are easy to tell apart; speakers of different genders lie on opposite sides.

(However, synthesized speech is also easy to distinguish from real speech: synthesized utterances lie farther from the cluster centers, which indicates that the synthesized speech is not yet realistic enough.)

#### Sequence-to-sequence mapping synthesis network (Tacotron 2)

Built on the Tacotron 2 mapping network, this stage generates a log-Mel spectrogram from the input text and the vector produced by the speaker encoder.

A Mel spectrogram maps the frequency axis from Hz onto the Mel scale, which grows roughly logarithmically with frequency, so that distances on the Mel scale are approximately proportional to the pitch differences perceived by the human ear.
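The Hz-to-Mel mapping can be written down directly. The sketch below uses the widely used HTK-style formula; the source does not say which Mel variant this project's Tacotron 2 model was trained with (Slaney's variant is another common choice), so treat the constants as an assumption.

```java
/** Hz <-> Mel conversion using the HTK-style formula (an assumed variant; others exist). */
final class MelScale {
    private MelScale() {}

    /** m = 2595 * log10(1 + f / 700) */
    static double hzToMel(double hz) {
        return 2595.0 * Math.log10(1.0 + hz / 700.0);
    }

    /** Inverse mapping: f = 700 * (10^(m / 2595) - 1) */
    static double melToHz(double mel) {
        return 700.0 * (Math.pow(10.0, mel / 2595.0) - 1.0);
    }
}
```

Under this formula, 1000 Hz maps to roughly 1000 Mel, while the band from 7000 to 8000 Hz spans only about 138 Mel; this compression of high frequencies is why Mel filter banks are narrow at low frequencies and wide at high ones.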

This network is trained independently of the encoder. Its inputs are an audio signal and the corresponding text: the audio is first passed through the pre-trained speaker encoder to extract speaker features, which are then fed, together with the encoded text, to the attention layer.
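In the SV2TTS paper, the speaker embedding conditions the synthesizer by being concatenated to the text encoder's output at every time step before the attention layer consumes it. A minimal sketch of that concatenation, using a hypothetical class name and plain arrays rather than the SDK's tensor types:

```java
/** Hypothetical sketch (not the AIAS SDK): per-time-step speaker conditioning by concatenation. */
final class SpeakerConditioning {
    private SpeakerConditioning() {}

    /**
     * @param encoderOutputs  text encoder outputs, shape [T][D]
     * @param speakerEmbedding speaker embedding, shape [E]
     * @return conditioned sequence, shape [T][D + E]; the same embedding is appended at every step
     */
    static float[][] concatPerTimestep(float[][] encoderOutputs, float[] speakerEmbedding) {
        float[][] out = new float[encoderOutputs.length][];
        for (int t = 0; t < encoderOutputs.length; t++) {
            float[] row = encoderOutputs[t];
            float[] joined = new float[row.length + speakerEmbedding.length];
            System.arraycopy(row, 0, joined, 0, row.length);
            System.arraycopy(speakerEmbedding, 0, joined, row.length, speakerEmbedding.length);
            out[t] = joined;
        }
        return out;
    }
}
```

Since the embedding is identical at every step, the attention layer sees the same voice information regardless of which text position it attends to.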

The target spectrogram features are computed with a 50 ms window and a 12.5 ms step; after Mel-scale filtering and logarithmic dynamic range compression, the result is the Mel spectrogram.
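The framing and compression described above reduce to simple arithmetic. The sketch below assumes a 16 kHz sample rate and a floor of 1e-5 before taking the natural logarithm; the source states neither, and `MelFraming` is a hypothetical name.

```java
/** Hypothetical helpers (not the AIAS SDK) for STFT framing and log dynamic range compression. */
final class MelFraming {
    private MelFraming() {}

    /** Converts a duration in milliseconds to a whole number of samples. */
    static int msToSamples(double ms, int sampleRate) {
        return (int) Math.round(ms * sampleRate / 1000.0);
    }

    /** Number of complete analysis frames, assuming no padding at the signal edges. */
    static int numFrames(int numSamples, int windowSamples, int hopSamples) {
        if (numSamples < windowSamples) {
            return 0;
        }
        return 1 + (numSamples - windowSamples) / hopSamples;
    }

    /** Log dynamic range compression: ln(max(x, floor)) avoids log(0) on silent bins. */
    static float[] logCompress(float[] melEnergies, float floor) {
        float[] out = new float[melEnergies.length];
        for (int i = 0; i < melEnergies.length; i++) {
            out[i] = (float) Math.log(Math.max(melEnergies[i], floor));
        }
        return out;
    }
}
```

At the assumed 16 kHz, 50 ms and 12.5 ms become 800 and 200 samples, so one second of audio yields 77 unpadded frames (a new frame every 12.5 ms). Many STFT implementations pad the signal edges, so their frame counts differ slightly.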

To reduce the influence of noisy training data, an additional L1 loss term is added to this network's loss function.
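The SV2TTS paper describes this as an L1 term added on top of the L2 loss on the predicted spectrogram. A minimal sketch, assuming the two terms are equally weighted (the weighting is an assumption) and using a hypothetical class name:

```java
/** Hypothetical sketch (not the AIAS SDK) of an L2 + L1 spectrogram reconstruction loss. */
final class SpectrogramLoss {
    private SpectrogramLoss() {}

    /** Mean squared error plus mean absolute error over all [melChannel][frame] bins. */
    static double l2PlusL1(float[][] predicted, float[][] target) {
        if (predicted.length != target.length) {
            throw new IllegalArgumentException("Channel counts differ");
        }
        double squared = 0.0;
        double absolute = 0.0;
        long n = 0;
        for (int c = 0; c < predicted.length; c++) {
            if (predicted[c].length != target[c].length) {
                throw new IllegalArgumentException("Frame counts differ in channel " + c);
            }
            for (int t = 0; t < predicted[c].length; t++) {
                double d = (double) predicted[c][t] - target[c][t];
                squared += d * d;         // L2 (squared error) term
                absolute += Math.abs(d);  // L1 (absolute error) term
                n++;
            }
        }
        return n == 0 ? 0.0 : squared / n + absolute / n;
    }
}
```

The L1 term grows only linearly with the error, so outlier bins from noisy targets pull on the gradient less than they would under a pure squared-error loss; this is one intuition for the added robustness.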

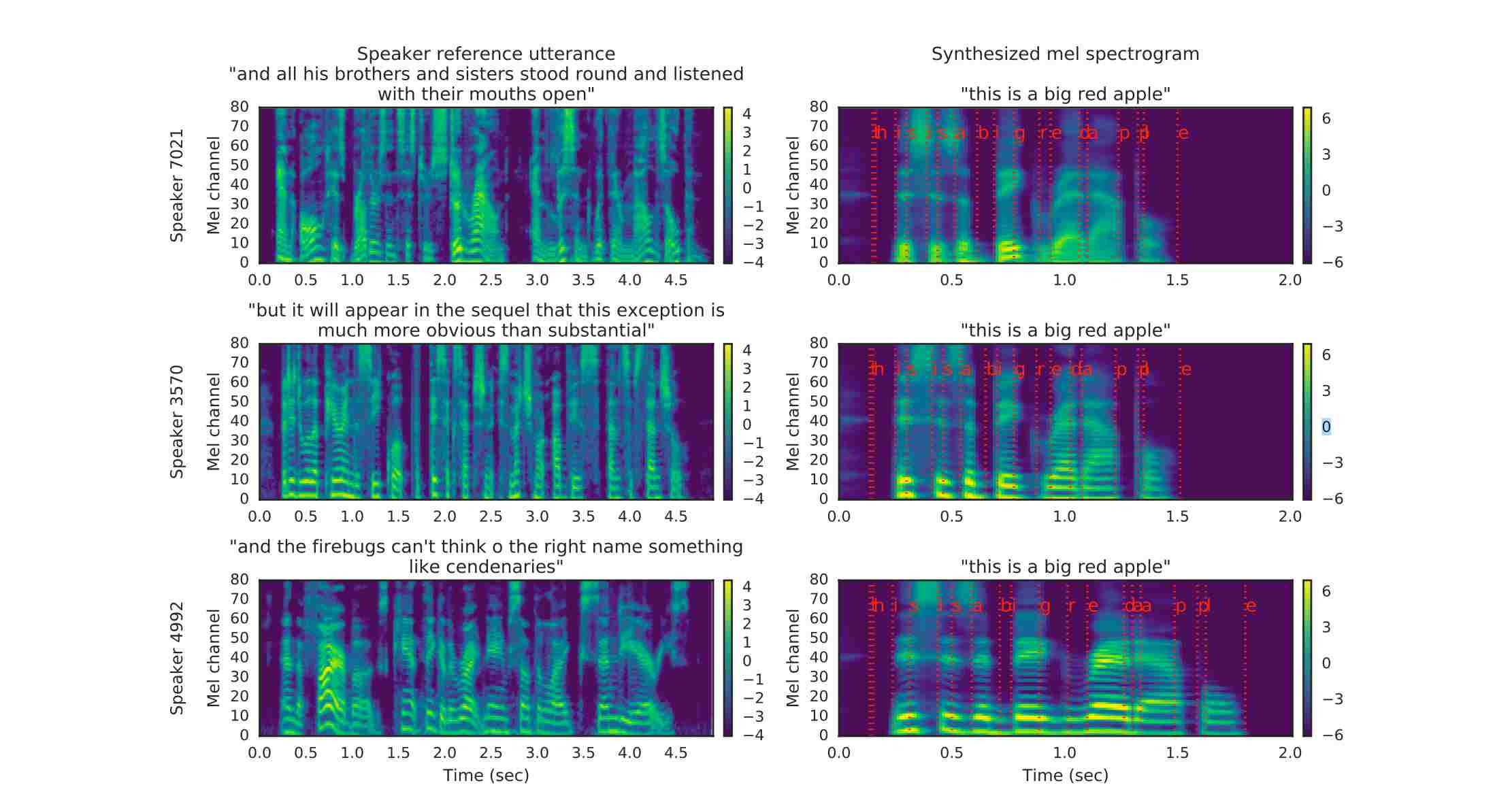

A comparison between the reference Mel spectrogram and the synthesized spectrogram is shown below:

The red line in the right-hand plot shows the alignment between the text and the spectrogram. Note that the reference speech used for conditioning does not need to contain the same text as the target speech; this is a key feature of the SV2TTS paper.

#### Speech synthesis network (WaveGlow)

WaveGlow is a flow-based network that synthesizes high-quality speech from Mel spectrograms. It combines ideas from Glow and WaveNet to generate high-quality audio quickly and efficiently, without auto-regression.

It converts the Mel spectrogram (frequency domain) into a time-domain waveform, completing the speech synthesis.

Note that the three networks are trained independently. The speaker encoder mainly acts as a conditioning input to the sequence-to-sequence synthesis network, ensuring that the generated speech carries the speaker's distinctive voice characteristics.
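The division of labor among the three independently trained networks can be summarized as a pipeline. The sketch below is a hedged outline with hypothetical interfaces (`SpeakerEncoder`, `Synthesizer`, `Vocoder`, `VoiceCloningPipeline`); it is not the AIAS SDK's API, and the shape comments are taken from the example log ([256] embedding, [80, T] Mel spectrogram).

```java
import java.util.Objects;

/** Stage 1 (hypothetical): reference waveform -> speaker embedding, e.g. shape [256]. */
interface SpeakerEncoder {
    float[] embed(float[] referenceWaveform);
}

/** Stage 2 (hypothetical): text + speaker embedding -> Mel spectrogram, e.g. shape [80][T]. */
interface Synthesizer {
    float[][] melSpectrogram(String text, float[] speakerEmbedding);
}

/** Stage 3 (hypothetical): Mel spectrogram (frequency domain) -> waveform samples (time domain). */
interface Vocoder {
    float[] waveform(float[][] melSpectrogram);
}

/** Chains the three independently trained stages; only the wiring is shown here. */
final class VoiceCloningPipeline {
    private final SpeakerEncoder encoder;
    private final Synthesizer synthesizer;
    private final Vocoder vocoder;

    VoiceCloningPipeline(SpeakerEncoder encoder, Synthesizer synthesizer, Vocoder vocoder) {
        this.encoder = Objects.requireNonNull(encoder);
        this.synthesizer = Objects.requireNonNull(synthesizer);
        this.vocoder = Objects.requireNonNull(vocoder);
    }

    float[] speak(String text, float[] referenceWaveform) {
        float[] speakerEmbedding = encoder.embed(referenceWaveform);       // who is speaking
        float[][] mel = synthesizer.melSpectrogram(text, speakerEmbedding); // what is said, in that voice
        return vocoder.waveform(mel);                                       // audible waveform
    }
}
```

Because each stage depends only on the previous stage's output, any of the three models can be retrained or swapped without touching the others, which matches the independent training described above.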

## Running example: TTSExample

After a successful run, you should see output like the following on the command line:

```text
...
[INFO] - Text: Convert text to speech based on the given voice
[INFO] - Given voice: src/test/resources/biaobei-009502.mp3
# Generate feature vector:
[INFO] - Speaker Embedding Shape: [256]
[INFO] - Speaker Embedding: [0.06272025, 0.0, 0.24136968, ..., 0.027405139, 0.0, 0.07339379, 0.0]
[INFO] - Mel spectrogram data Shape: [80, 331]
[INFO] - Mel spectrogram data: [-6.739388, -6.266942, -5.752069, ..., -10.643405, -10.558134, -10.5380535]
[INFO] - Generate wav audio file: build/output/audio.wav
```

Speech generated for the text "Convert text to speech based on the given voice":

[audio.wav](https://aias-home.oss-cn-beijing.aliyuncs.com/AIAS/voice_sdks/audio.wav)
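The last log line reports that the waveform was written to `build/output/audio.wav`. As a hedged illustration of that final step only, the sketch below encodes float samples as a 16-bit mono PCM WAV using the JDK's `javax.sound.sampled` API. `WavWriter` is a hypothetical name, not the SDK's writer, and the sample rate passed in is up to the caller because the source does not state it.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import javax.sound.sampled.AudioFileFormat;
import javax.sound.sampled.AudioFormat;
import javax.sound.sampled.AudioInputStream;
import javax.sound.sampled.AudioSystem;

/** Hypothetical helper (not the AIAS SDK): encodes vocoder output as a 16-bit mono PCM WAV. */
final class WavWriter {
    private WavWriter() {}

    /** Converts samples in [-1, 1] to signed 16-bit little-endian PCM, clipping out-of-range values. */
    static byte[] toPcm16(float[] samples) {
        byte[] pcm = new byte[samples.length * 2];
        for (int i = 0; i < samples.length; i++) {
            float clipped = Math.max(-1f, Math.min(1f, samples[i]));
            short s = (short) Math.round(clipped * 32767f);
            pcm[2 * i] = (byte) (s & 0xFF);            // low byte first (little-endian)
            pcm[2 * i + 1] = (byte) ((s >> 8) & 0xFF); // high byte
        }
        return pcm;
    }

    /** Returns a complete WAV file (header + PCM data) as bytes. */
    static byte[] toWavBytes(float[] samples, float sampleRate) {
        AudioFormat format = new AudioFormat(sampleRate, 16, 1, true, false); // signed, little-endian
        byte[] pcm = toPcm16(samples);
        try (AudioInputStream in = new AudioInputStream(new ByteArrayInputStream(pcm), format, samples.length);
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            AudioSystem.write(in, AudioFileFormat.Type.WAVE, out); // frame length is known, so the header can be written
            return out.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

For example, `java.nio.file.Files.write(java.nio.file.Paths.get("audio.wav"), WavWriter.toWavBytes(samples, 22050f))` would save the result; 22,050 Hz is only an assumed rate (a common WaveGlow setting) and must match the rate the vocoder was trained at.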
