📚 This guide explains how to use **Weights & Biases** (W&B) with YOLOv5 🚀. UPDATED 29 September 2021.

- [About Weights & Biases](#about-weights--biases)

- [First-Time Setup](#first-time-setup)

- [Viewing runs](#viewing-runs)

- [Disabling wandb](#disabling-wandb)

- [Advanced Usage: Dataset Versioning and Evaluation](#advanced-usage)

- [Reports: Share your work with the world!](#reports)

## About Weights & Biases

Think of [W&B](https://wandb.ai/site?utm_campaign=repo_yolo_wandbtutorial) like GitHub for machine learning models. With a few lines of code, save everything you need to debug, compare and reproduce your models: architecture, hyperparameters, git commits, model weights, GPU usage, and even datasets and predictions.

Used by top researchers including teams at OpenAI, Lyft, GitHub, and MILA, W&B is part of the new standard of best practices for machine learning. Here is how W&B can help you optimize your machine learning workflows:

- [Debug](https://wandb.ai/wandb/getting-started/reports/Visualize-Debug-Machine-Learning-Models--VmlldzoyNzY5MDk#Free-2) model performance in real time

- [GPU usage](https://wandb.ai/wandb/getting-started/reports/Visualize-Debug-Machine-Learning-Models--VmlldzoyNzY5MDk#System-4) visualized automatically

- [Custom charts](https://wandb.ai/wandb/customizable-charts/reports/Powerful-Custom-Charts-To-Debug-Model-Peformance--VmlldzoyNzY4ODI) for powerful, extensible visualization

- [Share insights](https://wandb.ai/wandb/getting-started/reports/Visualize-Debug-Machine-Learning-Models--VmlldzoyNzY5MDk#Share-8) interactively with collaborators

- [Optimize hyperparameters](https://docs.wandb.com/sweeps) efficiently

- [Track](https://docs.wandb.com/artifacts) datasets, pipelines, and production models

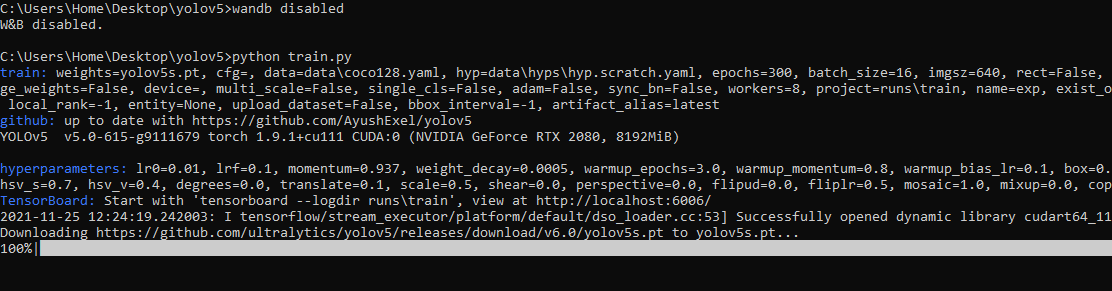

## First-Time Setup

<details open>

<summary> Toggle Details </summary>

When you first train, W&B will prompt you to create a new account and will generate an **API key** for you. If you are an existing user you can retrieve your key from https://wandb.ai/authorize. This key is used to tell W&B where to log your data. You only need to supply your key once, and then it is remembered on the same device.

W&B will create a cloud **project** (default is 'YOLOv5') for your training runs, and each new training run will be provided a unique run **name** within that project as project/name. You can also manually set your project and run name as:

```shell
$ python train.py --project ... --name ...
```
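If you launch training from a script rather than a terminal, the same flags can be assembled programmatically. The following is a minimal sketch: `build_train_command` and the example project and run names are hypothetical, and only the `--project` and `--name` flags come from the command above.

```python
import sys


def build_train_command(project, name, script="train.py"):
    """Return the argument list for a training run with an explicit
    W&B project and run name (hypothetical helper, not part of YOLOv5)."""
    return [sys.executable, script, "--project", project, "--name", name]


cmd = build_train_command("my-project", "baseline-run")
print(" ".join(cmd[1:]))  # train.py --project my-project --name baseline-run
# To actually start training: subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids shell quoting problems when a project or run name contains spaces.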

YOLOv5 notebook example: <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a> <a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>

<img width="960" alt="Screen Shot 2021-09-29 at 10 23 13 PM" src="https://user-images.githubusercontent.com/26833433/135392431-1ab7920a-c49d-450a-b0b0-0c86ec86100e.png">

</details>

## Viewing Runs

<details open>

<summary> Toggle Details </summary>

Run information streams from your environment to the W&B cloud console as you train. This allows you to monitor and even cancel runs in <b>real time</b>. All important information is logged:

- Training & Validation losses

- Metrics: Precision, Recall, mAP@0.5, mAP@0.5:0.95

- Learning Rate over time

- A bounding box debugging panel, showing the training progress over time

- GPU: Type, **GPU Utilization**, power, temperature, **CUDA memory usage**

- System: Disk I/O, CPU utilization, RAM usage

- Your trained model as a W&B Artifact

- Environment: OS and Python types, Git repository and state, **training command**
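The mAP@0.5:0.95 metric listed above is conventionally the mean of the AP values measured at IoU thresholds from 0.5 to 0.95 in steps of 0.05. The sketch below only illustrates that arithmetic. The function names are hypothetical, and the input is assumed to be one AP value per threshold, already averaged over classes.

```python
def iou_thresholds(start=0.5, stop=0.95, step=0.05):
    """Return the IoU thresholds used for mAP@0.5:0.95 (10 values by default)."""
    count = round((stop - start) / step) + 1
    return [round(start + i * step, 2) for i in range(count)]


def map_50_95(ap_per_threshold):
    """Average one AP value per IoU threshold into a single mAP@0.5:0.95 score."""
    thresholds = iou_thresholds()
    if len(ap_per_threshold) != len(thresholds):
        raise ValueError(f"expected {len(thresholds)} AP values")
    return sum(ap_per_threshold) / len(ap_per_threshold)


thresholds = iou_thresholds()
print(len(thresholds), thresholds[0], thresholds[-1])  # 10 0.5 0.95
```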

<p align="center"><img width="900" alt="Weights & Biases dashboard" src="https://user-images.githubusercontent.com/26833433/135390767-c28b050f-8455-4004-adb0-3b730386e2b2.png"></p>

</details>

## Disabling wandb

- Running `wandb disabled` inside a directory means that training launched from that directory creates no wandb run.

- To enable wandb again, run `wandb online`.
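As an alternative to the CLI commands above, the wandb library also reads the `WANDB_MODE` environment variable, so logging can be switched off for a single process without changing the directory's settings. This is a minimal sketch that assumes training is launched as a subprocess; the helper name `env_without_wandb` is hypothetical.

```python
import os


def env_without_wandb(base_env=None):
    """Copy an environment mapping and set WANDB_MODE=disabled, which makes
    wandb calls in a child process started with it behave as no-ops."""
    env = dict(os.environ if base_env is None else base_env)
    env["WANDB_MODE"] = "disabled"
    return env


env = env_without_wandb({"PATH": "/usr/bin"})
print(env["WANDB_MODE"])  # disabled
# Example launch: subprocess.run([sys.executable, "train.py"], env=env, check=True)
```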

## Advanced Usage

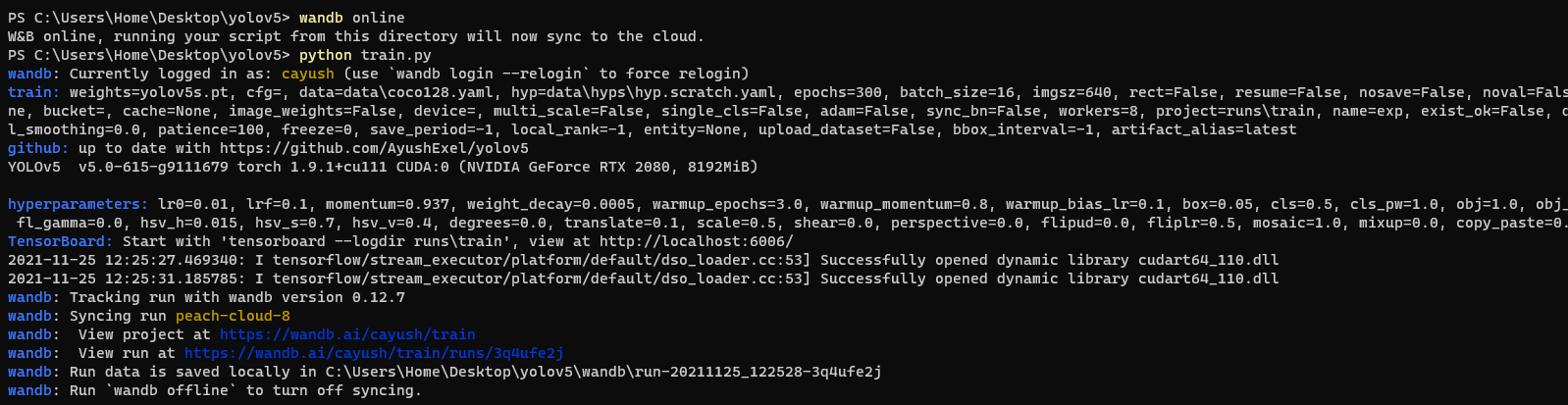

You can leverage W&B artifacts and Tables integration to easily visualize and manage your datasets, models and training evaluations. Here are some quick examples to get you started.

<details open>

<h3> 1: Train and Log Evaluation simultaneously </h3>

This is an extension of dataset logging (section 2 below): it also starts training after uploading the dataset. <b>This also logs an evaluation Table.</b>

The evaluation Table compares your predictions and ground truths across the validation set for each epoch. It uses references to the already uploaded datasets, so no images will be uploaded from your system more than once.

<details open>

<summary> <b>Usage</b> </summary>

<b>Code</b> <code> $ python train.py --upload_data val</code>

</details>

<h3> 2: Visualize and Version Datasets </h3>

Log, visualize, dynamically query, and understand your data with <a href='https://docs.wandb.ai/guides/data-vis/tables'>W&B Tables</a>. You can use the following command to log your dataset as a W&B Table. This will generate a <code>{dataset}_wandb.yaml</code> file which can be used to train from the dataset artifact.

<details>

<summary> <b>Usage</b> </summary>

<b>Code</b> <code> $ python utils/loggers/wandb/log_dataset.py --project ... --name ... --data ... </code>

</details>
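To give a feel for what logging a dataset as a W&B Table involves, here is a minimal sketch. It is not the implementation of `log_dataset.py`. The helper names, column names, and sample label text are hypothetical, and it assumes labels in the usual YOLO text format (`class x_center y_center width height`, normalized to the image size).

```python
def yolo_label_rows(image_name, label_text):
    """Parse YOLO-format label text (one object per line) into table rows."""
    rows = []
    for line in label_text.strip().splitlines():
        cls, xc, yc, w, h = line.split()
        rows.append([image_name, int(cls), float(xc), float(yc), float(w), float(h)])
    return rows


def log_rows_as_table(rows, project, artifact_name):
    """Log the rows as a W&B Table inside a dataset artifact.
    Requires the wandb package and a logged-in account."""
    import wandb  # imported lazily so the parser above works without wandb

    columns = ["image", "class_id", "x_center", "y_center", "width", "height"]
    table = wandb.Table(columns=columns, data=rows)
    artifact = wandb.Artifact(artifact_name, type="dataset")
    artifact.add(table, "labels")
    with wandb.init(project=project, job_type="dataset-upload") as run:
        run.log_artifact(artifact)


rows = yolo_label_rows("bus.jpg", "0 0.5 0.5 0.2 0.4\n5 0.25 0.75 0.1 0.1")
print(rows[1])  # ['bus.jpg', 5, 0.25, 0.75, 0.1, 0.1]
```

Because the table lives in an artifact, later runs can reference it by name and version instead of uploading the data again.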

<h3> 3: Train using dataset artifact </h3>

When you upload a dataset as described in section 2, you get a new config file with `_wandb` appended to its name. This file contains the information that can be used to train a model directly from the dataset artifact. <b>This also logs evaluation.</b>

<details>

<summary> <b>Usage</b> </summary>

<b>Code</b> <code> $ python train.py --data {data}_wandb.yaml </code>

</details>
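The naming rule for the generated config can be expressed in a few lines. This is a sketch under the assumption that the `_wandb` file is written next to the original dataset YAML; `wandb_config_path` is a hypothetical helper and `data/coco128.yaml` is only an example path.

```python
from pathlib import Path


def wandb_config_path(data_yaml):
    """Return the expected `_wandb` config path for a dataset YAML,
    e.g. data/coco128.yaml -> data/coco128_wandb.yaml."""
    path = Path(data_yaml)
    return path.with_name(f"{path.stem}_wandb{path.suffix}")


config = wandb_config_path("data/coco128.yaml")
print(config.as_posix())  # data/coco128_wandb.yaml
```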

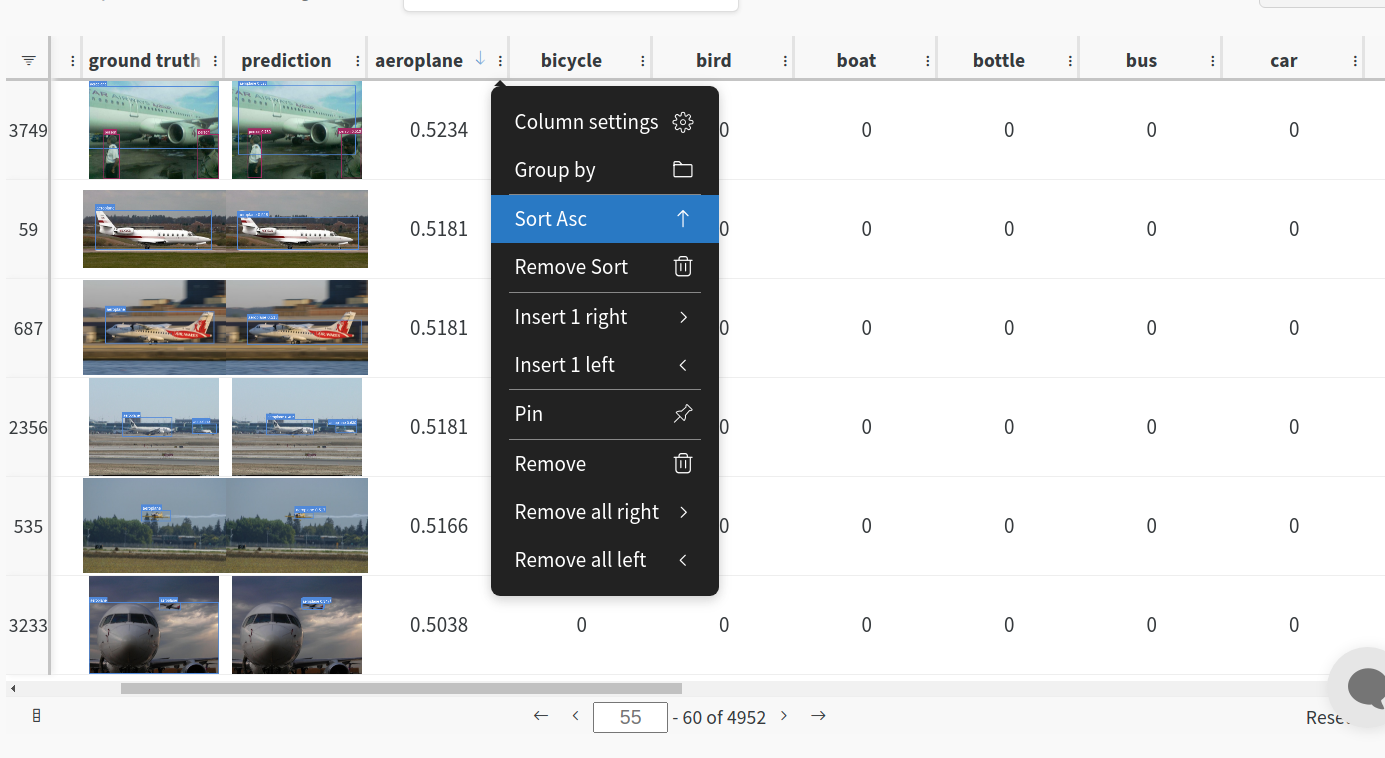

<h3> 4: Save model checkpoints as artifacts </h3>

To enable saving and versioning checkpoints of your experiment, pass `--save_period n` with the base command, where `n` represents the checkpoint interval.

You can also log both the dataset and model checkpoints simultaneously. If `--save_period` is not passed, only the final model will be logged.

<details>

<summary> <b>Usage</b> </summary>

<b>Code</b> <code> $ python train.py --save_period 1 </code>

</details>
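The effect of the checkpoint interval can be illustrated with a small calculation. The sketch below assumes that a checkpoint is logged every `n` epochs, counted from 1, and that the final model is always logged. The exact epoch alignment inside `train.py` may differ, and `checkpoint_epochs` is a hypothetical helper.

```python
def checkpoint_epochs(total_epochs, save_period):
    """List the 1-based epochs at which a checkpoint artifact would be logged,
    assuming one every `save_period` epochs plus the final model."""
    if total_epochs < 1:
        return []
    epochs = []
    if save_period > 0:
        epochs = list(range(save_period, total_epochs + 1, save_period))
    if not epochs or epochs[-1] != total_epochs:
        epochs.append(total_epochs)  # the final model is logged regardless
    return epochs


print(checkpoint_epochs(10, 3))  # [3, 6, 9, 10]
print(checkpoint_epochs(10, 0))  # [10]
```

A small interval gives finer-grained recovery points at the cost of more artifact versions to store.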

</details>

<h3> 5: Resume runs from checkpoint artifacts. </h3>

Any run can be resumed using artifacts if the <code>--resume</code> argument starts with the <code>wandb-artifact://</code> prefix followed by the run path, i.e., <code>wandb-artifact://username/project/runid</code>. This doesn't require the model checkpoint to be present on the local system.

<details>

<summary> <b>Usage</b> </summary>

<b>Code</b> <code> $ python train.py --resume wandb-artifact://{run_path} </code>

</details>
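The resume argument is just the `wandb-artifact://` prefix followed by a three-part run path, so it can be built and validated with plain string handling. This is a minimal sketch; the helper names and the example values are hypothetical, and YOLOv5's own parsing may differ.

```python
WANDB_ARTIFACT_PREFIX = "wandb-artifact://"


def make_resume_uri(username, project, run_id):
    """Build the value passed to --resume for a run stored on W&B."""
    return f"{WANDB_ARTIFACT_PREFIX}{username}/{project}/{run_id}"


def parse_resume_uri(uri):
    """Split a resume URI into (username, project, run_id), or raise ValueError."""
    if not uri.startswith(WANDB_ARTIFACT_PREFIX):
        raise ValueError(f"expected a URI starting with {WANDB_ARTIFACT_PREFIX}")
    parts = uri[len(WANDB_ARTIFACT_PREFIX):].split("/")
    if len(parts) != 3 or not all(parts):
        raise ValueError("expected a run path of the form username/project/runid")
    return tuple(parts)


uri = make_resume_uri("alice", "YOLOv5", "1a2b3c4d")
print(uri)                    # wandb-artifact://alice/YOLOv5/1a2b3c4d
print(parse_resume_uri(uri))  # ('alice', 'YOLOv5', '1a2b3c4d')
```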

<h3> 6: Resume runs from dataset artifact & checkpoint artifacts. </h3>

<b> Local dataset or model checkpoints are not required. This can be used to resume runs directly on a different device. </b>

The syntax is the same as in the previous section, but you'll need to log both the dataset and model checkpoints as artifacts, i.e., set both <code>--upload_dataset</code> or

train fro
