II. RELATED WORK

Spatial Clustering algorithms can be partitioned into four

general categories: Partitioning, h ierarchical, density-based and

grid-based.

Partitioning algorithms divide the entire dataset into a

number of disjoint groups. Each disjoint group is a cluster.

K-means [3], EM (Expectation Maximization) [8] and K-

medoid [4] are three well-known partitioning based clustering

algorithms. These use an iterative approach and try to group the

data into K clusters, where K is a u ser specified parameter. The

shortcoming of the algorithms is that they are not suitable for

finding arbitrary shaped clusters. Further, they are dependent

on the u ser specified parameter K.

Hierarchical clustering algorithms use a distance matrix

as an input and generates a hierarchical set of clusters. This

hierarchy is generally formed in two ways: bottom-up and top-

down [4]. The top-down approach starts with all the objects in

the same cluster. In each successive iteration a bigger cluster

is split into smaller clusters based on some distance measure,

until each object is in one cluster itself. The clustering level

is chosen between the root (a single large cluster) and the

leaf nodes (a cluster for each individual object). The bottom-

up approach starts with each object as one cluster. It then

successively merges the clusters until all the clusters are

merged together to form a single big cluster. The weakness

of the hierarchical algorithms is that they are computationally

very expensive.

BIRCH [9] and CURE [10] are hierarchical clustering

algorithms. In BIRCH, data objects are compressed into small

sub-clusters, then the clustering algorithm is applied on these

sub-clusters. In CURE, instead of using a single centroid, a

fixed number of well scattered objects are selected to represent

each cluster.

Density-based methods can filter out the outliers and can

discover arbitrary shaped clusters. DBSCAN [5] is the first

proposed density-based clustering algorithm. This algorithm

is based on two parameters: and MinPts. Density around

each point depends on the number of neighbours within its

distance. A data point is considered dense if the number

of its neighbours is greater than MinPts. DBSCAN can

find clusters of arbitrary shapes, but it cannot handle data

containing clusters of varying densities. Further, the cluster

quality in DBSCAN algorithm depends on the ability of the

user to select a good set of parameters.

OPTICS [6] is another density based clustering algorithm,

proposed to overcome the major weakness of DBSCAN algo-

rithm. This algorithm can handle data with varying density.

This algorithm does not produce clusters explicitly, rather

computes an augmented cluster ordering such that spatially

closest points become neighbours in that order.

The DENCLUE [7] algorithm was proposed to handle high

dimensional data efficiently. In this algorithm density of a data

object is determined based on the sum of influence functions

of the data points around it. DENCLUE also requires a careful

selection o f clusterin g parameters which may significantly

influence the quality of the clusters.

The Shared Nearest Neighbour (SNN) [11] clustering al-

gorithm was proposed to find clusters of different densities

in high dimensional data. A similarity measure is based on

the number of shared neighbours between two objects instead

of traditional Euclidean distance. This algorithm needs 3

parameters (k, , MinPt).

Grid-based clustering algorith m divides the data space into

a finite number of g rid cells forming a grid structure on

which operations are performed to obtain the clusters. Some

examples of grid based methods include STING [12], Wave-

Cluster [13] and CLIQUE [14]. The STING [12] algorithm

calculates statistical information in each grid cells. The Wave-

Cluster [13] algorithm applies wavelet transformation to the

feature base. Input parameters include the number o f g rid

cells for each dimension. This algorithm is applicable for low

dimensional data space. The CLIQUE [14] algorithm adopts a

combination of grid-based and density-based approaches and

this algorithm can detect clusters in high-dimensional space.

III. P

ROPOSED ALGORITHM

In this section, we focus on the basic steps of our proposed

algorithm. We propose K-DBSCAN algorithm, which works in

two phases.

• K Level Density Partitioning: In this phase, we calcu-

late the density of each data point based on its distance

from its nearest neighbouring data points. Then we

partition all the data points into K groups based on

their density value.

• Density Level Clustering: In this phase, we introduce

a modified version of DBSCAN algorithm that works

on different density levels.

A. K-DBSCAN Phase 1 - K level Density Partitioning

In real world spatial datasets, different data objects may

be located in different density regions. So, it is very difficult

or almost impossible to characterize the cluster structures by

using only one global density parameter [15].

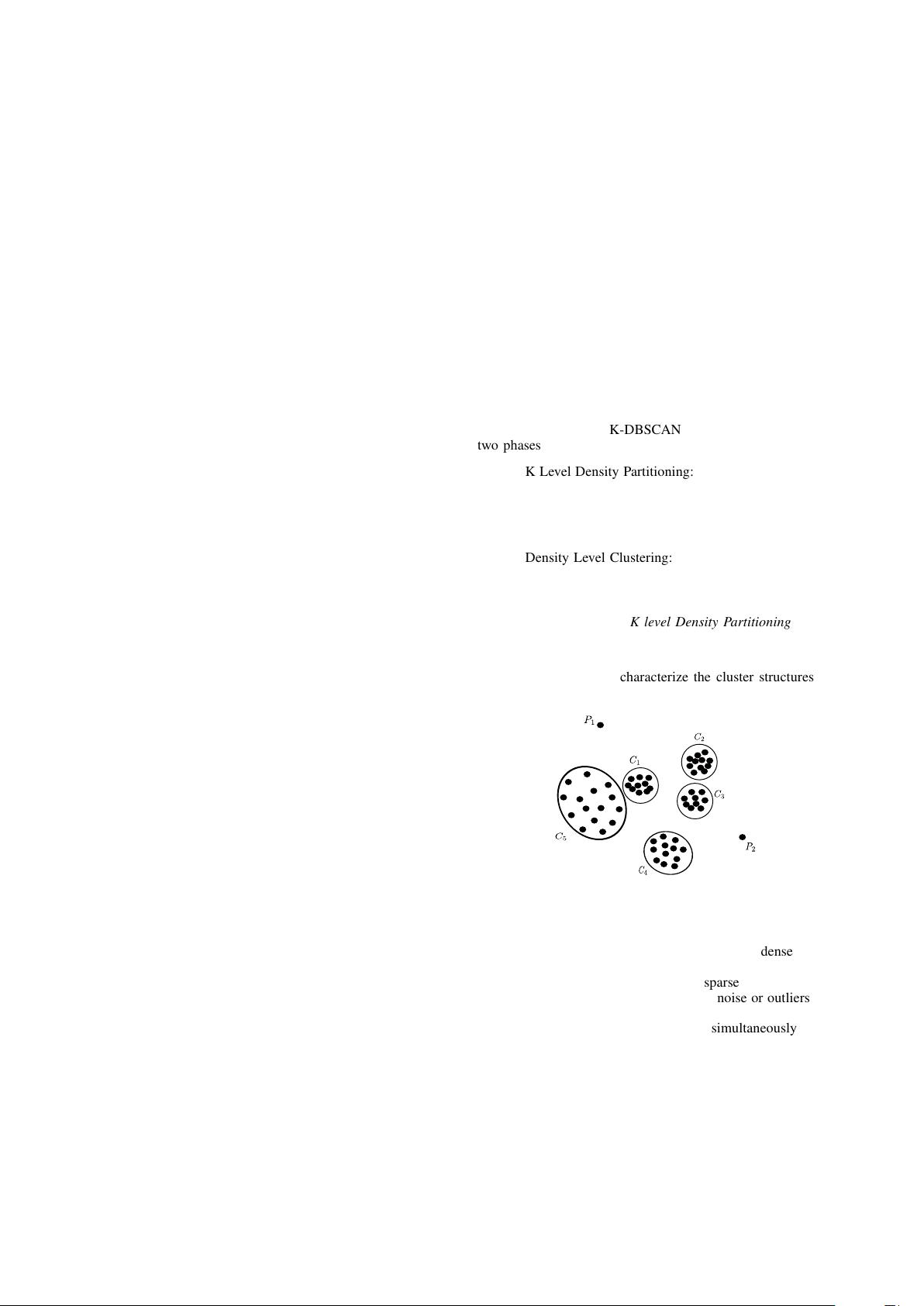

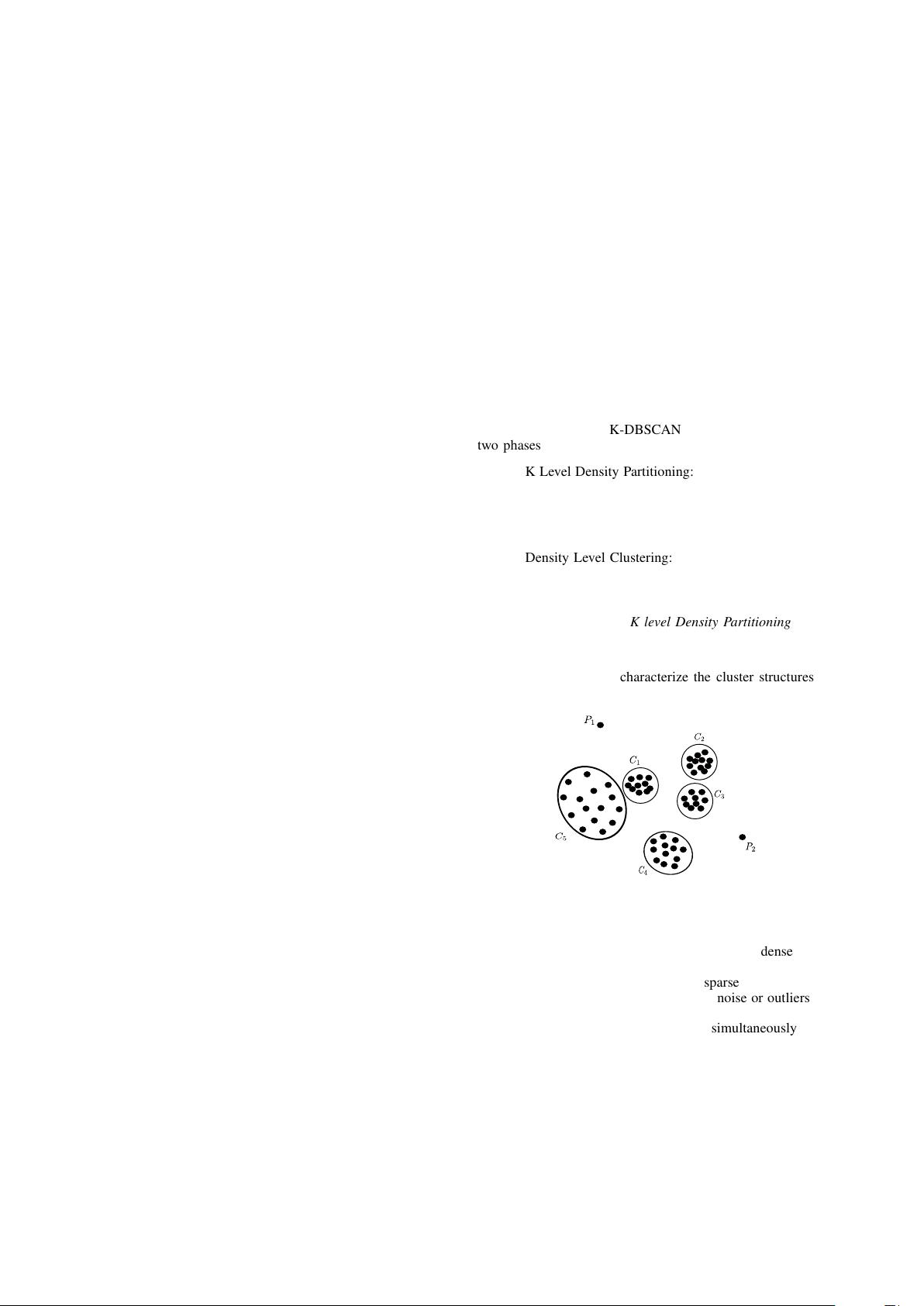

Fig. 1: Points in different density regions

Consider the example from Figure 1. In this example,

points in clusters C

1

, C

2

and C

3

represents very dense neigh-

bourhoods. Points in cluster C

4

represents a less dense region,

while points in cluster C

5

represent a sparse neighbourhood.

Point P

1

and P

2

should be considered as noise or outliers. As

different data points are located in different density regions, it

is impossible to obtain all the clusters simultaneou sly using

one global density parameter. Because, if we consider the

density estimation for points located in C

1

, we have to choose

5352

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功