International Journal of Computer Vision 29(1), 5–28 (1998)

© 1998 Kluwer Academic Publishers. Manufactured in The Netherlands.

CONDENSATION—Conditional Density Propagation for Visual Tracking

MICHAEL ISARD AND ANDREW BLAKE

Department of Engineering Science, University of Oxford, Oxford OX1 3PJ, UK

Received July 16, 1996; Accepted March 3, 1997

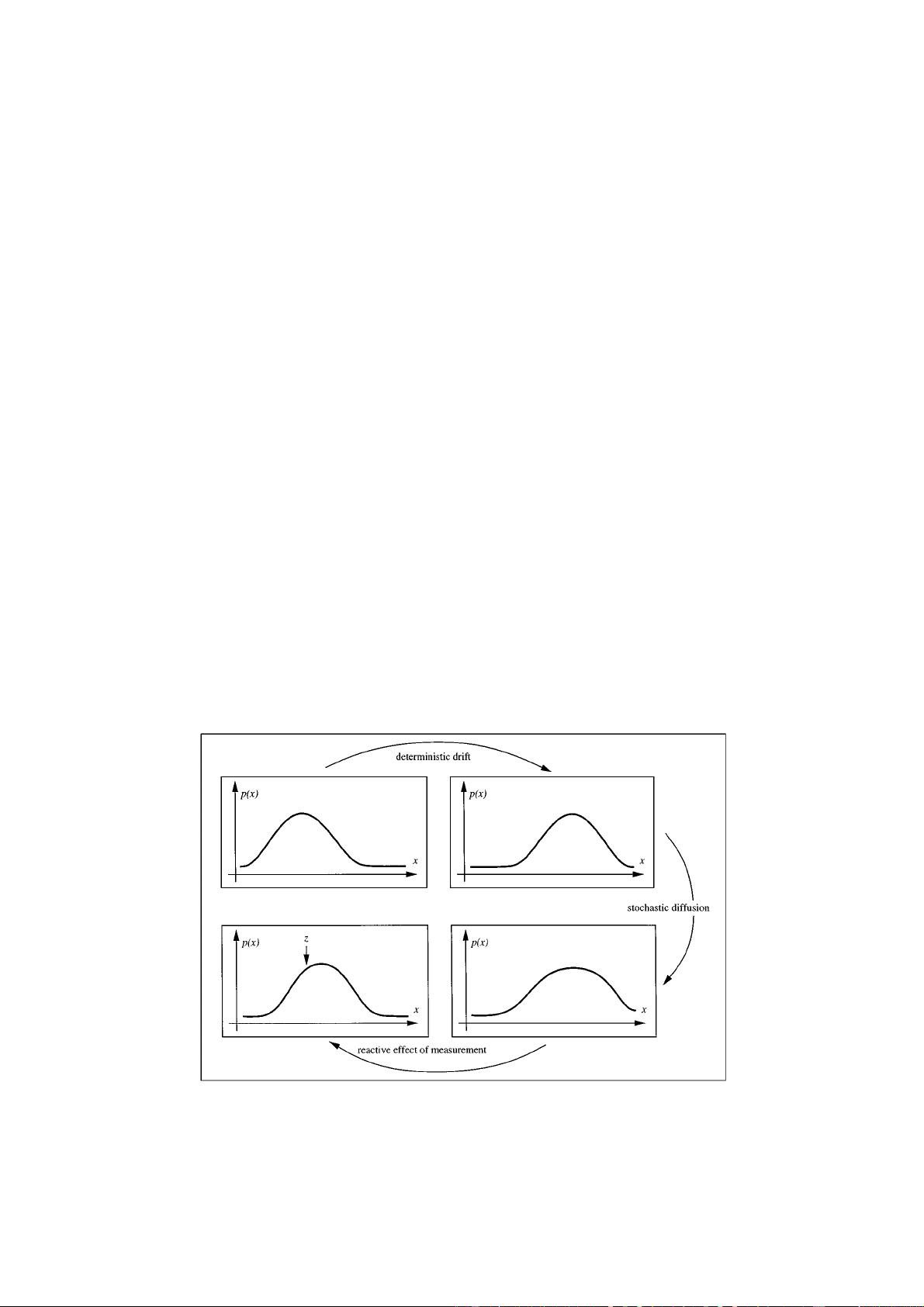

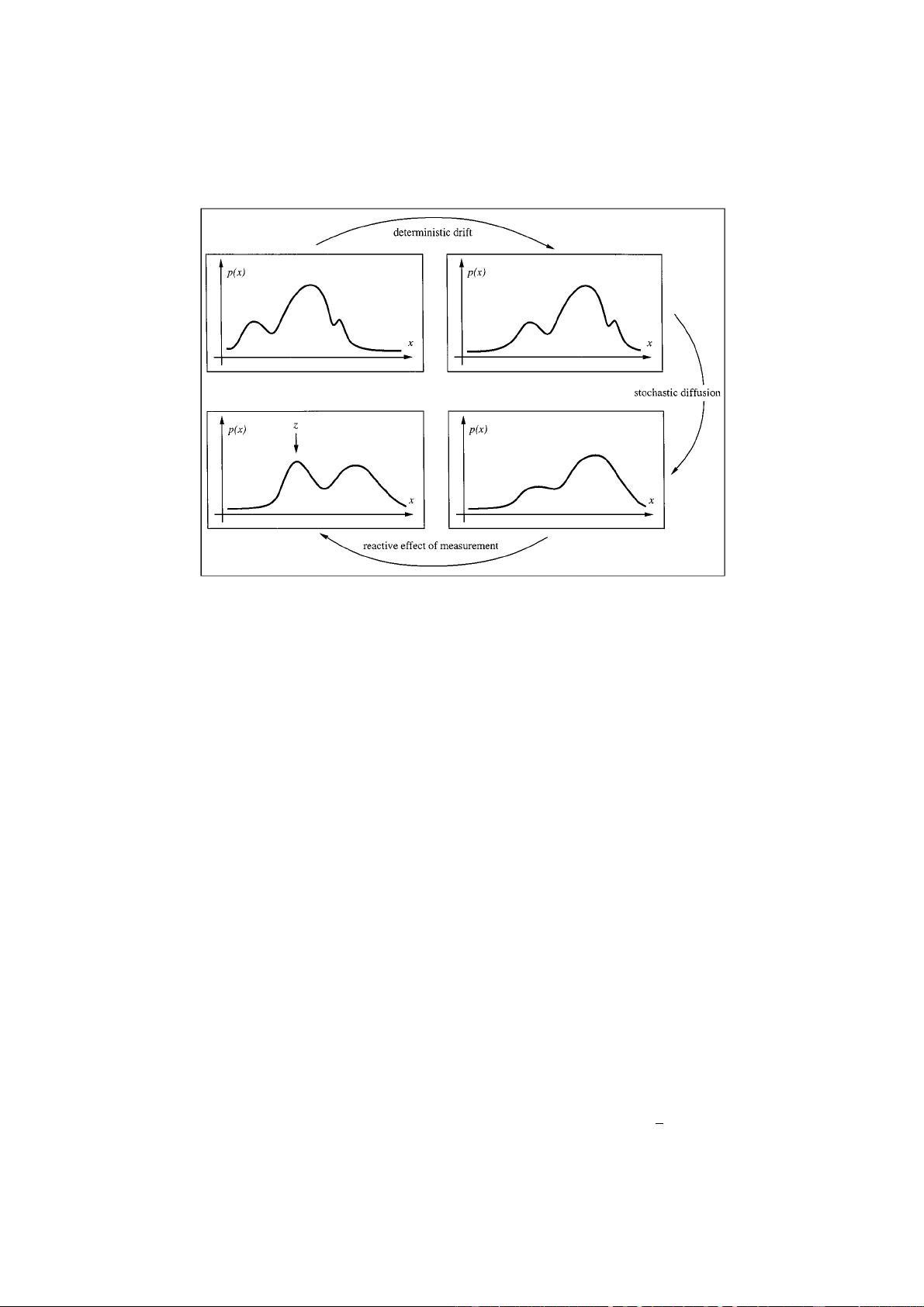

Abstract. The problem of tracking curves in dense visual clutter is challenging. Kalman filtering is inadequate because it is based on Gaussian densities which, being unimodal, cannot represent simultaneous alternative hypotheses. The Condensation algorithm uses “factored sampling”, previously applied to the interpretation of static images, in which the probability distribution of possible interpretations is represented by a randomly generated set. Condensation uses learned dynamical models, together with visual observations, to propagate the random set over time. The result is highly robust tracking of agile motion. Notwithstanding the use of stochastic methods, the algorithm runs in near real-time.
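The idea summarised in the abstract, a weighted random set that is resampled, pushed through a dynamical model and re-weighted by visual observations, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the one-dimensional state, the random-walk dynamics standing in for a learned dynamical model, and the two-peaked likelihood standing in for clutter are all hypothetical choices made for the example.

```python
import math
import random


def gaussian_pdf(x, mean, sd):
    """Normal density N(x; mean, sd^2)."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2.0 * math.pi))


def condensation_step(samples, weights, dynamics, likelihood, rng):
    """One select / predict / measure cycle on a weighted sample set.

    samples:    states from the previous time-step
    weights:    their (not necessarily normalised) weights
    dynamics:   hypothetical stochastic model, dynamics(s, rng) -> new state
    likelihood: hypothetical observation density, likelihood(s) -> float >= 0
    """
    n = len(samples)
    # Select: draw n states with probability proportional to their weights.
    selected = rng.choices(samples, weights=weights, k=n)
    # Predict: move each selected state through the stochastic dynamics.
    predicted = [dynamics(s, rng) for s in selected]
    # Measure: weight each predicted state by the observation likelihood.
    raw = [likelihood(s) for s in predicted]
    total = sum(raw)
    return predicted, [w / total for w in raw]


# Assumed random-walk dynamics (a stand-in for a learned dynamical model).
def dynamics(s, rng):
    return s + rng.gauss(0.0, 0.5)


# Assumed two-peaked likelihood: the target and a look-alike clutter feature.
def likelihood(s):
    return 0.5 * gaussian_pdf(s, -3.0, 0.5) + 0.5 * gaussian_pdf(s, 3.0, 0.5)


rng = random.Random(0)
samples = [rng.uniform(-6.0, 6.0) for _ in range(1000)]
weights = [1.0] * len(samples)
for _ in range(5):
    samples, weights = condensation_step(samples, weights, dynamics, likelihood, rng)

# Weighted mass on each side of zero, and in the gap between the two peaks.
left = sum(w for s, w in zip(samples, weights) if s < 0.0)
middle = sum(w for s, w in zip(samples, weights) if abs(s) < 1.0)
print(f"mass left={left:.2f} right={1.0 - left:.2f} between peaks={middle:.4f}")
```

The weighted set keeps substantial mass near both peaks and almost none between them, so it carries two simultaneous hypotheses. A single Gaussian fitted to the same set would place its mean between the peaks, where the likelihood is negligible, which is the unimodality problem the abstract attributes to Kalman filtering.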

1. Tracking Curves in Clutter

The purpose of this paper¹ is to establish a stochastic framework for tracking curves in visual clutter, using a sampling algorithm. The approach is rooted in ideas from statistics, control theory and computer vision. The problem is to track outlines and features of foreground objects, modelled as curves, as they move in substantial clutter, and to do so at, or close to, video frame-rate. This is challenging because elements in the background clutter may mimic parts of foreground features. In the most severe case of camouflage, the background may consist of objects similar to the foreground object, for instance, when a person is moving past a crowd. Our approach aims to dissolve the resulting ambiguity by applying probabilistic models of object shape and motion to analyse the video-stream. The degree of generality of these models is pitched carefully: sufficiently specific for effective disambiguation but sufficiently general to be broadly applicable over entire classes of foreground objects.

1.1. Modelling Shape and Motion

Effective methods have arisen in computer vision for modelling shape and motion. When suitable geometric models of a moving object are available, they can be matched effectively to image data, though usually at considerable computational cost (Hogg, 1983; Lowe, 1991; Sullivan, 1992; Huttenlocher et al., 1993). Once an object has been located approximately, tracking it in subsequent images becomes more efficient computationally (Lowe, 1992), especially if motion is modelled as well as shape (Gennery, 1992; Harris, 1992). One important facility is the modelling of curve segments which interact with images (Fischler and Elschlager, 1973; Yuille and Hallinan, 1992) or image sequences (Kass et al., 1987; Dickmanns and Graefe, 1988). This is more general than modelling entire objects but more clutter-resistant than applying signal-processing to low-level corners or edges. The methods to be discussed here have been applied at this level, to segments of parametric B-spline curves (Bartels et al., 1987) tracked over image sequences (Menet et al., 1990; Cipolla and Blake, 1990). The B-spline curves could, in theory, be parameterised by their control points. In practice, this allows too many degrees of freedom for stable tracking, and it is necessary to restrict the curve to a low-dimensional parameter x, for example, over an affine space (Koenderink and Van Doorn, 1991; Ullman and Basri, 1991; Blake et al., 1993), or more generally allowing a “shape-space” of non-rigid motion (Cootes et al., 1993).
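Restricting a B-spline curve to a low-dimensional parameter x can be illustrated with a closed quadratic B-spline and a planar affine shape-space. This is a sketch under stated assumptions, not the paper's parameterisation: the eight-point template, the layout x = (tx, ty, a11, a12, a21, a22), and the choice of a uniform periodic quadratic B-spline are all hypothetical. Because the B-spline basis functions sum to one at every point, applying an affine map to the control points moves the whole curve by the same map, so six numbers steer all sixteen control-point coordinates.

```python
def affine_shape_space(x, template):
    """Map a hypothetical 6-vector x = (tx, ty, a11, a12, a21, a22) to points.

    Each template point q is sent to A q + t; x = (0, 0, 1, 0, 0, 1) is the identity.
    """
    tx, ty, a11, a12, a21, a22 = x
    return [(a11 * px + a12 * py + tx, a21 * px + a22 * py + ty) for px, py in template]


def quadratic_bspline_point(ctrl, i, s):
    """Point at parameter s in [0, 1) on span i of a closed uniform quadratic B-spline."""
    n = len(ctrl)
    p0, p1, p2 = ctrl[i % n], ctrl[(i + 1) % n], ctrl[(i + 2) % n]
    # Uniform quadratic B-spline basis; the three weights sum to 1 for every s.
    b0 = 0.5 * (1.0 - s) ** 2
    b1 = 0.5 * (-2.0 * s * s + 2.0 * s + 1.0)
    b2 = 0.5 * s * s
    return (b0 * p0[0] + b1 * p1[0] + b2 * p2[0],
            b0 * p0[1] + b1 * p1[1] + b2 * p2[1])


def sample_curve(ctrl, per_span=4):
    """Evaluate the closed curve at per_span evenly spaced parameters on every span."""
    return [quadratic_bspline_point(ctrl, i, k / per_span)
            for i in range(len(ctrl)) for k in range(per_span)]


# Hypothetical template: 8 control points, i.e. 16 free coordinates.
template = [(1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (-1.0, 1.0),
            (-1.0, 0.0), (-1.0, -1.0), (0.0, -1.0), (1.0, -1.0)]
x = (2.0, -1.0, 1.2, 0.3, -0.1, 0.9)  # 6 shape-space parameters

# Curve from transformed control points vs. transformed curve of the template.
curve_a = sample_curve(affine_shape_space(x, template))
curve_b = affine_shape_space(x, sample_curve(template))
max_diff = max(max(abs(a[0] - b[0]), abs(a[1] - b[1])) for a, b in zip(curve_a, curve_b))
print(f"{2 * len(template)} control coordinates, {len(x)} parameters, max diff {max_diff:.2e}")
```

The agreement between the two curves is what makes it legitimate to track in the six-dimensional space of x instead of over every control point.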
