Stauffer1999A_Adaptive background mixture models for real-time t...

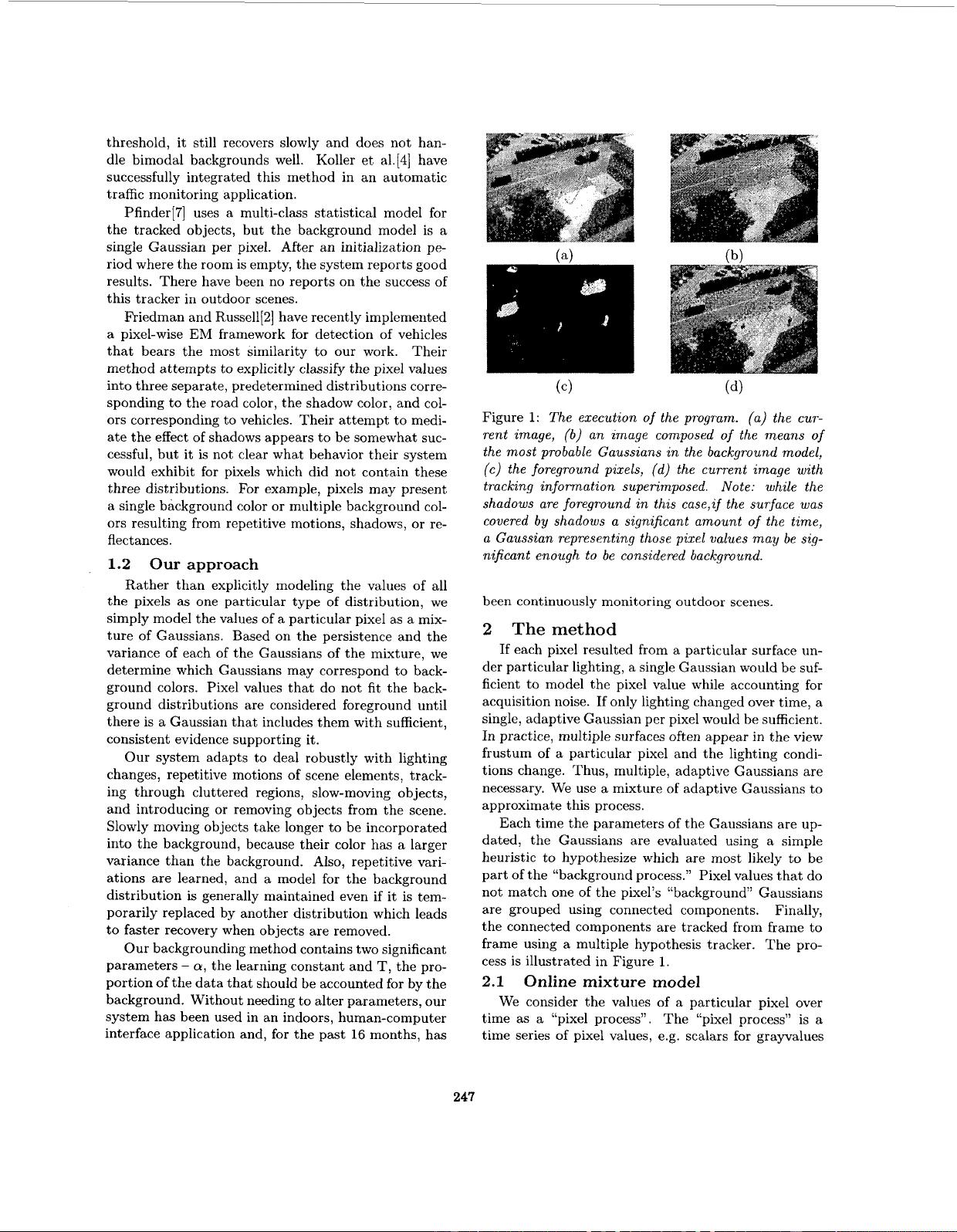

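### Applying Mixture of Gaussians Models to Real-Time Tracking

#### Overview

This paper studies the use of a mixture of Gaussians (MOG) model for real-time background subtraction and object tracking. The work is by Chris Stauffer and W.E.L. Grimson and was published in 1999 at IEEE CVPR. Its goal is a robust, adaptive tracking system that copes with difficult scene changes: lighting changes, moving scene clutter, multiple moving objects, and other arbitrary changes to the observed scene.

#### Principle of the Mixture of Gaussians Model

The mixture of Gaussians is a statistical method for estimating the probability distribution of a pixel's values. Rather than modeling every pixel with one particular type of distribution, the method models the values at each pixel location as a mixture of several Gaussian distributions. Each Gaussian represents a different value that can appear at that location, for example a different surface or lighting condition. By continually updating each Gaussian's weight, mean, and variance over time, the model adapts to changes in the background.

#### Model Update Strategy

Stauffer and Grimson update the model with an online K-means approximation instead of running an exact expectation-maximization fit for every pixel, which would be too costly. This lets the model adapt quickly to background changes while still detecting moving objects reliably. When a new frame arrives, each new pixel value is checked against that pixel's existing Gaussians; a value within 2.5 standard deviations of a component's mean counts as a match. The weights of all components are then updated, and the mean and variance of the matched component are moved toward the new value. If no component matches, the least probable component is replaced by a new one centered on the current value, with a high initial variance and a low prior weight. This update mechanism lets the system pick out moving regions accurately even in complex, changing environments.

#### Background Modeling and Classification

To separate background from foreground, the components of each pixel's mixture are ranked by the ratio ω/σ of weight to standard deviation, so that components with a lot of supporting evidence and little variance come first. The first B components whose cumulative weight exceeds a threshold T form the background model. A pixel value that matches one of these background components is classified as background; otherwise it is classified as foreground, that is, part of a moving region. Because the mixture keeps adapting, a foreground value remains foreground only until a Gaussian that includes it has gathered enough consistent evidence. In this way every frame is split into background and foreground.

#### Experimental Validation and Applications

The authors validated the method with a series of experiments. The system ran almost continuously for 16 months in all weather, including rain and snow, which demonstrates its robustness and practicality outdoors. It is particularly suited to scene-level video surveillance, where it operates stably in complex, changing environments and handles challenges such as lighting changes, repetitive motion, and long-term scene changes.

#### Conclusions and Future Work

The mixture of Gaussians offers an effective approach to real-time tracking and background subtraction. It handles a wide range of complex scene changes and is both robust and adaptive. As technology advances and new challenges emerge, future work could further optimize the algorithm, increase processing speed, and explore a wider range of applications.

### Further Reading

- **Theoretical background**: A solid grasp of the Gaussian distribution and of mixture models is key to understanding and applying the method described here.
- **Practical applications**: Beyond video surveillance, mixture of Gaussians models can also be applied in areas such as autonomous driving and drone navigation.
- **Recent progress**: Deep learning methods have achieved notable results in visual tracking in recent years, and combining them with traditional methods can further improve system performance.
- **Open-source tools**: Open-source libraries such as OpenCV can help developers implement mixture of Gaussians background subtraction quickly; OpenCV provides Gaussian-mixture background subtractors derived from this line of work (`BackgroundSubtractorMOG` in the `bgsegm` contrib module and `BackgroundSubtractorMOG2` in the main module).

A close study of mixture of Gaussians models and their use in real-time tracking builds a solid understanding of the fundamentals and technical details of this field and provides strong support for practical applications.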
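For reference, the per-pixel mixture described above can be written compactly. The notation follows the paper's formulation as recalled here and should be checked against the original: $X_t$ is the pixel value at time $t$ (a vector of dimension $n$, e.g. $n = 3$ for RGB), $K$ is the number of components, $\omega_{i,t}$, $\mu_{i,t}$ and $\Sigma_{i,t}$ are the weight, mean and covariance of component $i$, and $\eta$ is the Gaussian density. To keep computation cheap, the color channels are assumed independent with a shared variance, so each covariance is a scaled identity.

$$
P(X_t) = \sum_{i=1}^{K} \omega_{i,t}\,\eta\left(X_t;\ \mu_{i,t}, \Sigma_{i,t}\right),
\qquad
\eta(X;\ \mu, \Sigma) = \frac{1}{(2\pi)^{n/2}\,\lvert\Sigma\rvert^{1/2}}
\exp\left(-\tfrac{1}{2}(X-\mu)^{\top}\Sigma^{-1}(X-\mu)\right),
\qquad
\Sigma_{i,t} = \sigma_{i,t}^{2} I
$$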

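The online update step can be sketched for a single grayscale pixel. This is a minimal, hypothetical illustration, not the authors' code: the `Gaussian` class, the `update_pixel` function, and the default values of `alpha`, `init_var` and `init_weight` are assumptions made for the example. The parts intended to follow the paper's formulation are the 2.5-standard-deviation match test and the update rules `w = (1 - alpha) * w + alpha * M`, `mu = (1 - rho) * mu + rho * x` and `var = (1 - rho) * var + rho * (x - mu)**2` with `rho = alpha * eta(x | mu, sigma)`. Taking "least probable" to mean the smallest ω/σ is also an assumption.

```python
import math
from dataclasses import dataclass


@dataclass
class Gaussian:
    """One mixture component for a single grayscale pixel (hypothetical helper)."""
    weight: float
    mean: float
    var: float


def gaussian_pdf(x: float, mean: float, var: float) -> float:
    """1-D normal density eta(x | mean, var)."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def update_pixel(mixture: list[Gaussian], x: float, alpha: float = 0.01,
                 match_sigmas: float = 2.5, init_var: float = 900.0,
                 init_weight: float = 0.05) -> int:
    """Update one pixel's mixture in place with a new value x.

    Returns the index of the matched component, or of the component that
    was replaced when nothing matched. Default parameters are illustrative.
    """
    # Visit components in descending w/sigma order and stop at the first one
    # whose mean lies within match_sigmas standard deviations of x.
    order = sorted(range(len(mixture)),
                   key=lambda k: mixture[k].weight / math.sqrt(mixture[k].var),
                   reverse=True)
    matched = next((k for k in order
                    if abs(x - mixture[k].mean) <= match_sigmas * math.sqrt(mixture[k].var)),
                   None)

    # Weight update w <- (1 - alpha) * w + alpha * M, where M = 1 only for the match.
    for g in mixture:
        g.weight *= 1.0 - alpha

    if matched is not None:
        g = mixture[matched]
        g.weight += alpha
        # Learning rate for the matched component's mean and variance.
        rho = alpha * gaussian_pdf(x, g.mean, g.var)
        g.mean = (1.0 - rho) * g.mean + rho * x
        g.var = (1.0 - rho) * g.var + rho * (x - g.mean) ** 2
        idx = matched
    else:
        # No match: replace the least probable component (assumed here to be
        # the smallest w/sigma) with a wide, low-weight Gaussian centred on x.
        idx = order[-1]
        mixture[idx] = Gaussian(init_weight, x, init_var)

    # Renormalise so the weights sum to one.
    total = sum(g.weight for g in mixture)
    for g in mixture:
        g.weight /= total
    return idx
```

Because `rho` is the learning rate multiplied by a density value, it can be very small for wide components, so matched components drift slowly toward new values; the sketch keeps the formula as stated rather than tuning it.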

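The background selection rule can likewise be sketched for a single grayscale pixel. Components are ranked by ω/σ, the background consists of the first B components with B = argmin_b (ω_1 + ... + ω_b > T), and a value is foreground when it matches none of them within 2.5 standard deviations. The function names `background_components` and `is_foreground` and the default `T = 0.7` are illustrative assumptions, not values taken from the paper.

```python
import math


def background_components(weights, variances, T=0.7):
    """Return indices of the components treated as background.

    Components are ranked by w / sigma; the first B whose cumulative weight
    exceeds T form the background model.
    """
    order = sorted(range(len(weights)),
                   key=lambda k: weights[k] / math.sqrt(variances[k]),
                   reverse=True)
    chosen, cumulative = [], 0.0
    for k in order:
        chosen.append(k)
        cumulative += weights[k]
        if cumulative > T:
            break
    return chosen


def is_foreground(x, weights, means, variances, T=0.7, match_sigmas=2.5):
    """A pixel value is foreground if it matches none of the background components."""
    for k in background_components(weights, variances, T):
        if abs(x - means[k]) <= match_sigmas * math.sqrt(variances[k]):
            return False
    return True
```

A larger `T` admits more components into the background, so a multimodal background caused by repetitive motion can be represented by more than one value, while a small `T` tends to leave a single background component.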