GoogLeNet: Four Deep Learning Papers

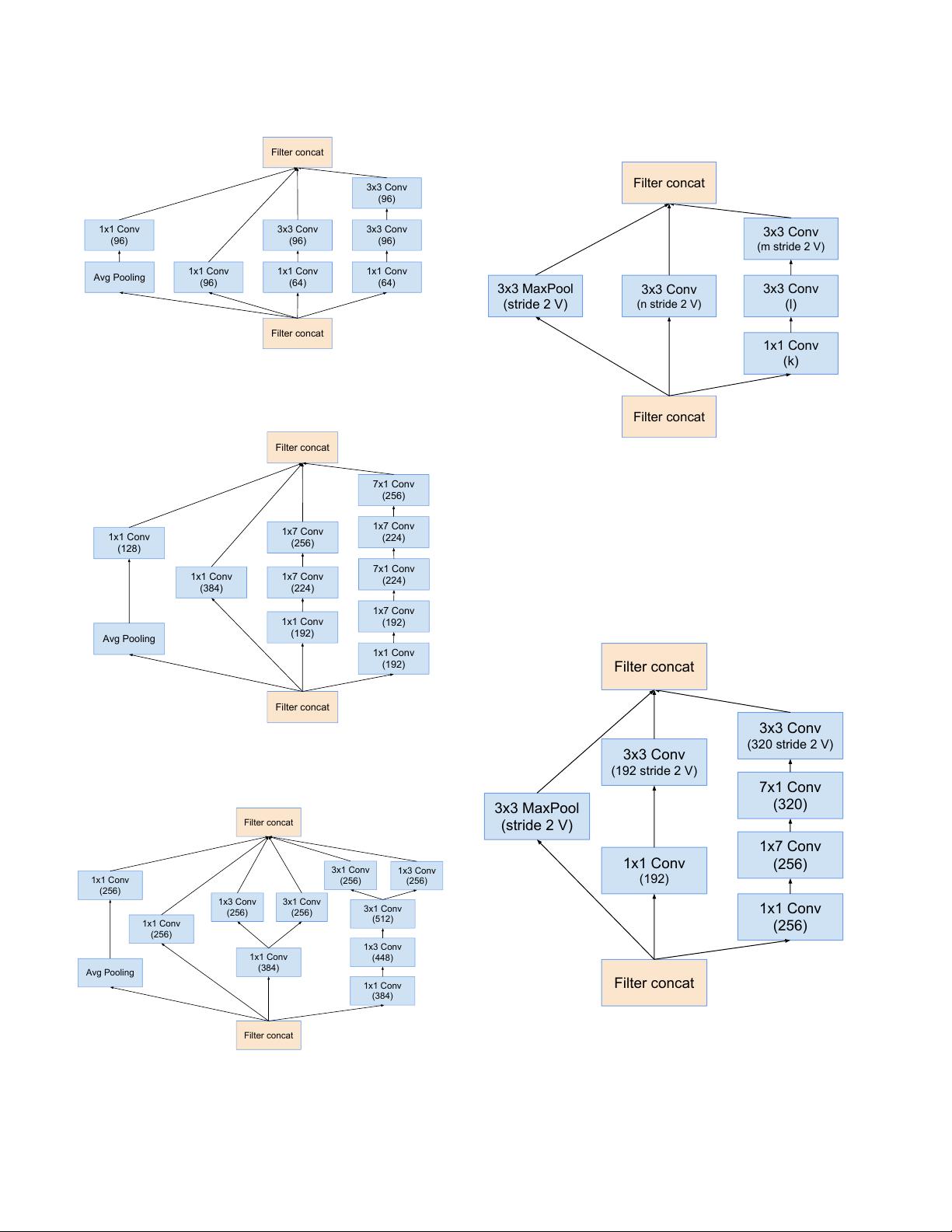

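The GoogLeNet series of papers is an important milestone in computer vision and neural networks. These papers introduced innovative network architectures that substantially improved both accuracy and efficiency. The four papers present Inception-v1, Inception-v2, Inception-v3, and Inception-v4, and we discuss each in turn.

1. Inception-v1 (1409.4842, Going Deeper with Convolutions): the founding paper of the GoogLeNet series, proposed by Szegedy et al. in 2014. Its main innovation is the Inception module. The module runs 1x1, 3x3, and 5x5 convolutions and a 3x3 max-pooling branch in parallel and concatenates their outputs, so the network processes features at several scales at once. 1x1 convolutions placed in front of the 3x3 and 5x5 convolutions reduce the number of channels. This lets the network grow deeper and wider while keeping the computational budget roughly constant. GoogLeNet is a 22-layer network built from these modules. It won the classification task of the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2014 while using far fewer parameters than earlier winners such as AlexNet, which also reduces the risk of overfitting.
2. Inception-v2 (1502.03167, Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift): building on its predecessor, this paper by Ioffe and Szegedy introduces batch normalization. Batch normalization normalizes each layer's inputs with the mean and variance of the current mini-batch, then applies a learned scale and shift. The authors motivate it as reducing internal covariate shift, which they define as the change in the distribution of a layer's inputs as earlier layers' parameters are updated during training. Keeping these distributions more stable allows much higher learning rates, speeds up training considerably, and acts as a regularizer that improves generalization. The paper also revises the Inception module. For example, each 5x5 convolution is replaced with two stacked 3x3 convolutions, which further improves accuracy.
3. Inception-v3 (1512.00567, Rethinking the Inception Architecture for Computer Vision): continues to optimize the Inception module with finer-grained design choices. Large convolutions are factorized into smaller ones. For example, a 3x3 convolution is replaced by a 1x3 convolution followed by a 3x1 convolution, which reduces computation while keeping the same receptive field. The paper also introduces label-smoothing regularization and a more efficient way to reduce the feature-map grid size. Together these changes make Inception-v3 more accurate while remaining efficient.
4. Inception-v4 (1602.07261, Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning): presents Inception-v4, a more uniform pure Inception architecture. It also presents the hybrid Inception-ResNet networks, which combine Inception modules with ResNet-style residual connections. Residual connections pass information directly across layers and ease gradient flow through deep networks. The paper reports that they significantly accelerate the training of Inception networks. It also reports that scaling down the residuals before adding them keeps networks with very many filters stable. Together, these designs let deeper and more complex models learn richer feature representations.

These four papers advanced deep learning model design and influenced later architectures such as Xception. The series kept refining the Inception module and adopting new training techniques, producing stronger and more efficient solutions for computer vision. In practice, these models are widely used for image classification, object detection, semantic segmentation, and other tasks.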
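The arithmetic below makes the 1x1 "reduction" idea of Inception-v1 concrete. It counts multiply-accumulate operations (MACs) for a single 5x5 branch, with and without a 1x1 convolution in front of it. It is a back-of-the-envelope sketch, not code from the paper, and it assumes stride-1, same-padded convolutions with biases ignored. The shapes come from the inception (3a) row of the GoogLeNet architecture table: a 28x28x192 input, 16 reduction channels, 32 output channels, and branch widths of 64/128/32/32. The function names are hypothetical.

```python
# Back-of-the-envelope MAC counts for one Inception module (sketch, not the paper's code).
# Assumed shapes: inception (3a) in GoogLeNet, 28x28 grid, 192 input channels.

def conv_macs(h: int, w: int, c_in: int, c_out: int, k: int) -> int:
    """Multiply-accumulates of a stride-1, same-padded k x k convolution (no bias)."""
    return h * w * c_in * c_out * k * k


def five_by_five_direct(h: int, w: int, c_in: int, c_out: int) -> int:
    """5x5 convolution applied directly to the full-depth input."""
    return conv_macs(h, w, c_in, c_out, 5)


def five_by_five_reduced(h: int, w: int, c_in: int, c_reduce: int, c_out: int) -> int:
    """1x1 convolution shrinks the depth first, then the 5x5 runs on the thin tensor."""
    return conv_macs(h, w, c_in, c_reduce, 1) + conv_macs(h, w, c_reduce, c_out, 5)


direct = five_by_five_direct(28, 28, 192, 32)
reduced = five_by_five_reduced(28, 28, 192, 16, 32)
print(f"direct 5x5:           {direct:,} MACs")   # 120,422,400
print(f"1x1 reduce, then 5x5: {reduced:,} MACs")  # 12,443,648
print(f"savings factor:       {direct / reduced:.1f}x")  # 9.7x

# The module's output depth is the sum of its branch widths, because the
# parallel branch outputs are concatenated along the channel axis.
branch_channels = {"1x1": 64, "3x3": 128, "5x5": 32, "pool_proj": 32}
print("concatenated output channels:", sum(branch_channels.values()))  # 256
```

Under these assumptions, the reduced path needs roughly a tenth of the MACs of the direct 5x5 convolution. That saving is what lets the module stack wide multi-branch layers without the compute cost exploding.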
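The batch-normalization transform from Inception-v2 can be sketched for a single feature over one mini-batch. This minimal pure-Python version assumes training mode, meaning it uses statistics from the current batch and the biased variance. It also uses an illustrative eps of 1e-5. The function name and the toy batch are hypothetical, and inference-time use of running population statistics is not shown.

```python
import math


def batch_norm_forward(xs, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode batch norm for one feature across a mini-batch.

    1. compute the mini-batch mean and (biased) variance,
    2. normalize each value to zero mean / unit variance,
    3. apply the learned scale gamma and shift beta.
    """
    m = len(xs)
    mu = sum(xs) / m
    var = sum((x - mu) ** 2 for x in xs) / m
    x_hat = [(x - mu) / math.sqrt(var + eps) for x in xs]
    return [gamma * xh + beta for xh in x_hat]


batch = [2.0, 4.0, 6.0, 8.0]  # toy activations of one feature
out = batch_norm_forward(batch, gamma=2.0, beta=1.0)
print([round(v, 3) for v in out])  # [-1.683, 0.106, 1.894, 3.683]
```

After the transform, the batch has mean beta and variance close to gamma squared. Because gamma and beta are learned, the network can recover the identity transform if normalization turns out not to help a given layer.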
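Counting weights shows where the savings from Inception-v3's factorized convolutions come from. The sketch below assumes stride-1 convolutions and the same number of channels at every layer (a hypothetical 64), so cost per output position is proportional to kernel area. It also checks that each factorized stack keeps the receptive field of the convolution it replaces. The helper names are illustrative only.

```python
C = 64  # hypothetical channel count, identical for every layer in the stack


def stacked_cost(kernels, channels=C):
    """Weights (= MACs per output position) of stacked stride-1 convs with equal depth."""
    return sum(kh * kw * channels * channels for kh, kw in kernels)


def stacked_receptive_field(kernels):
    """(height, width) receptive field of stacked stride-1 convolutions."""
    return (1 + sum(kh - 1 for kh, _ in kernels),
            1 + sum(kw - 1 for _, kw in kernels))


designs = {
    "5x5": [(5, 5)],
    "3x3 -> 3x3": [(3, 3), (3, 3)],
    "3x3": [(3, 3)],
    "1x3 -> 3x1": [(1, 3), (3, 1)],
}
for name, ks in designs.items():
    print(f"{name:11s} receptive field={stacked_receptive_field(ks)} cost={stacked_cost(ks):,}")

ratio_5x5 = stacked_cost(designs["3x3 -> 3x3"]) / stacked_cost(designs["5x5"])
ratio_3x3 = stacked_cost(designs["1x3 -> 3x1"]) / stacked_cost(designs["3x3"])
print(f"two 3x3 / one 5x5: {ratio_5x5:.2f}")  # 0.72
print(f"1x3+3x1 / one 3x3: {ratio_3x3:.2f}")  # 0.67
```

Under these assumptions, two 3x3 layers cost 18/25 of a 5x5 layer, about 28% cheaper. A 1x3 followed by a 3x1 costs 6/9 of a 3x3, about 33% cheaper. Both stacks keep the original receptive field.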
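Label smoothing is one of the regularizers introduced with Inception-v3. It replaces the one-hot target with a mixture of the one-hot vector and a uniform distribution over the K classes: q'(k) = (1 - eps) * [k == y] + eps / K. The paper uses eps = 0.1 on ImageNet. The sketch below uses hypothetical helper names and a toy 5-class example. With a smoothed target, the cross-entropy stops falling as the correct logit grows, which discourages over-confident predictions.

```python
import math


def smooth_labels(target, num_classes, eps=0.1):
    """Smoothed target: (1 - eps) * one_hot(target) + eps / num_classes."""
    return [(1.0 - eps) * (k == target) + eps / num_classes for k in range(num_classes)]


def cross_entropy(q, logits):
    """H(q, softmax(logits)), computed with a numerically stable log-sum-exp."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(qk * (z - log_z) for qk, z in zip(q, logits))


def logits_with_gap(gap, target=2, num_classes=5):
    """Hypothetical logits: the target class leads every other class by `gap`."""
    return [gap if k == target else 0.0 for k in range(num_classes)]


one_hot = smooth_labels(target=2, num_classes=5, eps=0.0)
q = smooth_labels(target=2, num_classes=5, eps=0.1)
print([round(v, 3) for v in q])  # [0.02, 0.02, 0.92, 0.02, 0.02]

for gap in (2.0, 5.0, 20.0):
    z = logits_with_gap(gap)
    # One-hot loss keeps shrinking as the gap grows; smoothed loss starts rising again.
    print(f"gap={gap:>4}: one-hot CE={cross_entropy(one_hot, z):.4f}  "
          f"smoothed CE={cross_entropy(q, z):.4f}")
```

With these toy numbers, the smoothed loss is smallest at a finite logit gap (near 3.8). The one-hot loss only approaches zero as the gap goes to infinity.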
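In Inception-ResNet, the residual connection adds the output of an Inception branch F back to its input: y = x + s * F(x). The Inception-v4 paper reports that scaling the residual by a small factor s (roughly 0.1 to 0.3) before the addition stabilizes training when the number of filters is very large. This elementwise sketch assumes feature maps flattened to plain lists and uses hypothetical toy branches in place of real convolutions. It also omits any activation function.

```python
def scaled_residual(x, branch, scale=0.1):
    """Residual block output y = x + scale * F(x), computed elementwise.

    `branch` stands in for the Inception branch F; it must return a feature
    vector of the same length as x so the two can be added.
    """
    fx = branch(x)
    if len(fx) != len(x):
        raise ValueError("residual branch must preserve the feature size")
    return [xi + scale * fi for xi, fi in zip(x, fx)]


def zero_branch(v):
    """Hypothetical branch that contributes nothing: the block becomes the identity."""
    return [0.0] * len(v)


def toy_branch(v):
    """Hypothetical stand-in for a convolutional Inception branch."""
    return [3.0 * vi for vi in v]


x = [1.0, -2.0, 0.5]
print(scaled_residual(x, zero_branch))            # [1.0, -2.0, 0.5]
print(scaled_residual(x, toy_branch, scale=0.1))  # roughly [1.3, -2.6, 0.65]
```

When the branch outputs zeros, the block reduces to the identity. A deep stack of such blocks can therefore start close to an identity mapping and learn only corrections. The small scale keeps each correction from dominating early in training.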

GoogLeNet.rar (4 files)

- 1512.00567-GoogLeNet-V3-RethinkingtheInceptionArchitectureforComputerVision.pdf (505KB)
- 1602.07261-GoogLeNet-Inception-v4,Inception-ResNetand theImpactofResidualConnectionsonLearning.pdf (935KB)
- 1502.03167-GoogLeNet-inception-V2-Batch Normalization Accelerating Deep Network Training by Reducing Internal Covariate Shift.pdf (169KB)
- 1409.4842-GoogLeNet-inception-V1-Going deeper with convolutions.pdf (1.14MB)

