


MetaPruning: Meta Learning for Automatic Neural Network Channel Pruning

Zechun Liu¹, Haoyuan Mu², Xiangyu Zhang³, Zichao Guo³, Xin Yang⁴, Tim Kwang-Ting Cheng¹, Jian Sun³

¹Hong Kong University of Science and Technology
²Tsinghua University
³Megvii Technology
⁴Huazhong University of Science and Technology

Abstract

In this paper, we propose a novel meta learning approach for automatic channel pruning of very deep neural networks. We first train a PruningNet, a kind of meta network, which is able to generate weight parameters for any pruned structure given the target network. We use a simple stochastic structure sampling method for training the PruningNet. Then, we apply an evolutionary procedure to search for good-performing pruned networks. The search is highly efficient because the weights are directly generated by the trained PruningNet and we do not need any finetuning at search time. With a single PruningNet trained for the target network, we can search for various Pruned Networks under different constraints with little human participation. Compared to state-of-the-art pruning methods, we demonstrate superior performance on MobileNet V1/V2 and ResNet. Code is available at https://github.com/liuzechun/MetaPruning.

1. Introduction

Channel pruning has been recognized as an effective neural network compression/acceleration method [32, 22, 2, 3, 21, 52] and is widely used in industry. A typical pruning approach contains three stages: training a large over-parameterized network, pruning the less-important weights or channels, and finetuning or re-training the pruned network. The second stage is the key. It usually performs iterative layer-wise pruning and fast finetuning or weight reconstruction to retain the accuracy [17, 1, 33, 41].
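To make the second stage concrete, the sketch below ranks the output channels of one layer by the L1 norm of their filters and keeps the top k. The L1-norm criterion is only one common importance heuristic chosen for illustration; it is not the criterion of any specific method cited above, and the function names are hypothetical.

```python
# Hypothetical illustration of "prune the less-important channels":
# score each output channel by the L1 norm of its filter, keep the top-k.

def l1_channel_scores(weight):
    """weight: one flattened filter (list of floats) per output channel."""
    return [sum(abs(w) for w in filt) for filt in weight]


def keep_top_k_channels(weight, k):
    """Return the kept channel indices (in original order) and the pruned weight."""
    scores = l1_channel_scores(weight)
    ranked = sorted(range(len(weight)), key=lambda i: scores[i], reverse=True)
    kept = sorted(ranked[:k])
    return kept, [weight[i] for i in kept]


if __name__ == "__main__":
    # Four output channels, each with a 3-element flattened filter.
    w = [
        [0.1, -0.2, 0.05],  # L1 = 0.35
        [1.0, 0.5, -0.7],   # L1 = 2.2
        [0.0, 0.01, 0.0],   # L1 = 0.01
        [-0.4, 0.3, 0.6],   # L1 = 1.3
    ]
    kept, pruned = keep_top_k_channels(w, 2)
    print(kept)  # [1, 3]
```

In a real network, removing an output channel also removes the matching input channel of the next layer, which is why the pruned network usually needs finetuning or weight reconstruction afterwards.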

Conventional channel pruning methods mainly rely

on data-driven sparsity constraints [28, 35], or human-

designed policies [22, 32, 40, 25, 38, 2]. Recent AutoML-

style works automatically prune channels in an iterative

mode, based on a feedback loop [52] or reinforcement

learning [21]. Compared with the conventional pruning

This work is done when Zechun Liu and Haoyuan Mu are interns at

Megvii Technology.

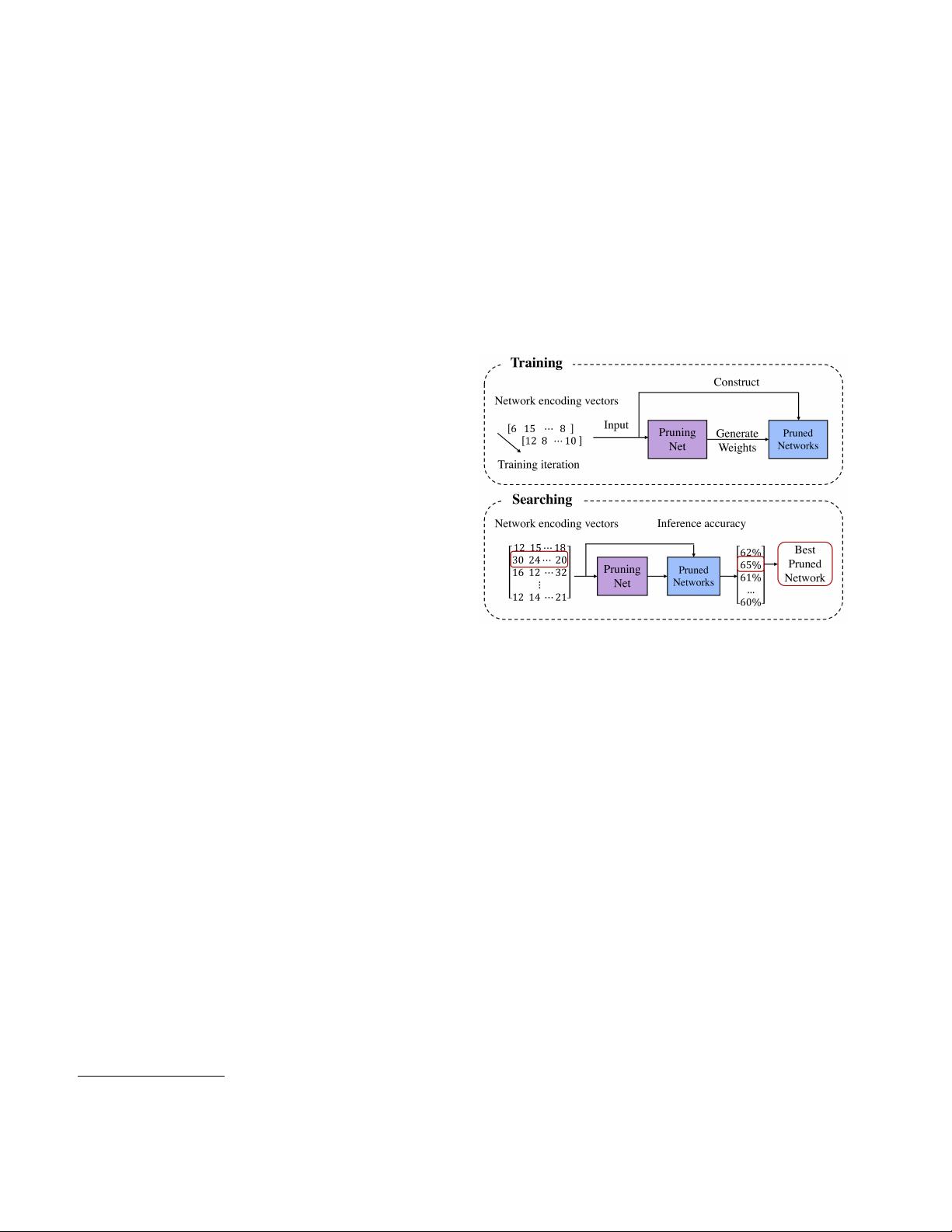

Figure 1. Our MetaPruning has two steps. 1) training a Prun-

ingNet. At each iteration, a network encoding vector (i.e., the

number of channels in each layer) is randomly generated. The

Pruned Network is constructed accordingly. The PruningNet takes

the network encoding vector as input and generates the weights

for the Pruned Network. 2) searching for the best Pruned Net-

work. We construct many Pruned Networks by varying network

encoding vector and evaluate their goodness on the validation data

with the weights predicted by the PruningNet. No finetuning or

re-training is needed at search time.

methods, the AutoML methods save human efforts and can

optimize the direct metrics like the hardware latency.
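The random structure generation in step 1 of Figure 1 can be sketched as follows. This is a minimal illustration assuming a hypothetical 4-layer plain convolution chain and a hypothetical grid of keep-ratios; the real layer widths, sampling ranges and the PruningNet itself are not shown here.

```python
import random

# Hypothetical settings: unpruned widths of a 4-layer conv chain and the
# allowed keep-ratios per layer (both are assumptions for this sketch).
MAX_CHANNELS = [32, 64, 128, 256]
RATIOS = [0.25, 0.5, 0.75, 1.0]
IN_CHANNELS = 3  # input image channels


def sample_encoding(rng):
    """Draw one network encoding vector: a channel count for every layer."""
    return [max(1, int(round(c * rng.choice(RATIOS)))) for c in MAX_CHANNELS]


def pruned_weight_shapes(encoding, k=3):
    """Conv weight shapes (c_out, c_in, k, k) of the Pruned Network built from
    an encoding vector; each layer's c_in is the previous layer's c_out."""
    shapes, c_in = [], IN_CHANNELS
    for c_out in encoding:
        shapes.append((c_out, c_in, k, k))
        c_in = c_out
    return shapes


if __name__ == "__main__":
    rng = random.Random(0)
    for step in range(3):
        enc = sample_encoding(rng)  # a new random structure every iteration
        # Here the PruningNet would take `enc` as input and generate weights
        # with these shapes; this sketch only prints the sampled structure.
        print(step, enc, pruned_weight_shapes(enc))
```

Because a fresh structure is drawn at every iteration, the weight generator is exposed to many pruned structures over training rather than to one fixed architecture.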

Apart from the idea of keeping the important weights in the pruned network, a recent study [36] finds that the pruned network can achieve the same accuracy regardless of whether it inherits the weights of the original network. This finding suggests that the essence of channel pruning is finding a good pruning structure, i.e., the layer-wise channel numbers.

arXiv:1903.10258v3 [cs.CV] 14 Aug 2019

However, exhaustively finding the optimal pruning structure is computationally prohibitive. Consider a network with 10 layers, each containing 32 channels: the number of possible combinations of layer-wise channel numbers is $32^{10}$. Inspired by recent Neural Architecture Search (NAS), specifically the One-Shot model [5], as well as the weight prediction mechanism in HyperNetwork [15], we propose to train a PruningNet that can generate weights for all candidate pruned network structures, so that we can search for good-performing structures simply by evaluating their accuracy on the validation data, which is highly efficient.
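The size of the search space in the 10-layer example can be checked directly. The one-second-per-evaluation figure below is a hypothetical assumption used only to give a sense of scale; in practice each candidate would also need training or finetuning.

```python
# Search-space size for the example in the text: 10 layers, each of which
# may keep anywhere from 1 to 32 channels (32 choices per layer).
num_layers = 10
choices_per_layer = 32

search_space = choices_per_layer ** num_layers
print(search_space)             # 1125899906842624
print(search_space == 2 ** 50)  # True, since 32 = 2**5

# Hypothetical cost model: evaluating one candidate takes 1 second.
seconds_per_year = 365 * 24 * 3600
years = search_space / seconds_per_year
print(f"{years:.3g} years")     # 3.57e+07 years
```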

To train the PruningNet, we use stochastic structure sampling. As shown in Figure 1, the PruningNet generates the weights for pruned networks given the corresponding network encoding vectors, i.e., the number of channels in each layer. By stochastically feeding in different network encoding vectors, the PruningNet gradually learns to generate weights for various pruned structures. After training, we search for good-performing Pruned Networks with an evolutionary search method, which can flexibly incorporate various constraints such as computation FLOPs or hardware latency. Moreover, by directly searching for the best pruned network via determining the channels for each layer or each stage, we can prune channels in the shortcut without extra effort, which is seldom addressed in previous channel pruning solutions. We name the proposed method MetaPruning.
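A minimal sketch of an evolutionary search over encoding vectors under a FLOPs budget follows. Several pieces are assumptions: the 4-layer conv chain and its sizes, the mutation and crossover operators, rejecting over-budget candidates as the way to enforce the constraint, and especially `proxy_score`, which merely stands in for the validation accuracy that MetaPruning obtains with PruningNet-generated weights.

```python
import math
import random

# Hypothetical 4-layer conv chain: unpruned widths, allowed keep-ratios,
# 3x3 kernels on a fixed 32x32 feature map (all assumptions).
MAX_CHANNELS = [32, 64, 128, 256]
RATIOS = [0.25, 0.5, 0.75, 1.0]
IN_CHANNELS, K, HW = 3, 3, 32 * 32


def flops(enc):
    """Multiply-accumulate count (commonly reported as FLOPs) of the chain."""
    total, c_in = 0, IN_CHANNELS
    for c_out in enc:
        total += c_in * c_out * K * K * HW
        c_in = c_out
    return total


def proxy_score(enc):
    """Stand-in for validation accuracy with PruningNet-generated weights."""
    return sum(math.log(c) for c in enc)


def random_enc(rng):
    return [int(c * rng.choice(RATIOS)) for c in MAX_CHANNELS]


def mutate(enc, rng, prob=0.25):
    """Resample each layer's channel count with probability `prob`."""
    return [int(c * rng.choice(RATIOS)) if rng.random() < prob else e
            for e, c in zip(enc, MAX_CHANNELS)]


def crossover(a, b, rng):
    """Take each layer's channel count from one of the two parents."""
    return [rng.choice(pair) for pair in zip(a, b)]


def evolutionary_search(budget, rng, pop_size=20, top_k=5, generations=10):
    """Keep the top-k encodings each generation and refill the population with
    mutated or crossed-over children; over-budget candidates are rejected."""
    def sample_valid(make):
        for _ in range(1000):
            cand = make()
            if flops(cand) <= budget:
                return cand
        raise RuntimeError("could not satisfy the FLOPs budget")

    population = [sample_valid(lambda: random_enc(rng)) for _ in range(pop_size)]
    for _ in range(generations):
        parents = sorted(population, key=proxy_score, reverse=True)[:top_k]
        children = []
        while len(children) < pop_size - top_k:
            if rng.random() < 0.5:
                children.append(sample_valid(lambda: mutate(rng.choice(parents), rng)))
            else:
                children.append(sample_valid(
                    lambda: crossover(rng.choice(parents), rng.choice(parents), rng)))
        population = parents + children
    return max(population, key=proxy_score)


if __name__ == "__main__":
    full = flops(MAX_CHANNELS)
    best = evolutionary_search(budget=full // 2, rng=random.Random(0))
    print(best, flops(best) / full)
```

Because the constraint is checked on every candidate, switching from FLOPs to a latency budget only requires replacing the `flops` check; no weights are trained inside the loop, which is what makes this kind of search cheap.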

We apply our approach to MobileNets [24, 46] and ResNet [19]. At the same FLOPs, our accuracy is 2.2%-6.6% higher than MobileNet V1, 0.7%-3.7% higher than MobileNet V2, and 0.6%-1.4% higher than ResNet-50. At the same latency, our accuracy is 2.1%-9.0% higher than MobileNet V1 and 1.2%-9.9% higher than MobileNet V2. Compared with state-of-the-art channel pruning methods [21, 52], MetaPruning also produces superior results.

Our contributions are fourfold:

• We propose a meta learning approach, MetaPruning, for channel pruning. The core of this approach is learning a meta network (named PruningNet) that generates weights for various pruned structures. With a single trained PruningNet, we can search for various pruned networks under different constraints.

• Compared to conventional pruning methods, MetaPruning frees humans from cumbersome hyperparameter tuning and enables direct optimization of the desired metrics.

• Compared to other AutoML methods, MetaPruning can easily enforce constraints in the search for desired structures, without manually tuning reinforcement learning hyperparameters.

• Our meta learning approach can effortlessly prune the channels in the shortcuts of ResNet-like structures, which is non-trivial because the channels in a shortcut affect more than one layer.
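Why shortcut channels "affect more than one layer" can be seen by listing which conv layers change shape when one stage's shortcut width changes. The sketch assumes a hypothetical bottleneck-ResNet encoding (one shared shortcut width per stage plus one mid width per block) and a projection shortcut in the first block of every stage; it illustrates the coupling and is not MetaPruning's actual encoding.

```python
def bottleneck_shapes(stage_encodings, in_channels):
    """Map each conv layer name to (c_out, c_in) for a hypothetical bottleneck
    ResNet. stage_encodings: list of (shortcut_width, [mid_width per block])."""
    shapes, c_in = {}, in_channels
    for s, (shortcut, mids) in enumerate(stage_encodings):
        for b, mid in enumerate(mids):
            name = f"stage{s}.block{b}"
            shapes[name + ".conv1"] = (mid, c_in)      # 1x1 reduce
            shapes[name + ".conv2"] = (mid, mid)       # 3x3
            shapes[name + ".conv3"] = (shortcut, mid)  # 1x1 expand, summed with the shortcut
            if b == 0:
                shapes[name + ".proj"] = (shortcut, c_in)  # projection shortcut (assumed)
            c_in = shortcut  # the residual sum fixes every block's output width
    return shapes


def layers_affected(stage_encodings, in_channels, stage, new_shortcut):
    """Names of conv layers whose shape changes when one stage's shortcut
    width changes, with every other entry of the encoding held fixed."""
    before = bottleneck_shapes(stage_encodings, in_channels)
    changed = [list(e) for e in stage_encodings]
    changed[stage][0] = new_shortcut
    after = bottleneck_shapes(changed, in_channels)
    return sorted(n for n in before if before[n] != after[n])


if __name__ == "__main__":
    enc = [(256, [64, 64, 64]), (512, [128, 128])]
    for name in layers_affected(enc, 64, stage=0, new_shortcut=192):
        print(name)
```

A single shortcut-width entry here reshapes eight layers across two stages, which is why layer-by-layer pruning struggles with shortcuts, while an encoding that assigns one width per stage handles them as one decision.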

2. Related Works

There are extensive studies on compressing and accelerating neural networks, such as quantization [54, 43, 37, 23, 56, 57], pruning [22, 30, 16] and compact network design [24, 46, 55, 39, 29]. A comprehensive survey is provided in [47]. Here, we summarize the approaches most related to our work.

Pruning. Network pruning is a prevalent approach for removing redundancy in DNNs. In weight pruning, individual weights are pruned to compress the model size [30, 18, 16, 14]. However, weight pruning results in unstructured sparse filters, which can hardly be accelerated by general-purpose hardware. Recent works [25, 32, 40, 22, 38, 53] focus on channel pruning in CNNs, which removes entire weight filters instead of individual weights. Traditional channel pruning methods trim channels based on the importance of each channel, either in an iterative mode [22, 38] or by adding a data-driven sparsity constraint [28, 35]. In most traditional channel pruning methods, the compression ratio for each layer needs to be set manually based on human expertise or heuristics, which is time-consuming and prone to being trapped in sub-optimal solutions.
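The difference between unstructured weight pruning and channel pruning shows up in the shape of the result. In this toy example (the matrix values and helper names are hypothetical), weight pruning zeroes scattered entries but leaves a 4x4 matrix, whereas channel pruning drops whole output channels and leaves a smaller dense matrix that ordinary dense kernels can run faster.

```python
# Toy weight matrix of a 1x1 conv / fully-connected layer:
# rows are output channels, columns are input channels.
W = [
    [0.9, -0.1, 0.0, 0.4],
    [0.05, 0.02, -0.01, 0.03],
    [-0.7, 0.3, 0.8, -0.2],
    [0.1, 0.6, -0.05, 0.0],
]


def weight_prune(w, threshold):
    """Unstructured pruning: zero individual small weights; the shape is unchanged."""
    return [[x if abs(x) >= threshold else 0.0 for x in row] for row in w]


def channel_prune(w, keep_rows):
    """Structured (channel) pruning: drop whole output channels (rows)."""
    return [list(w[i]) for i in keep_rows]


def shape(w):
    return (len(w), len(w[0]))


def nonzeros(w):
    return sum(1 for row in w for x in row if x != 0.0)


sparse = weight_prune(W, 0.25)
dense = channel_prune(W, [0, 2])
print(shape(sparse), nonzeros(sparse))  # (4, 4) 6
print(shape(dense))                     # (2, 4)
```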

AutoML. Recently, AutoML methods [21, 52, 8, 12] take the real-time inference latency on multiple devices into account to iteratively prune channels in different layers of a network via reinforcement learning [21] or an automatic feedback loop [52]. Compared with traditional channel pruning methods, AutoML methods help to alleviate the manual effort of tuning the hyper-parameters in channel pruning. Our proposed MetaPruning also involves little human participation. Unlike previous AutoML pruning methods, which are carried out in a layer-wise pruning and finetuning loop, our method is motivated by recent findings [36], which suggest that instead of selecting “important” weights, the essence of channel pruning sometimes lies in identifying the best pruned network. From this perspective, we propose MetaPruning to directly find the optimal pruned network structures. Compared to previous AutoML pruning methods [21, 52], MetaPruning enjoys higher flexibility in precisely meeting the constraints and is able to prune the channels in the shortcut.

Meta Learning. Meta learning refers to learning from observing how different machine learning approaches perform on various learning tasks. Meta learning can be used in few/zero-shot learning [44, 13] and transfer learning [48]. A comprehensive overview of meta learning is provided in [31]. In this work, we are inspired by [15] to use meta learning for weight prediction. Weight prediction means that the weights of a neural network are predicted by another neural network rather than directly learned [15]. Recent works also apply meta learning to various tasks and achieve state-of-the-art results in detection [51], super-resolution with arbitrary magnification [27] and instance segmentation [26].
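Weight prediction can be illustrated with a toy hypernetwork: a single linear layer maps a normalized (c_out, c_in) encoding to a flattened weight matrix of maximum size, which is then cropped to the requested shape. The class name, sizes, single-layer design and cropping step are all assumptions for this sketch, and training is omitted; in a real setup the meta network's parameters would be learned through the loss of the network that uses the generated weights.

```python
import random

MAX_OUT, MAX_IN = 4, 3  # hypothetical maximum layer width


class ToyWeightGenerator:
    """Tiny hypernetwork: linear map from a normalized 2-d encoding
    (c_out, c_in) to a flattened MAX_OUT x MAX_IN matrix, cropped to c_out x c_in."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        n_out = MAX_OUT * MAX_IN
        # Parameters of the meta network (these, not W, would be trained).
        self.A = [[rng.gauss(0.0, 0.1) for _ in range(2)] for _ in range(n_out)]
        self.bias = [rng.gauss(0.0, 0.1) for _ in range(n_out)]

    def generate(self, c_out, c_in):
        if not (1 <= c_out <= MAX_OUT and 1 <= c_in <= MAX_IN):
            raise ValueError("requested shape exceeds the maximum layer size")
        code = (c_out / MAX_OUT, c_in / MAX_IN)
        flat = [sum(a * x for a, x in zip(row, code)) + b
                for row, b in zip(self.A, self.bias)]
        full = [flat[r * MAX_IN:(r + 1) * MAX_IN] for r in range(MAX_OUT)]
        return [row[:c_in] for row in full[:c_out]]  # crop to the pruned shape


def linear(weight, x):
    """Apply a generated layer: y = W x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weight]


if __name__ == "__main__":
    gen = ToyWeightGenerator(seed=0)
    w_small = gen.generate(2, 3)  # weights for a pruned 2-output layer
    w_full = gen.generate(4, 3)   # weights for the unpruned layer
    print(linear(w_small, [1.0, 0.5, -1.0]))
```

Because the encoding is an input, the two calls yield different values for the same kept channels: the generated weights depend on the whole pruned structure, not just on which rows are kept.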

Neural Architecture Search. Studies on neural architecture search try to find the optimal network structures
