
Deep Sentence Embedding Using Long Short-Term Memory Networks: Analysis and Application to Information Retrieval

Hamid Palangi, Li Deng, Yelong Shen, Jianfeng Gao, Xiaodong He, Jianshu Chen, Xinying Song, Rabab Ward

Abstract—This paper develops a model that addresses

sentence embedding, a hot topic in current natural lan-

guage processing research, using recurrent neural networks

(RNN) with Long Short-Term Memory (LSTM) cells. The

proposed LSTM-RNN model sequentially takes each word

in a sentence, extracts its information, and embeds it into

a semantic vector. Due to its ability to capture long term

memory, the LSTM-RNN accumulates increasingly richer

information as it goes through the sentence, and when it

reaches the last word, the hidden layer of the network

provides a semantic representation of the whole sentence.

In this paper, the LSTM-RNN is trained in a weakly

supervised manner on user click-through data logged by a

commercial web search engine. Visualization and analysis

are performed to understand how the embedding process

works. The model is found to automatically attenuate the

unimportant words and detect the salient keywords in

the sentence. Furthermore, these detected keywords are

found to automatically activate different cells of the LSTM-

RNN, where words belonging to a similar topic activate the

same cell. As a semantic representation of the sentence,

the embedding vector can be used in many different

applications. These automatic keyword detection and topic

allocation abilities enabled by the LSTM-RNN allow the

network to perform document retrieval, a difficult language

processing task, where the similarity between the query and

documents can be measured by the distance between their

corresponding sentence embedding vectors computed by

the LSTM-RNN. On a web search task, the LSTM-RNN

embedding is shown to significantly outperform several

existing state-of-the-art methods. We emphasize that the

proposed model generates sentence embedding vectors that

are especially useful for web document retrieval tasks. A

comparison with a well-known general sentence embedding

method, the Paragraph Vector, is performed. The results

show that the method proposed in this paper significantly

outperforms it on the web document retrieval task.

Index Terms—Deep Learning, Long Short-Term Mem-

ory, Sentence Embedding.

I. INTRODUCTION

H. Palangi and R. Ward are with the Department of Electrical and

Computer Engineering, University of British Columbia, Vancouver,

BC, V6T 1Z4 Canada (e-mail: {hamidp,rababw}@ece.ubc.ca)

L. Deng, Y. Shen, J. Gao, X. He, J. Chen and X. Song are

with Microsoft Research, Redmond, WA 98052 USA (e-mail:

{deng,jfgao,xiaohe,yeshen,jianshuc,xinson}@microsoft.com)

LEARNING a good representation (or features) of

input data is an important task in machine learning.

In text and language processing, one such problem is

learning of an embedding vector for a sentence; that is, to

train a model that can automatically transform a sentence

to a vector that encodes the semantic meaning of the

sentence. While word embedding is learned using a

loss function defined on word pairs, sentence embedding

is learned using a loss function defined on sentence

pairs. In sentence embedding, the relationship

among words in the sentence, i.e., the context information,

is usually taken into consideration. Therefore, sentence embedding

is more suitable for tasks that require computing

semantic similarities between text strings. By mapping

texts into a unified semantic representation, the embed-

ding vector can be further used for different language

processing applications, such as machine translation [1],

sentiment analysis [2], and information retrieval [3].

In machine translation, the recurrent neural networks

(RNN) with Long Short-Term Memory (LSTM) cells, or

the LSTM-RNN, is used to encode an English sentence

into a vector, which contains the semantic meaning of

the input sentence, and then another LSTM-RNN is

used to generate a French (or another target language)

sentence from the vector. The model is trained to best

predict the output sentence. In [2], a paragraph vector

is learned in an unsupervised manner as a distributed

representation of sentences and documents, which are

then used for sentiment analysis. Sentence embedding

can also be applied to information retrieval, where the

contextual information is properly represented by the

vectors in the same space for fuzzy text matching [3].

In this paper, we propose to use an RNN to sequen-

tially accept each word in a sentence and recurrently map

it into a latent space together with the historical informa-

tion. As the RNN reaches the last word in the sentence,

the hidden activations form a natural embedding vector

for the contextual information of the sentence. We further

incorporate the LSTM cells into the RNN model (i.e. the

LSTM-RNN) to address the difficulty of learning long

term memory in RNN. The learning of such a model

arXiv:1502.06922v3 [cs.CL] 16 Jan 2016


is performed in a weakly supervised manner on the

click-through data logged by a commercial web search

engine. Although manually labelled data are scarce

in machine learning, logged data with limited feedback

signals are available in massive quantities from widely used

commercial web search engines. Limited feedback information

such as click-through data provides a weak

supervision signal that indicates the semantic similarity

between the text on the query side and the clicked text

on the document side. To exploit such a signal, the

objective of our training is to maximize the similarity

between the two vectors mapped by the LSTM-RNN

from the query and the clicked document, respectively.

Consequently, the learned embedding vectors of the

query and clicked document are specifically useful for

the web document retrieval task.

An important contribution of this paper is to analyse

the embedding process of the LSTM-RNN by visualizing

the internal activation behaviours in response to different

text inputs. We show that the embedding process of the

learned LSTM-RNN effectively detects the keywords,

while attenuating less important words, in the sentence

automatically by switching on and off the gates within

the LSTM-RNN cells. We further show that different

cells in the learned model indeed correspond to differ-

ent topics, and the keywords associated with a similar

topic activate the same cell unit in the model. As the

LSTM-RNN reads to the end of the sentence, the topic

activation accumulates and the hidden vector at the last

word encodes the rich contextual information of the

entire sentence. For this reason, a natural application

of sentence embedding is web search ranking, in which

the embedding vector from the query can be used to

match the embedding vectors of the candidate documents

according to the maximum cosine similarity rule. Evalu-

ated on a real web document ranking task, our proposed

method significantly outperforms many of the existing

state of the art methods in NDCG scores. Please note

that when we refer to document in the paper we mean

the title (headline) of the document.
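
The maximum cosine similarity rule mentioned above can be sketched in a few lines. This is only an illustration: the function names `cosine_similarity` and `rank_documents` are hypothetical, the toy vectors are made-up numbers rather than model outputs, and returning 0 for an all-zero vector is a convention chosen here.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention chosen here for all-zero vectors
    return dot / (norm_u * norm_v)


def rank_documents(query_vec, doc_vecs):
    """Return document indices ordered from most to least similar to the query."""
    scores = [cosine_similarity(query_vec, d) for d in doc_vecs]
    return sorted(range(len(doc_vecs)), key=lambda i: scores[i], reverse=True)


# Toy 3-dimensional embeddings (illustrative values only).
query = [1.0, 0.0, 1.0]
docs = [
    [0.0, 1.0, 0.0],  # orthogonal to the query
    [2.0, 0.0, 2.0],  # same direction as the query
    [1.0, 1.0, 0.0],  # partially aligned with the query
]
ranking = rank_documents(query, docs)
```

Because cosine similarity ignores vector length, the second document ranks first even though its norm differs from that of the query.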

II. RELATED WORK

Inspired by the word embedding method [4], [5], the

authors in [2] proposed an unsupervised learning method

to learn a paragraph vector as a distributed representation

of sentences and documents, which are then used for

sentiment analysis with superior performance. However,

the model is not designed to capture the fine-grained

sentence structure. In [6], an unsupervised sentence

embedding method is proposed with strong performance

on a large corpus of contiguous text, e.g., the

BookCorpus [7]. The main idea is to encode the sentence

s(t) and then decode previous and next sentences, i.e.,

s(t−1) and s(t+1), using two separate decoders. The en-

coder and decoders are RNNs with Gated Recurrent Unit

(GRU) [8]. However, this sentence embedding method

is not designed for the document retrieval task, where

supervision comes from queries and their clicked and unclicked

documents. In [9], a Semi-Supervised Recursive Autoencoder

(RAE) is proposed and used for sentiment

prediction. Similar to our proposed method, it does not

need any language specific sentiment parsers. A greedy

approximation method is proposed to construct a tree

structure for the input sentence. Because it assigns a vector per

word, it can become impractical for large

vocabularies. It works on both unlabeled data and

supervised sentiment data.

Similar to the recurrent models in this paper, the

DSSM [3] and CLSM [10] models, developed for information

retrieval, can also be interpreted as sentence

embedding methods. However, DSSM treats the input

sentence as a bag-of-words and does not model word

dependencies explicitly. CLSM treats a sentence as a bag

of n-grams, where n is defined by a window, and can

capture local word dependencies. Then a max-pooling

layer is used to form a global feature vector. Methods in

[11] are also convolution-based networks for Natural

Language Processing (NLP). These models, by design,

cannot capture long distance dependencies, i.e., dependencies

among words belonging to non-overlapping n-grams.

In [12] a Dynamic Convolutional Neural Network

(DCNN) is proposed for sentence embedding. Similar to

CLSM, DCNN does not rely on a parse tree and is easily

applicable to any language. However, different from

CLSM where a regular max-pooling is used, in DCNN a

dynamic k-max-pooling is used. This means that instead

of just keeping the largest entries among word vectors in

one vector, k largest entries are kept in k different vec-

tors. DCNN has shown good performance in sentiment

prediction and question type classification tasks. In [13],

a convolutional neural network architecture is proposed

for sentence matching. It has shown great performance in

several matching tasks. In [14], a Bilingually-constrained

Recursive Auto-encoder (BRAE) is proposed to create

semantic vector representations for phrases. Experiments

show that the proposed method performs well

in two end-to-end SMT tasks.
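
The difference between regular max-pooling and k-max-pooling described above can be made concrete with a small sketch. It assumes that the k kept entries of each feature dimension retain their original order, and it uses a fixed k, whereas in DCNN k is chosen dynamically. The function names `max_pool` and `k_max_pool` and the toy feature values are hypothetical.

```python
def max_pool(feature_rows):
    """Regular max-pooling: keep the largest value of each feature dimension."""
    return [max(column) for column in zip(*feature_rows)]


def k_max_pool(feature_rows, k):
    """k-max-pooling: keep the k largest values of each dimension, in original order.

    Assumes k does not exceed the number of positions (rows).
    """
    pooled_columns = []
    for column in zip(*feature_rows):
        # Positions of the k largest values in this feature dimension.
        top = sorted(range(len(column)), key=lambda i: column[i], reverse=True)[:k]
        # Restore the original left-to-right order of the kept positions.
        pooled_columns.append([column[i] for i in sorted(top)])
    # Transpose so the result is k vectors instead of one.
    return [list(row) for row in zip(*pooled_columns)]


# Four positions (rows), two feature dimensions (columns); illustrative values.
features = [[1, 9], [5, 2], [3, 7], [4, 0]]
single_vector = max_pool(features)
k_vectors = k_max_pool(features, k=2)
```

With k = 1 the result reduces to a single vector holding the same values as regular max-pooling.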

Long short-term memory networks were developed

in [15] to address the difficulty of capturing long term

memory in RNN. They have been successfully applied to

speech recognition, achieving state-of-the-art performance

[16], [17]. In text analysis, LSTM-RNN treats a

sentence as a sequence of words with internal structures,

i.e., word dependencies. It encodes a semantic vector of

a sentence incrementally which differs from DSSM and

CLSM. The encoding process is performed left-to-right,

word-by-word. At each time step, a new word is encoded


into the semantic vector, and the word dependencies

embedded in the vector are “updated”. When the process

reaches the end of the sentence, the semantic vector has

embedded all the words and their dependencies, hence,

can be viewed as a feature vector representation of the

whole sentence. In the machine translation work [1], an

input English sentence is converted into a vector repre-

sentation using LSTM-RNN, and then another LSTM-

RNN is used to generate an output French sentence.

The model is trained to maximize the probability of

predicting the correct output sentence. In [18], there are

two main composition models: the ADD model, which is a bag

of words, and the BI model, which is a summation over bi-gram

pairs plus a non-linearity. In our proposed model, instead

of simple summation, we use an LSTM model with

letter tri-grams, which keeps valuable information over

long intervals (for long sentences) and throws away useless

information. In [19], an encoder-decoder approach is

proposed to jointly learn to align and translate sentences

from English to French using RNNs. The concept of

“attention” in the decoder, discussed in this paper, is

closely related to how our proposed model extracts

keywords in the document side. For further explanations

please see section V-A2. In [20] a set of visualizations

is presented for RNNs with and without LSTM cells

and GRUs. Different from our work where the target task

is sentence embedding for document retrieval, the target

tasks in [20] were character level sequence modelling for

text characters and source codes. Interesting observations

about interpretability of some LSTM cells and statistics

of gates activations are presented. In section V-A we

show that some of the results of our visualization are

consistent with the observations reported in [20]. We

also present more detailed visualization specific to the

document retrieval task using click-through data. We also

present visualizations about how our proposed model can

be used for keyword detection.

Different from the aforementioned studies, the method

developed in this paper trains the model so that sentences

that are paraphrases of each other are close in their

semantic embedding vectors; see the description in

Sec. IV further ahead. Another reason that LSTM-RNN

is particularly effective for sentence embedding is its

robustness to noise. For example, in the web document

ranking task, the noise comes from two sources: (i) Not

every word in query / document is equally important,

and we only want to “remember” salient words using

the limited “memory”. (ii) A word or phrase that is

important to a document may not be relevant to a

given query, and we only want to “remember” related

words that are useful to compute the relevance of the

document for a given query. We will illustrate robustness

of LSTM-RNN in this paper. The structure of LSTM-

RNN will also circumvent the serious limitation of using

a fixed window size in CLSM. Our experiments show

that this difference leads to significantly better results in

the web document retrieval task. Furthermore, it has other

advantages. It allows us to capture keywords and key

topics effectively. The models in this paper also do not

need the extra max-pooling layer, as required by the

CLSM, to capture global contextual information and they

do so more effectively.

III. SENTENCE EMBEDDING USING RNNS WITH AND

WITHOUT LSTM CELLS

In this section, we introduce the model of recurrent

neural networks and its long short-term memory version

for learning the sentence embedding vectors. We start

with the basic RNN and then proceed to LSTM-RNN.

A. The basic version of RNN

The RNN is a type of deep neural network that

is “deep” in the temporal dimension, and it has been used

extensively in time sequence modelling [21], [22], [23],

[24], [25], [26], [27], [28], [29]. The main idea of using

RNN for sentence embedding is to find a dense and

low dimensional semantic representation by sequentially

and recurrently processing each word in a sentence and

mapping it into a low dimensional vector. In this model,

the global contextual features of the whole text will be

in the semantic representation of the last word in the

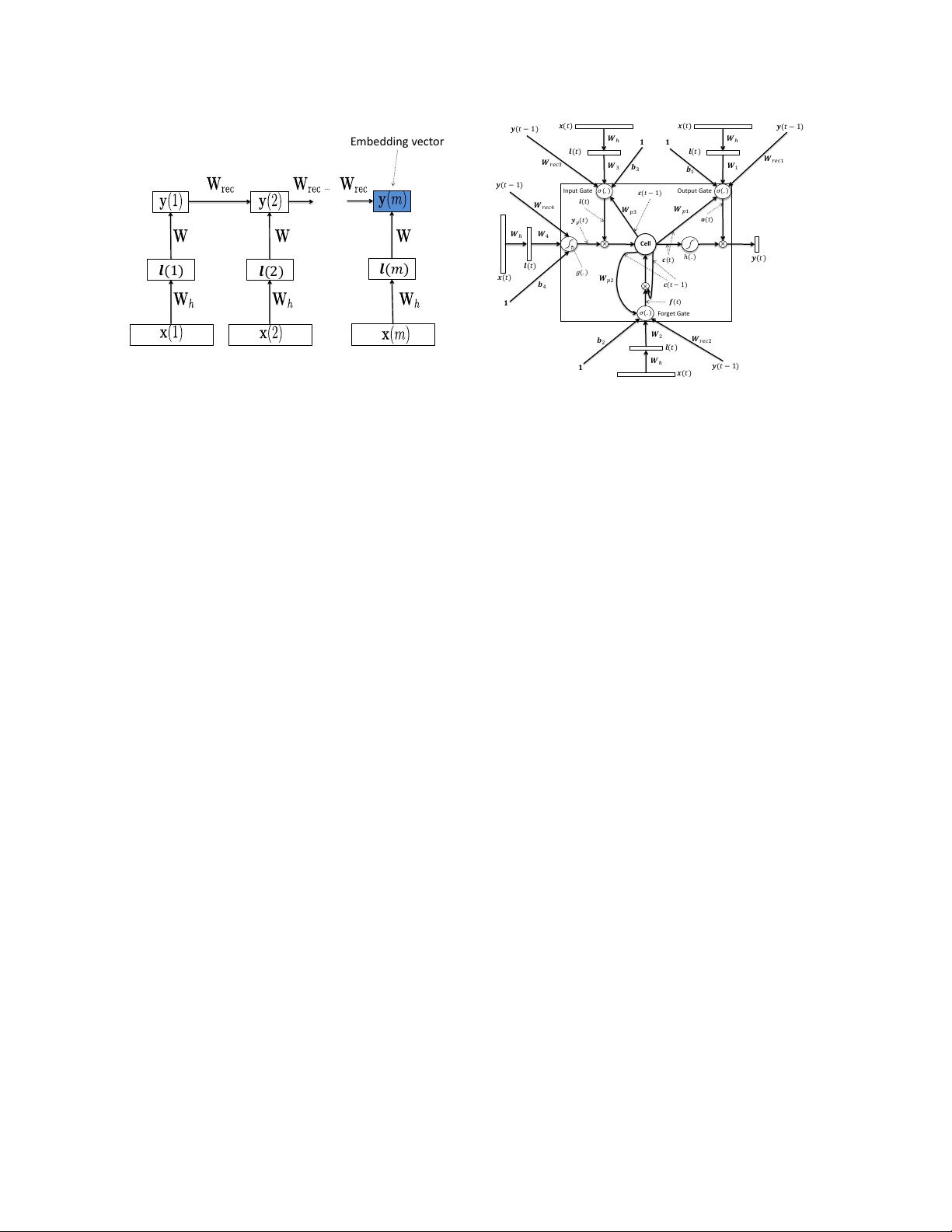

text sequence — see Figure 1, where x(t) is the t-th

word, coded as a 1-hot vector, W

h

is a fixed hashing

operator similar to the one used in [3] that converts the

word vector to a letter tri-gram vector, W is the input

weight matrix, W

rec

is the recurrent weight matrix, y(t)

is the hidden activation vector of the RNN, which can be

used as a semantic representation of the t-th word, and

y(t) associated to the last word x(m) is the semantic

representation vector of the entire sentence. Note that

this is very different from the approach in [3] where the

bag-of-words representation is used for the whole text

and no context information is used. This is also different

from [10] where the sliding window of a fixed size (akin

to an FIR filter) is used to capture local features and a

max-pooling layer on the top to capture global features.

In the RNN there is neither a fixed-sized window nor

a max-pooling layer; rather the recurrence is used to

capture the context information in the sequence (akin

to an IIR filter).

Fig. 1. The basic architecture of the RNN for sentence embedding, where temporal recurrence is used to model the contextual information across words in the text string. The hidden activation vector corresponding to the last word is the sentence embedding vector (blue).

The mathematical formulation of the above RNN model for sentence embedding can be expressed as

$$
\begin{aligned}
l(t) &= W_h\, x(t) \\
y(t) &= f\big(W\, l(t) + W_{\mathrm{rec}}\, y(t-1) + b\big)
\end{aligned}
\tag{1}
$$

where $W$ and $W_{\mathrm{rec}}$ are the input and recurrent matrices to be learned, $W_h$ is a fixed word hashing operator, $b$ is the bias vector and $f(\cdot)$ is assumed to be $\tanh(\cdot)$.

Note that the architecture proposed here for sentence

embedding is slightly different from traditional RNN in

that there is a word hashing layer that converts the high

dimensional input into a relatively lower dimensional

letter tri-gram representation. There is also no per word

supervision during training; instead, the whole sentence

has a label. This is explained in more detail in section

IV.
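
As a rough illustration of Eq. (1), the following sketch runs the recurrence over a short sentence. Several details are assumptions made only for this example: the word boundary mark `#`, building the tri-gram vocabulary from the toy sentence itself, ignoring unseen tri-grams, the zero initial state y(0), the random untrained weights, and all function names.

```python
import math
import random


def letter_trigrams(word):
    """Letter tri-grams of a word with boundary marks, e.g. 'cat' -> '#ca', 'cat', 'at#'."""
    marked = "#" + word + "#"
    return [marked[i:i + 3] for i in range(len(marked) - 2)]


def build_trigram_index(words):
    """Assign one vector position to every tri-gram seen in the given words."""
    index = {}
    for word in words:
        for tri in letter_trigrams(word):
            index.setdefault(tri, len(index))
    return index


def hash_word(word, index):
    """l(t) = W_h x(t): tri-gram count vector of a word (unseen tri-grams are ignored)."""
    l = [0.0] * len(index)
    for tri in letter_trigrams(word):
        if tri in index:
            l[index[tri]] += 1.0
    return l


def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def rnn_embed(words, index, W, W_rec, b):
    """Apply y(t) = tanh(W l(t) + W_rec y(t-1) + b) word by word and return y(m)."""
    y = [0.0] * len(b)  # y(0) is taken to be zero (an assumption of this sketch)
    for word in words:
        l = hash_word(word, index)
        pre = [a + r + bias for a, r, bias in zip(matvec(W, l), matvec(W_rec, y), b)]
        y = [math.tanh(p) for p in pre]
    return y


# Untrained random weights, used only to show the shapes involved.
rng = random.Random(0)
sentence = ["deep", "sentence", "embedding"]
index = build_trigram_index(sentence)
hidden = 4
W = [[rng.uniform(-0.1, 0.1) for _ in range(len(index))] for _ in range(hidden)]
W_rec = [[rng.uniform(-0.1, 0.1) for _ in range(hidden)] for _ in range(hidden)]
b = [0.0] * hidden
embedding = rnn_embed(sentence, index, W, W_rec, b)
```

The returned vector has one entry per hidden unit regardless of sentence length, which is what allows it to serve as a fixed-size sentence embedding.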

B. The RNN with LSTM cells

Although RNN performs the transformation from the

sentence to a vector in a principled manner, it is generally

difficult to learn the long term dependency within the

sequence due to the vanishing gradient problem. One of

the effective solutions for this problem in RNNs is

using memory cells instead of neurons, originally proposed

in [15] as Long Short-Term Memory (LSTM) and

completed in [30] and [31] by adding the forget gate and

peephole connections to the architecture.

We use the architecture of LSTM illustrated in Fig. 2 for the proposed sentence embedding method. In this figure, $i(t)$, $f(t)$, $o(t)$, $c(t)$ are the input gate, forget gate, output gate and cell state vector respectively, $W_{p1}$, $W_{p2}$ and $W_{p3}$ are peephole connections, $W_i$, $W_{\mathrm{rec}i}$ and $b_i$, $i = 1, 2, 3, 4$ are input connections, recurrent connections and bias values, respectively, $g(\cdot)$ and $h(\cdot)$ are the $\tanh(\cdot)$ function and $\sigma(\cdot)$ is the sigmoid function. We use this architecture to find $y$ for each word, then use the $y(m)$ corresponding to the last word in the sentence as the semantic vector for the entire sentence.

Fig. 2. The basic LSTM architecture used for sentence embedding.

Considering Fig. 2, the forward pass for the LSTM-RNN model is as follows:

$$
\begin{aligned}
y_g(t) &= g\big(W_4\, l(t) + W_{\mathrm{rec}4}\, y(t-1) + b_4\big) \\
i(t) &= \sigma\big(W_3\, l(t) + W_{\mathrm{rec}3}\, y(t-1) + W_{p3}\, c(t-1) + b_3\big) \\
f(t) &= \sigma\big(W_2\, l(t) + W_{\mathrm{rec}2}\, y(t-1) + W_{p2}\, c(t-1) + b_2\big) \\
c(t) &= f(t) \circ c(t-1) + i(t) \circ y_g(t) \\
o(t) &= \sigma\big(W_1\, l(t) + W_{\mathrm{rec}1}\, y(t-1) + W_{p1}\, c(t) + b_1\big) \\
y(t) &= o(t) \circ h\big(c(t)\big)
\end{aligned}
\tag{2}
$$

where ◦ denotes Hadamard (element-wise) product. A

diagram of the proposed model with more details is

presented in section VI of Supplementary Materials.
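
The forward pass of Eq. (2) can be sketched step by step as follows. This is a minimal illustration under stated assumptions: the peephole terms are written as full matrix-vector products (a diagonal matrix would be a special case), the initial y(0) and c(0) are zero, the weights are random and untrained, and the parameter dictionary keys and function names are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def add(*vectors):
    """Element-wise sum of several equal-length vectors."""
    return [sum(vals) for vals in zip(*vectors)]


def lstm_step(l, y_prev, c_prev, p):
    """One time step of Eq. (2); p maps parameter names to matrices or bias vectors."""
    y_g = [math.tanh(z) for z in add(
        matvec(p["W4"], l), matvec(p["Wrec4"], y_prev), p["b4"])]
    i = [sigmoid(z) for z in add(
        matvec(p["W3"], l), matvec(p["Wrec3"], y_prev), matvec(p["Wp3"], c_prev), p["b3"])]
    f = [sigmoid(z) for z in add(
        matvec(p["W2"], l), matvec(p["Wrec2"], y_prev), matvec(p["Wp2"], c_prev), p["b2"])]
    # New cell state: gated old state plus gated candidate input.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, y_g)]
    # The output gate uses the updated cell state c(t), unlike i(t) and f(t).
    o = [sigmoid(z) for z in add(
        matvec(p["W1"], l), matvec(p["Wrec1"], y_prev), matvec(p["Wp1"], c), p["b1"])]
    y = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return y, c


def lstm_embed(inputs, p, hidden):
    """Run the LSTM over a sequence of l(t) vectors and return y(m)."""
    y = [0.0] * hidden  # y(0) = 0 (assumption)
    c = [0.0] * hidden  # c(0) = 0 (assumption)
    for l in inputs:
        y, c = lstm_step(l, y, c, p)
    return y


def random_params(input_dim, hidden, seed=0):
    """Small random untrained parameters, for illustration only."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    p = {}
    for k in "1234":
        p["W" + k] = mat(hidden, input_dim)
        p["Wrec" + k] = mat(hidden, hidden)
        p["b" + k] = [0.0] * hidden
    for k in "123":
        p["Wp" + k] = mat(hidden, hidden)
    return p


params = random_params(input_dim=3, hidden=2)
inputs = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]  # stand-ins for l(1), l(2)
sentence_vector = lstm_embed(inputs, params, hidden=2)
```

Since y(t) is a sigmoid gate multiplied by a tanh of the cell state, every entry of the sentence vector lies strictly between -1 and 1.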

IV. LEARNING METHOD

To learn a good semantic representation of the input

sentence, our objective is to make the embedding vectors

for sentences of similar meaning as close as possible,

and meanwhile, to make sentences of different meanings

as far apart as possible. This is challenging in practice

since it is hard to collect a large amount of manually

labelled data that give the semantic similarity signal

between different sentences. Nevertheless, widely

used commercial web search engines are able to log

massive amounts of data with some limited user feedback

signals. For example, given a particular query, the click-

through information about the user-clicked document

among many candidates is usually recorded and can be

used as a weak (binary) supervision signal to indicate

the semantic similarity between two sentences (on the

query side and the document side). In this section, we

explain how to leverage such a weak supervision signal

to learn a sentence embedding vector that achieves the

aforementioned training objective. Please also note that

the above objective to make sentences with similar meaning

as close as possible is similar to machine translation
