
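Summary: This paper studies how to automatically evaluate whether the attributions (citations) in text generated by large language models (LLMs) are valid. It defines three attribution categories: attributable (fully supported), extrapolatory, and contradictory. It then proposes two automatic evaluation approaches: prompting LLMs and fine-tuning smaller models. Two test sets are built: AttrEval-Simulation, simulated from existing QA benchmarks, and AttrEval-GenSearch, built from real queries issued to the New Bing search engine. The results show that GPT-4 achieves relatively high accuracy in the real-world setting, but challenges remain. Intended audience: researchers and developers interested in natural language processing, particularly generative models and the verification of their trustworthiness. Use cases and goals: research projects or commercial products that need to automatically evaluate the trustworthiness of LLM-generated text, reducing the burden of human evaluation and improving evaluation efficiency. Other notes: the paper points out current limitations, such as the weaker performance of small models and data-quality issues, and suggests directions for improvement.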


Automatic Evaluation of Attribution by Large Language Models

Xiang Yue Boshi Wang Ziru Chen Kai Zhang Yu Su Huan Sun

The Ohio State University

{yue.149,wang.13930,chen.8336,zhang.13253,su.809,sun.397}@osu.edu

Abstract

A recent focus of large language model (LLM) development, as exemplified by generative search engines, is to incorporate external references to generate and support its claims. However, evaluating the attribution, i.e., verifying whether the generated statement is fully supported by the cited reference, remains an open problem. Although human evaluation is common practice, it is costly and time-consuming. In this paper, we investigate automatic evaluation of attribution given by LLMs. We begin by defining different types of attribution errors, and then explore two approaches for automatic evaluation: prompting LLMs and fine-tuning smaller LMs. The fine-tuning data is repurposed from related tasks such as question answering, fact-checking, natural language inference, and summarization. We manually curate a set of test examples covering 12 domains from a generative search engine, New Bing. Our results on this curated test set and simulated examples from existing benchmarks highlight both promising signals and challenges. We hope our problem formulation, testbeds, and findings will help lay the foundation for future studies on this important problem.¹

1 Introduction

Generative large language models (LLMs) (Brown et al., 2020; Ouyang et al., 2022; Chowdhery et al., 2022; OpenAI, 2023a,b, inter alia) often struggle with producing factually accurate statements, resulting in hallucinations (Ji et al., 2023). Recent efforts aim to alleviate this issue by augmenting LLMs with external tools (Schick et al., 2023) such as retrievers (Shuster et al., 2021; Borgeaud et al., 2022) and search engines (Nakano et al., 2021; Thoppilan et al., 2022; Shuster et al., 2022).

Incorporating external references for generation inherently implies that the generated statement is backed by these references. However, the validity of such attribution, i.e., whether the generated statement is fully supported by the cited reference, remains questionable.² According to Liu et al. (2023), only 52% of the statements generated by state-of-the-art generative search engines such as New Bing and PerplexityAI are fully supported by their respective cited references.³

¹ Our code and dataset are available at: https://github.com/OSU-NLP-Group/AttrScore

Inaccurate attribution compromises the trustworthiness of LLMs, introducing significant safety risks and potential harm. For instance, in healthcare, an LLM might attribute incorrect medical advice to a credible source, potentially leading users to make harmful health decisions. Similarly, in finance, faulty investment advice attributed to a reliable source may cause substantial financial losses.

To identify attribution errors, existing attributed LLMs (Nakano et al., 2021; Thoppilan et al., 2022) rely heavily on human evaluation, which is both expensive and time-consuming. For instance, the average cost of annotating a single (query, answer, reference) example is about $1 in Liu et al. (2023). In the actual use of attributed LLMs, it is the user who needs to be wary of the attribution and manually verify it, which puts a tremendous burden on their side. Therefore, effective and reliable methods to automatically evaluate attribution and identify potential attribution errors are highly desired.

Towards this goal, we take the first step by intro-

ducing AttrScore (Figure 1), a framework designed

for automatic evaluation of attribution and identi-

fication of specific types of attribution errors. We

propose a new problem formulation that catego-

rizes attribution into three types: 1) attributable:

the reference fully supports the generated state-

ment; 2) extrapolatory: the reference lacks suffi-

cient information to support the generated state-

2

Attribution primarily refers to “the act of attributing some-

thing” in this paper, which is similar to “verifiability” as de-

fined in Liu et al. (2023).

3

www.bing.com/new, www.perplexity.ai

arXiv:2305.06311v2 [cs.CL] 7 Oct 2023

Q: What is the temperature range on

the moon?

A: The average temperature on the

moon can range from -298 degrees F

(-183 degrees C) at night to 224 degrees

F (106 degrees C) during the day [1].

The temperature on the Moon

The average temperature on the Moon (at

the equator and mid latitudes) varies from

-298 degrees Fahrenheit (-183 degrees

Celsius), at night, to 224 degrees

Fahrenheit (106 degrees Celsius) during

the day. Because the Moon has no...

References

[1] coolcosmos.ipac.caltech.edu

Q: What is the highest price of a gallon

of regular gas in the US in 2022?

A: According to a news article by NPR,

as of June 2022, the highest average

price of a gallon of regular gas in the

US was $6.34 in California [1].

In 10 states, a gallon of gas now

costs more than $5

...U.S. gas prices hit a new record on

Monday, with a gallon of regular gas costing

an average of $4.865 (not adjusting for

inflation), according to the most recent data

from American Automobile Association...

References

[1] npr.org/states-highest-gas-prices

Q: What was the unemployment

rate in Germany in 2020?

A: According to [1], the unemployment

rate in Germany for 2020 was 4.31%,

which was a 1.17% increase from 2019.

It's important to note that the COVID...

Germany Unemployment Rate

1991-2023

Unemployment refers to the share of the

labor force that is without work but

available for and seeking...Germany

unemployment rate for 2020 was 3.81%,

a 0.67% increase from 2019.

References

[1] macrotrends.net/unemployment-rate

As an Attribution Validator, your task is to

verify whether a given a reference can support

the answer to a provided question. A contradictory

error means the answer contradicts the fact in the

attribution, while an extrapolatory error means that

there is not enough information in the attribution..

AttrScore

Attributable

Extrapolatory Contradictory

(1) Prompt LLMs with a clear

evaluation instruction

(2) Fine-tune LMs on a set of

diverse repurposed datasets

QA Fact-Checking

NLI Summarization

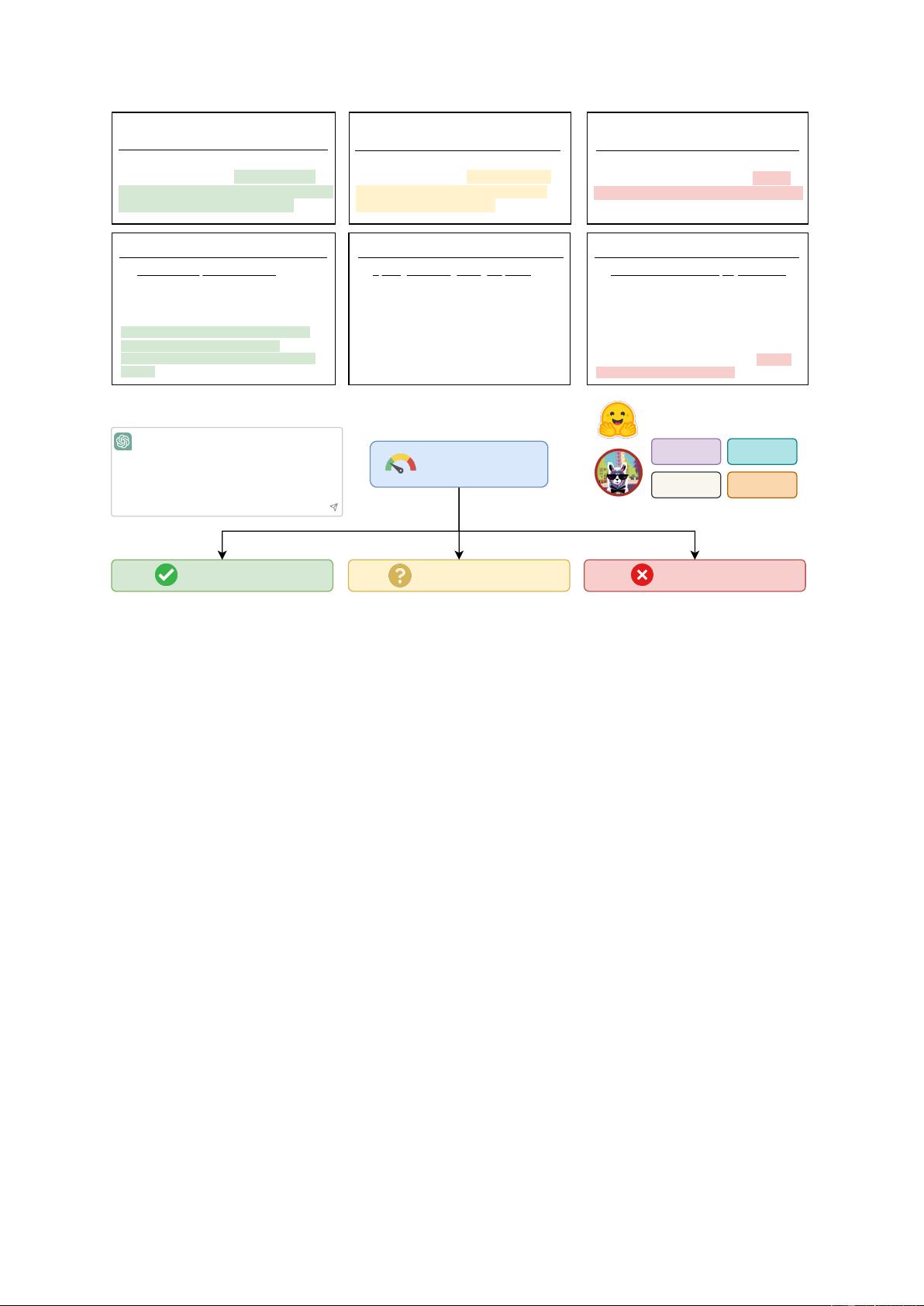

Figure 1: We make the first step towards automatically evaluating attribution and identifying specific types of

errors with AttrScore. We explore two approaches in AttrScore: (1) prompting LLMs, and (2) fine-tuning LMs on

simulated and repurposed datasets from related tasks.

ment, and 3) contradictory: the generated state-

ment directly contradicts the cited reference. Un-

like existing work (Bohnet et al., 2022) that uses

binary categorization (i.e., attributable or not) and

Liu et al. (2023) that defines the degree of refer-

ence support for the generated statement as “full”,

“partial”, or “no support”, our fine-grained error

categorization aids humans in better understanding

the type of an attribution error made by an LLM.

This not only enhances safe system usage but also

provides valuable insights for future development

of mechanisms tailored to correct specific errors.

We explore two approaches in AttrScore: 1) prompting LLMs and 2) fine-tuning LMs on simulated and repurposed data from related tasks such as question answering (QA), fact-checking, natural language inference (NLI), and summarization. For evaluation, unlike existing work (Liu et al., 2023; Gao et al., 2023) that only uses queries from existing benchmarks, we curate a set of test examples covering 12 different domains from a generative search engine, New Bing. This is the first evaluation set for measuring the attribution of LLMs with queries created based on real-life interactions, hence avoiding the data contamination issue.

Our results indicate that both approaches show reasonable performance on our curated and simulated test sets; yet there is still substantial room for further improvement. Major sources of evaluation failures include insensitivity to fine-grained information comparisons, such as overlooking contextual cues in the reference, disregard for numerical values, and failure in performing symbolic operations. In light of these findings, we discuss potential directions for improving AttrScore, including training models to be more strongly conditioned on the reference, and augmenting them with external tools for numerical and logical operations.

With the new formulation of attribution errors, the development of AttrScore, the introduction of new test sets, and the insights into challenges and potential directions for future work, we hope our work can help lay the foundation for the important task of automatically evaluating LLM attributions.

2 Problem Formulation

The primary task in this paper is to evaluate attribution, which involves verifying whether a reference provides sufficient support for a generated answer to a user's query. Our task setting prioritizes one reference per statement, a unit task into which more complex scenarios can be decomposed. We study this setting because it forms the basis for handling multiple references or distinct segments (Liu et al., 2023; Gao et al., 2023).

Prior work, such as Rashkin et al. (2021); Gao et al. (2022); Bohnet et al. (2022), mainly focuses on binary verification, i.e., determining if a reference supports the generated answer or not. We propose advancing this task by introducing a more fine-grained categorization. Specifically, we classify attributions into three distinct categories:⁴

• Attributable: The reference fully supports the generated answer.
• Extrapolatory: The reference lacks sufficient information to validate the generated answer.
• Contradictory: The generated answer contradicts the information presented in the reference.

To illustrate, consider a contradictory example (Figure 1). The query is “What was the unemployment rate in Germany in 2020?”, and the generated answer is “4.31%”. However, the reference states that the rate was “3.81%”, contradicting the generated answer. An extrapolatory instance, on the other hand, would be a query about the “gas price in California”. The reference is relevant, since it reports a national average of $4.865 per gallon. However, it does not contain the California-specific figure needed to verify the generated answer.

These examples show the importance of granularity in error classification. A fine-grained classification allows us to pinpoint the nature of an error, be it contradiction or extrapolation. Users can better understand the type of errors an LLM might make, enabling them to use the model more safely. Additionally, such an error identification system can guide future training of attributed LLMs, informing the development of mechanisms that correct such errors.

Our categorization also departs from the existing approach (Liu et al., 2023), which emphasizes the degree of support (“full”, “partial”, or “none”) rather than attribution error types. Our approach highlights specific issues in attribution evaluation for more effective error management and system improvement.

⁴ We acknowledge that while these categories are generally mutually exclusive, complex scenarios might blur the boundaries between them. However, such cases are very rare. For the purpose of this study, we maintain their exclusivity to enable clear and focused error analysis.

Formally, the task of attribution evaluation involves a natural language query $q$, a generated answer $a$, and a reference $x$ from an attributed LLM. The goal is to develop a function, denoted as $f$, that takes $(q, a, x)$ as input and outputs a class label indicating whether, “according to $x$, the answer $a$ to the query $q$ is attributable, extrapolatory or contradictory.”⁵
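The formal definition above can be read as a typed interface. The sketch below is a minimal Python rendering under our own naming. AttributionLabel, AttributionInput, and evaluate_all are hypothetical names and are not part of the released AttrScore code. The placeholder evaluator only shows where a prompted or fine-tuned model would plug in.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List


class AttributionLabel(Enum):
    """The three mutually exclusive categories defined in Section 2."""
    ATTRIBUTABLE = "attributable"    # the reference fully supports the answer
    EXTRAPOLATORY = "extrapolatory"  # the reference lacks sufficient information
    CONTRADICTORY = "contradictory"  # the answer contradicts the reference


@dataclass(frozen=True)
class AttributionInput:
    """One unit task: a query q, a generated answer a, and a single reference x."""
    query: str
    answer: str
    reference: str


# f maps one (q, a, x) triple to a label; a prompted LLM or a fine-tuned LM
# would both fit this signature (hypothetical interface, not the paper's API).
AttributionEvaluator = Callable[[AttributionInput], AttributionLabel]


def evaluate_all(f: AttributionEvaluator,
                 triples: List[AttributionInput]) -> List[AttributionLabel]:
    """Apply f to each triple independently (one reference per statement)."""
    return [f(triple) for triple in triples]


# Usage: the contradictory example from Figure 1 with a placeholder evaluator.
germany = AttributionInput(
    query="What was the unemployment rate in Germany in 2020?",
    answer="The unemployment rate in Germany for 2020 was 4.31% [1].",
    reference="Germany unemployment rate for 2020 was 3.81%, a 0.67% increase from 2019.",
)
placeholder_labels = evaluate_all(lambda t: AttributionLabel.EXTRAPOLATORY, [germany])
```

Keeping the evaluator as a plain callable separates the task definition from any particular model. Both approaches in Section 3 can then be compared on the same inputs.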

3 Automatic Evaluation of Attribution

Following our problem definition, we introduce two approaches for automatic evaluation of attribution: prompting LLMs and fine-tuning LMs on simulated and repurposed data from related tasks.

3.1 Prompting LLMs

Recent research (Fu et al., 2023) has demonstrated the possibility of prompting LLMs to evaluate the quality of generated text using their emergent capabilities (Wei et al., 2022b), such as zero-shot instruction following (Wei et al., 2022a) and in-context learning (Brown et al., 2020). Following this approach, we prompt LLMs, such as ChatGPT (OpenAI, 2023a), using a clear instruction that includes definitions of the two types of errors (as shown in Figure 1) and an input triple of the query, answer, and reference for evaluation. The complete prompt used in our study can be found in Appendix Table 6.
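As a rough illustration of the prompting setup, the sketch below fills an instruction with one (query, answer, reference) triple. It then maps the model's free-text reply back to a label. The instruction text is a hypothetical paraphrase of the Section 2 definitions, not the prompt from Appendix Table 6. The names build_prompt and parse_label are illustrative only.

```python
import re
from typing import Optional

# Hypothetical instruction assembled from the Section 2 definitions; it is NOT
# the paper's prompt (that one is given in the paper's Appendix Table 6).
INSTRUCTION = (
    "Verify whether the reference supports the answer to the question. "
    "Attributable: the reference fully supports the answer. "
    "Extrapolatory: the reference lacks sufficient information to validate the answer. "
    "Contradictory: the answer contradicts the information in the reference. "
    "Reply with exactly one of: Attributable, Extrapolatory, Contradictory."
)

# Whole-word match of any label, case-insensitive.
_LABEL_RE = re.compile(r"\b(attributable|extrapolatory|contradictory)\b", re.IGNORECASE)


def build_prompt(query: str, answer: str, reference: str) -> str:
    """Fill the instruction with one (query, answer, reference) triple."""
    return (
        f"{INSTRUCTION}\n\n"
        f"Question: {query}\n"
        f"Answer: {answer}\n"
        f"Reference: {reference}\n"
        "Label:"
    )


def parse_label(completion: str) -> Optional[str]:
    """Return the first label word found in the model's reply (lowercased).

    This is deliberately naive: a reply such as "not attributable, but
    contradictory" would be read as "attributable".
    """
    match = _LABEL_RE.search(completion)
    return match.group(1).lower() if match else None
```

A constrained reply format ("exactly one of ...") keeps parsing simple. A more robust parser would need to handle negations and explanations that mention several labels.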

3.2 Fine-tuning LMs on Repurposed Data

The primary challenge in fine-tuning LMs for automatic attribution evaluation is the lack of training data. One potential approach is to hire annotators to collect real samples, but the cost can be prohibitive. Here, we first repurpose datasets from three related tasks (fact-checking, NLI, and summarization). We then propose to further simulate more realistic samples from existing QA benchmarks.

Repurpose data from fact-checking, NLI, and

summarization tasks. Given the connections be-

tween our attribution evaluation task and the tasks

of fact-checking, NLI, and summarization, we pro-

pose to utilize datasets from these fields to enrich

our training examples. Fact-checking data and NLI

data, with their emphasis on assessing the consis-

tency and logical relationship between claims (hy-

pothesis) and evidence (premise), mirrors our task’s

5

It is important to note that this evaluation focuses on the

“verifiability” of the answer based on the reference. It does not

measure the “relevance”, i.e., whether the answer correctly

responds to the query (Liu et al., 2023).

Query: Which apostle had a

thorn in his side?

Long Ans: Paul was an apostle

who had a thorn in his side [1].

Thorn in the flesh

Thorn in the flesh is a phrase of

New Testament origin used to

describe a chronic infirmity,

annoyance, or trouble in one's

life, drawn from Paul the

Apostle's use of the phrase in

his Second Epistle to the

Corinthians 12 : 7 -- 9

References

[1] en.wikipedia.org/wiki/

Thorn_in_the_flesh

Thorn in the flesh

Thorn in the flesh is a phrase of

New Testament origin used to

describe a chronic infirmity,

annoyance, or trouble in one's

life, drawn from Paul the

Apostle's use of the phrase in

his Second Epistle to the

Corinthians 12 : 7 -- 9

References

[1] en.wikipedia.org/wiki/

Thorn_in_the_flesh

Thorn in the flesh

Thorn in the flesh is a phrase of

New Testament origin used to

describe a chronic infirmity,

annoyance, or trouble in one's

life, drawn from John the

Apostle's use of the phrase in

his Second Epistle to the

Corinthians 12 : 7 -- 9

References

[1] en.wikipedia.org/wiki/

Thorn_in_the_flesh

Thorn (letter)

Thorn or þorn (Þ, þ) is a letter

in the Old English, Old Norse,

Old Swedish and modern

Icelandic alphabets, as well as

modern transliterations of the

Gothic alphabet, Middle Scots,

and some dialects of Middle

English. It was also used ...

References

[1] https://en.wikipedia.org/

wiki/Thorn_(letter)

(A): Attributable (B): Contradictory (C): Contradictory (D): Extrapolatory

Query: Which apostle had a

thorn in his side?

Long Ans: Phillip had a thorn

in his side [1].

Query: Which apostle had a

thorn in his side?

Long Ans: Paul was an apostle

who had a thorn in his side [1].

Query: Which apostle had a

thorn in his side?

Long Ans: The apostle who had

a thorn in his side is Paul [1].

Short Ans: Paul [1] Short Ans: Phillip [1] Short Ans: Paul [1] Short Ans: Paul [1]

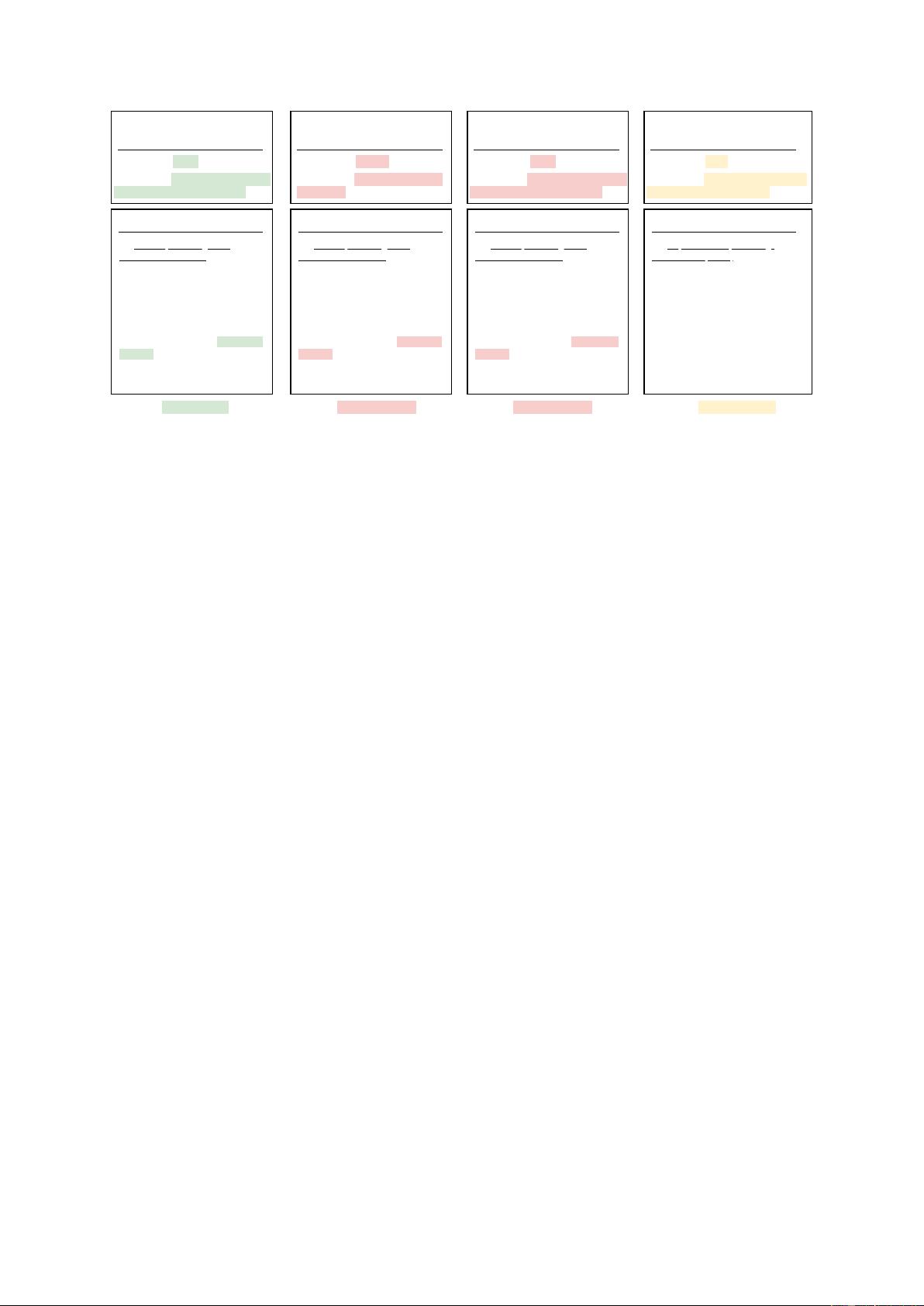

Figure 2: Examples simulated from open-domain QA. We 1) use the original (question, answer, context) pair as an

attributable instance (A), 2) substitute the answer or the answer span in the context to simulate a contradictory error

example (B, C), and 3) replace the context with alternatives to simulate an extrapolatory error example (D). In order

for models trained the simulated data to generalize well to the long answer setting in real-life search engines like

New Bing, we convert the short answer to a long one (using ChatGPT).

objective of checking the supporting relationship

between reference documents and generated state-

ments. Summarization datasets, especially those

involving the detection of hallucinations (including

both intrinsic and extrinsic (Maynez et al., 2020),

could provide a useful starting point for identify-

ing attribution inconsistencies. Nevertheless, these

datasets would require suitable adaptation. We

keep their original data sequences and modify their

data label space to suit the specific needs of the

attribution evaluation definition. Additional infor-

mation on this can be found in Appendix A.
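The label remapping for fact-checking and NLI data might look like the following minimal sketch. The mappings are our assumption about a natural correspondence, not the conversion rules in Appendix A: entailment and SUPPORTS become attributable, neutral and NOT ENOUGH INFO become extrapolatory, and contradiction and REFUTES become contradictory. Summarization datasets need dataset-specific rules and are omitted. The function name repurpose is hypothetical.

```python
from typing import Dict, Optional

# Illustrative label maps (assumed); the paper's exact conversion rules are in
# its Appendix A and may differ, e.g., for multi-class fact-checking labels.
NLI_TO_ATTRIBUTION: Dict[str, str] = {
    "entailment": "attributable",
    "neutral": "extrapolatory",
    "contradiction": "contradictory",
}
FEVER_TO_ATTRIBUTION: Dict[str, str] = {
    "SUPPORTS": "attributable",
    "NOT ENOUGH INFO": "extrapolatory",
    "REFUTES": "contradictory",
}


def repurpose(evidence: str, claim: str, label: str,
              label_map: Dict[str, str]) -> Optional[Dict[str, str]]:
    """Keep the original text pair and remap only its label.

    The evidence (premise) plays the role of the reference x and the claim
    (hypothesis) plays the role of the answer a. These datasets have no query,
    so it is left empty. Labels without a counterpart are dropped (None).
    """
    new_label = label_map.get(label)
    if new_label is None:
        return None
    return {"query": "", "answer": claim, "reference": evidence, "label": new_label}
```

Keeping the original texts and changing only the label space matches the description above. Each source dataset then needs only a small mapping table.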

Simulate data from open-domain QA. QA benchmarks provide an ideal platform for data simulation, as they comprise questions, their corresponding ground truth answers, and reference contexts. These elements can be directly employed as attributable examples (Figure 2, A). In open-domain QA datasets, answers are typically brief text spans. To cater to the long answer setting in most attributed LLMs, we convert these short answers into longer sentences using ChatGPT. For simulating contradictory errors, we propose two methods. (1) The first replaces the correct answer with an alternative candidate from an off-the-shelf QA model, an answer substitution model, or a random span generator (Figure 2, B). (2) The second retains the original answer but replaces the answer span in the reference context with a comparable candidate (Figure 2, C). To emulate extrapolatory errors, we run a BM25 retriever on the question to retrieve relevant external documents that do not contain the ground truth answer, drawn from resources such as Wikipedia (Figure 2, D). More details regarding the simulation of these errors from QA datasets can be found in Appendix A.
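The QA simulation procedure (Figure 2, A-D) can be sketched as below. The sketch assumes a single alternative answer is already available and uses a small self-contained Okapi BM25 instead of a full retrieval library. The function names and the k1 = 1.5 and b = 0.75 defaults are illustrative choices, not details from the paper. The same holds for the rule of keeping a negative passage only when it shares at least one query term.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    """Lowercased word tokens."""
    return re.findall(r"\w+", text.lower())


def bm25_scores(query: str, docs: List[str], k1: float = 1.5, b: float = 0.75) -> List[float]:
    """Okapi BM25 score of every document for the query (small in-memory version)."""
    if not docs:
        return []
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def simulate(question: str, answer: str, context: str, alt_answer: str,
             corpus: List[str]) -> List[Dict[str, str]]:
    """Return simulated examples (A)-(D) for one QA triple.

    alt_answer stands in for a candidate from a QA model, an answer-substitution
    model, or a random span generator. The ChatGPT short-to-long answer
    conversion is omitted.
    """
    def example(ans: str, ref: str, label: str) -> Dict[str, str]:
        return {"query": question, "answer": ans, "reference": ref, "label": label}

    examples = [
        example(answer, context, "attributable"),      # (A) original triple
        example(alt_answer, context, "contradictory"),  # (B) substitute the answer
    ]
    if answer in context:  # (C) substitute the answer span inside the context
        examples.append(example(answer, context.replace(answer, alt_answer), "contradictory"))
    # (D) best BM25 passage for the question among passages lacking the gold answer
    negatives = [d for d in corpus if answer.lower() not in d.lower()]
    if negatives:
        scores = bm25_scores(question, negatives)
        best = max(range(len(negatives)), key=lambda i: scores[i])
        if scores[best] > 0:  # require some lexical overlap so the passage is relevant
            examples.append(example(answer, negatives[best], "extrapolatory"))
    return examples
```

Filtering out passages that contain the gold answer before ranking is what makes (D) extrapolatory rather than attributable. As the AttrEval-Simulation check below notes, such heuristics can still mislabel some examples.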

4 Experimental Setup

4.1 Datasets

This section presents the datasets utilized for training and testing methods for automatic attribution evaluation. In particular, we develop two evaluation sets, AttrEval-Simulation and AttrEval-GenSearch, derived from existing QA datasets and a generative search engine, respectively. The dataset statistics are presented in Table 1.

Training data. To repurpose and simulate training examples, we follow the method in Section 3.2 using datasets from four related tasks. For QA, we consider NaturalQuestions (Kwiatkowski et al., 2019). For fact-checking, we include FEVER (Thorne et al., 2018), Adversarial FEVER (Thorne et al., 2019), FEVEROUS (Aly et al., 2021), VITAMINC (Schuster et al., 2021), MultiFC (Augenstein et al., 2019), PubHealth (Kotonya and Toni, 2020), and SciFact (Wadden et al., 2020). For NLI, we include SNLI (Bowman et al., 2015), MultiNLI (Williams et al., 2018), ANLI (Nie et al., 2020) and SciTail (Khot et al., 2018). For summarization, we include XSum-Halluc. (Maynez et al., 2020), XENT (Cao et al., 2022), and FactCC (Kryscinski et al., 2020). We use all examples in the summarization task datasets, and sample 20K examples from each of the QA, fact-checking, and NLI task datasets. We combine all the simulated datasets to create the training set for our main experiment.

| Split | Related Task | Data Sources | #Samples |
|---|---|---|---|
| Train | QA | NaturalQuestions | 20K |
| Train | Fact-checking | FEVER, VITAMINC, Adversarial FEVER, FEVEROUS, SciFact, PubHealth, MultiFC | 20K |
| Train | NLI | SNLI, MultiNLI, ANLI, SciTail | 20K |
| Train | Summarization | XSum-Hallucinations, XENT, FactCC | 3.8K |
| Test | QA | PopQA, EntityQuestions, HotpotQA, TriviaQA, WebQuestions, TREC | 4K |
| Test | - | Annotated samples from a generative search engine | 242 |

Table 1: Statistics of the training and test datasets for attribution evaluation. We include the distributions of the labels and data sources in Appendix B.
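The training mixture described above uses all summarization examples and at most 20K from each other task. It can be sketched as a capped, seeded sampler. build_training_mix is a hypothetical helper. The fixed seed, the final shuffle, and keeping a pool whole when it is already under the cap are assumptions for illustration, not reported settings.

```python
import random
from typing import Dict, List, Sequence, TypeVar

T = TypeVar("T")


def build_training_mix(pools: Dict[str, Sequence[T]], cap: int = 20_000,
                       take_all: Sequence[str] = ("summarization",),
                       seed: int = 0) -> List[T]:
    """Combine per-task example pools into one shuffled training set.

    Pools named in take_all (or already no larger than cap) are used in full;
    every other pool is down-sampled without replacement to cap examples.
    """
    rng = random.Random(seed)  # seeded so the mixture is reproducible
    mix: List[T] = []
    for task, examples in pools.items():
        if task in take_all or len(examples) <= cap:
            mix.extend(examples)
        else:
            mix.extend(rng.sample(list(examples), cap))
    rng.shuffle(mix)  # interleave tasks rather than training task by task
    return mix
```

Capping each large pool keeps any single task from dominating the mixture. The small summarization pool (3.8K in Table 1) is kept in full.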

AttrEval-Simulation. For testing, we first simulate examples from six out-of-domain QA datasets: HotpotQA (Yang et al., 2018), EntityQuestions (Sciavolino et al., 2021), PopQA (Mallen et al., 2022), TREC (Baudis and Šedivý, 2015), TriviaQA (Joshi et al., 2017), and WebQuestions (Berant et al., 2013). Note that we intentionally use different QA datasets for training and testing. This lets us test the model's generalization ability and evaluate its performance across a diverse set of domains and question formats. Our manual examination indicates that 84% of 50 randomly sampled examples (42 of 50) accurately align with their category. The labeling errors are primarily due to incorrect annotations in the original QA datasets, or to the heuristics used to formulate comparable answer candidates for contradictory errors and to retrieve negative passages for extrapolatory errors.

AttrEval-GenSearch. To examine the real-life ap-

plication of automatic attribution evaluation, ap-

proximately 250 examples from the New Bing

search engine are annotated carefully by the au-

thors. This process comprises two subtasks: creat-

ing queries and verifying attributions. To avoid the

issue of training data contamination, new queries

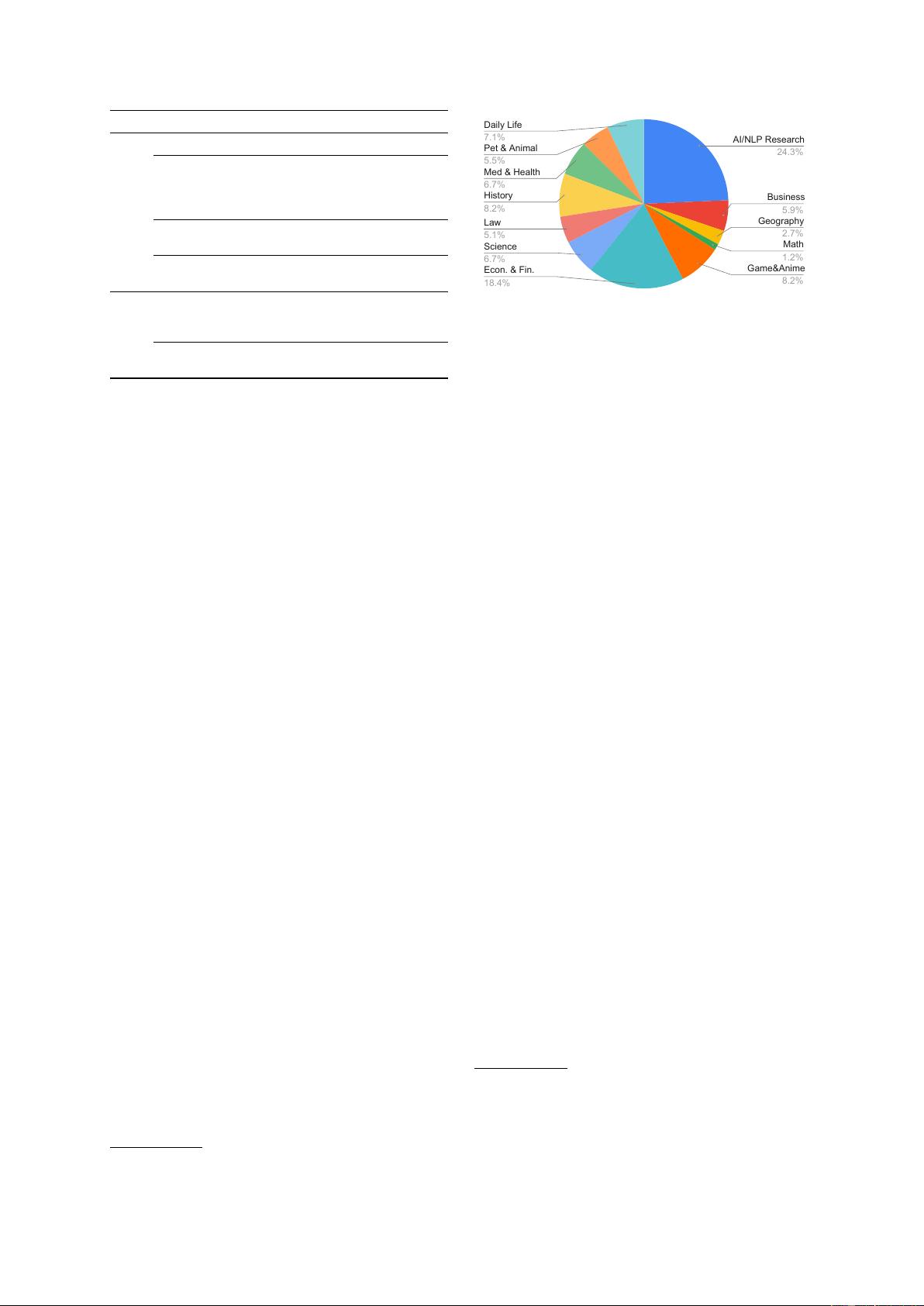

are manually created across 12 domains (Figure

3).

6

To facilitate and motivate query annotation,

6

The “AI/NLP Research” domain is inspired by recent

discussions on social media about testing LLMs’ knowledge

on researchers, e.g., “Is XX a co-author of the paper XX?”

Daily Life

7.1%

Pet & Animal

5.5%

Med & Health

6.7%

History

8.2%

Law

5.1%

Science

6.7%

Econ. & Fin.

18.4%

AI/NLP Research

24.3%

Business

5.9%

Geography

2.7%

Math

1.2%

Game&Anime

8.2%

Figure 3: Domain distribution of our annotated AttrEval-

GenSearch test set (covering 12 domains in total).

keywords from a specific domain are randomly

generated using ChatGPT, and relevant facts within

that domain are compiled from the Web.

7

In the verification process, queries are sent to the New Bing search engine in its balanced mode (following Liu et al., 2023), which balances accuracy and creativity. We then evaluate the validity of New Bing's output, considering only the first sentence that answers the question along with its reference. As stated in Section 2, our evaluation emphasizes the error type for a single reference per statement. When a sentence has multiple references or distinct segments (for example, “XXX [1][2]” or “XXX [1] and YYY [2]”), each reference or segment is treated as a separate sample, and the attributions are verified individually. Finally, the annotators categorize the samples as attributable, contradictory, or extrapolatory. Detailed annotation guidelines can be found in Appendix D.
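To make the per-reference splitting concrete, here is a heuristic sketch that turns a cited sentence into (segment, reference id) samples. It assumes citations appear as bracketed integers such as [1]. The regular expressions, the stripping of a leading "and"/"but"/"while", and the name split_attributions are our own simplifications. In the paper, annotators performed this step manually.

```python
import re
from typing import List, Tuple

# A segment is some text followed by one or more adjacent citation markers.
_SEGMENT = re.compile(r"(.*?)((?:\[\d+\])+)")
# Heuristic: drop a joining conjunction at the start of a later segment.
_LEADING_CONJUNCTION = re.compile(r"^(?:and|but|while)\s+", re.IGNORECASE)


def split_attributions(sentence: str) -> List[Tuple[str, int]]:
    """Split a cited sentence into (segment text, reference id) samples."""
    samples: List[Tuple[str, int]] = []
    previous = ""
    for match in _SEGMENT.finditer(sentence):
        text = _LEADING_CONJUNCTION.sub("", match.group(1).strip(" ,;"))
        if not text:  # e.g. "XXX [1] [2]": the second marker reuses the text
            text = previous
        for ref in re.findall(r"\[(\d+)\]", match.group(2)):
            samples.append((text, int(ref)))  # one sample per reference
        previous = text
    return samples
```

For "XXX [1][2]" both samples share the same statement. For "XXX [1] and YYY [2]" each segment is paired only with its own reference, so each pair can be verified independently.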

4.2 Implementation Details

In the “prompting LLMs” configuration, we test Alpaca (Taori et al., 2023), Vicuna (Chiang et al., 2023), ChatGPT (OpenAI, 2023a) and GPT-4 (OpenAI, 2023b). We use OpenAI's official APIs (gpt-3.5-turbo, gpt-4-0314)⁸ and the Alpaca and Vicuna weights from their official repositories⁹. For Alpaca and Vicuna inference, documents are tokenized and truncated at a maximum of 2048 tokens. We generate text with a temperature of 0. The prompts for the task of evaluating attribution are provided in Appendix Table 6,

⁷ We make an effort to collect new facts post-2021 to test “knowledge conflicts” (Zhou et al., 2023; Xie et al., 2023) between parametric and external knowledge.
⁸ platform.openai.com/docs/api-reference/chat. Given GPT-4's high cost and slow inference speed, we evaluate it on 500 random samples from AttrEval-Simulation.
⁹ https://github.com/tatsu-lab/stanford_alpaca, https://github.com/lm-sys/FastChat
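The decoding setup above can be summarized in a small helper that truncates a document and builds a Chat Completions request body with temperature 0. This is a sketch under stated assumptions. Sending the whole prompt as a single user message is our choice, since the paper does not say how messages were structured. Whitespace splitting stands in for the Alpaca/Vicuna tokenizer, and truncate_document and chat_request are hypothetical names.

```python
from typing import Any, Dict

MAX_DOC_TOKENS = 2048  # truncation length reported for Alpaca/Vicuna inference


def truncate_document(text: str, max_tokens: int = MAX_DOC_TOKENS) -> str:
    """Keep at most max_tokens tokens.

    Whitespace splitting is only a stand-in: real truncation would use the
    model's own tokenizer, which usually yields more tokens per word.
    """
    return " ".join(text.split()[:max_tokens])


def chat_request(prompt: str, model: str = "gpt-3.5-turbo") -> Dict[str, Any]:
    """Request body for OpenAI's Chat Completions endpoint at temperature 0."""
    return {
        "model": model,
        # Assumption: the full evaluation prompt goes in one user message.
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0,
    }
```

With the official openai Python package (version 1.0 or later), the body could be sent as `OpenAI().chat.completions.create(**chat_request(prompt))`. Dated snapshots such as gpt-4-0314 may no longer be served.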
