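Summary: This paper presents API-Bank, a new evaluation benchmark designed for tool-augmented large language models (LLMs) that systematically assesses how well these models use external tools. By simulating real-world scenarios, the study builds 53 commonly used APIs and a complete interaction workflow that covers every step from deciding whether to call an API to executing the call. API-Bank contains 264 annotated dialogues with a total of 568 API calls, and is intended to test and improve the ability of LLMs to solve complex problems through multi-turn dialogue. The study also reports detailed experimental analysis and an error categorization. The results show that GPT-4 has stronger planning performance than the earlier GPT-3 and GPT-3.5, but there is still room for improvement in areas such as logical judgment, understanding user intent, and coordination across APIs. Even so, the results reveal the considerable potential of integrating external tools into language models to meet everyday human needs.

Intended audience: researchers and developers interested in large-model training, API design, and API implementation within natural language processing.

Use cases and goals: suitable for research institutions and companies that test language model capabilities and want to measure how well LLMs complete tasks by calling third-party tools. It can also guide future research and help raise the practical value of models, for example in intelligent assistants and virtual customer service.

Other notes: the paper describes the design philosophy and methodology of API-Bank in detail, and also includes concrete case studies and discussion of technical details.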

API-Bank: A Benchmark for Tool-Augmented LLMs

Minghao Li¹, Feifan Song², Bowen Yu¹∗, Haiyang Yu¹, Zhoujun Li³, Fei Huang¹, Yongbin Li¹

¹ Alibaba DAMO Academy

² MOE Key Laboratory of Computational Linguistics, Peking University

³ Shenzhen Intelligent Strong Technology Co., Ltd.

{lmh397008, yubowen.ybw, yifei.yhy, f.huang, shuide.lyb}@alibaba-inc.com

songff@stu.pku.edu.cn

lizhoujun@aistrong.com

Abstract

Recent research has shown that Large Language Models (LLMs) can utilize external tools to improve their contextual processing abilities, moving away from the pure language modeling paradigm and paving the way for Artificial General Intelligence. Despite this, there has been a lack of systematic evaluation to demonstrate the efficacy of LLMs using tools to respond to human instructions. This paper presents API-Bank, the first benchmark tailored for Tool-Augmented LLMs. API-Bank includes 53 commonly used API tools, a complete Tool-Augmented LLM workflow, and 264 annotated dialogues that encompass a total of 568 API calls. These resources have been designed to thoroughly evaluate LLMs’ ability to plan step-by-step API calls, retrieve relevant APIs, and correctly execute API calls to meet human needs. The experimental results show that the ability to use tools emerges in GPT-3.5 relative to GPT-3, while GPT-4 has stronger planning performance. Nevertheless, there remains considerable scope for further improvement when compared to human performance. Additionally, detailed error analysis and case studies demonstrate the feasibility of Tool-Augmented LLMs for daily use, as well as the primary challenges that future research needs to address.

1 Introduction

Over the past several years, significant progress has been made in the development of large language models (LLMs), including GPT-3 (Brown et al., 2020), Codex (Chen et al., 2021), ChatGPT, and the impressive GPT-4 (Bubeck et al., 2023). These models exhibit increasingly human-like capabilities, such as powerful conversation, in-context learning, and code generation across a wide range of open-domain tasks. Some researchers even believe that LLMs could provide a gateway to Artificial General Intelligence (Bubeck et al., 2023).

∗ Corresponding author.

Despite their usefulness, however, LLMs are still limited as they can only learn from their training data (Brown et al., 2020). This information can become outdated and may not be suitable for all applications (Trivedi et al., 2022; Mialon et al., 2023). Consequently, there has been a surge in research aimed at augmenting LLMs with the ability to use external tools to access up-to-date information (Izacard et al., 2022), perform computations (Schick et al., 2023), and interact with third-party services (Liang et al., 2023) in response to user requests. Tool use has traditionally been viewed as uniquely human behavior, and the emergence of tool use has been considered a significant milestone in primate evolution, even serving to demarcate the appearance of the genus Homo (Ambrose, 2001). Analogous to the timeline of human evolution, we believe that at this current juncture, we must address two key questions: (1) How effective are current LLMs in using tools? (2) What are the remaining obstacles for LLMs to use tools?

In this paper, we introduce API-Bank, the

first systematic benchmark for evaluating Tool-

Augmented LLMs’ ability to use tools. We imagine

a vision where, with access to a global repository of

tools, LLMs can aid humans in

plan

ning a require-

ment by outlining all the steps necessary to achieve

it. Subsequently, it will

retrieve

the tool pool for

the needed tools and, through possibly multiple

rounds of API

call

s, fulfill the human requirement,

thus becoming truly helpful and all-knowing. To

achieve this goal, we first simulate the real-world

scenario by creating 53 commonly used tools, such

as SearchEngine, PlayMusic, BookHotel, Image-

Caption, and organize them in an API Pool for

LLMs to call. We then propose a complete work-

flow for LLMs to use these tools, which includes

determining whether to call an API, which API to

call, generating an API call, and self-assessing the

arXiv:2304.08244v1 [cs.CL] 14 Apr 2023

No

API Call? Yes

Yes

No

Found?

Account

Management

Information

Retrieval

Health

Management

Entertainment

Travel

Schedule

Management

Smart

Home

Finance

Management

API Pool

External Service

No

Yes

Give Up

Satisfied

?

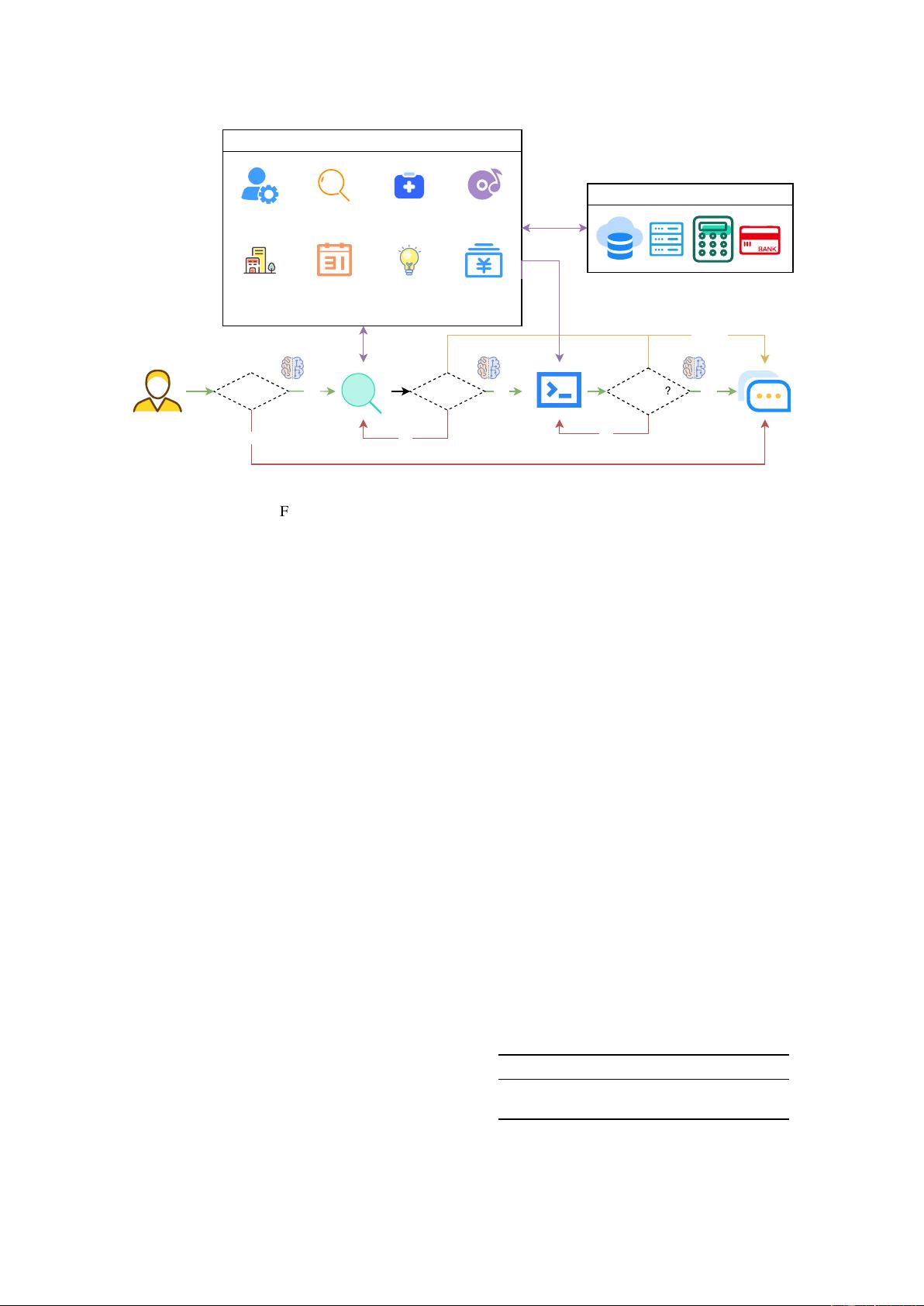

Figure 1: The proposed Tool-Augmented LLMs paradigm.

correctness of the API call. We further manually

review 264 dialogues that contain 568 API calls,

along with an automated scoring script to fairly

evaluate each LLM’s performance in using tools.

Similar to our vision, we divide all dialogues into three levels. Level-1 evaluates the LLM’s ability to call the API. Given an API’s description, the model needs to determine whether to call the API, call it correctly, and respond appropriately to its return. Level-2 further assesses the LLM’s ability to retrieve the API. LLMs must search for possible APIs that may solve the user’s requirement and learn how to use them. Level-3 examines the LLM’s ability to plan API calls, beyond retrieving and calling them. At this level, the user’s requirement may be unclear and may require multiple API steps to solve. For example, "I want to travel from Shanghai to Beijing for a week starting tomorrow. Help me plan the travel route and book flights, tickets, and hotels." LLMs must infer a reasonable travel plan and call flight, hotel, and ticket booking APIs based on the plan, taking into account timing compatibility among the bookings.

On our constructed API-Bank benchmark, we conduct, for the first time, an experimental analysis of the effectiveness of popular LLMs in utilizing API tools. Our findings suggest that calling APIs is an emergent ability that shares similarities with math word problems (Wei et al., 2022). Specifically, we observe that GPT-3-Davinci struggles to call APIs correctly even at the simplest Level-1. However, with GPT-3.5-Turbo, the correctness of API calls improves dramatically, to a success rate of around 50%. Moving to Level-2, which involves API retrieval, GPT-3.5-Turbo achieves a 40% success rate. However, when it comes to Level-3, which requires API planning, GPT-3.5-Turbo encounters numerous errors, necessitating an average of 9.9 rounds of dialogue to complete user requests. This is approximately 38% more than what is required by GPT-4. Meanwhile, it should be noted that GPT-4 remains imperfect, as it uses approximately 35% more conversation rounds in API planning than humans do. We also provide a detailed error analysis to summarize the obstacles faced by LLMs when using tools. These include refusing to make API calls despite explicit instructions in the prompt and generating non-existent API calls. Overall, our study sheds light on the potential of LLMs to utilize API tools and highlights the challenges that need to be addressed in future research.

| | level-1 | level-2 | level-3 |
| --- | --- | --- | --- |
| Num of Dialogues | 214 | 50 | 8 |
| Num of API calls | 399 | 135 | 34 |

Table 1: The statistics of API-Bank.

2 Tool-Augmented LLMs Paradigm

Existing work on Tool-Augmented LLMs usually teaches the language model to use tools in one of two ways: in-context learning or fine-tuning. The former shows the model the instructions and examples for all the candidate tools, which extends a general model directly but is limited by the context length. In comparison, the latter fine-tunes the language model on annotated data, which avoids the length problem but can damage the robustness of the model. In this work, we mainly focus on in-context learning and address its limited context length.

To address this issue, we design a new paradigm that may be the only solution to use a large number of tools under the context length limit. Figure 1 shows the flowchart of the proposed paradigm. This is an example process for a chatbot, and the paradigm can be generalized to any generative model application.

In the proposed paradigm, there is an API Pool containing various APIs focusing on different aspects of life, as well as a keyword-based API search engine to help the language model find APIs. Before starting, the model is given a prompt that explains the whole process and its task, as well as how to use the API search engine.

In the longest path of the whole flowchart, the model needs to make several judgments (the diamonds in Figure 1) as follows:

API Call

After each user statement, the model needs to determine if an API call is required to access the external service, which requires the ability to know the boundaries of its knowledge or the need for outside action. This judgment leads to two different options: a regular reply or starting the API call process. During a regular reply, the model could chat with people or try to figure out the needs of the user and plan the process of completing them. If the model already understands the user’s needs and decides to start the API call process, it will continue with subsequent steps.

Find the Right API

To address the input limitations of the model, the model is given only the instructions of the API search engine at the beginning, without any specific API introduction. An API search is required before every specific API call. When performing an API search, the model should summarize the demand of the user into a few keywords. The API search engine will look up the API pool, find the best match, and return the related documentation to help the model understand how to use it. The retrieved API may not be what the model needs, so the model has to decide whether to modify the keywords and search again, or give up the API call and reply.
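The keyword lookup described above can be illustrated with a minimal sketch. This is not the search engine used in the paper: the `ApiEntry` type, the `search_api` function, the word-overlap scoring, and the descriptions in the tiny pool are all assumptions made for illustration (only the tool names echo those mentioned in the introduction).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ApiEntry:
    name: str
    description: str  # stands in for the documentation returned on a match


# Hypothetical mini pool; the descriptions are invented for illustration.
API_POOL = [
    ApiEntry("SearchEngine", "search the web for up-to-date information"),
    ApiEntry("PlayMusic", "play a song or music by title or artist"),
    ApiEntry("BookHotel", "book a hotel room for given dates and city"),
]


def search_api(keywords: str, pool: List[ApiEntry] = API_POOL) -> Optional[ApiEntry]:
    """Return the entry sharing the most words with the keywords, or None."""
    query = set(keywords.lower().split())
    best, best_score = None, 0
    for entry in pool:
        words = set((entry.name + " " + entry.description).lower().split())
        score = len(query & words)  # assumed scoring: plain word overlap
        if score > best_score:
            best, best_score = entry, score
    return best
```

A `None` result corresponds to the "not found" branch, where the model would either rephrase its keywords and search again or give up and reply directly.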

Reply after API Call

After completing the API call and obtaining the returned results, the model needs to take action based on the results. If the returned results are expected, the model can reply to the user based on the results. If there is an exception in the API call or the model is not satisfied with the results, the model can choose to refine and call again based on the returned information, or give up the API call and reply.

The pseudo-code description of the complete API call procedure is as follows:

Algorithm 1 API call process

    Input: us ← UserStatement
    if API Call is needed then
        while API not found do
            keywords ← summarize(us)
            api ← search(keywords)
            if Give Up then
                break
            end if
        end while
        if API found then
            api_doc ← api.documentation
            while Response not satisfied do
                api_call ← gen_api_call(api_doc, us)
                api_re ← execute_api_call(api_call)
                if Give Up then
                    break
                end if
            end while
        end if
    end if
    if response then
        re ← generate_response(api_re)
    else
        re ← generate_response()
    end if
    Output: ResponseToUser
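The control flow of Algorithm 1 can be sketched in Python as follows. This is an illustrative reading, not the authors' implementation: every judgment the model makes is passed in as a callable, the `max_tries` bound is an assumed stand-in for the "Give Up" decision, and, as a simplification, an API result is forwarded to the reply only when the `satisfied` check accepts it.

```python
from typing import Callable, Optional


def api_call_process(
    us: str,
    needs_api: Callable[[str], bool],
    summarize: Callable[[str, int], str],
    search: Callable[[str], Optional[dict]],
    gen_api_call: Callable[[str, str], dict],
    execute_api_call: Callable[[dict], dict],
    satisfied: Callable[[dict], bool],
    generate_response: Callable[[str, Optional[dict]], str],
    max_tries: int = 3,  # assumption: giving up is modeled as a retry limit
) -> str:
    api_re = None
    if needs_api(us):
        # Search loop: rephrase keywords until an API is found or we give up.
        api = None
        for attempt in range(max_tries):
            keywords = summarize(us, attempt)
            api = search(keywords)
            if api is not None:
                break
        if api is not None:
            api_doc = api["documentation"]
            # Call loop: regenerate the call until the result is acceptable.
            for _ in range(max_tries):
                api_call = gen_api_call(api_doc, us)
                result = execute_api_call(api_call)
                if satisfied(result):
                    api_re = result
                    break
    # Reply with the API result if one was accepted, otherwise without it.
    return generate_response(us, api_re)
```

Injecting the callables keeps the sketch runnable with simple stubs, and makes explicit which steps would be delegated to the language model in a real system.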

3 Benchmark Construction

3.1 System Design

The evaluation system mainly contains 53 APIs, supporting databases, and a ToolManager. The complete list of APIs is attached in Appendix A. The built system covers the most common needs of life and work. Other types of AI models are also abstracted as APIs that the LLMs can use, which extends the model’s capabilities in specific areas. In addition, some operating system interfaces are included to allow models to control external applications.
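To make the role of such a component concrete, here is a minimal sketch of a registry that dispatches API calls by name. The paper's excerpt does not specify the ToolManager interface, so the class body, the method names (`register`, `documentation`, `call`), the result dictionary shape, and the `AddNumbers` example API are all hypothetical.

```python
from typing import Any, Callable, Dict


class ToolManager:
    """Hypothetical registry mapping API names to Python callables."""

    def __init__(self) -> None:
        self._apis: Dict[str, Callable[..., Any]] = {}
        self._docs: Dict[str, str] = {}

    def register(self, name: str, func: Callable[..., Any], doc: str) -> None:
        self._apis[name] = func
        self._docs[name] = doc

    def documentation(self, name: str) -> str:
        return self._docs[name]

    def call(self, name: str, **kwargs: Any) -> Dict[str, Any]:
        # A non-existent API is reported as an exception instead of raising.
        if name not in self._apis:
            return {"output": None, "exception": f"unknown API: {name}"}
        try:
            return {"output": self._apis[name](**kwargs), "exception": None}
        except Exception as exc:  # e.g. missing or invalid parameters
            return {"output": None, "exception": str(exc)}


manager = ToolManager()
# "AddNumbers" is an invented example API, not one of the paper's 53 tools.
manager.register("AddNumbers", lambda a, b: a + b, "AddNumbers(a, b): add two numbers")
```

Returning exceptions as data rather than raising them would let a model read the error message and decide whether to refine the call or give up.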
