
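Summary: This paper introduces SELF-INSTRUCT, a framework for improving the instruction-following ability of pretrained language models. The authors fine-tune the original model on automatically generated instruction data: starting from a small set of seed tasks, an existing language model (such as GPT3) generates new instructions along with input and output examples, which are then pruned and used to fine-tune that model. In the paper's experiments, GPT3 fine-tuned with SELF-INSTRUCT gains 33% (absolute) over the original model on SUPER-NATURALINSTRUCTIONS and follows a broad range of instructions in further evaluations.

Intended audience: researchers interested in natural language processing, machine learning enthusiasts, and engineers who need to improve how well AI systems understand and respond to instructions.

Use cases and goals: projects that aim to reduce reliance on manually annotated data and to improve the generalization and multi-task ability of large language models, especially applications involving complex language tasks.

Reading advice: the paper contains many technical details, but the focus should be on the overall pipeline and the gains it delivers. Specific experimental settings and parameters can be adapted to your own project.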


SELF-INSTRUCT: Aligning Language Model with Self Generated Instructions

Yizhong Wang♣, Yeganeh Kordi♢, Swaroop Mishra♡, Alisa Liu♣, Noah A. Smith♣+, Daniel Khashabi♠, Hannaneh Hajishirzi♣+

♣University of Washington, ♢Tehran Polytechnic, ♡Arizona State University, ♠Johns Hopkins University, +Allen Institute for AI

yizhongw@cs.washington.edu

Abstract

Large “instruction-tuned” language models (finetuned to respond to instructions) have demonstrated a remarkable ability to generalize zero-shot to new tasks. Nevertheless, they depend heavily on human-written instruction data that is limited in quantity, diversity, and creativity, therefore hindering the generality of the tuned model. We introduce SELF-INSTRUCT, a framework for improving the instruction-following capabilities of pretrained language models by bootstrapping off their own generations. Our pipeline generates instruction, input, and output samples from a language model, then prunes them before using them to finetune the original model. Applying our method to vanilla GPT3, we demonstrate a 33% absolute improvement over the original model on SUPER-NATURALINSTRUCTIONS, on par with the performance of InstructGPT_001 [1], which is trained with private user data and human annotations. For further evaluation, we curate a set of expert-written instructions for novel tasks, and show through human evaluation that tuning GPT3 with SELF-INSTRUCT outperforms using existing public instruction datasets by a large margin, leaving only a 5% absolute gap behind InstructGPT_001. SELF-INSTRUCT provides an almost annotation-free method for aligning pretrained language models with instructions, and we release our large synthetic dataset to facilitate future studies on instruction tuning [2].

[1] Unless otherwise specified, our comparisons are with the text-davinci-001 engine. We focus on this engine since it is the closest to our experimental setup: supervised fine-tuning with human demonstrations. The newer engines are more powerful, though they use more data (e.g., code completion or latest user queries) or algorithms (e.g., PPO) that are difficult to compare with.

[2] Code and data will be available at https://github.com/yizhongw/self-instruct.

1 Introduction

The recent NLP literature has witnessed a tremendous amount of activity in building models that can follow natural language instructions (Mishra et al., 2022; Wei et al., 2022; Sanh et al., 2022; Wang et al., 2022; Ouyang et al., 2022; Chung et al., 2022, i.a.). These developments are powered by two key components: large pre-trained language models (LM) and human-written instruction data. PROMPTSOURCE (Bach et al., 2022) and SUPER-NATURALINSTRUCTIONS (Wang et al., 2022) are two notable recent datasets that use extensive manual annotation for collecting instructions to construct T0 (Bach et al., 2022; Sanh et al., 2022) and Tk-INSTRUCT (Wang et al., 2022). However, this process is costly and often suffers from limited diversity given that most human generations tend to be popular NLP tasks, falling short of covering a true variety of tasks and different ways to describe them. Given these limitations, continuing to improve the quality of instruction-tuned models necessitates the development of alternative approaches for supervising instruction-tuned models.

In this work, we introduce SELF-INSTRUCT, a semi-automated process for instruction-tuning a pretrained LM using instructional signals from the model itself. The overall process is an iterative bootstrapping algorithm (see Figure 1), which starts off with a limited (e.g., 175 in our study) seed set of manually-written instructions that are used to guide the overall generation. In the first phase, the model is prompted to generate instructions for new tasks. This step leverages the existing collection of instructions to create more broad-coverage instructions that define (often new) tasks. Given the newly-generated set of instructions, the framework also creates input-output instances for them, which can be later used for supervising the instruction tuning. Finally, various measures are used to prune low-quality and repeated instructions, before adding them to the task pool. This process can be repeated for many iterations until reaching a large number of tasks.

To evaluate SELF-INSTRUCT empirically, we run

arXiv:2212.10560v1 [cs.CL] 20 Dec 2022

175 seed tasks with

1 instruction and

1 instance per task

Task Pool Step 1: Instruction Generation

No

Step 4: Filtering

Output-first

Input-first

Step 2: Classification

Task Identification

Step 3: Instance Generation

Instruction : Give me a quote from a

famous person on this topic.

Task

Yes

Task

Instruction : Give me a quote from a famous person on this topic.

Input: Topic: The importance of being honest.

Output: "Honesty is the first chapter in the book of wisdom." - Thomas

Jefferson

Task

Task

Instruction : Find out if the given text is in favor of or against abortion.

Class Label: Pro-abortion

Input: Text: I believe that women should have the right to choose whether

or not they want to have an abortion.

Task

LM

LM

LM

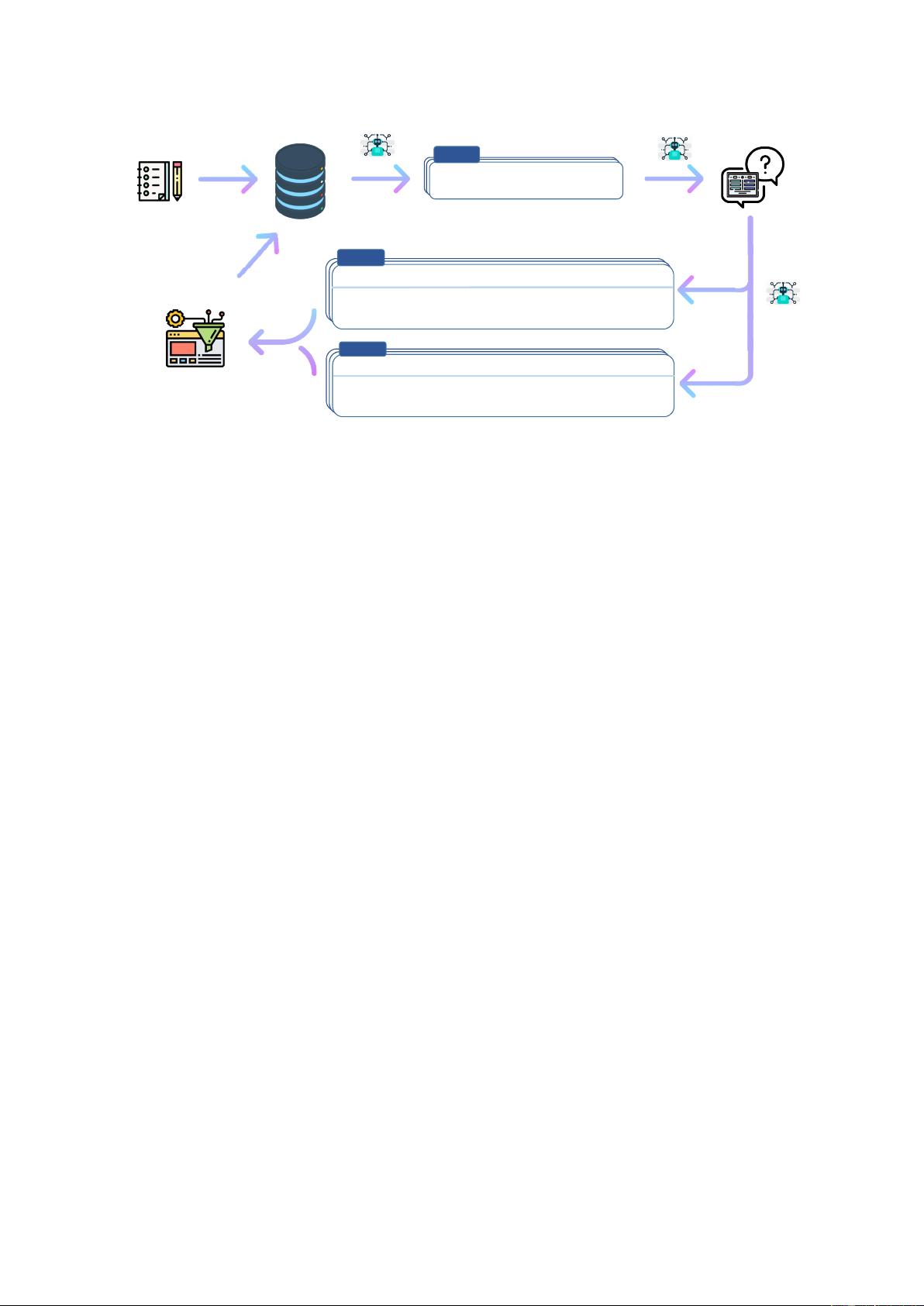

Figure 1: A high-level overview of SELF-INSTRUCT. The process starts with a small seed set of tasks (one instruc-

tion and one input-output instance for each task) as the task pool. Random tasks are sampled from the task pool,

and used to prompt an off-the-shelf LM to generate both new instructions and corresponding instances, followed

by filtering low-quality or similar generations, and then added back to the initial repository of tasks. The resulting

data can be used for the instruction tuning of the language model itself later to follow instructions better. Tasks

shown in the figure are generated by GPT3. See Table 10 for more creative examples.

this framework on GPT3 (Brown et al., 2020),

which is a vanilla LM (§4). The iterative SELF-

INSTRUCT process on this model leads to about

52k instructions, paired with about 82K instance

inputs and target outputs. We observe that the result-

ing data provides a diverse range of creative tasks

and over 50% of them have less than 0.3 ROUGE-

L overlaps with the seed instructions (§4.2). On

this resulting data, we build GPT3

SELF-INST

by

fine-tuning GPT3 (i.e., the same model used for

generating the instructional data). We evaluate

GPT3

SELF-INST

in comparison to various other mod-

els on both typical NLP tasks included in SUPER-

NATURALINSTRUCTIONS (Wang et al., 2022), and

a set of new instructions that are created for novel

usage of instruction-following models (§5). The

SUPERNI results indicate that GPT3

SELF-INST

out-

performs GPT3 (the original model) by a large

margin (+33.1%) and nearly matches the perfor-

mance of

InstructGPT

001

. Moreover, our human

evaluation on the newly-created instruction set

shows that GPT3

SELF-INST

demonstrates a broad

range of instruction following ability, outperform-

ing models trained on other publicly available in-

struction datasets and leaving only a 5% gap behind

InstructGPT

001

.

In summary, our contributions are: (1) SELF-INSTRUCT, a method for inducing instruction-following capability with minimal human-labeled data; (2) We demonstrate its effectiveness via extensive instruction-tuning experiments; (3) We release a large synthetic dataset of 52K instructions and a set of manually-written novel tasks for building and evaluating future instruction-following models.

2 Related Work

Instruction-following language models. A series of works have found evidence that vanilla language models can be effective at following general language instructions if tuned with annotated “instructional” data: datasets containing language instructional commands and their desired outcome based on human judgement (Weller et al., 2020; Mishra et al., 2022; Wang et al., 2022; Wei et al., 2022; Sanh et al., 2022; Ouyang et al., 2022; Parmar et al., 2022; Scialom et al., 2022; Chung et al., 2022; Luo et al., 2022; Puri et al., 2022; Yin et al., 2022; Chakrabarty et al., 2022; Lin et al., 2022; Gupta et al., 2022; Muennighoff et al., 2022). Additionally, they show a direct correlation between the size and diversity of the “instructional” data and the generalizability of resulting models to unseen tasks. Since these developments depend on human annotated “instructional” data, this poses a bottleneck for progress toward more generalizable models (for example see Fig. 5a in Wang et al., 2022). Our work aims to tackle this bottleneck by reducing the dependence on human annotators.

Additionally, despite the remarkable performance of models like InstructGPT (Ouyang et al., 2022), their construction process remains quite opaque. In particular, the role of data has remained understudied due to limited transparency and data released by major corporate entities behind these key models. Addressing such challenges necessitates the creation of a large-scale, public dataset covering a broad range of tasks.

Instruction-following models have also been of interest in the multi-modal learning literature (Fried et al., 2018; Shridhar et al., 2020; Min et al., 2022; Weir et al., 2022). SELF-INSTRUCT, as a general approach to expanding data, can potentially also be helpful in those settings; however, this is out of the scope of this work.

Language models for data generation and augmentation. A variety of works have relied on generative LMs for data generation (Schick and Schütze, 2021; Wang et al., 2021; Liu et al., 2022; Meng et al., 2022) or augmentation (Feng et al., 2021; Yang et al., 2020; Mekala et al., 2022). For example, Schick and Schütze (2021) propose to replace human annotations of a given task with prompting large LMs and use the resulting data for fine-tuning (often smaller) models in the context of SuperGLUE tasks (Wang et al., 2019). While our work can be viewed as a form of “augmentation,” it differs from this line of work in that it is not specific to a particular task (say, QA or NLI). In contrast, a distinct motivation for SELF-INSTRUCT is to bootstrap new task definitions that may not have been defined before by any NLP practitioner (though potentially still important for downstream users).

Self-training. A typical self-training framework (He et al., 2019; Xie et al., 2020; Du et al., 2021; Amini et al., 2022; Huang et al., 2022) uses trained models to assign labels to unlabeled data and then leverages the newly labeled data to improve the model. In a similar line, Zhou et al. (2022a) use multiple prompts to specify a single task and propose to regularize via prompt consistency, encouraging consistent predictions over the prompts. This allows either finetuning the model with extra unlabeled training data, or direct application at inference time. While SELF-INSTRUCT has some similarities with the self-training literature, most self-training methods assume a specific target task as well as unlabeled examples under it; in contrast, SELF-INSTRUCT produces a variety of tasks from scratch.

Knowledge distillation. Knowledge distillation (Hinton et al., 2015; Sanh et al., 2019; West et al., 2021; Magister et al., 2022) often involves the transfer of knowledge from larger models to smaller ones. SELF-INSTRUCT can also be viewed as a form of “knowledge distillation”; however, it differs from this line in the following ways: (1) the source and target of distillation are the same, i.e., a model’s knowledge is distilled to itself; (2) the content of distillation is in the form of an instruction task (i.e., instructions that define a task, and a set of examples that instantiate it).

Bootstrapping with limited resources. A series of recent works use language models to bootstrap some inferences using specialized methods. NPPrompt (Zhao et al., 2022) provides a method to generate predictions for semantic labels without any fine-tuning. It uses a model’s own embeddings to automatically find words relevant to the label of the data sample and hence reduces the dependency on manual mapping from model prediction to label (verbalizers). STAR (Zelikman et al., 2022) iteratively leverages a small number of rationale examples and a large dataset without rationales, to bootstrap a model’s ability to perform reasoning. Self-Correction (Welleck et al., 2022) decouples an imperfect base generator (model) from a separate corrector that learns to iteratively correct imperfect generations and demonstrates improvement over the base generator. Our work instead focuses on bootstrapping new tasks in the instruction paradigm.

Instruction generation. A series of recent works (Zhou et al., 2022b; Ye et al., 2022; Singh et al., 2022; Honovich et al., 2022) generate instructions of a task given a few examples. While SELF-INSTRUCT also involves instruction generation, a major difference in our case is that it is task-agnostic; we generate new tasks (instructions along with instances) from scratch.

3 Method

Annotating large-scale instruction data can be challenging for humans because it requires 1) creativity to come up with novel tasks and 2) expertise for writing the labeled instances for each task. In this section, we detail our process for SELF-INSTRUCT, which refers to the pipeline of generating tasks with a vanilla pretrained language model itself and then conducting instruction tuning with this generated data in order to align the language model to follow instructions better. This pipeline is depicted in Figure 1.

3.1 Defining Instruction Data

The instruction data we want to generate contains a set of instructions $\{I_t\}$, each of which defines a task $t$ in natural language. Each task has one or more input-output instances $(X_t, Y_t)$. A model $M$ is expected to produce the output $y$, given the task instruction $I_t$ and the instance input $x$: $M(I_t, x) = y$, for $(x, y) \in (X_t, Y_t)$. Note that the instruction and instance input do not have a strict boundary in many cases. For example, “write an essay about school safety” can be a valid instruction that we expect models to respond to directly, while it can also be formulated as “write an essay about the following topic” as the instruction, and “school safety” as an instance input. To encourage the diversity of the data format, we allow such instructions that do not require additional input (i.e., $x$ is empty).
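The definition above maps naturally onto a small data structure. The following is a minimal sketch, not the authors' code: the class names `Instance` and `Task`, the helper `render_prompt`, and the `Instruction:/Input:/Output:` text layout are all assumptions made for illustration. It shows how an empty input $x$ can be represented and how the same request can be phrased with or without a separate input.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Instance:
    """One input-output pair (x, y); x is the empty string when no input is needed."""
    x: str
    y: str


@dataclass
class Task:
    """An instruction I_t together with its instances (X_t, Y_t)."""
    instruction: str
    instances: List[Instance] = field(default_factory=list)


def render_prompt(instruction: str, x: str = "") -> str:
    """Build the text a model M would see for (I_t, x) in this hypothetical layout."""
    if x:
        return f"Instruction: {instruction}\nInput: {x}\nOutput:"
    # Instructions without an additional input skip the Input field entirely.
    return f"Instruction: {instruction}\nOutput:"


# The same request, once as a bare instruction and once split into instruction + input.
direct = Task("Write an essay about school safety.", [Instance(x="", y="...")])
split = Task("Write an essay about the following topic.",
             [Instance(x="school safety", y="...")])
```

Both `direct` and `split` are valid under the definition; only the second one produces an `Input:` line when rendered.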

3.2 Automatic Instruction Data Generation

Our pipeline for generating the instruction data consists of four steps: 1) instruction generation, 2) identifying whether the instruction represents a classification task or not, 3) instance generation with the input-first or the output-first approach, and 4) filtering low-quality data.
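One way to read the four steps is as a single round of a loop over the task pool. The sketch below is an assumption-laden outline rather than the released implementation: the function name `bootstrap_round`, the dictionary layout of a task, and the four injected callables (`propose`, `classify`, `make_instances`, `keep`) are hypothetical stand-ins for the LM-backed components described in the following paragraphs.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical task record with keys: instruction, is_classification, instances.
Task = Dict[str, object]


def bootstrap_round(
    pool: List[Task],
    propose: Callable[[List[Task]], List[str]],
    classify: Callable[[str], bool],
    make_instances: Callable[[str, bool], List[Tuple[str, str]]],
    keep: Callable[[Task, List[Task]], bool],
) -> List[Task]:
    """Run steps 1-4 once and return the enlarged task pool (input pool is not mutated)."""
    new_pool = list(pool)
    for instruction in propose(pool):                    # step 1: instruction generation
        is_cls = classify(instruction)                   # step 2: classification identification
        instances = make_instances(instruction, is_cls)  # step 3: instance generation
        task: Task = {
            "instruction": instruction,
            "is_classification": is_cls,
            "instances": instances,
        }
        if keep(task, new_pool):                         # step 4: filtering
            new_pool.append(task)
    return new_pool
```

Calling `bootstrap_round` repeatedly, feeding each result back in as `pool`, gives the iterative growth of the task pool; passing the components as arguments keeps the loop testable without a real model.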

Instruction Generation. SELF-INSTRUCT is based on a finding that large pretrained language models can be prompted to generate new and novel instructions when presented with some existing instructions in the context. This provides us with a way to grow the instruction data from a small set of seed human-written instructions. We propose to generate a diverse set of instructions in a bootstrapping fashion. We initiate the task pool with 175 tasks (1 instruction and 1 instance for each task) written by our authors. For every step, we sample 8 task instructions from this pool as in-context examples. Of the 8 instructions, 6 are from the human-written tasks, and 2 are from the model-generated tasks in previous steps to promote diversity. The prompting template is shown in Table 6.
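The 6-plus-2 sampling described above can be sketched as follows. This is an illustration under assumptions: the function names are hypothetical, the fallback to more human-written instructions when fewer than two model-generated ones exist is a guess about early rounds, and the numbered `Task i:` layout is a placeholder for the actual template in Table 6.

```python
import random
from typing import List, Optional


def sample_in_context_instructions(
    human_written: List[str],
    model_generated: List[str],
    n_total: int = 8,
    n_model: int = 2,
    rng: Optional[random.Random] = None,
) -> List[str]:
    """Pick n_total instructions: up to n_model model-generated, the rest human-written."""
    rng = rng or random.Random()
    # Assumption: if the pool has fewer than n_model generated tasks, use what exists.
    k_model = min(n_model, len(model_generated))
    k_human = min(n_total - k_model, len(human_written))
    picked = rng.sample(model_generated, k_model) + rng.sample(human_written, k_human)
    rng.shuffle(picked)
    return picked


def build_instruction_prompt(examples: List[str]) -> str:
    """Lay out the examples as a numbered list and leave the next slot open for the LM."""
    lines = ["Come up with a series of tasks:"]
    for i, text in enumerate(examples, start=1):
        lines.append(f"Task {i}: {text}")
    lines.append(f"Task {len(examples) + 1}:")
    return "\n".join(lines)
```

Leaving the final numbered slot empty is a common few-shot pattern: the model continues the list, and each continuation is a candidate instruction.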

Classification Task Identification. Because we need two different approaches for classification and non-classification tasks, we next identify whether the generated instruction represents a classification task or not [3]. We prompt vanilla GPT3 few-shot to determine this, using 12 classification instructions and 19 non-classification instructions from the seed tasks. The prompting template is shown in Table 7.
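A few-shot yes/no prompt of this kind could be assembled as in the sketch below. The wording of the prompt, the function names, and the `lm` callable (prompt in, completion out) are assumptions for illustration; the paper's actual template is the one in Table 7.

```python
from typing import Callable, List, Tuple


def build_classification_prompt(examples: List[Tuple[str, bool]], instruction: str) -> str:
    """Few-shot prompt: labeled seed instructions followed by the instruction to judge."""
    lines = [
        "Can the following task be regarded as a classification task "
        "with finite output labels?",
        "",
    ]
    for text, is_cls in examples:
        lines += [f"Task: {text}", f"Is it classification? {'Yes' if is_cls else 'No'}", ""]
    lines += [f"Task: {instruction}", "Is it classification?"]
    return "\n".join(lines)


def is_classification(
    lm: Callable[[str], str],
    examples: List[Tuple[str, bool]],
    instruction: str,
) -> bool:
    """Ask the LM and read the answer from the start of its completion."""
    completion = lm(build_classification_prompt(examples, instruction))
    return completion.strip().lower().startswith("yes")
```

In the setup described above, `examples` would hold the 12 classification and 19 non-classification seed instructions.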

Instance Generation. Given the instructions and their task type, we generate instances for each instruction independently. This is challenging because it requires the model to understand what the target task is, based on the instruction, figure out what additional input fields are needed and generate them, and finally complete the task by producing the output. We found that pretrained language models can achieve this to a large extent when prompted with instruction-input-output in-context examples from other tasks. A natural way to do this is the Input-first Approach, where we can ask a language model to come up with the input fields first based on the instruction, and then produce the corresponding output. This generation order is similar to how models are used to respond to instruction and input, but here with in-context examples from other tasks. The prompting template is shown in Table 8.

However, we found that this approach can generate inputs biased toward one label, especially for classification tasks (e.g., for grammar error detection, it usually generates grammatical input). Therefore, we additionally propose an Output-first Approach for classification tasks, where we first generate the possible class labels, and then condition the input generation on each class label. The prompting template is shown in Table 9 [4]. We apply the output-first approach to the classification tasks identified in the former step, and the input-first approach to the remaining non-classification tasks.
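The difference between the two generation orders can be made concrete with a small sketch. Everything here is an assumption for illustration: the `LM` callable type, the prompt strings, and the choice to issue separate LM calls per field (the templates in Tables 8 and 9 use in-context examples, which are omitted here for brevity).

```python
from typing import Callable, List, Tuple

LM = Callable[[str], str]  # hypothetical interface: prompt in, completion out


def input_first(lm: LM, instruction: str) -> Tuple[str, str]:
    """Generate the input x first, then the output y conditioned on it."""
    x = lm(f"Instruction: {instruction}\nInput:").strip()
    y = lm(f"Instruction: {instruction}\nInput: {x}\nOutput:").strip()
    return x, y


def output_first(lm: LM, instruction: str) -> List[Tuple[str, str]]:
    """Generate the class labels first, then one input conditioned on each label."""
    labels_text = lm(f"Instruction: {instruction}\nPossible class labels (comma-separated):")
    labels = [label.strip() for label in labels_text.split(",") if label.strip()]
    instances = []
    for label in labels:
        x = lm(f"Instruction: {instruction}\nClass label: {label}\nInput:").strip()
        instances.append((x, label))
    return instances


def generate_instances(lm: LM, instruction: str, is_classification: bool) -> List[Tuple[str, str]]:
    """Dispatch on task type: output-first for classification, input-first otherwise."""
    if is_classification:
        return output_first(lm, instruction)
    return [input_first(lm, instruction)]
```

Because `output_first` fixes the label before the input is written, every label receives at least one example, which is the property that counters the label bias described above.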

Filtering and Postprocessing. To encourage diversity, a new instruction is added to the task pool only when its ROUGE-L overlap with any existing instruction is less than 0.7. We also exclude instructions that contain some specific keywords (e.g., images, pictures, graphs) that usually cannot be processed by language models. When generating new instances for each instruction, we filter out instances that are exactly the same or those with the same input but different outputs.
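The filtering rules above can be sketched in plain Python. Several choices here are assumptions rather than the paper's implementation: ROUGE-L is computed as an LCS-based F-measure over lowercased whitespace tokens (no stemming), the blocked word list only covers the three examples named in the text, and instances whose input appears with conflicting outputs are all dropped, which is one possible reading of the last rule.

```python
import re
from typing import Dict, List, Set, Tuple

BLOCKED_WORDS = {"image", "images", "picture", "pictures", "graph", "graphs"}  # illustrative


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence, using a rolling DP row."""
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0]
        for j, tok_b in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if tok_a == tok_b else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate: str, reference: str) -> float:
    """Simplified ROUGE-L F-measure over lowercased whitespace tokens."""
    c, r = candidate.lower().split(), reference.lower().split()
    lcs = lcs_length(c, r) if c and r else 0
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(c), lcs / len(r)
    return 2 * precision * recall / (precision + recall)


def keep_instruction(new: str, pool: List[str], threshold: float = 0.7) -> bool:
    """Reject blocked keywords (whole words only) and near-duplicates of pool entries."""
    if BLOCKED_WORDS & set(re.findall(r"[a-z]+", new.lower())):
        return False
    return all(rouge_l(new, old) < threshold for old in pool)


def filter_instances(instances: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Drop exact duplicates and every instance whose input has conflicting outputs."""
    outputs_by_input: Dict[str, Set[str]] = {}
    for x, y in instances:
        outputs_by_input.setdefault(x, set()).add(y)
    kept, seen = [], set()
    for x, y in instances:
        if len(outputs_by_input[x]) > 1 or (x, y) in seen:
            continue
        seen.add((x, y))
        kept.append((x, y))
    return kept
```

Matching keywords on whole words rather than substrings avoids rejecting instructions such as "Write a paragraph about ..." merely because "paragraph" contains "graph".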

[3] More concretely, we regard tasks that have a limited and small output label space as classification tasks.

[4] In this work, we use a fixed set of seed tasks for prompting the instance generation, and thus only generate a small number of instances per task in one round. Future work can use randomly sampled tasks to prompt the model to generate a larger number of instances in multiple rounds.
