


Towards Trustworthy Large Language Models in Industry Domains

INF-Team

July 04, 2024

Abstract

This report addresses the challenges and strategies for mitigating hallucinations in large language models (LLMs), particularly in domain-specific applications. Hallucinations refer to the generation of unrealistic or illogical outputs by LLMs. We explore several methods to reduce hallucinations, including using high-quality domain-specific data for training, ensuring that the model stays up-to-date with new knowledge, and employing alignment techniques to ensure that the LLM adheres to human instructions. A key proposition is the adoption of neuro-symbolic systems, which combine large-scale deep learning models with symbolic AI. These systems leverage neural networks for fast “black box” probabilistic predictions while also enabling “white box” logical reasoning. The integration of these approaches represents a significant technical direction for future artificial general intelligence and provides a “gray box” approach to developing trustworthy LLMs for industrial applications. This dual capability enhances logical reasoning and improves explainability. In addition, we detail our efforts to construct domain-specific LLMs for finance and healthcare. Using anti-hallucination strategies, our finance LLM outperforms GPT-4 on the CFA tests, while our healthcare LLM ranks first on the public MedBench competition leaderboard.

1 Introduction

With the unprecedented development of large language models (LLMs), they are now used to improve communication, generate creative text, translate languages effectively, and even assist scientific research. However, LLMs are notorious for yielding unreliable outputs, which greatly hinders their application in real-world tasks, especially in high-stakes decision-making in domains such as healthcare, asset investment, and criminal justice.

One of the key challenges is the “hallucination” problem, whereby LLMs may output content that seems reasonable but is, in fact, incorrect or illogical. As an inherent limitation of LLMs [51], hallucination is inevitable. Hallucinations can be divided into two categories [13,14]. The first is factuality hallucination, where the generated content is inconsistent with real-world facts and contains unexpected fictitious concepts and plots. The second is faithfulness hallucination, where the generated content is inconsistent with the input instructions or logic. Both categories pose serious obstacles to ensuring the accuracy and reliability of model outputs in industry applications.

Domain-specific LLMs focus on understanding and responding to a particular field or industry, e.g., finance and healthcare, aiming to resolve domain-specific tasks as highly trained professionals would. In real-world industries where domain-specific LLMs may play crucial roles in decision-making, the demand for trustworthiness is even higher. Therefore, domain-specific LLMs must address the major limitation discussed above, i.e., hallucinations.

Although the massive training data of LLMs cover a wide range of topics, a considerable portion of real-world domain knowledge is long-tail, and the scarcity of domain data may contribute to hallucinations. In specific industry domains, general LLMs may lack professional knowledge and fail to follow technical instructions because of insufficient domain data at the training stage.

To mitigate factuality hallucination, an effective approach is to address data scarcity in training. Feasible solutions include curating high-quality factual data specifically for the domain, developing automatic data cleaning and selection techniques, and designing execution engines for high-quality synthetic data. Training on large volumes of domain-specific data can greatly reduce the model’s tendency to fabricate information.

Continuous training with high-quality domain-specific data helps LLMs acquire the domain knowledge needed to understand technical nuances in context and instructions, thereby alleviating faithfulness hallucinations as well. To further reduce faithfulness hallucination and improve productivity, we resort to alignment techniques to ensure that LLMs actively cooperate with professional instructions to achieve specific goals. Meanwhile, we design reward systems that motivate LLMs to behave consistently with human values, and employ reinforcement learning to learn from preference feedback in a human-in-the-loop system that provides safety guidance and supervision.
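The reward-learning step is only named above. For reference, one standard formulation (an assumption here, not confirmed as the authors' exact objective) fits a reward model $r_\phi$ to human preference pairs with a Bradley-Terry pairwise loss, where $y_w$ is the preferred and $y_l$ the rejected response to prompt $x$ and $\sigma$ is the logistic function, and then optimizes the policy $\pi_\theta$ against $r_\phi$ with a KL penalty of strength $\beta$ toward a reference model $\pi_{\text{ref}}$:

```latex
\mathcal{L}_{\text{RM}}(\phi) = -\,\mathbb{E}_{(x,\,y_w,\,y_l)\sim\mathcal{D}}
  \left[\log \sigma\big(r_\phi(x, y_w) - r_\phi(x, y_l)\big)\right]

\max_{\pi_\theta}\;
  \mathbb{E}_{x\sim\mathcal{D},\; y\sim\pi_\theta(\cdot\mid x)}\left[r_\phi(x, y)\right]
  - \beta\,\mathrm{KL}\big(\pi_\theta(\cdot\mid x)\,\|\,\pi_{\text{ref}}(\cdot\mid x)\big)
```

The KL term discourages the aligned policy from drifting far from the reference model while it maximizes the learned reward.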

As black-box models, LLMs cannot always explain their outputs correctly. Hallucination is an intrinsic obstacle to consistently producing reliable explanations, even when LLMs are prompted to explain step by step. Research on interpretability for LLMs is still developing. For example, dictionary learning from Anthropic is a tool for understanding the correspondence between model components and particular inputs [3]. The method has improved our analytic capacity to break down the complexity of LLMs into more understandable features. However, exploring all the internal features that LLMs learn during training is still cost-prohibitive, and effectively manipulating specific features to obtain predictably superior behavior remains an open problem. Lack of explainability is another obstacle to LLMs gaining trust in high-stakes applications where a transparent decision process is critical.

To improve the explainability of LLM behavior, we can either dive into the model’s internal mechanisms with tools such as dictionary learning to analyze the generation process, or prompt LLMs to carry out reflection and verification step by step and assign confidence scores to their responses [7]. It is also possible to produce counterfactual explanations by providing alternative scenarios in which the output of the LLM would change, offering insights into its reasoning process. While these methods allow users to assess the reliability of the information, the interpretations themselves are contaminated by the intrinsic hallucination within LLMs. To break through these innate limitations, we have introduced neural symbolic systems to help LLMs gain explainability and transparency in content generation.

Neural symbolic systems are an emerging area of AI that aims to combine the strengths of two different AI techniques, i.e., deep learning and symbolic AI. LLMs are very successful exemplars of deep learning, which excels at learning from vast amounts of data and generating creative text but struggles with tasks requiring reasoning, logic, and explainability. Symbolic AI uses symbols and rules to represent knowledge and excels in logical reasoning and explainability; however, it can be less efficient at learning from data. To bridge this gap by integrating both approaches, we leverage the reading comprehension capabilities of LLMs to process raw data and generate an initial understanding. The preliminary instances are then passed to our in-house symbolic reasoning engine, which performs reasoning on domain-specific causal graphs to make decisions. The final output may combine multiple interactive results from both modules. The in-house symbolic reasoning engine can visualize all feasible reasoning paths, offering more logical and explainable outputs. We call this proposal a unique “gray box” approach to trustworthy LLMs in industry domains.
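The report does not describe the engine's internals. As a minimal sketch, assuming the causal graph is stored as a directed adjacency list and that the LLM stage has already mapped raw text onto graph nodes, "visualizing all feasible reasoning paths" can start from enumerating every simple path between an extracted fact and a conclusion with depth-first search. The graph, node names, and function names below are hypothetical.

```python
from typing import Dict, List

# Hypothetical causal graph: each key is a cause, each listed node an effect.
CausalGraph = Dict[str, List[str]]

EXAMPLE_GRAPH: CausalGraph = {
    "rate_hike": ["financing_cost_up", "credit_risk_up"],
    "financing_cost_up": ["cash_flow_pressure"],
    "revenue_decline": ["cash_flow_pressure"],
    "cash_flow_pressure": ["credit_risk_up"],
}


def all_reasoning_paths(graph: CausalGraph, source: str, target: str) -> List[List[str]]:
    """Enumerate every simple path from source to target using iterative depth-first search."""
    paths: List[List[str]] = []
    stack = [(source, [source])]
    while stack:
        node, path = stack.pop()
        if node == target:
            paths.append(path)
            continue
        for nxt in graph.get(node, []):
            if nxt not in path:  # never revisit a node, so every path is simple
                stack.append((nxt, path + [nxt]))
    return sorted(paths)


def explain(graph: CausalGraph, extracted_facts: List[str], conclusion: str) -> Dict[str, List[List[str]]]:
    """Map each fact extracted by the (hypothetical) LLM stage to all causal chains reaching the conclusion."""
    return {fact: all_reasoning_paths(graph, fact, conclusion) for fact in extracted_facts}


if __name__ == "__main__":
    for fact, chains in explain(EXAMPLE_GRAPH, ["rate_hike", "revenue_decline"], "credit_risk_up").items():
        for chain in chains:
            print(" -> ".join(chain))
```

Each returned chain is an explicit, human-readable justification, which is what makes the symbolic half of the "gray box" inspectable; path enumeration is exponential in the worst case, so a real engine would presumably bound path length or prune by edge confidence.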

The rest of the report is organized as follows. In Section 2, we detail our approach to implementing trustworthy domain-specific LLMs, including high-quality data collection, alignment techniques, and neural symbolic computation. In Sections 3 and 4, we introduce two domain-specific LLMs, for healthcare and finance, respectively. Our approach to trustworthy domain-specific LLMs is compatible with any open-source foundation model. To demonstrate its feasibility, we use an in-house 34B foundation model to develop our healthcare LLM, and choose the open-source Qwen2-72B base model for continuous training and instruction alignment to build our finance LLM. In Section 5, we conclude and discuss directions for future work.

2 Methodology Overview

In this section, we present our methodology for constructing trustworthy LLMs in industry domains, which consists of three parts: high-quality data preparation, alignment techniques, and neuro-symbolic computing techniques.

2.1 High-quality Data Preparation

2.1.1 General Data Processing Pipeline

High-quality training data are essential for effective large language model (LLM)

training. To achieve this, we have amassed a substantial dataset. The primary

3

sources of text data include Common Crawl, Wikipedia, books, academic pa-

pers, journals, patents, news articles, and educational resources for K-12. For

code data, the main sources are GitHub and Stack Overflow. Following data

collection, we perform data cleansing, which involves three main steps: filtering,

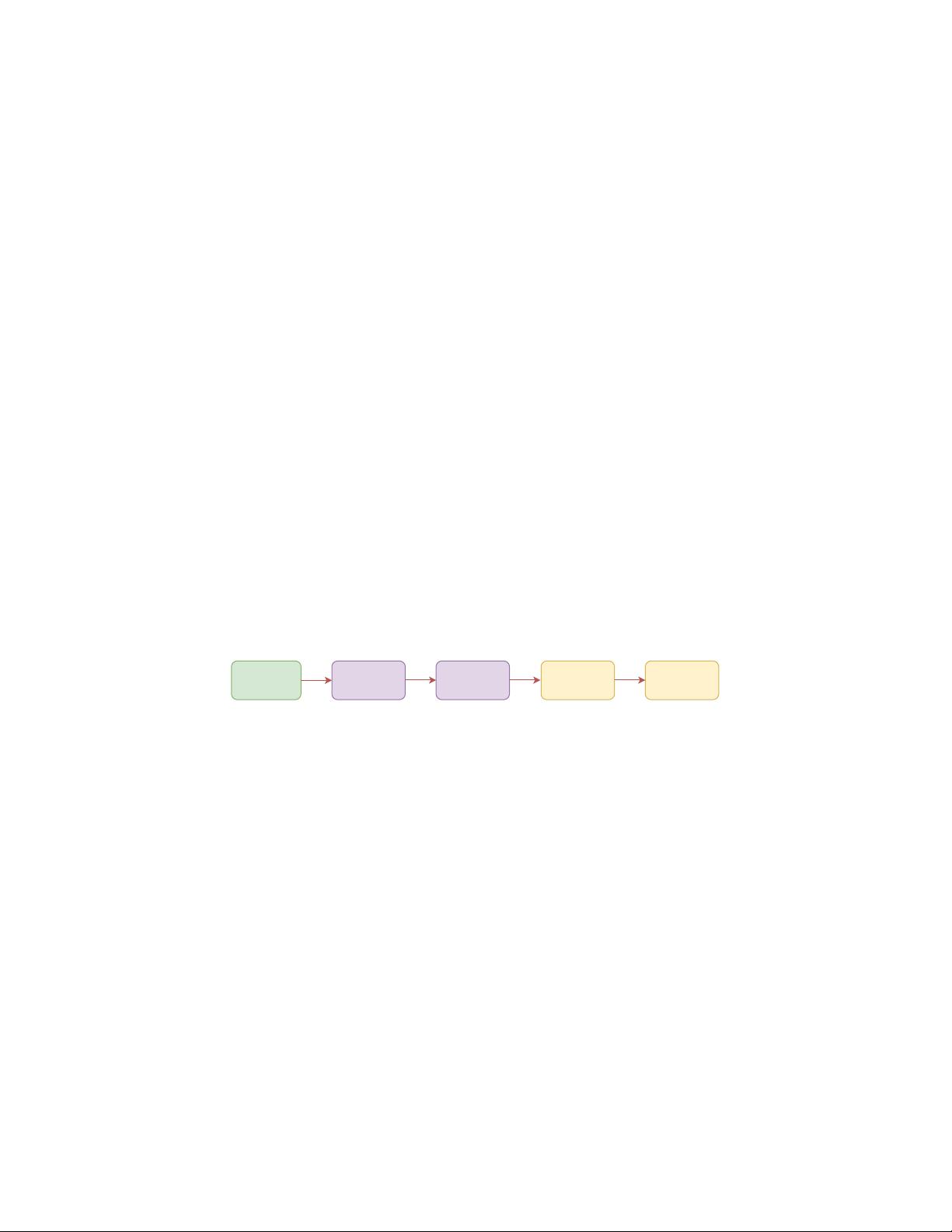

deduplication, and selection. The overall process is illustrated in Fig. 1.

Filtering: For the filtering process, we employ heuristic rules to filter the text, which helps avoid selection bias. These heuristic rules allow us to eliminate low-quality data effectively, and different rules are applied to different types of text. The filtering primarily focuses on removing highly repetitive texts using n-gram repetition detection and sentence-level detection. Additionally, we created a list of sensitive words and removed any documents that contained those words or personally identifiable information.
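The report does not publish its rules or thresholds. A minimal sketch of this kind of filter, assuming Gopher-style repetition heuristics (share of repeated word n-grams, share of repeated sentences), a caller-supplied sensitive-word list, and two illustrative PII regexes, could look like the following; every threshold and pattern here is hypothetical. Tokenization is by whitespace, so Chinese text would need a word segmenter first.

```python
import re
from collections import Counter
from typing import Iterable, List

# Hypothetical thresholds: the report does not publish its rule values.
NGRAM_N = 3
MAX_DUP_NGRAM_FRAC = 0.2
MAX_DUP_SENTENCE_FRAC = 0.3

# Hypothetical PII patterns: e-mail addresses and 11-digit mobile numbers.
PII_PATTERNS = [
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),
    re.compile(r"\b1\d{10}\b"),
]

# Sentence delimiters, including the Chinese full stop, exclamation and question marks.
SENTENCE_SPLIT = re.compile(r"[.!?\u3002\uff01\uff1f\n]+")


def dup_ngram_fraction(words: List[str], n: int = NGRAM_N) -> float:
    """Fraction of n-gram occurrences whose n-gram occurs more than once in the text."""
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not ngrams:
        return 0.0
    counts = Counter(ngrams)
    return sum(c for c in counts.values() if c > 1) / len(ngrams)


def dup_sentence_fraction(text: str) -> float:
    """Fraction of sentences that repeat an earlier sentence verbatim."""
    sentences = [s.strip() for s in SENTENCE_SPLIT.split(text) if s.strip()]
    if not sentences:
        return 0.0
    return 1 - len(set(sentences)) / len(sentences)


def keep_document(text: str, sensitive_words: Iterable[str]) -> bool:
    """Apply the repetition, sensitive-word, and PII rules; True means keep."""
    if dup_ngram_fraction(text.split()) > MAX_DUP_NGRAM_FRAC:
        return False
    if dup_sentence_fraction(text) > MAX_DUP_SENTENCE_FRAC:
        return False
    if any(word in text for word in sensitive_words):
        return False
    return not any(p.search(text) for p in PII_PATTERNS)
```

Because each rule is a cheap per-document check, rules can be swapped per text type (web pages, books, code comments), which matches the report's note that different rules apply to different types of text.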

Deduplication: Deduplication includes fuzzy deduplication and exact deduplication [15]. For fuzzy deduplication, we employ MinHash-LSH for approximate deduplication. The process involves several steps: (1) standardize the text, split it into sequences using text segmentation, and apply n-gram processing to the sequences; (2) compute MinHash values and compress them into a set of bucketed hash values using locality-sensitive hashing (LSH); and (3) perform approximate deduplication based on these hashes. We then use a suffix array algorithm for exact deduplication. This method includes: (1) dividing files according to memory limitations; (2) loading them into memory to compute the suffix array; and (3) identifying duplicate intervals and deleting any entire document whose duplicated content exceeds a predefined threshold (to maintain text integrity). This step requires substantial memory and is therefore performed last.
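The three fuzzy-deduplication steps can be sketched in pure Python as below. This is an illustrative sketch, not the report's implementation: it assumes character 5-gram shingles, 64 hash functions split into 16 bands of 4 rows, seeded BLAKE2b hashes in place of random permutations, and an explicit Jaccard check on LSH candidates (a common extra safeguard, assumed here). All parameter values are hypothetical; libraries such as datasketch provide production `MinHash` and `MinHashLSH` classes.

```python
import hashlib
import re
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical parameters: 64 hash functions split into 16 bands of 4 rows.
NUM_PERM = 64
BANDS = 16
ROWS = NUM_PERM // BANDS
SHINGLE_N = 5  # character n-gram size


def normalize(text: str) -> str:
    """Step (1a): lowercase and collapse punctuation and whitespace to single spaces."""
    return re.sub(r"\W+", " ", text.lower()).strip()


def shingles(text: str, n: int = SHINGLE_N) -> Set[str]:
    """Step (1b): character n-grams of the normalized text."""
    t = normalize(text)
    return {t[i:i + n] for i in range(max(1, len(t) - n + 1))}


def seeded_hash(s: str, seed: int) -> int:
    """One member of a hash family; the seed is passed as the BLAKE2b salt."""
    digest = hashlib.blake2b(s.encode("utf-8"), digest_size=8,
                             salt=seed.to_bytes(16, "little")).digest()
    return int.from_bytes(digest, "little")


def minhash(sh: Set[str]) -> Tuple[int, ...]:
    """Step (2a): the minimum hash value of the shingle set under each hash function."""
    return tuple(min(seeded_hash(s, seed) for s in sh) for seed in range(NUM_PERM))


def jaccard(a: Set[str], b: Set[str]) -> float:
    return len(a & b) / len(a | b) if a | b else 1.0


def lsh_dedup(docs: Dict[str, str], threshold: float = 0.8) -> List[str]:
    """Return ids to keep; a later document is dropped if it near-duplicates a kept one."""
    buckets: Dict[Tuple[int, Tuple[int, ...]], List[str]] = defaultdict(list)
    kept: Dict[str, Set[str]] = {}
    keep: List[str] = []
    for doc_id, text in docs.items():
        sh = shingles(text)
        sig = minhash(sh)
        # Step (2b): each band of ROWS signature values becomes one bucket key.
        keys = [(b, sig[b * ROWS:(b + 1) * ROWS]) for b in range(BANDS)]
        # Step (3): documents sharing any bucket are candidates; confirm with exact Jaccard.
        candidates = {c for k in keys for c in buckets.get(k, [])}
        if any(jaccard(sh, kept[c]) >= threshold for c in candidates):
            continue
        for k in keys:
            buckets[k].append(doc_id)
        kept[doc_id] = sh
        keep.append(doc_id)
    return keep
```

Banding is what makes this scale: only documents that collide in at least one band are ever compared, instead of all pairs.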

[Figure 1 stages: Source Document; Quality Signal Computation; Fuzzy Deduplication; Exact Deduplication; Filtering]

Figure 1: The figure illustrates the primary workflow of our data-cleaning process. The purple sections indicate the filtering stages, while the yellow sections represent the deduplication process.
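The exact-deduplication step can be sketched for a single in-memory shard, which corresponds to step (2) after the files have been split in step (1). This is a minimal sketch under stated assumptions: a separator character absent from the corpus, a hypothetical minimum duplicate length `min_len`, a hypothetical document-level threshold `max_dup_frac`, and the choice to keep the earliest occurrence of each repeated span so that only later copies count as duplicated. The suffix array is built naively by sorting suffixes; real pipelines use a linear-time construction.

```python
from typing import List

SEP = "\x00"  # assumed never to occur inside document text


def build_suffix_array(s: str) -> List[int]:
    """Naive construction by sorting suffixes; fine for a sketch, production code uses SA-IS or similar."""
    return sorted(range(len(s)), key=lambda i: s[i:])


def lcp(s: str, i: int, j: int) -> int:
    """Length of the common prefix of suffixes i and j, never extending across a separator."""
    k = 0
    while i + k < len(s) and j + k < len(s) and s[i + k] == s[j + k] and s[i + k] != SEP:
        k += 1
    return k


def exact_dedup(docs: List[str], min_len: int = 20, max_dup_frac: float = 0.5) -> List[int]:
    """Return indices of documents to keep after suffix-array duplicate detection."""
    s = SEP.join(docs) + SEP
    owner: List[int] = []  # document index of each position, -1 for separators
    for d, text in enumerate(docs):
        owner.extend([d] * len(text))
        owner.append(-1)

    sa = build_suffix_array(s)
    dup = [False] * len(s)

    def mark(group: List[int]) -> None:
        # Suffixes in one group share their first min_len characters.
        # Keep the earliest occurrence; mark the same span at every later position.
        first = min(group)
        for p in group:
            if p != first:
                for q in range(p, p + min_len):
                    dup[q] = True

    group = [sa[0]]
    for prev, cur in zip(sa, sa[1:]):
        if lcp(s, prev, cur) >= min_len:
            group.append(cur)  # suffixes sharing a min_len prefix are adjacent in sa
        else:
            mark(group)
            group = [cur]
    mark(group)

    dup_chars = [0] * len(docs)
    for pos, d in enumerate(owner):
        if d >= 0 and dup[pos]:
            dup_chars[d] += 1
    return [d for d, text in enumerate(docs)
            if not text or dup_chars[d] / len(text) <= max_dup_frac]
```

Dropping whole documents above the threshold, rather than cutting out duplicated spans, mirrors the report's stated aim of maintaining text integrity.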

2.1.2 Recalling High Quality Data from Common Crawl

The Common Crawl (CC) dataset contains an immense collection of web pages. Traditionally, our processing of CC data has been limited to filtering and deduplication, which has prevented more advanced analysis of this extensive dataset. However, to identify reliable and high-quality data across various fields, we need a more sophisticated processing method. We propose a fine-grained division strategy for CC data. First, we segment the URLs in all snapshots of the CC dataset by base URL (e.g., www.google.com is considered a base URL). We count the occurrences of each base URL and rank them in descending order. Our findings indicate that the top 2 million base URLs account for approximately 65% of the entire CC dataset. Therefore, we believe that annotating these base URLs with their type, topic, and language will provide valuable information. This method allows a preliminary fine-grained segmentation of CC data, although it may introduce some inaccuracies.
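A minimal sketch of the ranking step follows, assuming the base URL is the URL's host name (matching the www.google.com example) and that page URLs are available as plain strings; the function names are illustrative only. `top_k_coverage` computes the quantity behind the 65% figure: the fraction of pages held by the k most frequent base URLs.

```python
from collections import Counter
from typing import Iterable, List, Tuple
from urllib.parse import urlsplit


def base_url(url: str) -> str:
    """Host name of a URL, lowercased and without port, e.g. 'www.google.com'."""
    return urlsplit(url).hostname or ""


def rank_base_urls(urls: Iterable[str]) -> List[Tuple[str, int]]:
    """Count pages per base URL and sort by count, most frequent first."""
    return Counter(base_url(u) for u in urls).most_common()


def top_k_coverage(ranked: List[Tuple[str, int]], k: int) -> float:
    """Share of all pages that belong to the k most frequent base URLs."""
    total = sum(count for _, count in ranked)
    return sum(count for _, count in ranked[:k]) / total if total else 0.0
```

At CC scale the counts would presumably be computed per snapshot and summed across snapshots with a distributed job; that aggregation is omitted here.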

High-quality data plays a crucial role in the capabilities of general models [54] [55], but such corpora are extremely scarce. Therefore, we employ a method similar to the data-recall mechanism in DeepSeek-Math [34]. This method recalls high-quality data from Common Crawl (CC), focusing on three domains: code, math, and Wiki. The code and math data enhance the model’s reasoning capabilities, while the Wiki data enriches the model’s knowledge. This process includes seed collection, URL aggregation, and fastText-based recall, as shown in Fig. 2.

Seed collection: For mathematical and code data, we choose OpenWebMath [27], StackOverflow pages, and Wikipedia pages as our initial English seeds. Publicly available Chinese training data for mathematics and code are limited. Following AutoMathText [59], we prompt a base LLM to autonomously annotate data for topic relevance and educational value, and then retrieve the top 50K entries as initial Chinese seeds. For knowledge, we employ an LLM to annotate Wikipedia data with educational scores and then train a classifier. We then collect 50,000 high-quality seeds from wiki sources such as Wikipedia. We train a fastText model using the collected seed data as positive samples and random CC documents as negative samples.
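fastText's Python bindings train such a classifier with `fasttext.train_supervised`, reading a text file whose lines begin with `__label__` tags. To keep the sketch below dependency-free, it swaps in a different technique: a multinomial naive Bayes bag-of-words classifier, which plays the same role of scoring CC pages by their similarity to the seed data. The class name, tokenizer, and 0.5 recall threshold are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    return re.findall(r"\w+", text.lower())


class SeedClassifier:
    """Multinomial naive Bayes over word unigrams, standing in for fastText (assumption)."""

    def fit(self, positives: List[str], negatives: List[str]) -> "SeedClassifier":
        # Label 1 = seed data (positive), label 0 = random CC pages (negative).
        self.counts = {1: Counter(), 0: Counter()}
        for label, docs in ((1, positives), (0, negatives)):
            for d in docs:
                self.counts[label].update(tokenize(d))
        self.vocab = set(self.counts[1]) | set(self.counts[0])
        self.totals = {k: sum(c.values()) for k, c in self.counts.items()}
        n = len(positives) + len(negatives)
        self.log_prior = {1: math.log(len(positives) / n), 0: math.log(len(negatives) / n)}
        return self

    def _log_score(self, tokens: List[str], label: int) -> float:
        # Laplace-smoothed log-likelihood plus the class log-prior.
        v = len(self.vocab)
        return self.log_prior[label] + sum(
            math.log((self.counts[label][t] + 1) / (self.totals[label] + v)) for t in tokens)

    def prob_positive(self, text: str) -> float:
        toks = [t for t in tokenize(text) if t in self.vocab]  # ignore unseen words
        lp, ln = self._log_score(toks, 1), self._log_score(toks, 0)
        m = max(lp, ln)  # subtract the max for numerical stability
        return math.exp(lp - m) / (math.exp(lp - m) + math.exp(ln - m))


def recall_pages(clf: SeedClassifier, pages: List[str], threshold: float = 0.5) -> List[str]:
    """Pages whose positive-class probability reaches the (hypothetical) threshold."""
    return [p for p in pages if clf.prob_positive(p) >= threshold]
```

Either model works for this stage because recall only needs a fast, cheap score per page; fastText's advantage at CC scale is speed and subword features.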

URL aggregation: Because the seeds lack diversity, many target pages remain uncollected after the first round of recall. We therefore enhance seed diversity through URL aggregation: we manually annotate sub-URLs (e.g., cloud.tencent.com/developer) within domains where over 10% of the pages are hit, and incorporate the uncollected pages under them into the seed set.
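The automatic parts of URL aggregation can be sketched as two helpers, assuming a "domain" is the URL host name and that the manual annotation step yields a list of approved host-plus-path prefixes such as `cloud.tencent.com/developer`. The first helper flags domains whose hit ratio exceeds 10% for human review; the second collects not-yet-recalled pages under the approved sub-URLs as new seeds. Function names are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List
from urllib.parse import urlsplit

HIT_RATIO_THRESHOLD = 0.10  # "over 10% of the pages are hit"


def domain(url: str) -> str:
    return urlsplit(url).hostname or ""


def host_path(url: str) -> str:
    parts = urlsplit(url)
    return (parts.hostname or "") + parts.path


def candidate_domains(all_urls: Iterable[str], recalled_urls: Iterable[str],
                      threshold: float = HIT_RATIO_THRESHOLD) -> Dict[str, float]:
    """Domains whose share of recalled pages exceeds the threshold, for manual review."""
    total = Counter(domain(u) for u in all_urls)
    hits = Counter(domain(u) for u in set(recalled_urls))
    return {d: hits[d] / total[d] for d in total if hits[d] / total[d] > threshold}


def uncollected_pages(all_urls: Iterable[str], recalled_urls: Iterable[str],
                      approved_prefixes: List[str]) -> List[str]:
    """Pages under manually approved sub-URLs that the classifier has not recalled yet."""
    recalled = set(recalled_urls)
    return [u for u in all_urls
            if u not in recalled and any(host_path(u).startswith(p) for p in approved_prefixes)]
```

Keeping a human in the loop between the two helpers matters because a high hit ratio only says a domain is promising; annotating the specific sub-URL (e.g. a developer section rather than product pages) is what keeps new seeds on-topic.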

Iterative recall: After collecting more diverse seeds, we retrain the fastText model and recall more target webpages. We repeat URL aggregation and fastText retraining until over 98% of the pages recalled in an iteration had already been collected in previous iterations.
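The stopping rule can be sketched as a loop, assuming "over 98% collected" means that more than 98% of the pages recalled in a round were already collected earlier. `train`, `recall`, and `expand_seeds` are hypothetical callables standing in for fastText retraining, scoring CC pages, and URL aggregation; `max_rounds` is an added safety bound not mentioned in the report.

```python
from typing import Any, Callable, Hashable, Set, Tuple


def iterative_recall(
    seeds: Set[Hashable],
    train: Callable[[Set[Hashable]], Any],
    recall: Callable[[Any], Set[Hashable]],
    expand_seeds: Callable[[Set[Hashable], Set[Hashable]], Set[Hashable]],
    stop_overlap: float = 0.98,
    max_rounds: int = 10,
) -> Tuple[Set[Hashable], int]:
    """Retrain and recall until a round brings almost nothing new; return (collected, rounds)."""
    collected: Set[Hashable] = set()
    rounds = 0
    for rounds in range(1, max_rounds + 1):
        model = train(seeds)        # e.g. retrain the page classifier on the current seeds
        recalled = recall(model)    # pages scored above threshold in this round
        overlap = len(recalled & collected) / len(recalled) if recalled else 1.0
        collected |= recalled
        if overlap > stop_overlap:  # over 98% already collected: stop iterating
            break
        seeds = expand_seeds(seeds, recalled)  # e.g. URL aggregation adds new seeds
    return collected, rounds
```

Measuring overlap against everything collected so far, rather than against the previous round alone, makes the criterion a direct test of diminishing returns.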

Figure 2: Illustration of high-quality data recall.
