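YOLOv5 is an efficient and accurate object detection model that is widely used in computer vision and performs especially well at real-time object detection. This project is a simple stop-sign detection model built on YOLOv5 and PyTorch, intended to help autonomous vehicles and other intelligent systems recognize and locate stop signs.

1. **Introduction to YOLOv5** YOLO (You Only Look Once) is a family of single-stage object detectors known for real-time performance and high accuracy. YOLOv5, developed by the Ultralytics team, is one version in this family. It builds on YOLOv3 and YOLOv4 with faster training, higher detection accuracy, and smaller models.

2. **The PyTorch framework** PyTorch is a deep learning framework developed by Facebook AI Research and is popular with developers for its flexibility and ease of use. YOLOv5 is implemented natively in PyTorch, which lets developers build, train, and debug the network quickly.

3. **Model training** Training involves data preprocessing, including image augmentation (such as flipping and scaling) to improve the model's ability to generalize. The training set should contain annotated images of a variety of stop signs, with bounding-box coordinates and a class label for every sign in every image.

4. **Model architecture** At the core of YOLOv5 is a convolutional neural network with three parts: a backbone (a CSP variant of Darknet-53) that extracts features, a neck (spatial pyramid pooling plus a Path Aggregation Network) that fuses features from different levels, and a head that predicts bounding boxes, objectness, and class scores. Because the head predicts on feature maps of several resolutions, the model can detect objects at multiple scales at the same time.

5. **Loss function** During training, the weights are optimized by minimizing a multi-task loss made up of a classification loss, a bounding-box regression loss, and an objectness (confidence) loss, so that the model learns both to recognize and to localize stop signs. In YOLOv5 the box term is based on CIoU, and the objectness and classification terms use binary cross-entropy.

6. **Inference and deployment** Once trained, the model can be deployed in a real application, for example on an autonomous vehicle. At run time it takes camera frames as input, detects stop signs in each frame in real time, and outputs their locations.

7. **Project structure** The archive "Stop-Sign-Detection-main" might contain the following (a generic YOLOv5 project layout; the actual archive contents are listed below):
   - `models/`: pretrained or user-trained model weights.
   - `data/`: the training and validation datasets and their annotation files.
   - `utils/`: Python helper scripts for data processing, training configuration, and saving and loading models.
   - `config.yaml`: a configuration file defining training parameters such as learning rate and batch size.
   - `train.py`: the main training script.
   - `inference.py`: runs the trained model on new images or video streams.

8. **Post-processing** The model outputs bounding-box coordinates with confidence scores. Low-confidence predictions are discarded, and non-maximum suppression (NMS) then removes duplicate, overlapping detections of the same sign, keeping only the most confident box.

9. **Evaluation and optimization** Model performance is measured with standard metrics such as mean average precision (mAP). If performance is poor, it can be improved by tuning hyperparameters, changing the data augmentation strategy, or adjusting the model architecture.

A YOLOv5 (PyTorch) stop-sign detector is a practical computer vision application that combines deep learning with real-time object detection, providing a key visual perception capability for autonomous driving and related fields. With continued training and optimization, its detection accuracy and efficiency can be improved further.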
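Item 3 above describes annotated training images and flip/scale augmentation. As a minimal sketch, assuming YOLOv5's usual label format (one `.txt` file per image, one line `class x_center y_center width height` per object, normalized to [0, 1]) and a hypothetical class index 0 for "stop sign", the pure-Python code below converts a pixel-space box into that format and mirrors it to match a horizontally flipped image. The helper names are illustrative and not taken from this project.

```python
from dataclasses import dataclass


@dataclass
class YoloBox:
    """One object in YOLO label format; all coordinates are normalized to [0, 1]."""
    cls: int
    xc: float
    yc: float
    w: float
    h: float

    def to_line(self) -> str:
        # Format as one line of a YOLO-style .txt label file
        return f"{self.cls} {self.xc:.6f} {self.yc:.6f} {self.w:.6f} {self.h:.6f}"


def from_pixel_xyxy(cls: int, x1: float, y1: float, x2: float, y2: float,
                    img_w: int, img_h: int) -> YoloBox:
    """Convert a pixel-space corner box (x1, y1, x2, y2) to normalized center form."""
    return YoloBox(
        cls=cls,
        xc=(x1 + x2) / 2 / img_w,
        yc=(y1 + y2) / 2 / img_h,
        w=(x2 - x1) / img_w,
        h=(y2 - y1) / img_h,
    )


def hflip(box: YoloBox) -> YoloBox:
    """Mirror a label to match a horizontally flipped image (only x_center changes)."""
    return YoloBox(box.cls, 1.0 - box.xc, box.yc, box.w, box.h)


if __name__ == "__main__":
    # Hypothetical example: a 100x100-pixel stop sign (class 0) in a 640x480 image
    box = from_pixel_xyxy(0, 100, 50, 200, 150, img_w=640, img_h=480)
    print(box.to_line())         # 0 0.234375 0.208333 0.156250 0.208333
    print(hflip(box).to_line())  # 0 0.765625 0.208333 0.156250 0.208333
```

Because the box is stored relative to its center, a horizontal flip only changes `x_center`; any geometric augmentation has to transform the labels together with the pixels in this way.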
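To make the multi-scale head in item 4 concrete, the sketch below counts the raw predictions the network produces. It assumes the standard YOLOv5 layout of three detection layers with strides 8, 16 and 32, three anchors per grid cell, a square input, and a single "stop sign" class; the function name is hypothetical.

```python
STRIDES = (8, 16, 32)   # downsampling of the three detection layers (P3, P4, P5)
ANCHORS_PER_CELL = 3    # anchor boxes predicted at every grid cell


def prediction_layout(img_size: int = 640, num_classes: int = 1):
    """Return (grid sizes per scale, total predictions, values per prediction)."""
    if img_size % max(STRIDES) != 0:
        raise ValueError("input size should be a multiple of the largest stride")
    grids = [img_size // s for s in STRIDES]
    total = sum(ANCHORS_PER_CELL * g * g for g in grids)
    values_per_pred = 5 + num_classes  # x, y, w, h, objectness + one score per class
    return grids, total, values_per_pred


if __name__ == "__main__":
    grids, total, per_pred = prediction_layout(640, num_classes=1)
    print(grids, total, per_pred)  # [80, 40, 20] 25200 6
```

For a 640-pixel input this gives 80×80, 40×40 and 20×20 grids, i.e. 25,200 candidate boxes of 6 values each, which is why the filtering and NMS of item 8 are needed. The finest (stride-8) grid is the one that typically catches small, distant signs.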
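The multi-task loss in item 5 can be illustrated for a single prediction matched to a single ground-truth box. This pure-Python sketch is a simplification under stated assumptions, not YOLOv5's loss code: it uses plain 1 - IoU where YOLOv5 uses CIoU, a hard objectness target of 1.0, and illustrative weights. It skips the class term when there is only one class, since a single-class detector has nothing to classify.

```python
import math


def iou_xyxy(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def bce_with_logits(logit, target):
    """Numerically stable binary cross-entropy computed from a raw logit."""
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


def detection_loss(pred_box, gt_box, obj_logit, cls_logit, num_classes=1,
                   w_box=0.05, w_obj=1.0, w_cls=0.5):
    """Weighted multi-task loss for ONE prediction matched to ONE ground-truth box.

    Assumptions: box term is 1 - IoU (not CIoU), objectness target is 1.0,
    and the weights w_box, w_obj, w_cls are illustrative values.
    """
    box_loss = 1.0 - iou_xyxy(pred_box, gt_box)
    obj_loss = bce_with_logits(obj_logit, 1.0)
    # With a single class there is nothing to classify, so the class term is skipped
    cls_loss = bce_with_logits(cls_logit, 1.0) if num_classes > 1 else 0.0
    return w_box * box_loss + w_obj * obj_loss + w_cls * cls_loss


if __name__ == "__main__":
    pred, gt = (100, 100, 200, 200), (110, 105, 210, 205)
    print(round(detection_loss(pred, gt, obj_logit=2.0, cls_logit=0.0), 4))
```

During training such per-prediction terms are summed over all matched predictions (box and class terms) and over all grid cells (objectness term), then minimized by gradient descent.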
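For the run-time step in item 6, camera frames are typically letterboxed (resized with the aspect ratio preserved, then padded) to the network input size, so predicted boxes must be mapped back to the original frame before their locations are reported. A minimal sketch, assuming a square 640-pixel input with symmetric padding, raw boxes as `(cx, cy, w, h, conf)` in letterboxed pixels, and an illustrative confidence threshold of 0.25; the function names are hypothetical.

```python
def letterbox_params(orig_w, orig_h, new_size=640):
    """Scale factor and symmetric (x, y) padding used to fit a frame into a square input."""
    gain = min(new_size / orig_w, new_size / orig_h)
    pad_x = (new_size - orig_w * gain) / 2
    pad_y = (new_size - orig_h * gain) / 2
    return gain, pad_x, pad_y


def _clip(v, hi):
    """Clamp a coordinate to the range [0, hi]."""
    return min(max(v, 0.0), hi)


def postprocess_frame(raw_dets, orig_w, orig_h, conf_thres=0.25, new_size=640):
    """Map (cx, cy, w, h, conf) boxes from the letterboxed input back to the frame.

    Returns (x1, y1, x2, y2, conf) tuples in original-frame pixels, clipped to
    the frame, for detections whose confidence reaches conf_thres.
    """
    gain, pad_x, pad_y = letterbox_params(orig_w, orig_h, new_size)
    out = []
    for cx, cy, w, h, conf in raw_dets:
        if conf < conf_thres:
            continue  # discard low-confidence predictions
        # Undo the padding first, then undo the resize
        x1 = (cx - w / 2 - pad_x) / gain
        y1 = (cy - h / 2 - pad_y) / gain
        x2 = (cx + w / 2 - pad_x) / gain
        y2 = (cy + h / 2 - pad_y) / gain
        out.append((_clip(x1, orig_w), _clip(y1, orig_h),
                    _clip(x2, orig_w), _clip(y2, orig_h), conf))
    return out


if __name__ == "__main__":
    # Hypothetical raw outputs for a 1280x720 camera frame
    raw = [(320, 320, 64, 64, 0.9), (100, 100, 10, 10, 0.1)]
    print(postprocess_frame(raw, 1280, 720))  # [(576.0, 296.0, 704.0, 424.0, 0.9)]
```

A 1280×720 frame is scaled by 0.5 to 640×360 and padded by 140 pixels above and below, so the padding is subtracted before dividing by the scale. Duplicate boxes that survive the confidence filter are removed by NMS (item 8).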
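Item 7 mentions a configuration file. In the Ultralytics YOLOv5 repository, training is launched with `train.py` and a dataset YAML passed via `--data` (for example `python train.py --img 640 --batch-size 16 --epochs 50 --data stop_sign.yaml --weights yolov5s.pt`, with the epoch count chosen arbitrarily here), while optimizer settings such as the learning rate `lr0` live in a separate hyperparameter YAML passed via `--hyp`. YOLOv5 finds each image's label file by swapping the `images` directory for a sibling `labels` directory. The standard-library sketch below builds a minimal single-class dataset YAML; the folder names and the file name `stop_sign.yaml` are hypothetical, not taken from this project.

```python
from pathlib import Path


def dataset_yaml_text(root: str, class_names: list[str]) -> str:
    """Build a minimal YOLOv5-style dataset description (paths relative to root)."""
    # Naive quoting: assumes class names contain no single quotes
    names = ", ".join(f"'{n}'" for n in class_names)
    return (
        f"path: {root}\n"
        "train: images/train\n"  # hypothetical folder layout
        "val: images/val\n"
        f"nc: {len(class_names)}\n"
        f"names: [{names}]\n"
    )


def write_dataset_yaml(out_path: Path, root: str, class_names: list[str]) -> Path:
    """Write the dataset description to out_path and return the path."""
    out_path.write_text(dataset_yaml_text(root, class_names), encoding="utf-8")
    return out_path


if __name__ == "__main__":
    print(dataset_yaml_text("datasets/stop_sign", ["stop_sign"]), end="")
```

Generating the file from code keeps `nc` and `names` consistent with each other; for a fixed project, writing the YAML by hand is equally valid.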
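The non-maximum suppression step in item 8 is a short greedy algorithm: visit boxes from highest to lowest confidence and keep a box only if it does not overlap an already-kept box by more than an IoU threshold. YOLOv5's own post-processing calls `torchvision.ops.nms`; the pure-Python version below only illustrates the idea, with an illustrative threshold of 0.45 and hypothetical function names.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thres=0.45):
    """Greedy non-maximum suppression; returns kept indices, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap any already-kept box too much
        if all(iou(boxes[i], boxes[k]) <= iou_thres for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(100, 100, 200, 200), (105, 105, 205, 205), (300, 300, 380, 380)]
    scores = [0.9, 0.8, 0.7]
    print(nms(boxes, scores))  # [0, 2]: box 1 overlaps box 0 with IoU of about 0.82
```

Raising the threshold keeps more overlapping boxes (useful when several signs stand close together); lowering it suppresses duplicates more aggressively.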
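Item 9 names mAP as the evaluation metric. For a single class at a fixed IoU threshold, mAP reduces to average precision (AP), the area under the precision-recall curve. The sketch below computes all-point interpolated AP from detections already marked as true or false positives; matching detections to ground truth (for example at IoU ≥ 0.5) is assumed to have been done beforehand. YOLOv5's own evaluation uses a 101-point interpolation and also reports mAP@0.5:0.95 (the mean over IoU thresholds 0.5 to 0.95 in steps of 0.05), so its figures can differ slightly from this sketch.

```python
def average_precision(detections, num_gt):
    """All-point interpolated AP for one class.

    detections: list of (confidence, is_true_positive) pairs.
    num_gt: number of ground-truth boxes of this class.
    """
    if num_gt == 0:
        return 0.0
    dets = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in dets:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Upper envelope: precision at recall r becomes the max precision at recall >= r
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Area under the stepwise precision-recall curve
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


if __name__ == "__main__":
    # Hypothetical: 3 ground-truth stop signs, 4 detections
    dets = [(0.9, True), (0.8, False), (0.7, True), (0.6, False)]
    print(round(average_precision(dets, num_gt=3), 4))  # 0.5556
```

In the example, recall reaches 1/3 at precision 1 and 2/3 at precision 2/3, giving AP = 1/3 + 2/9 = 5/9; the third stop sign is never detected, so the curve stops short of recall 1.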

Stop-Sign-Detection-main.zip (5个子文件)

Stop-Sign-Detection-main.zip (5个子文件)  Stop-Sign-Detection-main

Stop-Sign-Detection-main  Lab-3 Part2 Report.pdf 1.8MB

Lab-3 Part2 Report.pdf 1.8MB singlenode_demo

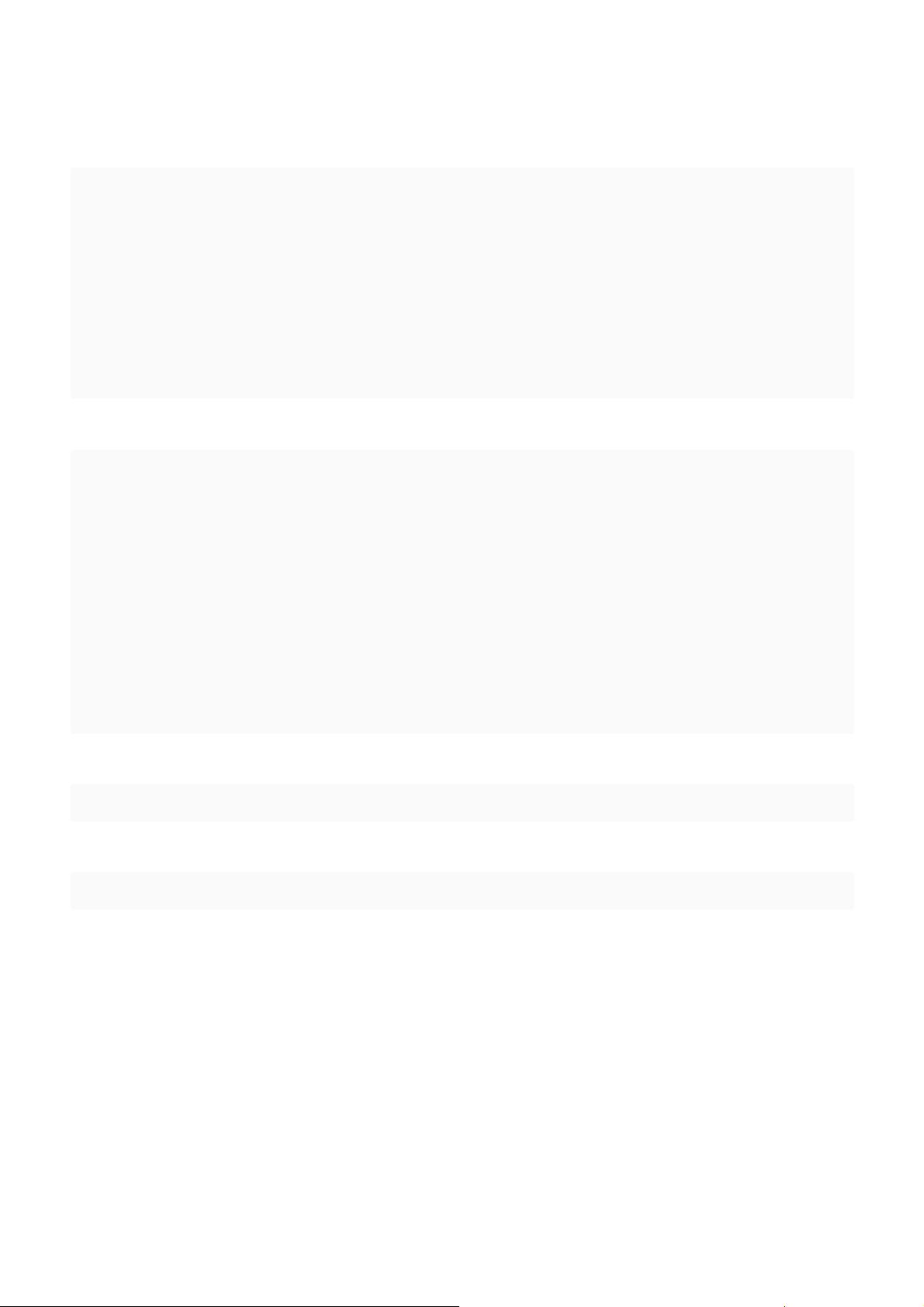

singlenode_demo  TSGStopSigns.ipynb 2.31MB

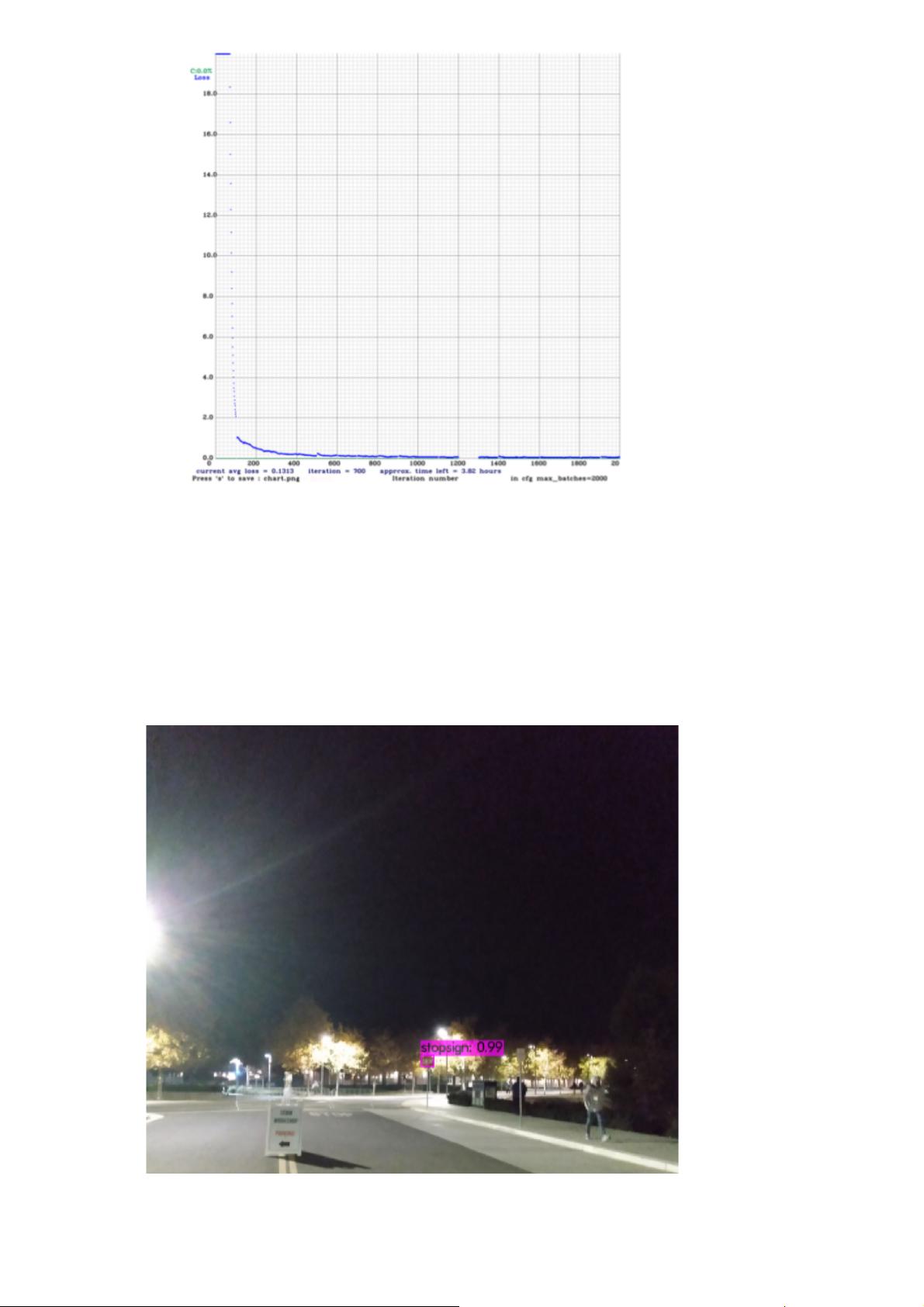

TSGStopSigns.ipynb 2.31MB Train_YOLOv5_ipynb.ipynb 11.53MB

Train_YOLOv5_ipynb.ipynb 11.53MB dist_training

dist_training  train.sbatch 1KB

train.sbatch 1KB train.sh 528B

train.sh 528B- 1
