
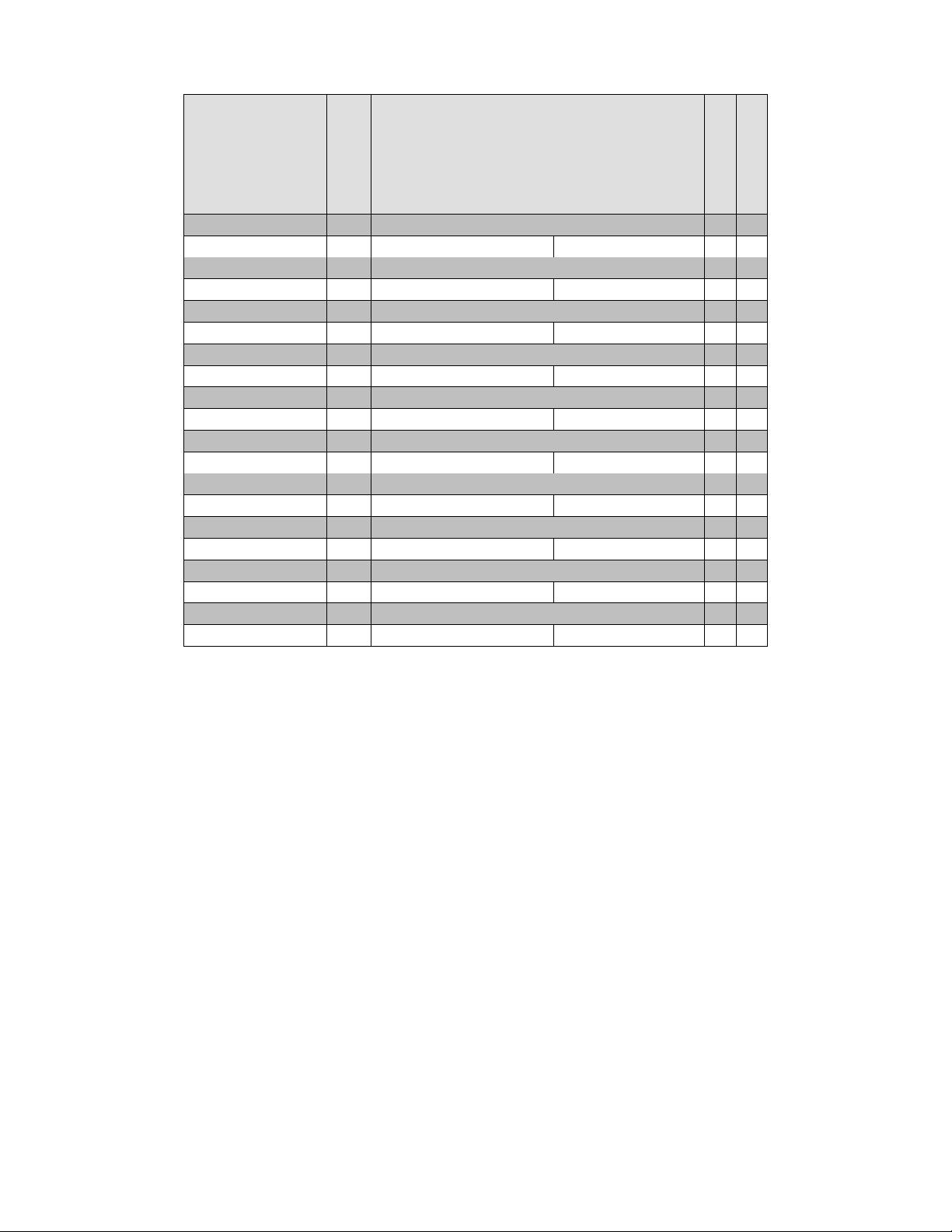

[Figure 1 labels: Adversary; Classification Threshold; Test Sample; Class A; Class B]

Fig. 1: Evasion attack drags test sample from class A to B.

[29], [30], medical analysis [31], [32], [33], [34], [35], access

control [36], [37], [38], [39], among others.

Malware analysis is one of the most critical fields in which ML is heavily employed. Traditional malware detection approaches [40], [41], [42], [43], [44] rely on signatures: unique identifiers of known malware files are maintained in a database and compared against signatures extracted from newly encountered suspicious files. However, several techniques are used to rapidly evolve malware so that it avoids detection (more details in Section 4). As security researchers looked for techniques capable of detecting such sophisticated zero-day and evasive malware, ML-based approaches came to their rescue [45]. Most modern anti-malware engines, such as Windows Defender⁶, Avast⁷, Deep Instinct D-Client⁸ and Cylance Smart Antivirus⁹, are powered by machine learning [46], making them robust against emerging variants and polymorphic malware [47].
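The weakness of signature matching described above can be illustrated with a minimal sketch. It assumes, purely for illustration, that a signature is the SHA-256 digest of the whole file; real engines use richer signatures, and the byte strings and helper names below are hypothetical.

```python
import hashlib


def signature(data: bytes) -> str:
    """Return the SHA-256 digest of a file's bytes, used here as its signature."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical byte string standing in for a known malicious file.
known_malware = b"MZ\x90\x00 hypothetical malicious payload"

# The "database" of signatures of known malware.
signature_db = {signature(known_malware)}


def is_known_malware(data: bytes) -> bool:
    """Flag a file only if its signature is already in the database."""
    return signature(data) in signature_db


# A variant that differs by a single appended byte gets a different digest.
variant = known_malware + b"\x00"

original_flagged = is_known_malware(known_malware)  # True
variant_flagged = is_known_malware(variant)         # False
```

Under this assumption, the exact known file is flagged while a trivially modified variant is not, which is the gap that motivates the ML-based detectors discussed in this section.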

As per some estimates [48], around 12.3 billion devices are connected worldwide, and the spread of malware at this scale can have catastrophic consequences. As such, it is evident that economies worth billions of dollars rely, directly or indirectly, on machine learning’s performance and growth to be protected from the rapidly evolving menace of malware. Despite the existence of numerous malware detection approaches, including ones that leverage ML, recent ransomware attacks, such as the Colonial Pipeline attack, in which operators had to pay around $5 million to recover a 5,500-mile-long pipeline [49], the MediaMarkt attack, worth around $50M in bitcoin payment [50], and the attack on the computer giant Acer [51], highlight the vulnerabilities and limitations of current security approaches and necessitate more robust, real-time, adaptable and autonomous defense mechanisms powered by AI and ML.

The performance of ML models relies on the basic assumption that training and testing are carried out under similar settings and that samples in the training and testing datasets are independent and identically distributed. This assumption is overly simplified and, in many cases, does not hold for real-world use cases in which adversaries deceive ML models into making wrong predictions (i.e., adversarial attacks). In addition to traditional threats such as malware attacks [52], [53], [54], phishing [55], [56], [57], man-in-the-middle attacks [58], [59], [60], denial-of-service [61], [62], [63] and SQL injection [64], [65], [66], adversarial attacks have now emerged as a serious concern, threatening to dismantle and undermine all the progress made in the machine learning domain.

6. https://www.microsoft.com/en-us/windows/comprehensive-security

7. https://www.avast.com/

8. https://www.deepinstinct.com/endpoint-security

9. https://shop.cylance.com/us

Fig. 2: An adversarial example against GoogLeNet [75] on ImageNet [76], demonstrated by Goodfellow et al. [74].

Adversarial attacks are carried out either by poisoning the training data or by manipulating the test data (evasion attacks). Data poisoning attacks [67], [68], [69], [70] have been prevalent for some time but are less scrutinized, as attackers are considered unlikely to have access to the training data. In contrast, evasion attacks, first introduced by Szegedy et al. [71] against deep learning architectures, are carried out by carefully crafting imperceptible perturbations in test samples, forcing models to misclassify them, as illustrated in Figure 1. Here, the attacker’s goal is to drag a test sample across the ML model’s decision boundary by adding a minimal perturbation to that sample. Considering the availability of research work and the higher practical risk, this survey focuses entirely on adversarial evasion attacks carried out against malware detectors.
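The idea of dragging a sample across the decision boundary with a minimal perturbation can be made concrete in the simplest case, a linear classifier. The following is a minimal sketch and not a method taken from the surveyed literature: the weights `w`, bias `b`, sample `x`, the `overshoot` parameter and the helper names are all hypothetical, and the perturbation acts on abstract feature values rather than on a real malware file.

```python
from typing import List


def score(w: List[float], b: float, x: List[float]) -> float:
    """Signed score of a linear classifier: w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w: List[float], b: float, x: List[float]) -> str:
    """Class B on or above the decision boundary, class A below it."""
    return "B" if score(w, b, x) >= 0 else "A"


def minimal_evasion(w: List[float], b: float, x: List[float],
                    overshoot: float = 0.01) -> List[float]:
    """Move x along w just far enough to cross the decision boundary.

    For a linear model, the smallest L2 perturbation that reaches the
    boundary is -(score / ||w||^2) * w; the overshoot pushes slightly past it.
    """
    s = score(w, b, x)
    norm_sq = sum(wi * wi for wi in w)
    step = -(1.0 + overshoot) * s / norm_sq
    return [xi + step * wi for xi, wi in zip(x, w)]


# Hypothetical model and test sample.
w = [1.0, -2.0]
b = 0.5
x = [-1.0, 1.0]

x_adv = minimal_evasion(w, b, x)
label_before = predict(w, b, x)      # "A"
label_after = predict(w, b, x_adv)   # "B"
```

Detectors used in practice are rarely linear, so finding such a perturbation generally requires iterative or gradient-based search rather than a closed-form step.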

Adversarial evasion attacks were initially crafted on images, as the only requirement for a perturbation in an image is that it be imperceptible to the human eye [72], [73]. A very common example of an adversarial attack on images, shown in Figure 2, was performed by Goodfellow et al. [74], where GoogLeNet [75] trained on ImageNet [76] classifies a panda as a gibbon after the addition of very small perturbations. This threat is not limited to experimental research labs but has already been demonstrated successfully in real-world environments. For instance, Eykholt et al. performed sticker attacks on road signs, forcing the image recognition system to detect a 'STOP' sign as a speed limit sign. Researchers from the Chinese technology company Tencent¹⁰ tricked Tesla’s¹¹ Autopilot in a Model S and forced it to switch lanes by adding a few stickers on the road [77]. Such adversarial attacks on real-world applications force us to rethink our increasing reliance on smart technologies like Tesla Autopilot¹².
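The small perturbations in the example by Goodfellow et al. [74] were produced with the fast gradient sign method, which shifts every input feature by a fixed step in the direction that increases the model's loss. The sketch below applies the same idea to a toy logistic regression model. It is an illustration under stated assumptions, not the GoogLeNet setup: the weights, input, label and step size `eps` are made-up values.

```python
import math
from typing import List


def predict_proba(w: List[float], b: float, x: List[float]) -> float:
    """Probability of class 1 under a logistic regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(value: float) -> int:
    """Return -1, 0 or 1 according to the sign of value."""
    return (value > 0) - (value < 0)


def fgsm(w: List[float], b: float, x: List[float], y: int,
         eps: float) -> List[float]:
    """Fast gradient sign step: x + eps * sign(dLoss/dx).

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input is (p - y) * w.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


# Hypothetical model, input and true label.
w = [2.0, -1.0, 0.5]
b = 0.0
x = [0.2, 0.1, 0.1]
y = 1
eps = 0.2

x_adv = fgsm(w, b, x, y, eps)
p_before = predict_proba(w, b, x)     # above 0.5, classified as class 1
p_after = predict_proba(w, b, x_adv)  # below 0.5, classified as class 0
```

Each feature changes by at most `eps`, which is what keeps the perturbation small in the max-norm sense while still flipping the prediction in this toy case.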

However, adversarial generation is a completely different game in the malware domain compared to computer vision, due to the increased number of constraints. Perturbations in malware files must be generated in a way that affects neither their functionality nor their executability. Adversarial evasion attacks on malware are carried out by manipulating or inserting a few ineffectual

10. https://www.tencent.com/

11. https://www.tesla.com/

12. https://www.tesla.com/autopilot
