transferred sentence can be aligned with those in

the source sentence. As shown in Fig 1(b), we can

align “Not” with “Not”, “terrible” with “perfect”,

and leave only a few words unaligned. This suggests

that humans regard the alignments between words

as a key aspect of content preservation, yet these

alignments are not explicitly modeled by existing

cycle-loss-based models.

Second, existing models use the cycle loss to

align sentences in two stylistic text spaces, which

lacks control at the word level. For example, in

sentiment transfer, “tasty” should be mapped to

“awful” (because they both depict food tastes) but

not “expensive”. We utilize a non-autoregressive

generator to model the word-level transfer, where

the transferred words are predicted based on con-

textual representations of the aligned source words.

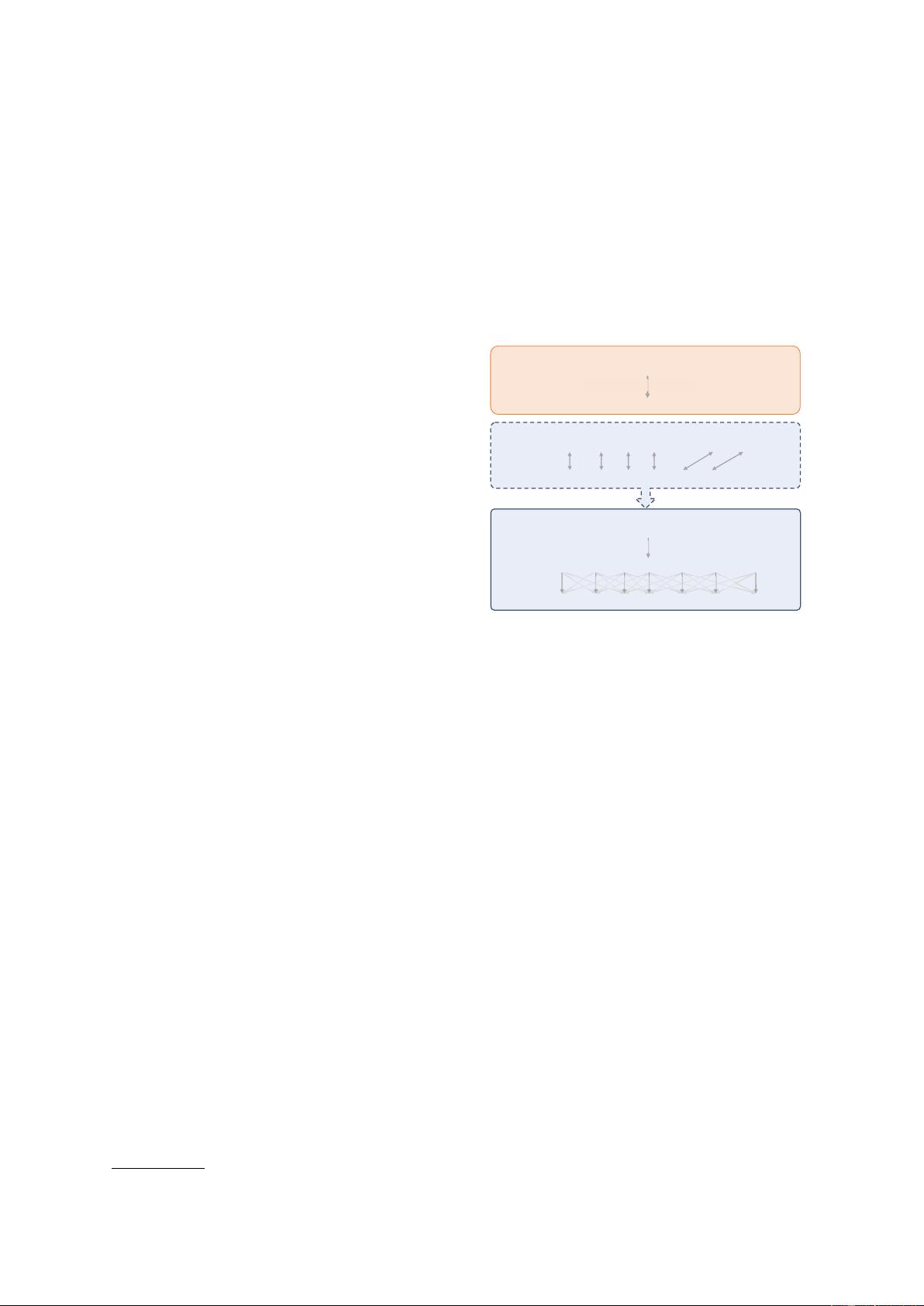

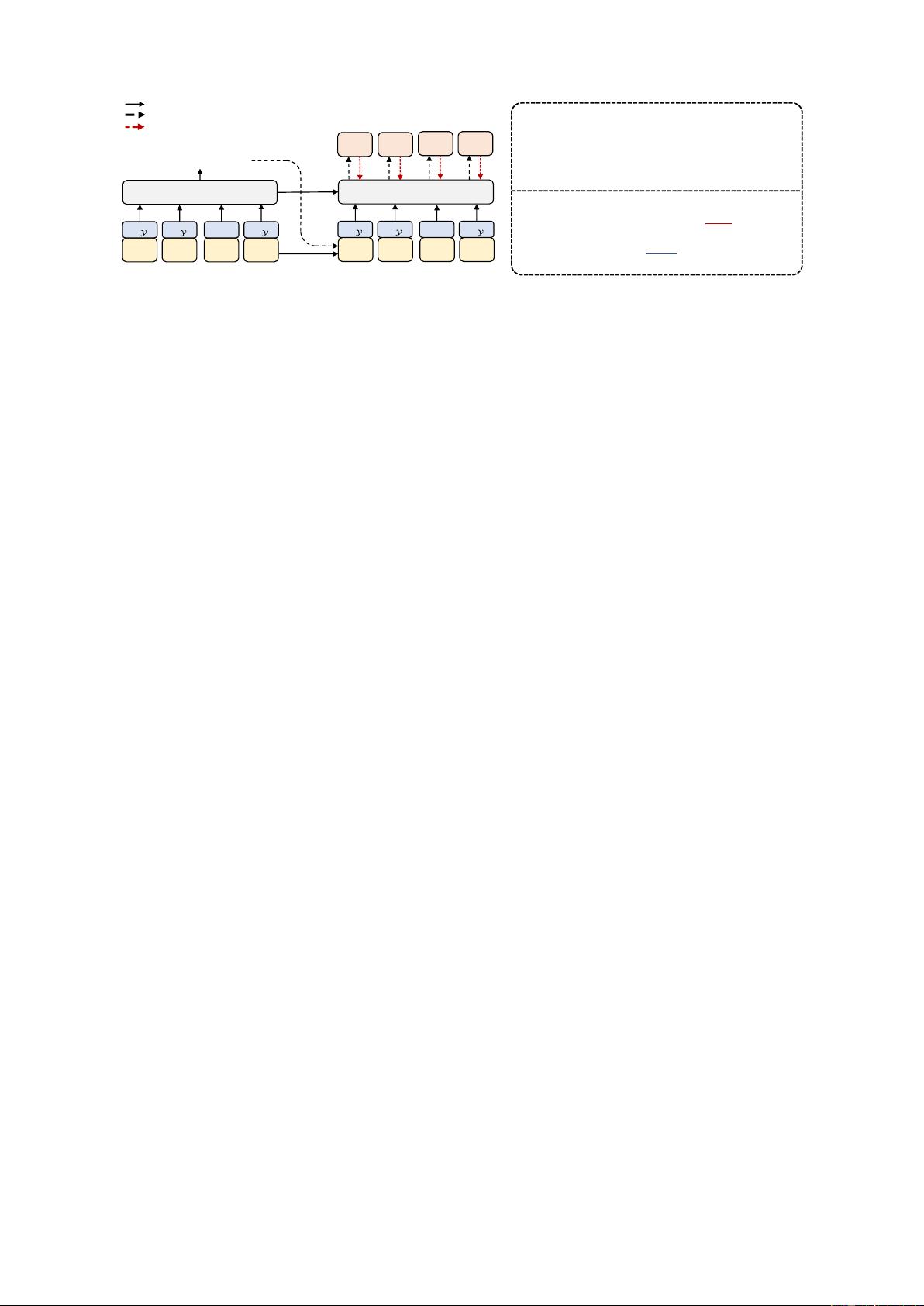

In this paper, we propose a Non-Autoregressive

generator for unsupervised Style Transfer (NAST),

which explicitly models word alignment for better

content preservation. Specifically, our generation

process is decomposed into two steps: first pre-

dicting word alignments conditioned on the source

sentence, and then generating the transferred sen-

tence with a non-autoregressive (NAR) decoder.

Modeling word alignments directly suppresses the

generation of irrelevant words, and the NAR de-

coder exploits the word-level transfer. NAST can

be used to replace the autoregressive generators

of existing cycle-loss-based models. In the exper-

iments, we integrate NAST into two base mod-

els: StyTrans (Dai et al., 2019) and LatentSeq (He

et al., 2020). Results on two benchmark datasets

show that NAST steadily improves the overall per-

formance. Compared with autoregressive models,

NAST greatly accelerates training and inference

and provides better optimization of the cycle loss.

Moreover, we observe that NAST learns explain-

able word alignments. Our contributions are:

• We propose NAST, a Non-Autoregressive generator

for unsupervised text Style Transfer. By

explicitly modeling word alignments, NAST sup-

presses irrelevant words and improves content

preservation for the cycle-loss-based models. To

the best of our knowledge, we are the first to

introduce a non-autoregressive generator to an

unsupervised generation task.

• Experiments show that incorporating NAST in

cycle-loss-based models significantly improves

the overall performance and the speed of training

and inference. In further analysis, we find that

NAST provides better optimization of the cycle

loss and learns explainable word alignments.
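The two-step generation described above (first predict word alignments, then decode all target words in parallel) can be illustrated with a deliberately small sketch. Everything in it is an assumption made for illustration: the names `TOY_LEXICON`, `predict_alignment`, `transfer_word`, and `nar_generate` are hypothetical, the alignment is a trivial position-to-position mapping, and the lookup table stands in for a learned NAR decoder. It is not the NAST model itself, which generates every transferred word rather than copying it.

```python
from typing import Dict, List

# Hypothetical toy lexicon standing in for a learned word-level transfer model.
TOY_LEXICON: Dict[str, str] = {"tasty": "awful", "terrible": "perfect"}


def predict_alignment(source: List[str]) -> List[int]:
    """Step 1 (toy): align target position i with source position i."""
    return list(range(len(source)))


def transfer_word(word: str) -> str:
    """Toy word-level transfer: swap style words, keep all other words."""
    return TOY_LEXICON.get(word, word)


def nar_generate(source: List[str]) -> List[str]:
    """Step 2 (toy): predict each target word from its aligned source word."""
    alignment = predict_alignment(source)
    # No target word depends on another target word, so every position
    # could be computed in parallel.
    return [transfer_word(source[j]) for j in alignment]


print(nar_generate(["the", "food", "is", "tasty"]))
```

Because each output position reads only its aligned source word, a word such as "tasty" can only be mapped to its own counterpart, which mirrors the word-level control the text argues for.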

2 Related Work

Unsupervised Text Style Transfer

We categorize style transfer models into three

types. The first type (Shen et al., 2017; Zhao et al.,

2018; Yang et al., 2018; John et al., 2019) disen-

tangles the style and content representations, and

then combines the content representations with the

target style to generate the transferred sentence.

However, the disentangled representations are limited

in capacity and thus scale poorly to long

sentences (Dai et al., 2019). The second type is

the editing-based method (Li et al., 2018; Wu et al.,

2019a,b), which edits the source sentence with sev-

eral discrete operations. The operations are usually

trained separately and then constitute a pipeline.

These methods are highly explainable, but they

usually need to locate and replace the stylistic words,

which is hard to apply to complex tasks that require

changes in sentence structures. Although our two-

step generation seems similar to a pipeline, NAST

is trained in an end-to-end fashion with the cycle

loss. All transferred words in NAST are gener-

ated, not copied, which is essentially different from

these methods. The third type is based on the cycle

loss. Zhang et al. (2018) and Lample et al. (2019)

introduce the back translation method into style

transfer, where the model is directly trained with

the cycle loss after a proper initialization. Subsequent

works (Dai et al., 2019; Luo et al., 2019;

He et al., 2020; Yi et al., 2020) further adopt a style

loss to improve the style control.
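As a rough illustration of the two objectives mentioned for the third type, one common way to write a cycle loss and a style loss is given below. The symbols are assumptions introduced only for this sketch and may differ from the formal definitions used by the cited models: $x$ is a sentence with style $s$, $s'$ is the target style, $G$ is the generator, and $p_C$ is a hypothetical style classifier.

$$
\hat{y} = G(x, s'), \qquad
\mathcal{L}_{\mathrm{cyc}} = \mathbb{E}_{x}\left[-\log p_G(x \mid \hat{y}, s)\right], \qquad
\mathcal{L}_{\mathrm{style}} = \mathbb{E}_{x}\left[-\log p_C(s' \mid \hat{y})\right]
$$

The cycle term rewards the model for reconstructing $x$ from its own transferred output, while the style term rewards outputs that are recognized as having the target style.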

A recent study (Zhou et al., 2020) explores the

word-level information for style transfer, which is

related to our motivation. However, they focus on

word-level style relevance in designing novel objec-

tives, while we focus on modeling word alignments

and the non-autoregressive architecture.

Non-Autoregressive Generation

Non-AutoRegressive (NAR) generation was first

introduced in machine translation for parallel decoding

with low latency (Gu et al., 2018). The NAR generator

assumes that each token is generated independently

of the others, conditioned on

the input sentence, which sacrifices the generation

quality in exchange for the inference speed.
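The independence assumption can be made concrete by comparing the two factorizations. The notation is an assumption for illustration: $x$ is the input sentence, $y = (y_1, \dots, y_T)$ is the output, and target-length prediction and any latent variables are left out for simplicity.

$$
p_{\mathrm{AR}}(y \mid x) = \prod_{t=1}^{T} p(y_t \mid y_{<t}, x),
\qquad
p_{\mathrm{NAR}}(y \mid x) = \prod_{t=1}^{T} p(y_t \mid x)
$$

In the autoregressive form each factor depends on previously generated tokens, so decoding is sequential. In the NAR form no factor depends on another output token, so all $T$ positions can be predicted in parallel, at the cost of ignoring dependencies among output tokens.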

Most works on NAR generation focus on im-

proving the generation quality while preserving the

speed acceleration in machine translation. Gu et al.

(2018) find the decoder input is critical to the gener-
