Shiva Jahangiri, Michael J. Carey, and Johann-Christoph Freytag

been implemented in the Apache AsterixDB system and evaluated

on dierent storage types, including HDD, SSD, and Amazon EBS.

The remainder of the paper is organized as follows: Section 2

provides background information on Apache AsterixDB and the

workow of the HHJ and Dynamic HHJ operators. Section 3 dis-

cusses previous work related to this study. In Section 4, we discuss

the lower bound on and the suggested default number of partitions

to use in practice. Section 5 introduces and evaluates dierent parti-

tion insertion algorithms. In Section 6, two policies for the growth

of spilled partitions are discussed and evaluated. Section 7 discusses

and evaluates various destaging partition selection policies. In Sec-

tion 8, some optimization techniques in AsterixDB are discussed

before Section 9 concludes the paper.

2 BACKGROUND

2.1 Hybrid Hash Join

Like other hash-based join algorithms, HHJ uses hashing to stage

large inputs to reduce record comparisons during the join. HHJ has

been shown to outperform other join types in computing equijoins

of two datasets. It was designed as a hybrid version of the Grace

Hash Join and Simple Hash Join algorithms [

19

,

50

]. All three men-

tioned hash join algorithms consist of two phases, namely "build"

and "probe". During the build phase, they partition the smaller input,

which we refer to as "build input", into disjoint subsets. Similarly,

the probe phase divides the larger input, which we refer to as "probe

input", into the same number of partitions as the build input. While

all three algorithms share a similar high-level design, they dier in

their details, making each of them suitable for a specic scenario.

Grace Hash Join partitions the build and probe inputs consec-

utively, writing each partition back to disk in a separate le. This

partitioning process continues for each partition until they t into

memory. A hash table is created to process the join once a parti-

tion is small enough to t in memory. Grace Hash Join performs

best when the smaller dataset is signicantly larger than the main

memory.

In Simple Hash Join, records are hashed into two partitions: a

memory-resident and a disk (spilled) partition. A portion of memory

is used for a hash table to hold the memory-resident partition’s

records. Simple Hash Join performs well when memory is large

enough to hold most of the smaller dataset. In Grace Hash Join, the

idea is to use memory to divide a large amount of data into smaller

partitions that t into memory, while Simple Hash Join focuses on

the idea of keeping some portion of data in memory to reduce the

total amount of I/O, considering that a large amount of memory

is available. Next, we discuss the details of the HHJ operator and

compare its design with its parent algorithms.

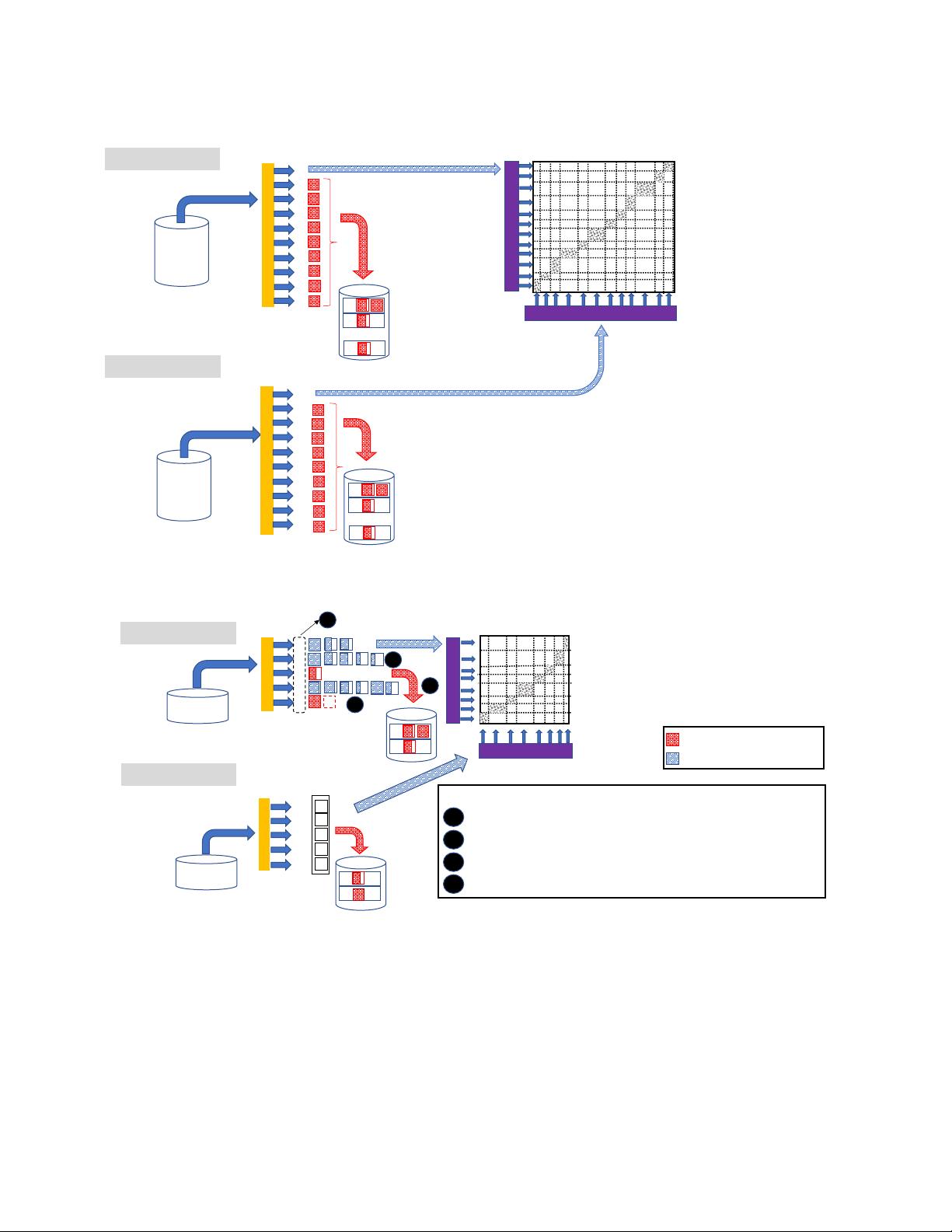

Like Grace Hash Join, HHJ uses hash partitioning to group each

input’s records into "join-able" partitions to avoid unnecessary

record comparisons. Like Simple Hash Join, HHJ uses a portion of

memory to keep one of the partitions and its hash table in memory,

while the rest write to disk. Keeping data in memory reduces the

total amount of I/O, and utilizing a hash table lowers the number

of record comparisons. The overall of Hybrid Hash Join is shown

in Figure 1.

As mentioned earlier, the HHJ operator consists of two consecu-

tive phases of build and probe. During the build phase, the records

of the smaller input are scanned and hash-partitioned based on

the values of the join attributes. We call the hash function used for

partitioning a "split function." The records mapped to the memory-

resident partition remain in memory, while the rest of the partitions

are written (frame by frame) to disk. Pointers to the records of the

memory-resident partition are inserted into a hash table at the end

of the build phase.

After the build phase ends, the probe phase starts by scanning

and hash-partitioning the records of the larger input. The same

split function used during the build phase is used for this step. The

records that map to the memory-resident partition are hashed using

the same hash function used in the build phase to probe the hash

table. All other records belong to spilled partitions and are written

(frame by frame) to that partition’s probe le on disk.

After all records of the probe input have been processed, the

pairs of spilled partitions from the build phase and probe phase are

processed as inputs to the next rounds of HHJ.

2.2 Apache AsterixDB

Apache AsterixDB [

3

,

8

,

37

] is an open-source, parallel, shared-

nothing big data management system (BDMS) built to support

the storage, indexing, modifying, analyzing, and querying of large

volumes of semi-structured data.

The unit of data that is transferred within AsterixDB, as well

as between AsterixDB and disk is called a "frame". A frame is a

xed-size and congurable set of contiguous bytes. AsterixDB uses

Dynamic HHJ, whose design and optimization is the main topic

of this paper. AsterixDB supports dierent join algorithms such

as Block Nested Loop Join, Dynamic HHJ, Broadcast Join, and

Indexed Nested Loop Join. However, Dynamic HHJ is the default

and primary join type in AsterixDB for processing equi-joins due

to its superior performance.

AsterixDB currently does not support statistics, so users may

provide hints to guide AsterixDB at execution time by selecting

an alternative type of join operator or by providing dataset size

information. For example, a user may use the Indexed Nested Loop

Join hint to request this join algorithm instead of a Dynamic HHJ.

AsterixDB follows this hint whenever possible; otherwise, it utilizes

Dynamic HHJ (by default). In addition, a hint to use a Broadcast Join

might be advantageous when the build dataset is small enough to

be sent to all nodes instead of using hash partitioning. The current

release of AsterixDB follows the join order in a query’s FROM

clause for determining the build and probe inputs. The rst input

in the FROM clause will serve as the probe relation; the rest will be

build inputs.

We chose Apache AsterixDB as our primary platform for im-

plementing and evaluating our proposed techniques for several

reasons. First, it is an open-source platform that allows us to share

our techniques and their evaluations with the community. More

importantly, AsterixDB is a parallel big data management system

for managing and processing large amounts of semi-structured

data with a declarative language. Finally, its similarity in structure

and design to other NoSQL and NewSQL database systems and

query engines makes our results and techniques applicable to other

systems as well.

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功