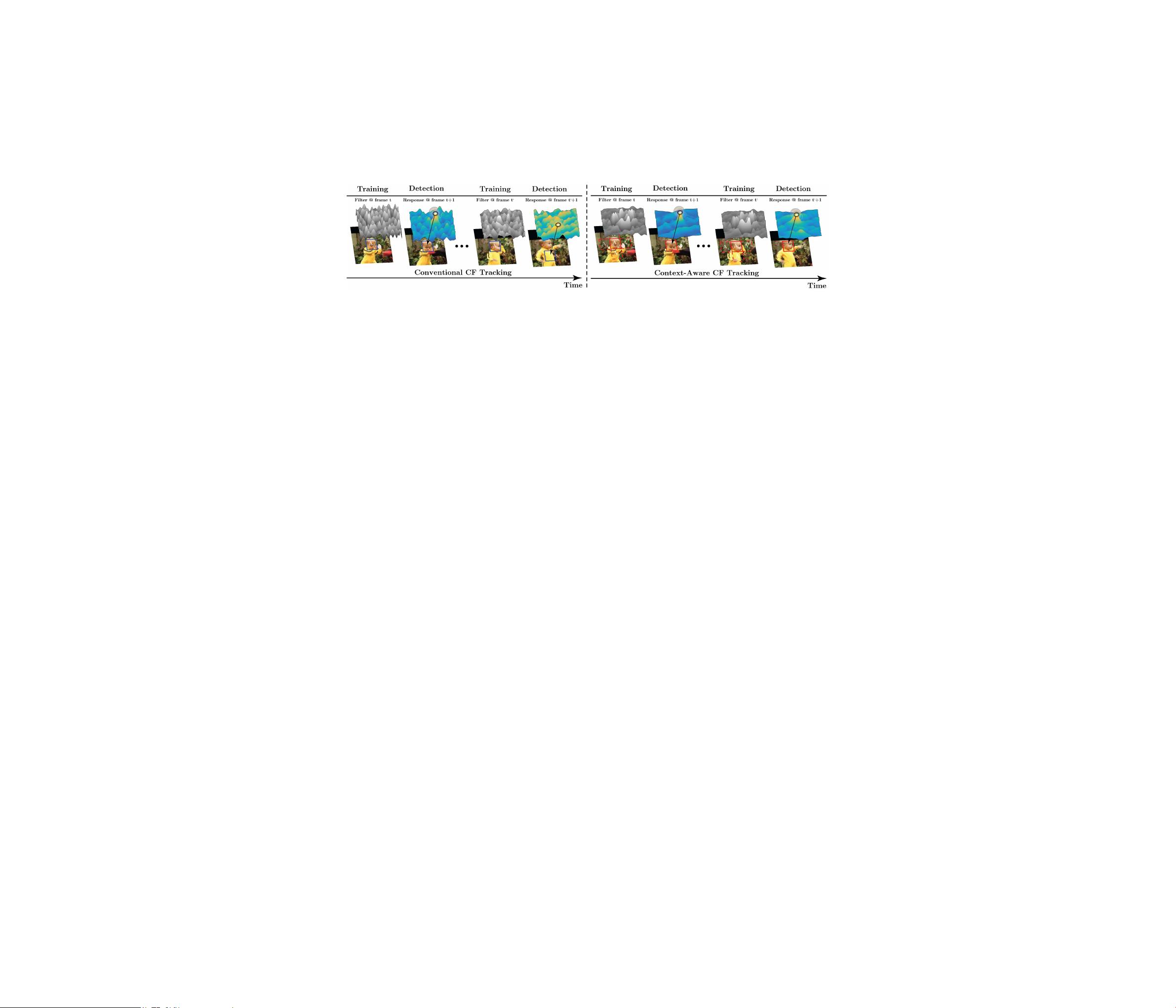

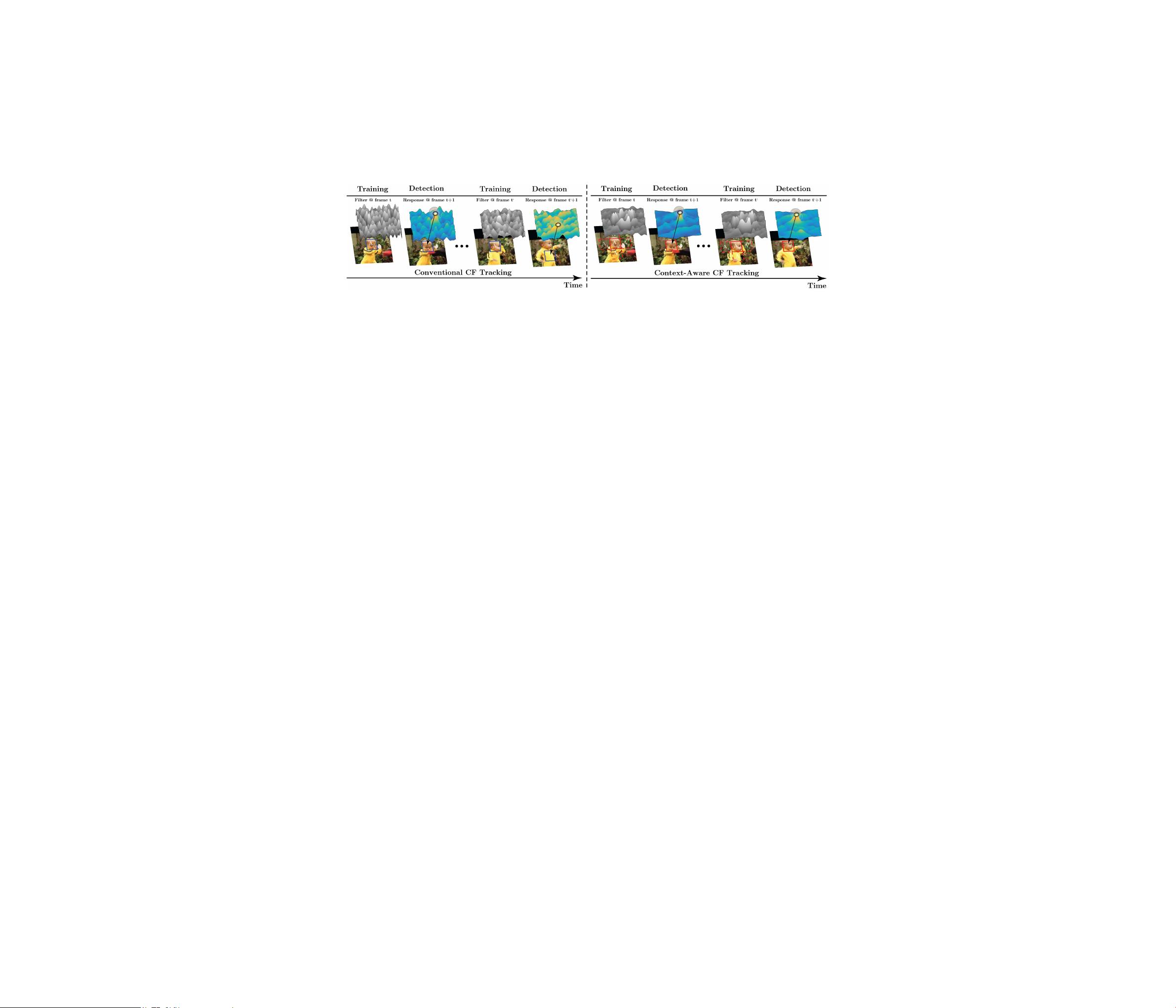

Figure 2: Comparing conventional CF tracking to our proposed context-aware CF tracking.

that there are boundary effects due to the circulant assumption. In addition, the target search region only contains a small local neighborhood to limit drift and keep computational cost low. The boundary effects are usually suppressed by a cosine window, which effectively reduces the search region even further. Therefore, CF trackers usually have very limited information about their context and easily drift in cases of fast motion, occlusion, or background clutter. To address this limitation, we propose a framework that takes global context into account and incorporates it directly into the learned filter (see Figure 2). We derive a closed-form solution for our formulation and propose it as a framework that can be easily integrated with most CF trackers to boost their performance while maintaining their high frame rate. As shown in Figure 1, integrating our framework with the mediocre tracker SAMF [18] achieves better tracking results than state-of-the-art trackers by exploiting context information. Note that it even outperforms the very recent HCFT tracker [21], whose hierarchical convolutional features implicitly contain context information. We show through extensive evaluation on several large datasets that integrating our framework improves all tested CF trackers and allows top-performing CF trackers to exceed current state-of-the-art precision and success scores on the well-known OTB-100 benchmark [27].
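
As a rough illustration of how a cosine window shrinks the usable search region, the following Python sketch builds a separable Hann window and counts the pixels that retain at least half of their weight. The 64x64 patch size, the 0.5 threshold, and the helper names are assumptions chosen for illustration; they are not taken from any particular tracker.

```python
import math


def hann_window_2d(height, width):
    """Separable 2-D cosine (Hann) window: outer product of two 1-D windows."""
    def hann_1d(n):
        if n == 1:
            return [1.0]
        return [0.5 * (1.0 - math.cos(2.0 * math.pi * i / (n - 1))) for i in range(n)]

    rows, cols = hann_1d(height), hann_1d(width)
    return [[r * c for c in cols] for r in rows]


def effective_area_fraction(window, threshold=0.5):
    """Fraction of pixels whose window weight is at least `threshold`."""
    total = sum(len(row) for row in window)
    kept = sum(1 for row in window for value in row if value >= threshold)
    return kept / total


# Assumed patch size; real trackers derive it from the target size and padding.
window = hann_window_2d(64, 64)
fraction = effective_area_fraction(window)
print(f"pixels with weight >= 0.5: {fraction:.2%}")
```

Because each 1-D factor is at most one, a pixel can only clear the threshold when both factors do, so no more than a quarter of this patch keeps half of its weight. The rest of the search region contributes only weakly to the learned filter.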

2. Related Work

CF Trackers. Since the MOSSE work of Bolme et al. [4], correlation filters (CF) have been studied as a robust and efficient approach to the problem of visual tracking. Major improvements to MOSSE include the incorporation of kernels and HOG features [10], the addition of color name features [18] or color histograms [1], integration with sparse tracking [30], adaptive scale [2, 5, 18], mitigation of boundary effects [6], and the integration of deep CNN features [21]. CF-based trackers currently rank at the top of benchmarks such as OTB-100 [27], UAV123 [23], and VOT2015 [17], while remaining computationally efficient.

CF Variations and Improvements. Much recent work has focused on extending CF trackers to address inherent limitations. For instance, Liu et al. propose part-based tracking to reduce sensitivity to partial occlusion and better preserve object structure [20]. The work of [22] performs long-term tracking that is robust to appearance variation by correlating temporal context and training an online random fern classifier for re-detection. Zhu et al. propose a collaborative CF that combines a multi-scale kernelized CF to handle scale variation with an online CUR filter to address target drift [31]. These approaches register improvements either by combining the CF with external classifiers that assist it or by taking advantage of its high computational speed to run multiple CF trackers at once.

CF Frameworks. Recent work [2, 3] has found that some of these inherent limitations can be overcome directly by modifying the conventional CF model used for training. For example, by adapting the target response (used for ridge regression in CF) as part of a new formulation, Bibi et al. significantly decrease target drift while remaining computationally efficient [3]. This method yields a closed-form solution and can be applied to many CF trackers as a framework. Similarly, this paper also proposes a framework that makes CF trackers context-aware and increases their performance beyond the improvement attainable by [3], while being less computationally expensive.
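
For readers unfamiliar with the conventional CF model mentioned above, the following Python sketch shows the standard single-channel ridge regression over all cyclic shifts of a 1-D signal, solved in closed form per frequency. It is a textbook illustration under assumed toy data (signal length, bump shapes, regularization value, and function names are all hypothetical), not the context-aware formulation proposed in this paper nor the method of [3].

```python
import cmath
import math


def dft(signal, inverse=False):
    """Naive O(n^2) discrete Fourier transform (the inverse includes the 1/n factor)."""
    n = len(signal)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = sum(signal[t] * cmath.exp(sign * 2j * math.pi * k * t / n) for t in range(n))
        out.append(acc / n if inverse else acc)
    return out


def train_filter(x, y, lam):
    """Closed-form ridge regression over all cyclic shifts of x, solved per frequency."""
    x_hat, y_hat = dft(x), dft(y)
    return [xh.conjugate() * yh / (xh.conjugate() * xh + lam)
            for xh, yh in zip(x_hat, y_hat)]


def detect(w_hat, z):
    """Response of the learned filter on a new patch z (real part of the inverse DFT)."""
    z_hat = dft(z)
    response = dft([zh * wh for zh, wh in zip(z_hat, w_hat)], inverse=True)
    return [r.real for r in response]


n = 32
# Hypothetical 1-D "target": a narrow bump centred at sample 10.
x = [math.exp(-0.5 * (t - 10) ** 2) for t in range(n)]
# Desired response: Gaussian peak at zero shift, measured with circular distance.
y = [math.exp(-min(t, n - t) ** 2 / (2 * 2.0 ** 2)) for t in range(n)]

w_hat = train_filter(x, y, lam=1e-4)
shift = 5
z = x[-shift:] + x[:-shift]  # target moved 5 samples to the right (cyclically)
response = detect(w_hat, z)
peak = max(range(n), key=lambda i: response[i])
print("estimated shift:", peak)
```

The location of the response peak gives the estimated translation of the target. The element-wise division is what keeps training cheap, and it relies on the circulant assumption that also causes the boundary effects discussed earlier.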

Context Trackers. The use of context for tracking has been explored in previous work by Dinh et al. [7], where distractors and supporters are detected and tracked using a sequential randomized forest, an online template-based appearance model, and local features. In more recent work, contextual information of a scene is exploited using multi-level clustering to detect similar objects and other potential distractors [28]. A global dynamic constraint is then learned online to discriminate these distractors from the object of interest. This approach shows improvement on a subset of cluttered scenes in OTB-100, where distractors are predominant. However, neither of these trackers generalizes well and, as a result, their overall performance on current benchmarks is only average. In contrast, our approach is more generic and can make use of varying types of contextual image regions that may or may not contain distractors. We show that context awareness enables improvement across the entire OTB-100 and is not limited to cluttered scenes, where context can lead to the most improvement.

Contributions. To the best of our knowledge, (i) this is the first context-aware formulation that can be applied as a framework to most CF trackers. Its closed-form solution al-
