based form of batching and interrupt moderation; iii) introduce an extremely simple but very effective paravirtualized extension for the e1000 devices (or other NICs), providing the same performance as virtio and similar solutions with almost no extra complexity; iv) adapt the hypervisor to our high-speed VALE [20] backend; and v) characterize the behaviour of device polling under virtualization.

Some of the mechanisms we propose help immensely, especially within packet processing machines (software routers, IDS, monitors, ...). In particular, because we provide solutions that apply only to the guest, only to the host, or to both, they remain applicable even in the presence of constraints (e.g., legacy guest software that cannot be modified, or proprietary VMMs).

In our experiments with QEMU-KVM and e1000 we reached a VM-to-VM rate of almost 5 Mpps with short packets and 25 Gbit/s with 1500-byte frames, and even higher speeds between a VM and the host. These large speed improvements have been achieved with a very small amount of code, and our approach can be easily applied to other OSes and virtualization platforms. We are pushing the relevant changes to QEMU, FreeBSD and Linux.
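To put these rates in perspective, the short sketch below converts them into per-packet time budgets and equivalent rates. It is only arithmetic on the figures quoted above; the 60-byte size used for "short packets" is our assumption (minimum Ethernet frame without CRC), and preamble and inter-frame gap are ignored.

```python
def per_packet_budget_ns(rate_pps: float) -> float:
    """Time available to handle one packet at rate_pps, in nanoseconds."""
    return 1e9 / rate_pps


def packet_rate_pps(bit_rate_bps: float, frame_bytes: int) -> float:
    """Packets per second needed to carry bit_rate_bps with frame_bytes frames."""
    return bit_rate_bps / (frame_bytes * 8)


def bit_rate_gbps(rate_pps: float, frame_bytes: int) -> float:
    """Bit rate (Gbit/s) produced by rate_pps frames of frame_bytes each."""
    return rate_pps * frame_bytes * 8 / 1e9


if __name__ == "__main__":
    short_rate = 5e6                             # ~5 Mpps VM-to-VM, short packets
    large_rate = packet_rate_pps(25e9, 1500)     # 25 Gbit/s with 1500-byte frames
    print(f"short packets: {per_packet_budget_ns(short_rate):.0f} ns/packet, "
          f"{bit_rate_gbps(short_rate, 60):.1f} Gbit/s (assuming 60-byte frames)")
    print(f"1500-byte frames: {large_rate / 1e6:.2f} Mpps, "
          f"{per_packet_budget_ns(large_rate):.0f} ns/packet")
```

At about 200 ns per short packet, the budget is far smaller than the cost of a single VM exit discussed in Section 2.1, which is why per-packet exits cannot be afforded at these rates.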

In the rest of this paper, Section 2 introduces the necessary background and terminology on virtualization and discusses related work. Section 3 describes in detail the four components of our proposal, whereas Section 4 presents experimental results and also discusses the limitations of our work.

2. BACKGROUND AND RELATED WORK

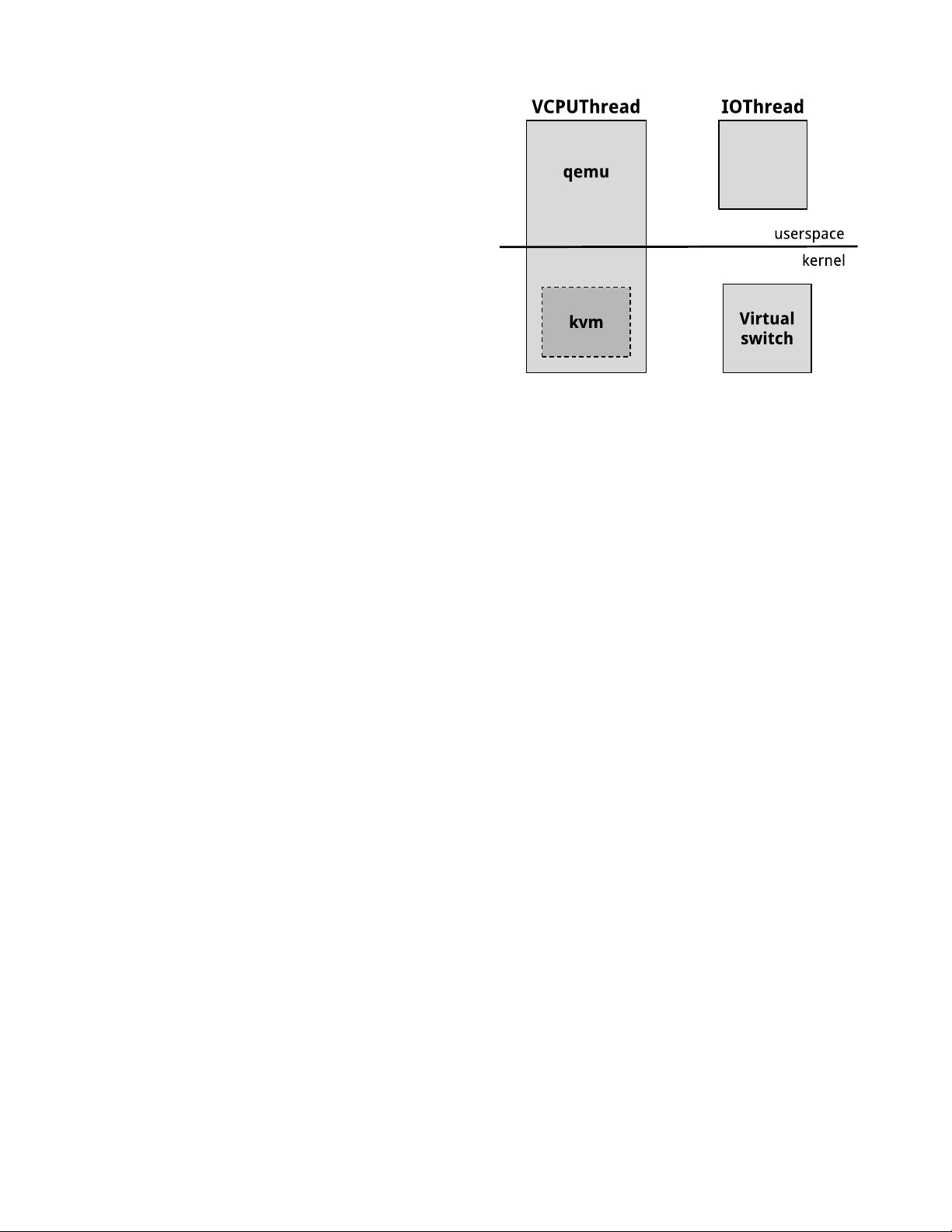

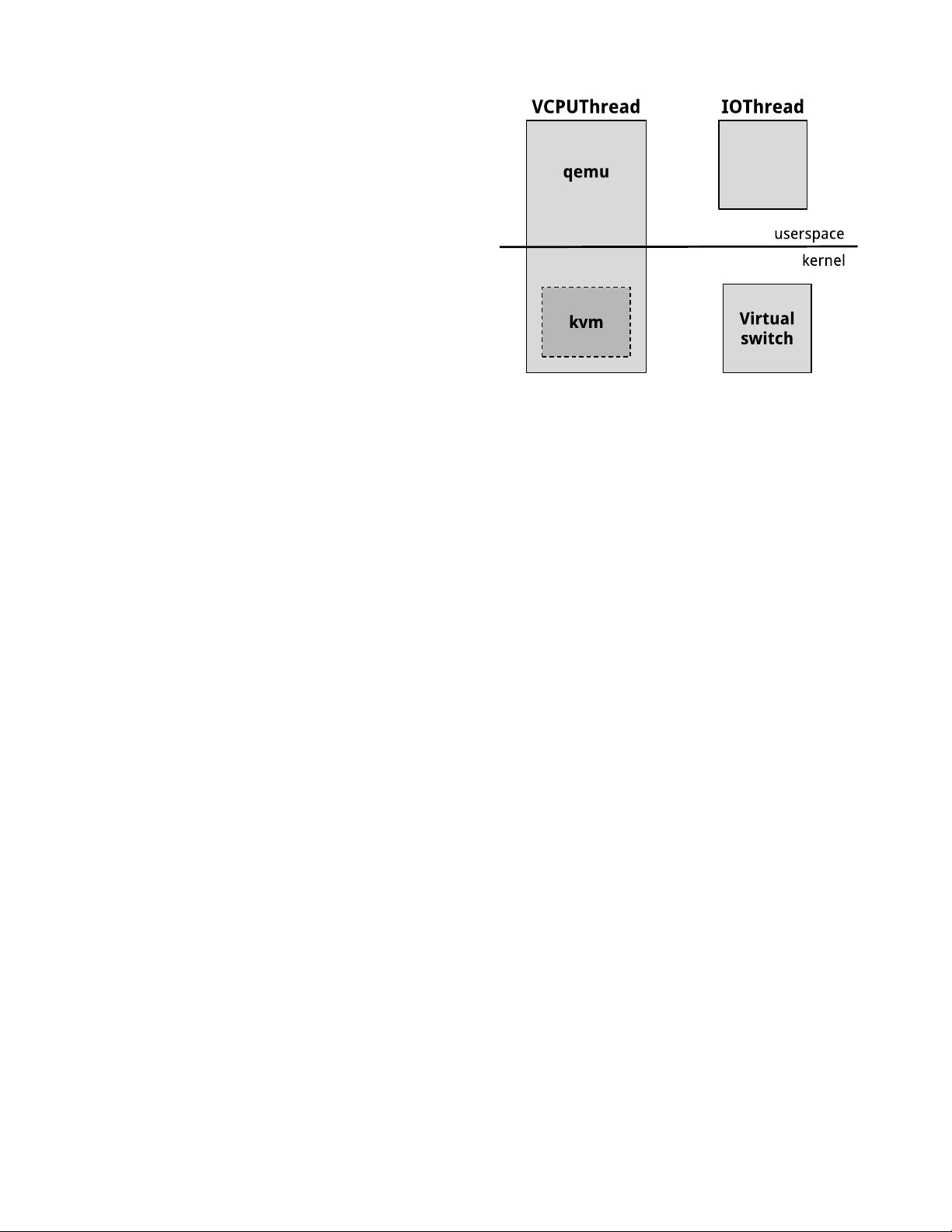

In our (rather standard) virtualization model (Figure 1), Virtual Machines (VMs) run on a Host, which manages hardware resources with the help of a component called hypervisor or Virtual Machine Monitor (VMM, for brevity). Each VM has a number of Virtual CPUs (VCPUs, typically implemented as threads in the host), and also runs additional IO threads to emulate and access peripherals. The VMM (typically implemented partly in the kernel and partly in user space) controls the execution of the VCPUs and communicates with the IO threads.
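The division of labour between VCPU threads and IO threads can be illustrated with a toy model. The sketch below is an assumption-laden illustration, not QEMU's actual structure: the function names are hypothetical, each VCPU thread posts I/O requests to a queue without waiting for them, and a single IO thread serves that queue asynchronously.

```python
import queue
import threading


def vcpu_thread(vcpu_id: int, io_requests: "queue.Queue", n_ops: int) -> None:
    """Hypothetical VCPU: runs 'guest code' and posts I/O work instead of doing it inline."""
    for op in range(n_ops):
        # ... guest computation would run here ...
        io_requests.put((vcpu_id, op))   # hand the I/O request to the IO thread
    # The VCPU never waits for completion, mirroring asynchronous operation.


def io_thread(io_requests: "queue.Queue", completed: list) -> None:
    """Hypothetical IO thread: performs the peripheral side of each request."""
    while True:
        req = io_requests.get()
        if req is None:                  # sentinel: no more requests
            break
        completed.append(req)            # stand-in for emulating/forwarding the access


def run_vm(n_vcpus: int = 2, n_ops: int = 3) -> list:
    """Start one IO thread and n_vcpus VCPU threads; return the served requests."""
    requests: "queue.Queue" = queue.Queue()
    completed: list = []
    io = threading.Thread(target=io_thread, args=(requests, completed))
    io.start()
    vcpus = [threading.Thread(target=vcpu_thread, args=(i, requests, n_ops))
             for i in range(n_vcpus)]
    for t in vcpus:
        t.start()
    for t in vcpus:
        t.join()
    requests.put(None)                   # all VCPUs finished: stop the IO thread
    io.join()
    return completed
```

The sentinel is queued only after every VCPU thread has joined, so the FIFO queue guarantees that all requests are served before the IO thread exits.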

The way virtual CPUs are emulated depends on the features of the emulated CPU and of the host. The x86 architecture does not lend itself to the trap-and-emulate implementation of virtualization [1], so historical VMMs (VMware, QEMU) relied for the most part on binary translation for “safe” instructions, and on calls to emulation code for the others. A recent paper [5], long but very instructive, shows how the x86 architecture was virtualized without CPU support. The evolution of these techniques is documented in [1]. A slowdown of 2 to 10 times can be expected for typical code sequences, slightly lower if kernel support is available to intercept memory accesses to invalid locations.

Figure 1: In our virtualized execution environment a virtual machine uses one VCPU thread per CPU, and one or more IO threads to support asynchronous operation. The hypervisor (VMM) has one component that runs in userspace (QEMU) and one kernel module (kvm). The virtual switch also runs within the kernel.

Modern CPUs provide hardware support for virtualization (Intel VT-x, AMD-V) [13, 3], so that most of the code for the guest OS runs directly on the host CPU operating in “VM” mode. In practice, the kernel side of a VMM enters VM mode through a system call (typically an ioctl(.. VMSTART ..)), which starts executing the guest code within the VCPU thread, and returns to host mode as described below.
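The resulting control flow of a VCPU thread is a loop: enter VM mode, run the guest until something forces an exit, handle the exit in host mode, and re-enter. In KVM, for instance, the entry point is the KVM_RUN ioctl. The sketch below only simulates this loop; the guest is a Python generator whose every yield stands for one VM exit, and the exit reasons and handler table are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterator, List


@dataclass
class VmExit:
    reason: str      # hypothetical exit reasons: "io", "mmio", "halt"
    port: int = 0    # I/O port or MMIO address that was accessed
    value: int = 0   # value written by the guest


def fake_guest() -> Iterator[VmExit]:
    """Stands in for guest code running in VM mode; each yield is a VM exit."""
    yield VmExit("io", port=0x10, value=1)
    yield VmExit("mmio", port=0x2000, value=42)
    yield VmExit("halt")


def vcpu_loop(guest: Iterator[VmExit],
              handlers: Dict[str, Callable[[VmExit], None]]) -> int:
    """Enter the guest, handle each exit in host mode, re-enter; return #exits."""
    exits = 0
    for ex in guest:                  # "enter VM mode" and run until the next exit
        exits += 1
        if ex.reason == "halt":
            break
        handlers[ex.reason](ex)       # emulate the access in host mode
    return exits


def demo() -> List[str]:
    """Run the fake guest with logging handlers and return the log."""
    log: List[str] = []
    handlers = {
        "io": lambda e: log.append(f"io {e.port:#x}={e.value}"),
        "mmio": lambda e: log.append(f"mmio {e.port:#x}={e.value}"),
    }
    n = vcpu_loop(fake_guest(), handlers)
    log.append(f"exits={n}")
    return log
```

Every iteration of the loop corresponds to one exit/enter round trip, which is the cost that the batching and moderation mechanisms of this paper try to amortize.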

2.1 Device emulation

Emulation of I/O devices [25] generally interprets accesses to I/O registers and replicates the behaviour of the corresponding hardware. The VMM component reproducing the emulated device is called the frontend. Data from/to the frontend are in turn passed to a component called the backend, which communicates with a physical device of the same type: a network interface or switch port, a disk device, a USB port, etc.
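The frontend/backend split can be sketched as two small classes. This is a toy model for an imaginary NIC, not the e1000 register layout: the TX_TAIL and STATUS registers, their offsets and their semantics are hypothetical, and the backend merely records frames instead of writing them to a real port.

```python
from typing import List, Protocol


class Backend(Protocol):
    """Anything that can deliver a frame to a (real or virtual) port."""
    def send(self, frame: bytes) -> None: ...


class CaptureBackend:
    """Hypothetical backend: records frames instead of writing to a real port."""
    def __init__(self) -> None:
        self.sent: List[bytes] = []

    def send(self, frame: bytes) -> None:
        self.sent.append(frame)


class NicFrontend:
    """Toy frontend for an imaginary NIC with two registers.

    TX_TAIL (hypothetical offset 0x0): writing N tells the device that the
        first N queued frames are ready to transmit.
    STATUS  (hypothetical offset 0x4): read-only count of frames sent.
    """
    TX_TAIL = 0x0
    STATUS = 0x4

    def __init__(self, backend: Backend) -> None:
        self.backend = backend
        self.tx_ring: List[bytes] = []    # frames the guest driver has queued
        self.sent_count = 0

    def guest_queue(self, frame: bytes) -> None:
        # The guest writes a descriptor in shared memory: no register access.
        self.tx_ring.append(frame)

    def reg_write(self, offset: int, value: int) -> None:
        # Each register access would cost a VM exit in a real VMM.
        if offset == self.TX_TAIL:
            # Replicate the hardware's action: hand the ready frames to the backend.
            ready, self.tx_ring = self.tx_ring[:value], self.tx_ring[value:]
            for frame in ready:
                self.backend.send(frame)
            self.sent_count += len(ready)

    def reg_read(self, offset: int) -> int:
        return self.sent_count if offset == self.STATUS else 0
```

Note that a single register write can flush several queued frames: the more frames the guest queues before touching the register, the fewer exits are paid per frame.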

Access to peripherals from the guest OS, in the form of IO or MMIO instructions, causes a context switch (“VM exit”) that returns the CPU to “host” mode. VM exits often also occur when delivering interrupts to a VM. On modern hardware, the cost of a VM exit/VM enter pair plus IO emulation is 3 to 10 µs, compared to 100 to 200 ns for IO instructions on bare metal.
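A simple cost model shows why these exit costs dominate packet I/O and why sharing one exit among many packets helps. The sketch assumes that one exit is paid per batch of packets and that each packet also needs a fixed amount of other work; the 100 ns per-packet figure is an illustrative assumption, while the 3 to 10 µs exit range is the one quoted above.

```python
def per_packet_cost_ns(exit_cost_ns: float, batch: int, work_ns: float) -> float:
    """Average cost per packet when one VM exit is shared by `batch` packets."""
    if batch < 1:
        raise ValueError("batch must be >= 1")
    return exit_cost_ns / batch + work_ns


def max_rate_mpps(cost_ns: float) -> float:
    """Upper bound on the packet rate of one thread spending cost_ns per packet."""
    return 1e3 / cost_ns       # (1e9 ns/s / cost_ns) packets/s, expressed in Mpps


if __name__ == "__main__":
    WORK_NS = 100.0                          # assumed per-packet processing cost
    for exit_ns in (3_000.0, 10_000.0):      # exit/enter range quoted in the text
        for batch in (1, 32, 256):
            c = per_packet_cost_ns(exit_ns, batch, WORK_NS)
            print(f"exit={exit_ns / 1e3:.0f}us batch={batch:3d}: "
                  f"{c:7.1f} ns/pkt, <= {max_rate_mpps(c):.2f} Mpps")
```

With one exit per packet the rate is capped at a few hundred thousand packets per second; only when the exit is amortized over tens of packets does the per-packet cost approach the few hundred nanoseconds compatible with Mpps rates.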

The detour into host mode is used by the VCPU thread to interact with the frontend to emulate the actions that the real peripheral would perform on that
