average relative error for random queries. However, some of

these methods take milliseconds to answer queries [15, 38,

30], which is about three orders of magnitude slower than

other methods. Some other methods answer queries in mi-

croseconds [29, 40], but it is reported that precision of these

methods for close pairs of vertices is not high [30, 4]. This

drawback might be critical for applications such as socially-

sensitive search or context-aware search since, in these ap-

plications, distance queries are employed to distinguish close

items.

1.1 Our Contributions

To address these issues, in this paper, we present a new

method for answering distance queries in complex networks.

The proposed method is an exact method. That is, it always

answers exactly correct distance to queries. It has much bet-

ter scalability than previous exact methods and can handle

graphs with hundreds of millions of edges. Nevertheless,

the query time is very small and around ten microseconds.

Though our method can handle directed and/or weighted

graphs as we mention later, in the following, we assume

undirected, unweighted graphs for simplicity of exposition.

Our method is based on the notion of distance labeling

or distance-aware 2-hop cover. The idea of 2-hop cover is

as follows. For each vertex u, we pick up a set C(u) of

candidate vertices so that every pair of vertices (u, v) has

at least one vertex w ∈ C(u) ∩ C(v) on a shortest path

between the pair. For each vertex u and a vertex w ∈ C(u),

we precompute the distance d

G

(u, w) between them. We

say that the set L(u) = {(w, d

G

(u, w))}

w∈C(u)

is the label

of u. Using labels, it is clear that the distance d

G

(u, v)

between two vertices u and v can be computed as min{δ+δ

0

|

(w, δ) ∈ L(u), (w, δ

0

) ∈ L(v)}. The family of labels {L(u)}

is called a 2-hop cover. Distance labeling is also commonly

used in previous exact methods [13,12,2,17], but we propose

a totally new and different approach to compute the labels,

referred to as the pruned landmark labeling.

The idea of our method is simple and rather radical: from

every vertex, we conduct a breadth-first search and add the

distance information to labels of visited vertices. Of course,

if we naively implement this idea, we need O(nm) prepro-

cessing time and O(n

2

) space to store the index, which is

unacceptable. Here, n is the number of vertices and m is the

number of edges. Our key idea to make this method practi-

cal is pruning during the breadth-first searches. Let S be a

set of vertices and suppose that we already have labels that

can answer correct distance between two vertices if a shortest

path between them passes through a vertex in S. Suppose

we are conducting a BFS from v and visiting u. If there is

a vertex w ∈ S such that d

G

(v, u) = d

G

(v, w) + d

G

(w, u),

then we prune u. That is, we do not traverse any edges from

u. As we prove in Section 4.3, after this pruned BFS from

v, the labels can answer the distance between two vertices

if a shortest path between them passes through a vertex in

S ∪ {v}.

Interestingly, our method combines the advantages of three

different previous successful approaches: landmark-based

approximate methods [29, 38, 30], tree-decomposition-based

exact methods [41,4], and labeling-based exact methods [13,

12, 2]. Landmark-based approximate methods achieve re-

markable precision by leveraging the existence of highly cen-

tral vertices in complex networks [29]. This fact is also

the main reason of the power of our pruning: by conduct-

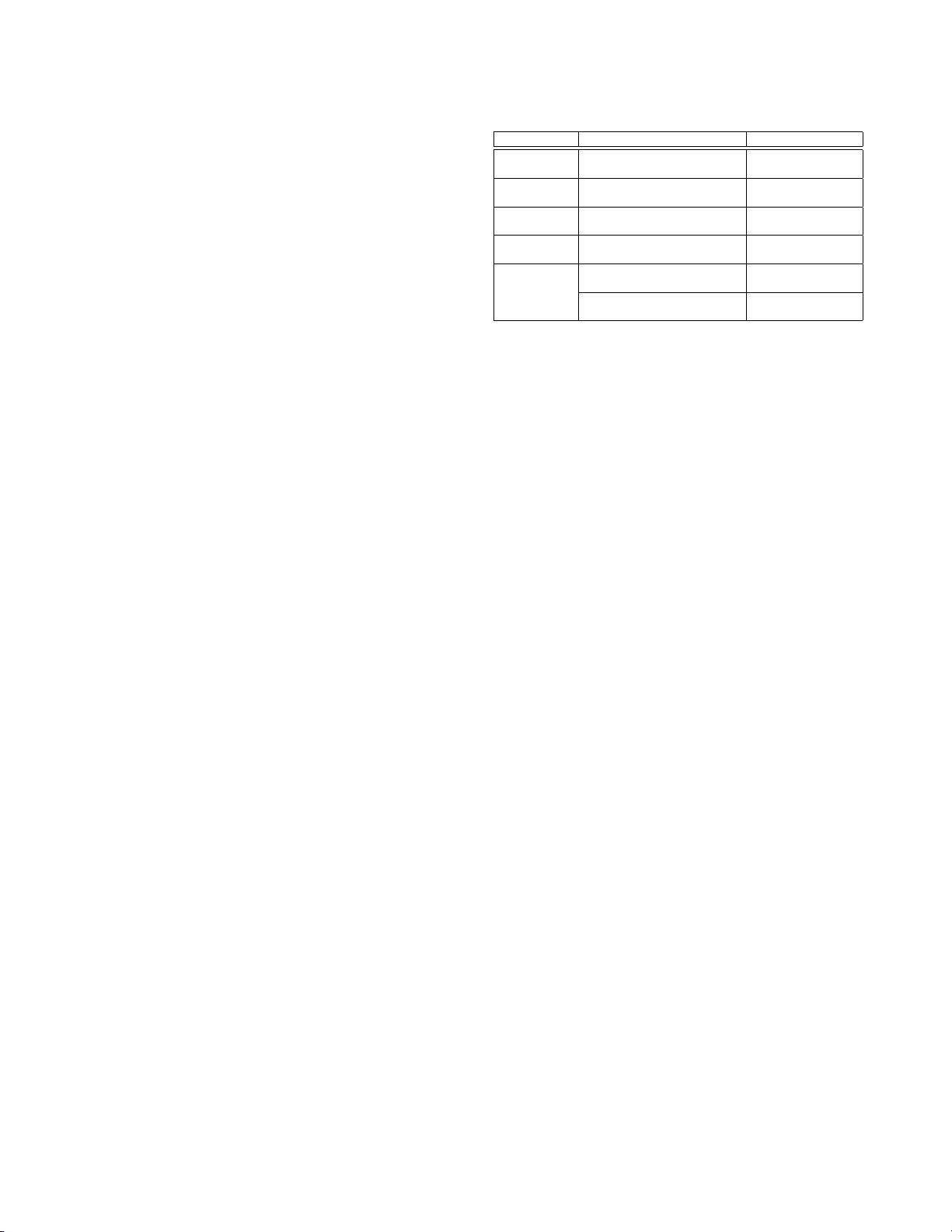

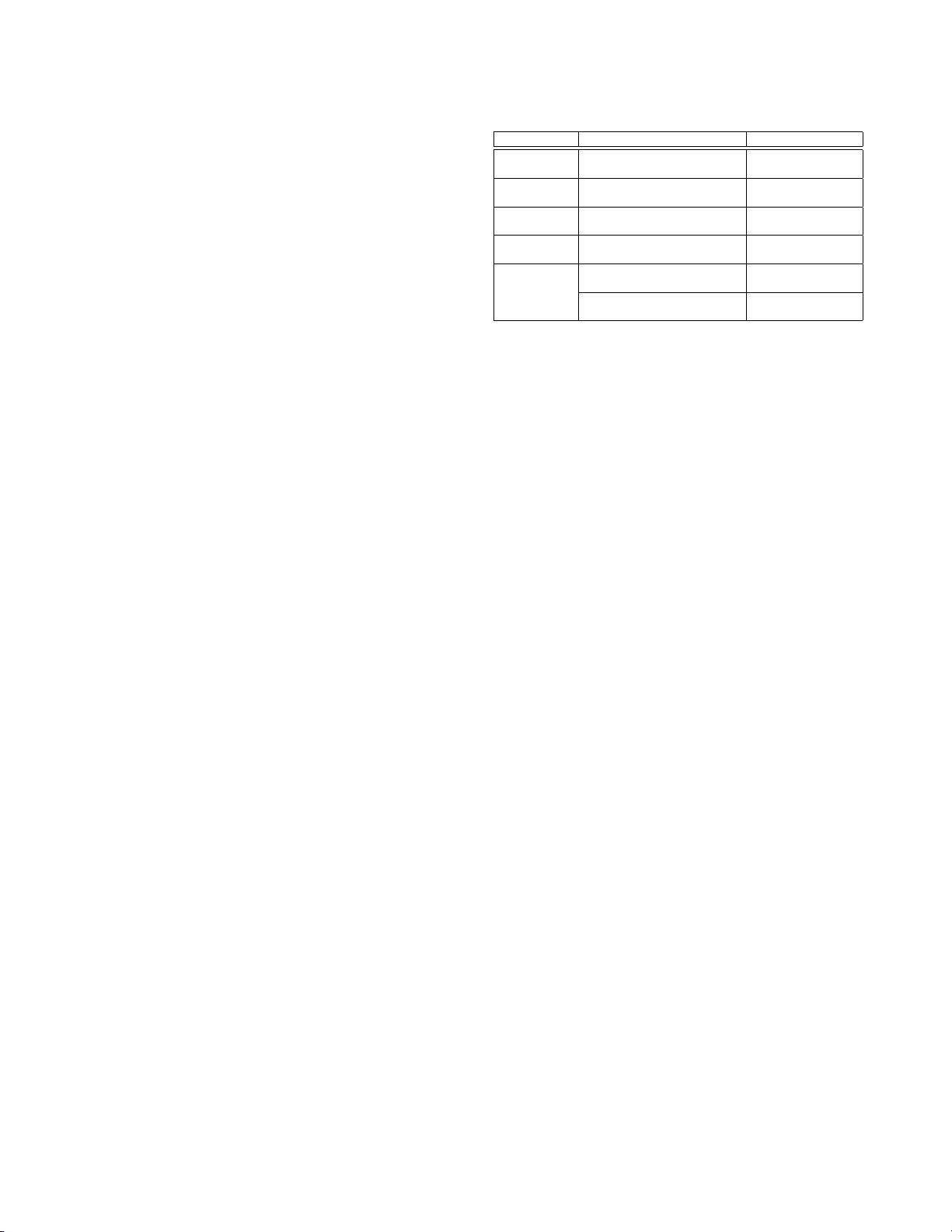

Table 1: Summary of experimental results of previous meth-

ods and the proposed method for exact distance queries.

Method Network |V | |E| Indexing Query

TEDI Computer 22 K 46 K 17 s 4.2 µs

[41] Social 0.6 M 0.6 M 2,226 s 55.0 µs

HCL Social 7.1 K 0.1 M 1,003 s 28.2 µs

[17] Citation 0.7 M 0.3 M 253,104 s 0.2 µs

TD Social 0.3 M 0.4 M 9 s 0.5 µs

[4] Social 2.4 M 4.7 M 2,473 s 0.8 µs

HHL Computer 0.2 M 1.2 M 7,399 s 3.1 µs

[2] Social 0.3 M 1.9 M 19,488 s 6.9 µs

Web 0.3 M 1.5 M 4 s 0.5 µs

PLL Social 2.4 M 4.7 M 61 s 0.6 µs

(this work) Social 1.1 M 114 M 15,164 s 15.6 µs

Web 7.4 M 194 M 6,068 s 4.1 µs

ing breadth-first searches from these central vertices first,

later we can drastically prune breadth-first searches. Tree-

decomposition-based metho ds exploit the core–fringe struc-

ture of networks [10, 27] by decomposing tree-like fringes

of low tree-width. Though our method do es not explicitly

use tree decompositions, we prove that our method can effi-

ciently process graphs of small tree-width. This process indi-

cates that our method also exploits the core–fringe structure.

As with other labeling-based methods, the data structure of

our index is simple and query processing is very quick be-

cause of the locality of memory access.

Though this pruned landmark labeling scheme is already

powerful by itself, we propose another labeling scheme with

a different kind of strength and combine them to further

improve the performance. That is, we show that labeling

by breadth-first search can be implemented in a bit-parallel

way, which exploits the property that the number of bits

b in a register word is typically 32 or 64 and we can per-

form bit manipulations on these b bits simultaneously. By

this technique, we can perform BFSs from b + 1 vertices

simultaneously in O(m) time. In the beginning, this bit-

parallel labeling (without pruning) works better than the

pruned landmark labeling since pruning does not happen

much. Note that we are not talking about thread-level par-

allelism, and our bit-parallelism actually decreases the com-

putational complexity by the factor of b + 1. We can also

use thread-level parallelism in addition to these two labeling

schemes.

As we confirm in our experimental results, our metho d

outperforms other state-of-the-art methods for exact dis-

tance queries. In particular, it has significantly better scal-

ability than previous methods. It took only tens of seconds

for indexing networks with millions of edges. This indexing

time is two orders of magnitude faster than previous meth-

ods, which took at least thousands of seconds or even more

than one day. Moreover, our method successfully handled

networks with hundreds of millions of edges, which is again

two orders of magnitude larger than networks that have been

previously used in experiments of exact methods. The query

time is also better than previous methods for networks with

the same size, and we confirmed that the query time does

not increase rapidly against sizes of networks. We also con-

firm the size of an index of our method is comparable to

other methods.

In Table 1, we summarize our experimental results and

those of previous exact methods presented in these papers.

We listed the results for the largest two real-world complex

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功