This article has been accepted for inclusion in a future issue of this journal. Content is final as presented, with the exception of pagination.

IEEE TRANSACTIONS ON CYBERNETICS 1

Enhanced Computer Vision with Microsoft Kinect Sensor: A Review

Jungong Han, Member, IEEE, Ling Shao, Senior Member, IEEE, Dong Xu, Member, IEEE, and Jamie Shotton, Member, IEEE

Abstract—With the invention of the low-cost Microsoft Kinect sensor, high-resolution depth and visual (RGB) sensing has become available for widespread use. The complementary nature of the depth and visual information provided by the Kinect sensor opens up new opportunities to solve fundamental problems in computer vision. This paper presents a comprehensive review of recent Kinect-based computer vision algorithms and applications. The reviewed approaches are classified according to the type of vision problems that can be addressed or enhanced by means of the Kinect sensor. The covered topics include preprocessing, object tracking and recognition, human activity analysis, hand gesture analysis, and indoor 3-D mapping. For each category of methods, we outline their main algorithmic contributions and summarize their advantages/differences compared to their RGB counterparts. Finally, we give an overview of the challenges in this field and future research trends. This paper is expected to serve as a tutorial and source of references for Kinect-based computer vision researchers.

Index Terms—Computer vision, depth image, information fusion, Kinect sensor.

I. Introduction

Kinect is an RGB-D sensor that provides synchronized color and depth images. It was initially used as an input device by Microsoft for the Xbox game console [1]. With a 3-D human motion capturing algorithm, it enables interactions between users and a game without the need to touch a controller. Recently, the computer vision community discovered that the depth sensing technology of Kinect could be extended far beyond gaming, and at a much lower cost than traditional 3-D cameras (such as stereo cameras [2] and time-of-flight (TOF) cameras [3]). Additionally, the complementary nature of the depth and visual (RGB) information provided by Kinect bootstraps potential new solutions for classical problems in computer vision. Within just two years of Kinect's release, a large number of scientific papers and technical demonstrations have already appeared in diverse vision conferences and journals.

Manuscript received November 12, 2012; revised April 4, 2013; accepted May 13, 2013. This work was supported by the Multiplatform Game Innovation Centre (MAGIC), Nanyang Technological University, Singapore. Recommended by Associate Editor D. Goldgof. (Corresponding author: L. Shao.)

J. Han is with Civolution Technology, Eindhoven 5656AE, The Netherlands (e-mail: jungonghan77@gmail.com).

L. Shao is with the Department of Electronic and Electrical Engineering, University of Sheffield, Sheffield, South Yorkshire, S1 3JD, U.K. (e-mail: ling.shao@sheffield.ac.uk).

D. Xu is with the School of Computer Engineering, Nanyang Technological University, 639798, Singapore (e-mail: DongXu@ntu.edu.sg).

J. Shotton is with Microsoft Research, Cambridge CB1 2FB, U.K. (e-mail: jamiesho@microsoft.com).

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TCYB.2013.2265378

In this paper, we review the recent developments of Kinect

technologies from the perspective of computer vision. The

criteria for topic selection are that the new algorithms are

far beyond the algorithmic modules provided by Kinect de-

velopment tools, and meanwhile, these topics are relatively

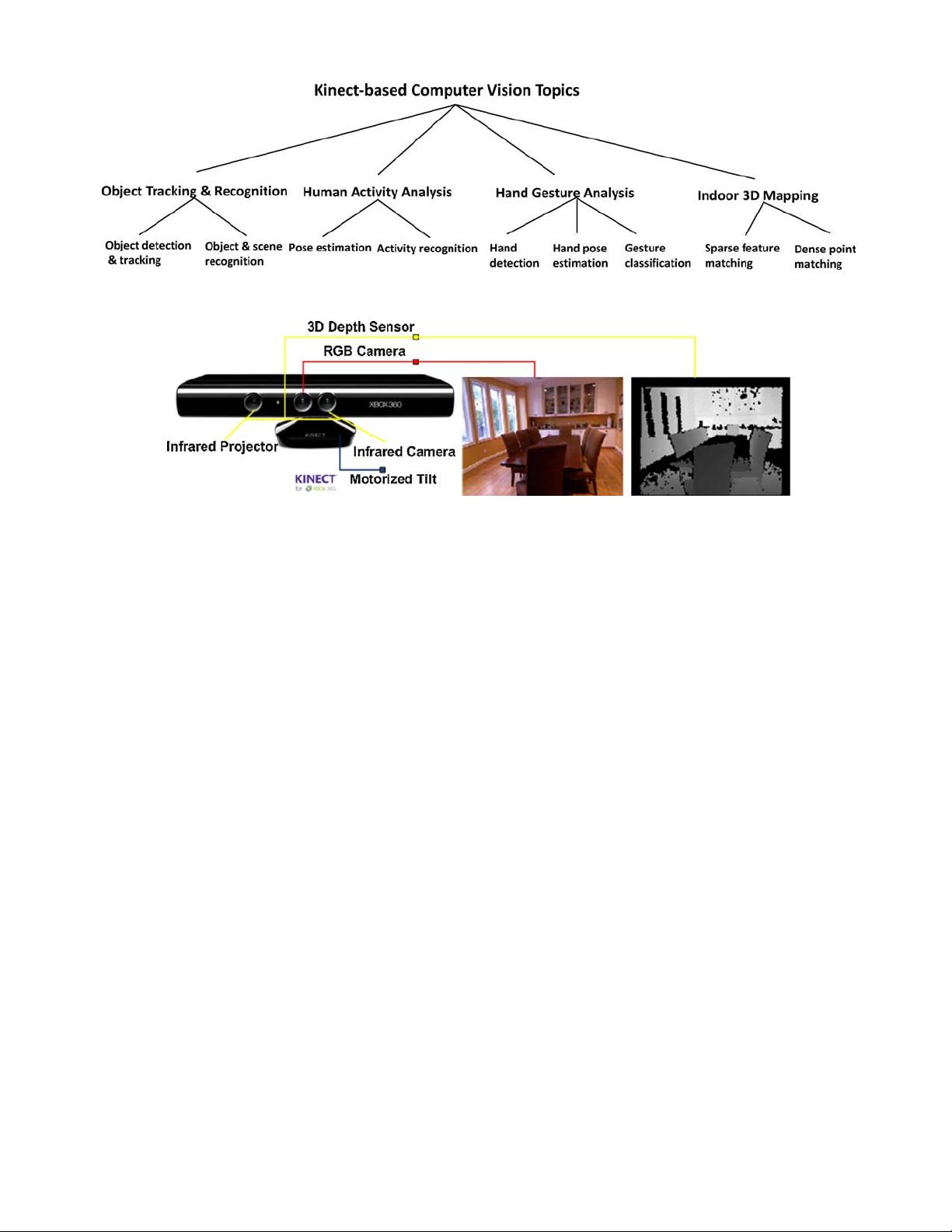

more popular with a substantial number of publications. Fig. 1

illustrates a tree-structured taxonomy that our review follows,

indicating the type of vision problems that can be addressed

or enhanced by means of the Kinect sensor. More specifically,

the reviewed topics include object tracking and recognition,

human activity analysis, hand gesture recognition, and indoor

3-D mapping. The broad diversity of topics clearly shows the

potential impact of Kinect in the computer vision field. We do

not contemplate details of particular algorithms or results of

comparative experiments but summarize main paths that most

approaches follow and point out their contributions.

To date, we have found only one other survey-like paper that introduces Kinect-related research [4]. The objective of that paper is to unravel the intelligent technologies encoded in Kinect, such as sensor calibration, human skeletal tracking, and facial-expression tracking. It also demonstrates a prototype system that employs multiple Kinects in an immersive teleconferencing application. The major difference between our paper and [4] is that [4] tries to answer what is inside Kinect, while our paper intends to give insights into how researchers exploit and improve computer vision algorithms using Kinect.

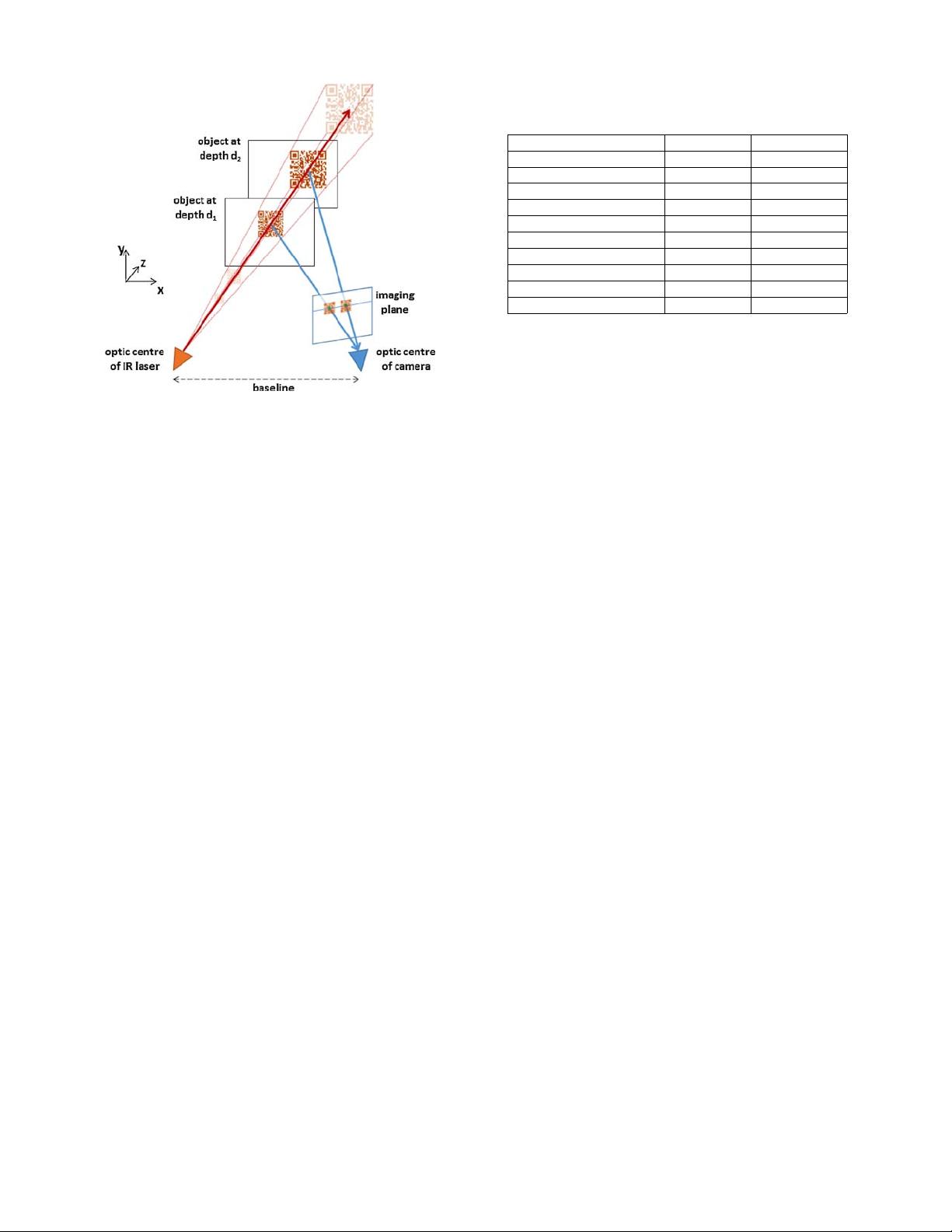

The rest of the paper is organized as follows. In Section II, we discuss the mechanism of the Kinect sensor, taking both hardware and software into account. The purpose is to answer what signals the Kinect can output and what advantages the Kinect offers compared to conventional cameras in the context of several classical vision problems. In Section III, we introduce two preprocessing steps: Kinect recalibration and depth data filtering. From Section IV to Section VII, we give technical overviews of object tracking and recognition, human activity analysis, hand gesture recognition, and indoor 3-D mapping, respectively. Section VIII summarizes the corresponding challenges of each topic and reports the major trends in this exciting domain.

2168-2267/$31.00 © 2013 IEEE
