CS221 Project Report:

Webcam-based Gaze Estimation

Luke Allen (source@stanford.edu) and

Adam Jensen (oojensen@stanford.edu)

Our project uses a laptop webcam to track a user’s gaze location on the screen, for use as

a user interface. At a high level: we use a variety of image-processing techniques to produce

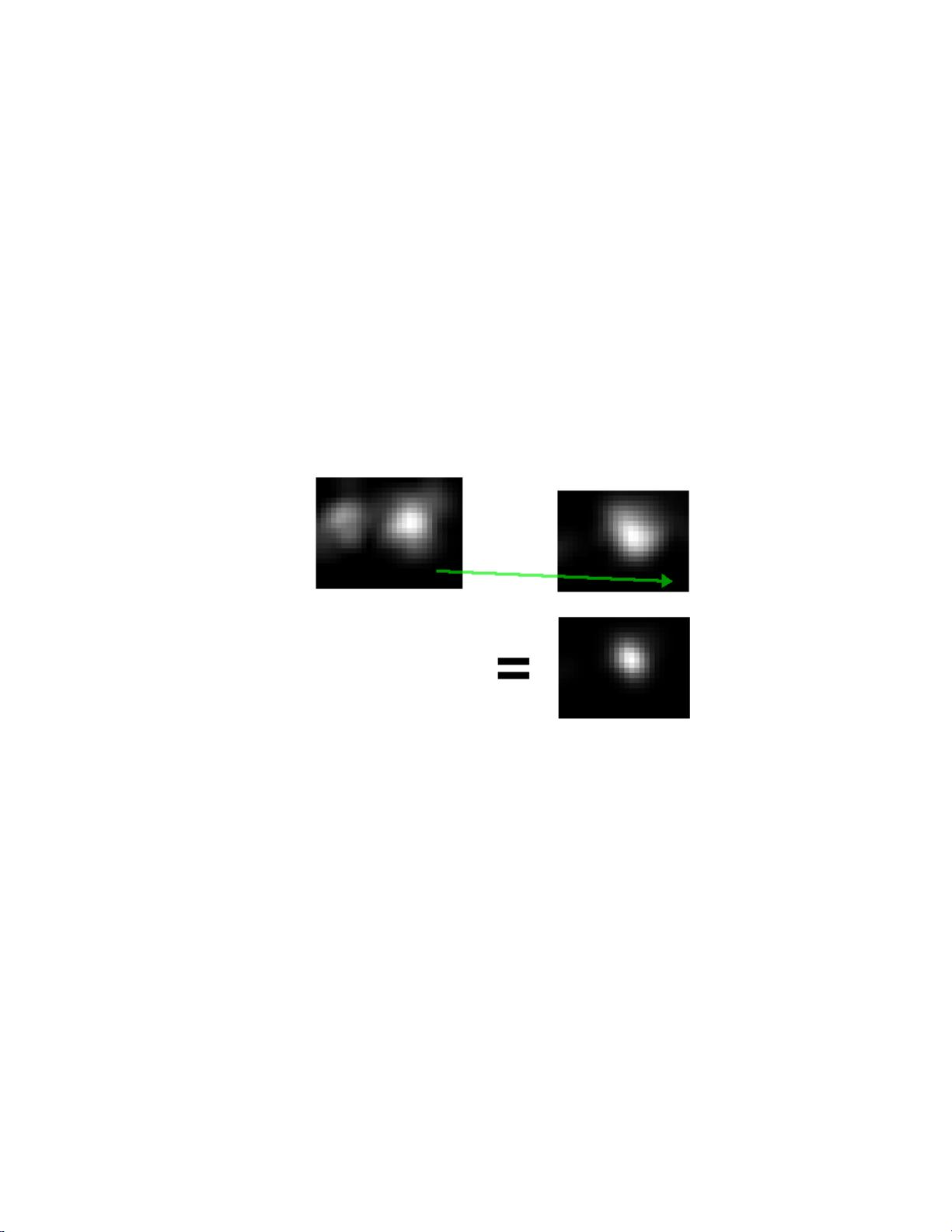

probability estimates for the location of each pupil center. We overlay and multiply these

probability matrices (effectively applying direct inference at every pixel) to estimate the most

likely pupil position given the probabilities from both eyes. We then use least-squares to train a

transformation from pupil position to screen coordinates. Our algorithm can estimate gaze

location in real time, within approximately 3cm in favorable conditions.
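
The last two steps of this pipeline can be illustrated with a minimal NumPy sketch. It is not the project's actual code: the function names are hypothetical, the two probability maps are assumed to be aligned and of equal shape, and the pupil-to-screen transformation is assumed to be affine, which the summary above does not specify.

```python
import numpy as np


def combine_pupil_maps(left_prob, right_prob):
    """Multiply two aligned per-pixel probability maps and locate the peak.

    Returns the (row, col) of the most likely pupil position and the
    renormalized joint map. Assumes both maps have the same shape.
    """
    joint = np.asarray(left_prob, dtype=float) * np.asarray(right_prob, dtype=float)
    total = joint.sum()
    if total > 0:
        joint = joint / total  # renormalize so the joint map sums to 1
    row, col = np.unravel_index(np.argmax(joint), joint.shape)
    return (int(row), int(col)), joint


def _design_matrix(pupil_xy):
    """Build rows of [px, py, 1] for an affine model (an assumed model form)."""
    pupil_xy = np.atleast_2d(np.asarray(pupil_xy, dtype=float))
    return np.column_stack([pupil_xy, np.ones(len(pupil_xy))])


def fit_gaze_map(pupil_xy, screen_xy):
    """Least-squares fit of screen coordinates from pupil coordinates.

    Returns a (3, 2) coefficient matrix mapping [px, py, 1] to (sx, sy).
    """
    screen_xy = np.asarray(screen_xy, dtype=float)
    coeffs, *_ = np.linalg.lstsq(_design_matrix(pupil_xy), screen_xy, rcond=None)
    return coeffs


def predict_gaze(coeffs, pupil_xy):
    """Apply the fitted transformation to one or more pupil positions."""
    return _design_matrix(pupil_xy) @ coeffs
```

In this sketch, calibration would consist of recording pupil positions while the user looks at known screen points, passing both lists to `fit_gaze_map`, and then calling `predict_gaze` on each new frame.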

Roughly Locating Eyes in the Original Image

Our algorithm begins by using the OpenCV library’s implementation of the Haar cascade

detector [1] to locate a face and the two eyes in a video frame:

Figure 1: Haar Cascade detection of face and eyes

We use known face geometry to remove spurious eye and face detections, producing very

reliable bounding boxes around the user’s eyes.
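
As an illustration of this kind of geometric filtering, here is a minimal sketch. The report does not state the exact rules used, so the checks and thresholds below are assumptions, and `filter_eyes` is a hypothetical helper. Boxes are `(x, y, w, h)` tuples in pixels, the format returned by OpenCV's `CascadeClassifier.detectMultiScale`.

```python
def _largest(boxes):
    """Return the box with the largest area, or None if the list is empty."""
    return max(boxes, key=lambda b: b[2] * b[3]) if boxes else None


def filter_eyes(face, eyes):
    """Keep at most one eye box per side of a face box.

    Returns (left_eye, right_eye), where "left" and "right" refer to the
    image's left and right halves of the face. All thresholds are
    illustrative assumptions, not values from the report.
    """
    fx, fy, fw, fh = face
    left, right = [], []
    for (ex, ey, ew, eh) in eyes:
        cx = ex + ew / 2.0
        cy = ey + eh / 2.0
        # An eye center should fall inside the upper half of the face box.
        if not (fx <= cx <= fx + fw and fy <= cy <= fy + fh / 2.0):
            continue
        # An eye box should be a modest fraction of the face width.
        if not (0.1 * fw <= ew <= 0.5 * fw):
            continue
        side = left if cx < fx + fw / 2.0 else right
        side.append((ex, ey, ew, eh))
    return _largest(left), _largest(right)
```

A detection in the lower half of the face (for example, a mouth corner misclassified as an eye) or outside the face box is discarded, and when several candidates remain on one side the largest is kept.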

Finding Pupil Centers

To estimate gaze direction, we must find the pupil center within the bounding box for

each eye. For this, we have implemented a gradient-based method [2] [3] which uses the fact that

image gradients at the border of the iris and pupil tend to point directly away from the center of

the pupil. It works as follows: