
Visibility-Aware Point-Based Multi-View Stereo Network

Rui Chen , Songfang Han, Jing Xu , and Hao Su

Abstract—We introduce VA-Point-MVSNet, a novel visibility-aware point-based deep framework for multi-view stereo (MVS). Distinct

from existing cost volume approaches, our method directly processes the target scene as point clouds. More specifically, our method

predicts the depth in a coarse-to-fine manner. We first generate a coarse depth map, convert it into a point cloud and refine the point cloud

iteratively by estimating the residual between the depth of the current iteration and that of the ground truth. Our network leverages 3D

geometry priors and 2D texture information jointly and effectively by fusing them into a feature-augmented point cloud, and processes the

point cloud to estimate the 3D flow for each point. This point-based architecture allows higher accuracy, more computational efficiency

and more flexibility than cost-volume-based counterparts. Furthermore, our visibility-aware multi-view feature aggregation allows the

network to aggregate multi-view appearance cues while taking into account visibility. Experimental results show that our approach

achieves a significant improvement in reconstruction quality compared with state-of-the-art methods on the DTU and the Tanks and

Temples dataset. The code of VA-Point-MVSNet proposed in this work will be released at https://github.com/callmeray/PointMVSNet.

Index Terms—Multi-view stereo, 3D deep learning

1 INTRODUCTION

MULTI-VIEW stereo (MVS) aims to reconstruct the dense

geometry of a 3D object from a sequence of images and

corresponding camera poses and intrinsic parameters. MVS

has been widely used in various applications, including

autonomous driving, robot navigation, and remote sens-

ing [1], [2]. Recent learning-based MVS methods [3], [4], [5]

have shown great success compared with their traditional

counterparts as learning-based approaches are able to learn to

take advantage of scene global semantic information, includ-

ing object materials, specularity, 3D geometry priors, and

environmental illumination, to get more robust matching and

more complete reconstruction. Most of these approaches

apply dense multi-scale 3D CNNs to predict the depth map

or voxel occupancy. However, 3D CNNs require memory

cubic to the model resolution, which can be potentially pro-

hibitive to achieving optimal performance. While Tatarch-

enko et al. [6] addressed this problem by progressively

generating an Octree structure, the quantization artifacts

brought by grid partitioning still remain, and errors may

accumulate since the tree is generated layer by layer. Moreover, MVS fundamentally relies on finding photo-consistency across the input images. However, image appearance cues from invisible views, i.e., views in which a point is occluded or out of the field of view (FOV), are not consistent with those from visible views. Such cues mislead depth prediction and therefore need robust handling.

In this work, we propose a novel Visibility-Aware Point-

based Multi-View Stereo Network (VA-Point-MVSNet),

where the target scene is directly processed as a point cloud,

a more efficient representation, particularly when the 3D

resolution is high. Our framework is composed of two steps:

first, in order to carve out the approximate object surface

from the whole scene, an initial coarse depth map is gener-

ated by a relatively small 3D cost volume and then con-

verted to a point cloud. Subsequently, our novel PointFlow

module is applied to iteratively regress accurate and dense

point clouds from the initial point cloud. Similar to

ResNet [7], we explicitly formulate the PointFlow to predict

the residual between the depth of the current iteration and

that of the ground truth. The 3D flow is estimated based on

geometry priors inferred from the predicted point cloud

and the 2D image appearance cues dynamically fetched

from multi-view input images (Fig. 1). Moreover, in order

to take into account visibility, including occlusion and out

of FOV, for accurate MVS reconstruction, we propose a

number of network structure alternatives that infer the visi-

bility of each view for the multi-view feature aggregation.
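The conversion from a coarse depth map to a point cloud mentioned above can be illustrated with a standard pinhole unprojection. This is a minimal sketch rather than the authors' implementation: the function name `unproject_depth`, the intrinsic matrix `K`, and the toy depth values are all assumptions chosen for illustration.

```python
import numpy as np

def unproject_depth(depth, K):
    """Lift an (H, W) depth map to an (H*W, 3) point cloud in camera coordinates.

    Assumes a pinhole camera with 3x3 intrinsics K: pixel (u, v) with depth d
    maps to d * K^{-1} [u, v, 1]^T.
    """
    h, w = depth.shape
    # v indexes rows (image y), u indexes columns (image x).
    v, u = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    pixels = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3).astype(np.float64)
    rays = pixels @ np.linalg.inv(K).T      # one back-projected ray per pixel
    return rays * depth.reshape(-1, 1)      # scale each ray by its depth

# Hypothetical intrinsics and a constant toy depth map.
K = np.array([[100.0, 0.0, 2.0],
              [0.0, 100.0, 1.0],
              [0.0, 0.0, 1.0]])
depth = np.full((3, 4), 2.0)
points = unproject_depth(depth, K)
```

The pixel at the principal point (u = 2, v = 1) lands on the optical axis, so its 3D point has zero x and y and a z equal to its depth.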

We find that our VA-Point-MVSNet framework enjoys

advantages in accuracy, efficiency, and flexibility when it is

compared with previous MVS methods that are built upon

a predefined 3D cost volume with a fixed resolution to

aggregate information from views. Our method adaptively

samples potential surface points in the 3D space. It keeps

the continuity of the surface structure naturally, which is

necessary for high precision reconstruction. Furthermore,

because our network only processes valid information near

R. Chen and J. Xu are with the State Key Laboratory of Tribology, the

Beijing Key Laboratory of Precision/Ultra-Precision Manufacturing

Equipment Control, Department of Mechanical Engineering, Tsinghua

University, Beijing 100084, China.

E-mail: callmeray@163.com, jingxu@tsinghua.edu.cn.

S. Han is with The Hong Kong University of Science and Technology,

Hong Kong. E-mail: hansongfang@gmail.com.

H. Su is with the Department of Computer Science and Engineering,

University of California, San Diego, San Diego, CA 92093 USA.

E-mail: haosu@eng.ucsd.edu.

Manuscript received 13 Oct. 2019; revised 2 Apr. 2020; accepted 15 Apr. 2020.

Date of publication 22 Apr. 2020; date of current version 2 Sept. 2021.

(Corresponding author: Jing Xu.)

Recommended for acceptance by T. Hassner.

Digital Object Identifier no. 10.1109/TPAMI.2020.2988729

IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE, VOL. 43, NO. 10, OCTOBER 2021 3695

This work is licensed under a Creative Commons Attribution 4.0 License. For more information, see https://creativecommons.org/licenses/by/4.0/

the object surface instead of the whole 3D space as is the

case in 3D CNNs, the computation is much more efficient.

The adaptive refinement scheme allows us to first peek at

the scene at a coarse resolution and then densify the recon-

structed point cloud only in the region of interest (ROI). For

scenarios such as interaction-oriented robot vision, this flex-

ibility would result in saving of computational power.

Lastly, the visibility-aware multi-view feature aggregation

allows the network to aggregate multi-view appearance

cues while taking into account visibility, which excludes

misguiding image information from invisible views and

leads to improved reconstruction quality.
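To make the efficiency argument concrete, the number of cells in a dense cost volume can be compared with the number of point hypotheses kept near the surface. The sizes below (image resolution, 192 depth planes, 5 hypotheses per pixel) are hypothetical values chosen for illustration, not figures reported in this section.

```python
def cost_volume_cells(height, width, depth_planes):
    """Cells a dense cost volume must store: one per pixel per depth plane."""
    return height * width * depth_planes

def point_hypotheses(height, width, hypotheses_per_pixel):
    """Points processed when only a few hypotheses near the surface are kept."""
    return height * width * hypotheses_per_pixel

# Hypothetical sizes for illustration only.
h, w = 160, 120
dense = cost_volume_cells(h, w, 192)
sparse = point_hypotheses(h, w, 5)
ratio = dense / sparse  # how many times more cells the dense volume holds
```

The ratio depends only on the number of depth planes versus hypotheses per pixel, which is why concentrating computation near the estimated surface pays off more as the depth resolution grows.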

Our method achieves state-of-the-art performance on standard multi-view stereo benchmarks among learning-based methods, including DTU [8] and Tanks and Temples [9]. Compared with previous state-of-the-art methods, our method produces better results in terms of both completeness and overall quality.

This article is an extension of our previous ICCV

work [10]. There are two main additional contributions in

this work:

1) We design the novel visibility-aware multi-view fea-

ture aggregation module, which takes into account

visibility when aggregating multi-view image fea-

tures and thus improves the reconstruction quality.

2) We create a synthetic MVS dataset using path tracing

renderer to generate accurate visibility masks, which

are not available from incomplete ground truth depth

maps captured by 3D sensors. We present an exten-

sive and comprehensive evaluation of our work on

both synthetic dataset and real dataset, and analyze

the effectiveness of each component, in particular our

novel visibility-aware multi-view feature aggregation

module, in our network through comparison and

ablation study.

2 RELATED WORK

Multi-View Stereo Reconstruction. MVS is a classical problem

that had been extensively studied before the rise of deep

learning. A number of 3D representations are adopted,

including volumes [11], [12], [13], deformation models [14],

[15], [16], and patches [17], [18], [19], which are iteratively

updated through multi-view photo-consistency and regulari-

zation optimization. Our iterative refinement procedure

shares a similar idea with these classical solutions by updat-

ing the depth map iteratively. However, our learning-based

algorithm achieves improved robustness to input image corruption and avoids tedious manual hyper-parameter tuning.

Occlusion-Robust MVS. Since MVS counts on finding cor-

respondences across input images, image appearance from

occluded views will cause mismatches and reduce the recon-

struction accuracy. Vogiatzis et al. [20] addressed this prob-

lem by designing a new metric of multi-view voting which

considers only points of local maximum and eliminates the

influence of occluded views on correspondence matching.

Further, Liu et al. [21] improved the metric by using Gaussian

filtering to counteract the effect of noise. COLMAP [22] and

some following works [23], [24] handled this problem by

dataset-wide pixel-wise view selection using patch color distribution. Our network learns to predict the pixel-wise visibility for all the given source views and uses the prediction in multi-view feature aggregation, which can be trained end-to-end and improves the robustness to occlusions.

Learning-Based MVS. Inspired by the recent success of

deep learning in image recognition tasks, researchers began

to apply learning techniques to stereo reconstruction tasks

for better patch representation and matching [25], [26], [27].

Although these methods in which only 2D networks are

used have made a great improvement on stereo tasks, it is

difficult to extend them to multi-view stereo tasks, and their

performance is limited in challenging scenes due to the lack

of contextual geometry knowledge. Concurrently, 3D cost

volume regularization approaches have been proposed [3],

[28], [29], where a 3D cost volume is built either in the camera

frustum or the scene. Next, the multi-view 2D image features

are warped in the cost volume, so that 3D CNNs can be

applied to it. The key advantage of 3D cost volume is that the

3D geometry of the scene can be captured by the network

explicitly, and the photo-metric matching can be performed

in 3D space, alleviating the influence of image distortion

caused by perspective transformation, which makes these

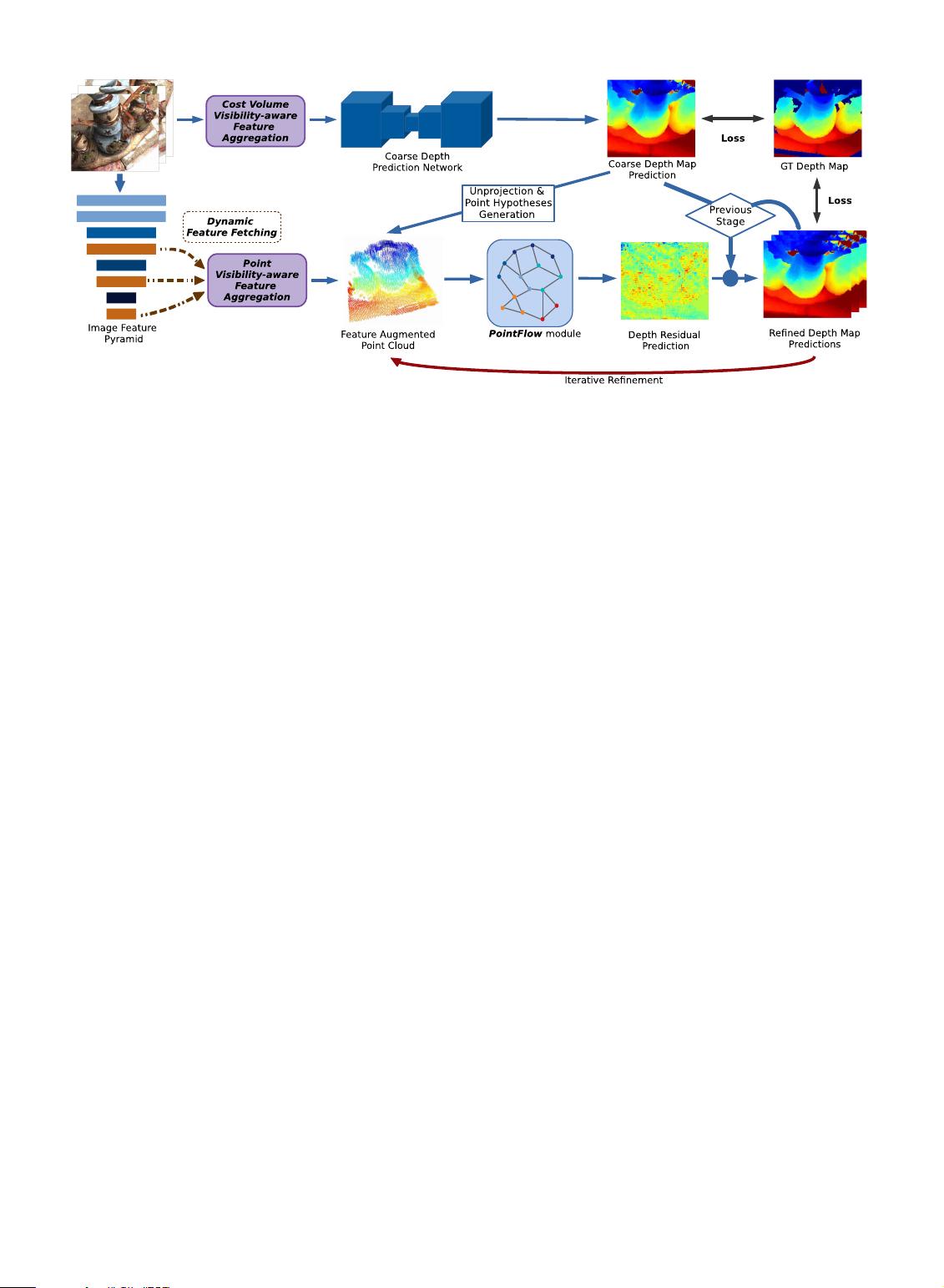

Fig. 1. VA-Point-MVSNet performs multi-view stereo reconstruction in a coarse-to-fine fashion, learning to predict the 3D flow of each point to the

ground truth surface based on geometry priors and 2D image appearance cues dynamically fetched from multi-view images and regress accurate

and dense point clouds iteratively.


methods achieve better results than 2D learning-based

methods.

More recently, Luo et al. [30] proposed to use a learnable

patchwise aggregation function and apply isotropic and ani-

sotropic 3D convolutions on the 3D cost volume to improve

the matching accuracy and robustness. Yao et al. [31] pro-

posed to replace 3D CNNs with recurrent neural networks,

which leads to improved memory efficiency. Xue et al. [32]

proposed MVSCRF, where multi-scale conditional random

fields (MSCRFs) are adopted to constrain the smoothness of

depth prediction explicitly. Instead of using voxel grids, in

this paper we propose to use a point-based network for MVS

tasks to take advantage of 3D geometry learning without

being burdened by the inefficiency found in 3D CNN

computation.

Besides depth map prediction, deep learning can also be

used to refine depth maps and fuse them into a single con-

sistent reconstruction [33].

High-Resolution & Hierarchical MVS. High-resolution MVS

is critical to real applications such as robot manipulation and

augmented reality. Traditional methods [17], [34], [35] gener-

ate dense 3D patches by expanding from confident matching

key-points repeatedly, which is potentially time-consuming.

These methods are also sensitive to noise and change of view-

point owing to the usage of hand-crafted features. Hierarchi-

cal MVS generates high-resolution depth maps in a coarse-to-

fine manner, which reduces unnecessary computation and

leads to improved efficiency. For classic methods, hierarchi-

cal MI (mutual information) computation is utilized to initial-

ize and refine disparity maps [36], [37]. And learning-based

methods are proposed to predict the residual of the depth

map from warped images [38] or by constructing cascade

narrow cost volume [39], [40]. In our work, we use point

clouds as the representation of the scene, which explicitly

encodes the spatial position and relationship as important

cues for depth residual prediction and also is more flexible

for potential applications (e.g., foveated depth inference).

Point-Based 3D Learning. Recently, a new type of deep

network architecture has been proposed in [41], [42], which

is able to process point clouds directly without converting

them to volumetric grids. Compared with voxel-based

methods, this kind of architecture concentrates on the point

cloud data and saves unnecessary computation. Also, the

continuity of space is preserved during the process. While

PointNets have shown significant performance and effi-

ciency improvement in various 3D understanding tasks,

such as object classification and detection [42], it remains under-explored how this architecture can be used for the MVS task, where the 3D scene is unknown to the network. In this paper, we propose the PointFlow module, which estimates the 3D flow based on joint 2D-3D features of point hypotheses.

3 METHOD

This section describes the detailed network architecture of

VA-Point-MVSNet (Fig. 2). Our method can be divided into

two steps, coarse depth prediction, and iterative depth

refinement. First, we introduce the visibility-aware feature

aggregation (Section 3.1), which reasons about the visibility

of source images from image appearance cues and aggre-

gates multi-view image information while considering visi-

bility. The visibility-aware feature aggregation is utilized in

both coarse depth prediction and iterative depth refinement.

Second, we describe the coarse depth map prediction. Let $I_0$ denote the reference image and $\{I_i\}_{i=1}^{N}$ denote a set of its neighboring source images. Since the resolution is low, the existing volumetric MVS method has sufficient efficiency and can be used to predict a coarse depth map for $I_0$ (Section 3.2). Then we describe the 2D-3D feature lifting

(Section 3.3), which associates the 2D image information with

3D geometry priors. Finally we propose our novel PointFlow

module (Section 3.4) to iteratively refine the input depth map

to higher resolution with improved accuracy.
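The iterative refinement step can be pictured as adding a predicted depth residual to the current depth of each point. The sketch below assumes a soft-argmax formulation over a small set of hypotheses spaced evenly along the viewing ray; this excerpt does not spell out that formulation, so treat it as an assumption. The `logits` array stands in for network outputs, and `expected_depth_residual` and `step` are hypothetical names.

```python
import numpy as np

def expected_depth_residual(logits, step):
    """Soft-argmax over 2m+1 depth hypotheses at offsets k*step, k = -m..m.

    logits: (N, 2m+1) per-point scores (a stand-in for network outputs).
    Returns the probability-weighted offset for each of the N points.
    """
    n_hyp = logits.shape[1]
    m = (n_hyp - 1) // 2
    offsets = step * np.arange(-m, m + 1)
    # Numerically stable softmax across the hypotheses of each point.
    shifted = logits - logits.max(axis=1, keepdims=True)
    prob = np.exp(shifted)
    prob /= prob.sum(axis=1, keepdims=True)
    return prob @ offsets

# Two points: one with no preference, one strongly favoring the farthest hypothesis.
depth = np.array([2.0, 3.0])
logits = np.array([[0.0, 0.0, 0.0, 0.0, 0.0],
                   [-50.0, -50.0, -50.0, -50.0, 50.0]])
refined = depth + expected_depth_residual(logits, step=0.1)
```

With uniform scores the residual is zero and the depth is unchanged; with a confident score on the last hypothesis the depth moves by roughly two steps. Repeating the update with a smaller `step` would give a coarse-to-fine schedule.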

3.1 Visibility-Aware Feature Aggregation

The main intuition for depth estimation is multi-view

photo-consistency, that image projections of the recon-

structed shape should be consistent across visible images.
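One simple way to see why visibility matters for photo-consistency is to compare a plain per-channel variance across views with a visibility-weighted one. The sketch below is a generic weighted mean and variance, not the aggregation module proposed in the paper (whose details lie beyond this excerpt). The function name, the feature values, and the binary visibility weights are assumptions for illustration.

```python
import numpy as np

def visibility_weighted_stats(features, visibility, eps=1e-8):
    """Weighted mean and variance of per-view features for one 3D point.

    features:   (V, C) feature vector fetched from each of V views.
    visibility: (V,) weights in [0, 1]; 0 means the point is not visible.
    """
    w = visibility[:, None]
    total = w.sum() + eps                     # eps guards against all-zero weights
    mean = (w * features).sum(axis=0) / total
    var = (w * (features - mean) ** 2).sum(axis=0) / total
    return mean, var

# Two consistent views and one occluded view with unrelated appearance.
features = np.array([[1.0, 2.0],
                     [1.0, 2.0],
                     [9.0, -4.0]])
visible = np.array([1.0, 1.0, 0.0])
mean, var = visibility_weighted_stats(features, visible)
plain_var = features.var(axis=0)              # unweighted variance across all views
```

The unweighted variance is large because of the occluded view, which would wrongly suggest a poor depth hypothesis, while the weighted variance stays near zero for the two consistent views.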

Fig. 2. Overview of VA-Point-MVSNet architecture. The visibility-aware feature aggregation module aggregates the multi-view image appearance

cues to generate visibility-robust features for coarse depth prediction and depth refinement separately. A coarse depth map is first predicted with low

GPU memory and computation cost and then unprojected to a point cloud along with hypothesized points. For each point, the feature is fetched from

the multi-view image feature pyramid dynamically. The PointFlow module uses the feature-augmented point cloud for depth residual prediction, and

the depth map is refined iteratively along with up-sampling.
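Fetching a feature for each 3D point from a view's feature map amounts to projecting the point into that view and sampling the map at the resulting sub-pixel location. The following is a minimal sketch under the assumption of a pinhole camera with world-to-camera rotation `R`, translation `t`, and intrinsics `K`. The helper names and the border clamping are illustrative choices, not details taken from the paper.

```python
import numpy as np

def project_points(points_world, K, R, t):
    """Project (N, 3) world points to (N, 2) pixel coordinates (u, v)."""
    cam = points_world @ R.T + t
    uvw = cam @ K.T
    return uvw[:, :2] / uvw[:, 2:3]

def bilinear_fetch(feature_map, uv):
    """Sample an (H, W, C) feature map at (N, 2) sub-pixel (u, v) locations.

    Coordinates are clamped to the image border (an assumption; out-of-view
    points would need separate handling in practice).
    """
    h, w, _ = feature_map.shape
    u = np.clip(uv[:, 0], 0, w - 1)
    v = np.clip(uv[:, 1], 0, h - 1)
    u0 = np.floor(u).astype(int)
    v0 = np.floor(v).astype(int)
    u1 = np.minimum(u0 + 1, w - 1)
    v1 = np.minimum(v0 + 1, h - 1)
    au = (u - u0)[:, None]
    av = (v - v0)[:, None]
    top = (1 - au) * feature_map[v0, u0] + au * feature_map[v0, u1]
    bottom = (1 - au) * feature_map[v1, u0] + au * feature_map[v1, u1]
    return (1 - av) * top + av * bottom

# Toy feature map whose two channels store the pixel's own (u, v) coordinates.
vv, uu = np.meshgrid(np.arange(3), np.arange(3), indexing="ij")
feature_map = np.stack([uu, vv], axis=-1).astype(float)

K, R, t = np.eye(3), np.eye(3), np.zeros(3)   # hypothetical identity camera
uv = project_points(np.array([[1.0, 3.0, 2.0]]), K, R, t)
fetched = bilinear_fetch(feature_map, uv)
```

Because the toy feature map is linear in the pixel coordinates, the fetched feature equals the projected location itself, which makes the interpolation easy to check by hand.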

