BLEI,NG, AND JORDAN

word in the entire corpus (generally on a log scale, and again suitably normalized). The end result

is a term-by-document matrix X whose columns contain the tf-idf values for each of the documents

in the corpus. Thus the tf-idf scheme reduces documents of arbitrary length to fixed-length lists of

numbers.

While the tf-idf reduction has some appealing features—notably in its basic identification of sets

of words that are discriminative for documents in the collection—the approach also provides a rela-

tively small amount of reduction in description length and reveals little in the way of inter- or intra-

document statistical structure. To address these shortcomings, IR researchers have proposed several

other dimensionality reduction techniques, most notably latent semantic indexing (LSI) (Deerwester

et al., 1990). LSI uses a singular value decomposition of the X matrix to identify a linear subspace

in the space of tf-idf features that captures most of the variance in the collection. This approach can

achieve significant compression in large collections. Furthermore, Deerwester et al. argue that the

derived features of LSI, which are linear combinations of the original tf-idf features, can capture

some aspects of basic linguistic notions such as synonymy and polysemy.

To substantiate the claims regarding LSI, and to study its relative strengths and weaknesses, it is

useful to develop a generative probabilistic model of text corpora and to study the ability of LSI to

recover aspects of the generative model from data (Papadimitriou et al., 1998). Given a generative

model of text, however, it is not clear why one should adopt the LSI methodology—one can attempt

to proceed more directly, fitting the model to data using maximum likelihood or Bayesian methods.

A significant step forward in this regard was made by Hofmann (1999), who presented the

probabilistic LSI (pLSI) model, also known as the aspect model, as an alternative to LSI. The pLSI

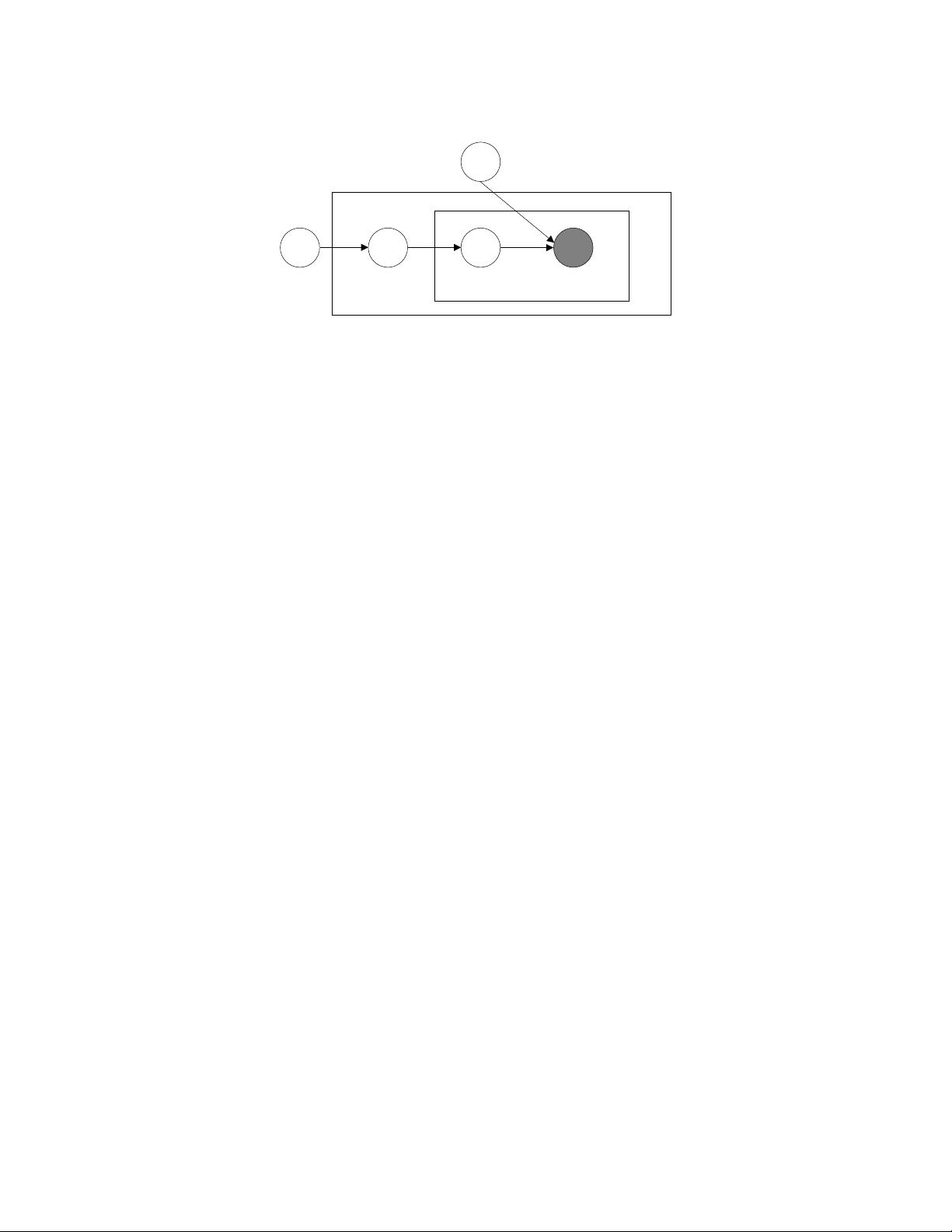

approach, which we describe in detail in Section 4.3, models each word in a document as a sample

from a mixture model, where the mixture components are multinomial random variables that can be

viewed as representations of “topics.” Thus each word is generated from a single topic, and different

words in a document may be generated from different topics. Each document is represented as

a list of mixing proportions for these mixture components and thereby reduced to a probability

distribution on a fixed set of topics. This distribution is the “reduced description” associated with

the document.

While Hofmann’s work is a useful step toward probabilistic modeling of text, it is incomplete

in that it provides no probabilistic model at the level of documents. In pLSI, each document is

represented as a list of numbers (the mixing proportions for topics), and there is no generative

probabilistic model for these numbers. This leads to several problems: (1) the number of parame-

ters in the model grows linearly with the size of the corpus, which leads to serious problems with

overfitting, and (2) it is not clear how to assign probability to a document outside of the training set.

To see how to proceed beyond pLSI, let us consider the fundamental probabilistic assumptions

underlying the class of dimensionality reduction methods that includes LSI and pLSI. All of these

methods are based on the “bag-of-words” assumption—that the order of words in a document can

be neglected. In the language of probability theory, this is an assumption of exchangeability for the

words in a document (Aldous, 1985). Moreover, although less often stated formally, these methods

also assume that documents are exchangeable; the specific ordering of the documents in a corpus

can also be neglected.

A classic representation theorem due to de Finetti (1990) establishes that any collection of ex-

changeable random variables has a representation as a mixture distribution—in general an infinite

mixture. Thus, if we wish to consider exchangeable representations for documents and words, we

need to consider mixture models that capture the exchangeability of both words and documents.

994

ZY_GDUT2013-05-21英文看起来很吃力,不过还是很不错的

ZY_GDUT2013-05-21英文看起来很吃力,不过还是很不错的 lyh8882013-04-22Blei博士最早的关于LDA模型的论文,现在看起来已经有点老了

lyh8882013-04-22Blei博士最早的关于LDA模型的论文,现在看起来已经有点老了 我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功