From Sparse Solutions of Systems...

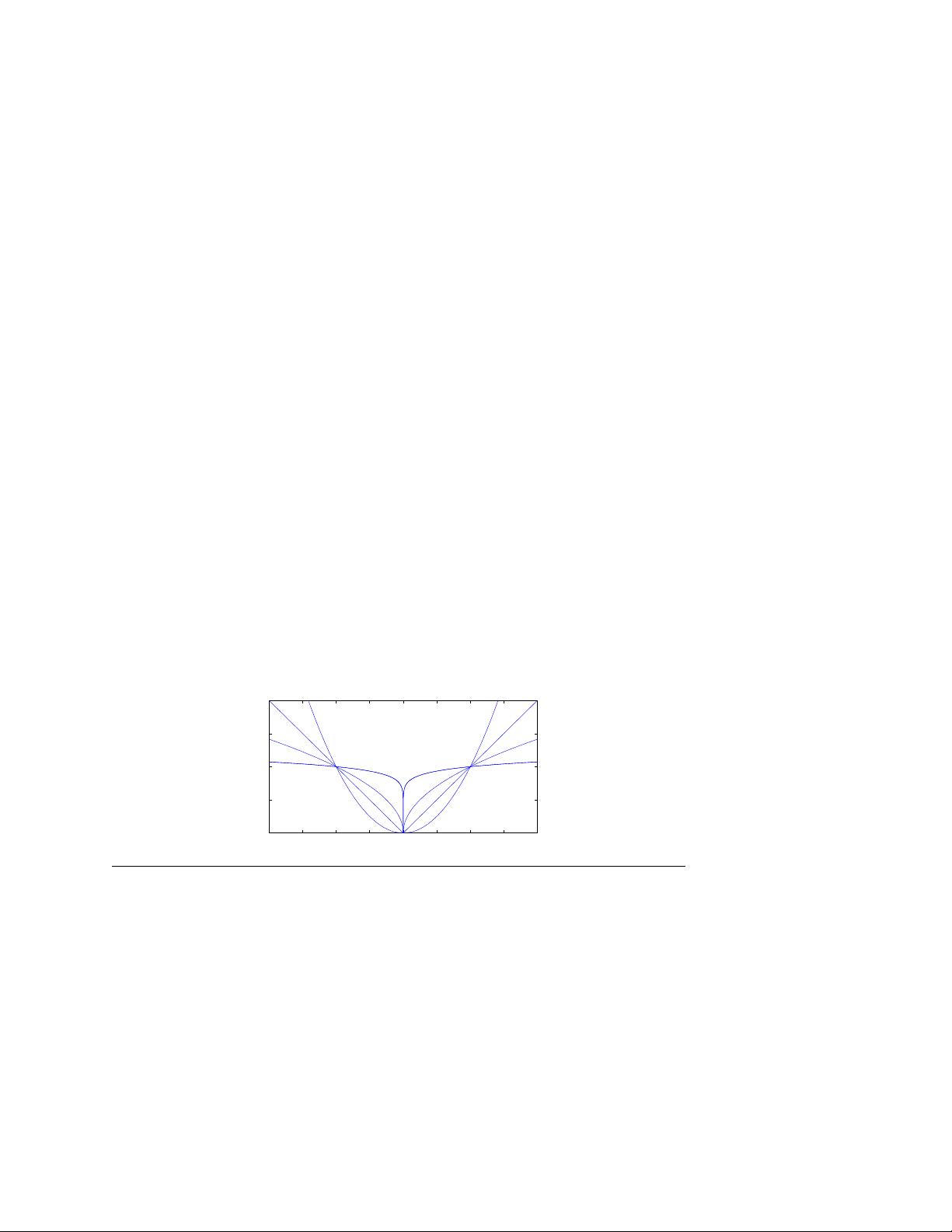

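### On Sparse Solutions of Systems of Equations and Sparse Modeling of Signals and Images

#### Overview

This article surveys the theoretical and applied progress that leads from sparse solutions of systems of equations to sparse modeling of signals and images. The starting point is a basic question in linear algebra: given an underdetermined linear system \(Ax = b\), where \(A \in \mathbb{R}^{n \times m}\) and \(n < m\), how can we find its sparsest solution, that is, the solution with the fewest nonzero entries? Such solutions matter in many practical settings, especially in signal and image processing.

#### Basic Concepts of Sparse Solutions

When \(A\) has full row rank, an underdetermined system has infinitely many solutions. In some applications, however, we want the sparsest one: the solution vector with as few nonzero entries as possible. Writing \(\|x\|_0\) for the number of nonzero entries of \(x\), this is the problem \(\min_x \|x\|_0\) subject to \(Ax = b\). Sparse solutions of this kind are particularly important in signal processing and compressed sensing.

#### Theoretical Background

1. **Uniqueness**: although an underdetermined system has infinitely many solutions, under specific conditions the sparsest solution is unique.
2. **Computation**: optimizing sparsity is inherently a combinatorial problem, yet in recent years efficient algorithms for finding the sparsest solution have been developed, such as Basis Pursuit (which replaces \(\|x\|_0\) with the convex \(\ell_1\) norm) and Matching Pursuit (which greedily selects one column of \(A\) at a time).

#### Conditions and Results

Recovering the sparsest solution requires conditions on the matrix \(A\). For example, its columns must not be too strongly correlated, which is usually measured by the mutual coherence

\[
\mu(A) = \max_{i \neq j} \frac{|a_i^{T} a_j|}{\|a_i\|_2 \, \|a_j\|_2},
\]

where \(a_i\) denotes the \(i\)-th column of \(A\). When \(A\) satisfies such conditions, one can prove that the sparse solution is unique and recoverable, and that efficient algorithms find it. For instance, if a solution satisfies \(\|x\|_0 < \tfrac{1}{2}\left(1 + 1/\mu(A)\right)\), it is the unique sparsest solution, and both Basis Pursuit and Orthogonal Matching Pursuit recover it.

#### Applications

- **Signal processing**: sparse representations play a key role in compressed sensing and denoising. For example, they enable efficient data compression while preserving signal quality.
- **Image processing**: sparse models also offer clear advantages in image restoration, deblurring, and super-resolution. Representing an image sparsely makes it possible to recover, to some extent, information that has been lost or blurred.

#### Specific Examples

- **Inverse problems**: sparse models help stabilize inverse problems that are sensitive to noise in the data. Seeking a sparse solution suppresses the effect of the noise and improves reconstruction quality.
- **Compression**: in image compression, sparse representations can achieve higher compression ratios while keeping good visual quality, which is especially important for storing and transmitting large volumes of image data.

#### Conclusion

This article discusses in depth the theory and techniques that lead from sparse solutions of systems of equations to sparse modeling of signals and images. Theoretical analysis and experiments show that, under suitable conditions, the sparsest solution of an underdetermined system is unique and can be found by efficient algorithms. These results enrich the mathematical theory and also give strong support to practical applications in signal and image processing. Future work will continue to pursue more efficient algorithms and a broader range of applications.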
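The coherence condition and the greedy pursuit described above can be checked numerically. The sketch below is a minimal illustration under stated assumptions, not the authors' code: the helper names `mutual_coherence`, `coherence_sparsity_bound`, `omp` and `sylvester_hadamard` are hypothetical, NumPy is assumed, and the test dictionary (a 64×64 identity placed next to a normalized 64×64 Hadamard matrix) is an assumed example chosen because its mutual coherence, the largest normalized inner product between two distinct columns, is exactly 1/8. The bound \(\tfrac{1}{2}(1 + 1/\mu)\) is then 4.5, so any 4-sparse solution is the unique sparsest one and Orthogonal Matching Pursuit (OMP) should recover it.

```python
import numpy as np


def mutual_coherence(A):
    """Largest |<a_i, a_j>| / (||a_i|| ||a_j||) over distinct columns i != j."""
    G = A / np.linalg.norm(A, axis=0)  # normalize every column to unit length
    gram = np.abs(G.T @ G)
    np.fill_diagonal(gram, 0.0)        # ignore each column's product with itself
    return gram.max()


def coherence_sparsity_bound(A):
    """Solutions with fewer than (1 + 1/mu) / 2 nonzeros are the unique sparsest ones."""
    return 0.5 * (1.0 + 1.0 / mutual_coherence(A))


def omp(A, b, k, tol=1e-10):
    """Orthogonal Matching Pursuit: greedily select at most k columns of A to explain b."""
    m = A.shape[1]
    norms = np.linalg.norm(A, axis=0)
    support, coef = [], np.zeros(0)
    residual = np.array(b, dtype=float)
    for _ in range(k):
        if np.linalg.norm(residual) <= tol:
            break                                    # b is already explained
        scores = np.abs(A.T @ residual) / norms      # normalized correlation with residual
        scores[support] = 0.0                        # never reselect a chosen column
        support.append(int(np.argmax(scores)))
        # Re-fit all selected coefficients by least squares (the "orthogonal" step)
        coef, *_ = np.linalg.lstsq(A[:, support], b, rcond=None)
        residual = b - A[:, support] @ coef
    x = np.zeros(m)
    x[support] = coef
    return x


def sylvester_hadamard(p):
    """2**p x 2**p Hadamard matrix built by Sylvester's doubling construction."""
    H = np.array([[1.0]])
    for _ in range(p):
        H = np.block([[H, H], [H, -H]])
    return H


# Assumed test dictionary: identity next to a normalized Hadamard basis (n = 64, m = 128)
n = 64
A = np.hstack([np.eye(n), sylvester_hadamard(6) / np.sqrt(n)])
print(mutual_coherence(A), coherence_sparsity_bound(A))  # 0.125 4.5

# A 4-sparse vector: 4 < 4.5, so it is the unique sparsest solution of Ax = b
x_true = np.zeros(2 * n)
x_true[[5, 20, 70, 100]] = [1.0, -2.0, 1.5, 0.5]
b = A @ x_true
x_hat = omp(A, b, k=4)
print(np.allclose(x_hat, x_true, atol=1e-9))  # True
```

The coherence bound is a worst-case guarantee: a matrix with larger \(\mu(A)\) yields a smaller admissible sparsity level. Basis Pursuit is not shown here because it needs a linear-programming (or other convex) solver.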
