side effects, as we believe this information will be useful to others.

While our experiments were done in the context of RoF, we expect

similar systems to require similar configuration, and hope that our

methodology and results will help reduce the tuning time beyond

the scope of this specific study.

In summary, this paper makes the following contributions:

• We present our RocksDB benchmark results and analysis for

several workloads under the two main clouds: EC2 and GCE;

• We describe our tuning process, the parameters that had the

largest positive effect on performance, and their optimal set-

tings; and

• We describe negative results for tuning efforts that either did

not pan out, reduced performance, or had non-intuitive side

effects.

2. BACKGROUND

This section briefly overviews Redis, Redis on Flash, and

RocksDB. It describes the high-level architecture of these systems

and provides the necessary background for understanding the de-

tails brought up in the rest of the paper.

2.1 Redis

Redis (Remote Dictionary Server) [8] is a popular open-source in-memory

key-value store that provides advanced key-value abstractions.

Redis is single-threaded: it handles a command from just one

client at a time in the process's main thread. Unlike traditional KV

systems where keys are of a simple data type (usually strings), keys

in Redis can function as complex data types such as hashes, lists,

sets, and sorted sets. Furthermore, Redis enables complex atomic

operations on these data types (e.g., enqueuing and dequeuing from

a list, inserting a new value with a given score to a sorted set, etc.).

Redis's abstractions and high ingestion speed have proven to be particularly

useful for many latency-sensitive tasks. Consequently, Redis

has gained widespread adoption and is used by a growing number

of companies in production settings [9].

Redis supports high availability and persistence. High availability

is achieved by replicating the data from the master nodes to the

slave nodes and syncing them. When a master process fails, its

corresponding slave process is ready to take over in a process

called failover. Persistence can be configured using either of

the following two options: (1) a point-in-time snapshot file

called RDB (Redis Database), or (2) a change log file called

AOF (Append Only File). Note that these three mechanisms (AOF

rewrite, RDB snapshot, and replication) rely on a fork to acquire

a point-in-time snapshot of the process memory and serialize it

(while the main process keeps serving client commands).
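
The fork-based snapshot described above can be illustrated with a minimal sketch. It is not Redis code: the function names are hypothetical, JSON stands in for the RDB format, and it assumes a POSIX system where `os.fork` is available. The child process serializes the state as it was at fork time, while the parent keeps modifying its own copy.

```python
import json
import os
import tempfile


def snapshot_via_fork(store, path):
    """Fork a child that serializes `store` as it looked at fork time.

    Returns the child's PID so the caller can wait for it. POSIX only.
    """
    pid = os.fork()
    if pid == 0:
        # Child: works on a copy-on-write view of the parent's memory,
        # frozen at the moment of the fork. Later parent writes are invisible.
        status = 1
        try:
            with open(path, "w") as f:
                json.dump(store, f)
            status = 0
        finally:
            os._exit(status)  # leave without running the parent's cleanup
    return pid


def demo():
    store = {"counter": 1, "user:1": "alice"}
    fd, path = tempfile.mkstemp(suffix=".snapshot.json")
    os.close(fd)
    try:
        pid = snapshot_via_fork(store, path)
        # Parent: keeps serving "commands" while the child writes the file.
        store["counter"] = 2
        store["user:2"] = "bob"
        os.waitpid(pid, 0)
        with open(path) as f:
            snapshot = json.load(f)
    finally:
        os.remove(path)
    return snapshot, store
```

Calling `demo()` returns the snapshot taken at fork time together with the parent's live state, which already contains the later writes.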

2.2 Redis on Flash

In-memory databases like Redis store their data in DRAM. This

makes them fast yet expensive, because (1) DRAM capacity per

node is limited, and (2) DRAM price per GB is relatively high. Re-

dis on Flash (RoF) [6, 7] is a commercial extension to Redis that

uses SSDs as a RAM extension to dramatically increase the effective

dataset capacity on a single server. RoF is fully compatible

with open-source Redis and implements the entire Redis command

set and features. RoF uses the same mechanisms as Redis to

provide high availability and persistence rather than relying on the

non-volatile property of flash.

RoF keeps hot values in RAM and evicts cold values to the flash

drives. It utilizes RocksDB as its storage engine: all drives are

managed by RocksDB, and accesses to values on the drives are

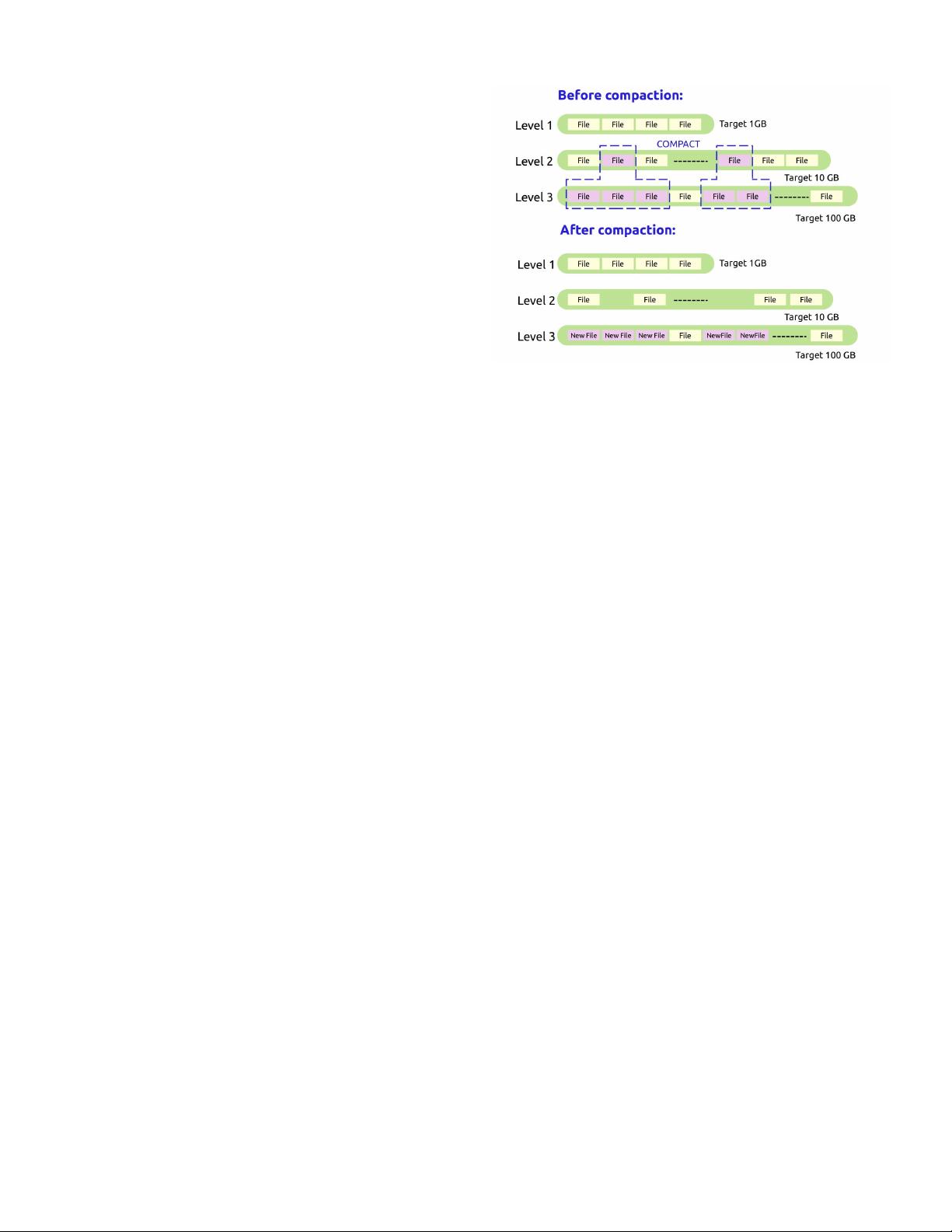

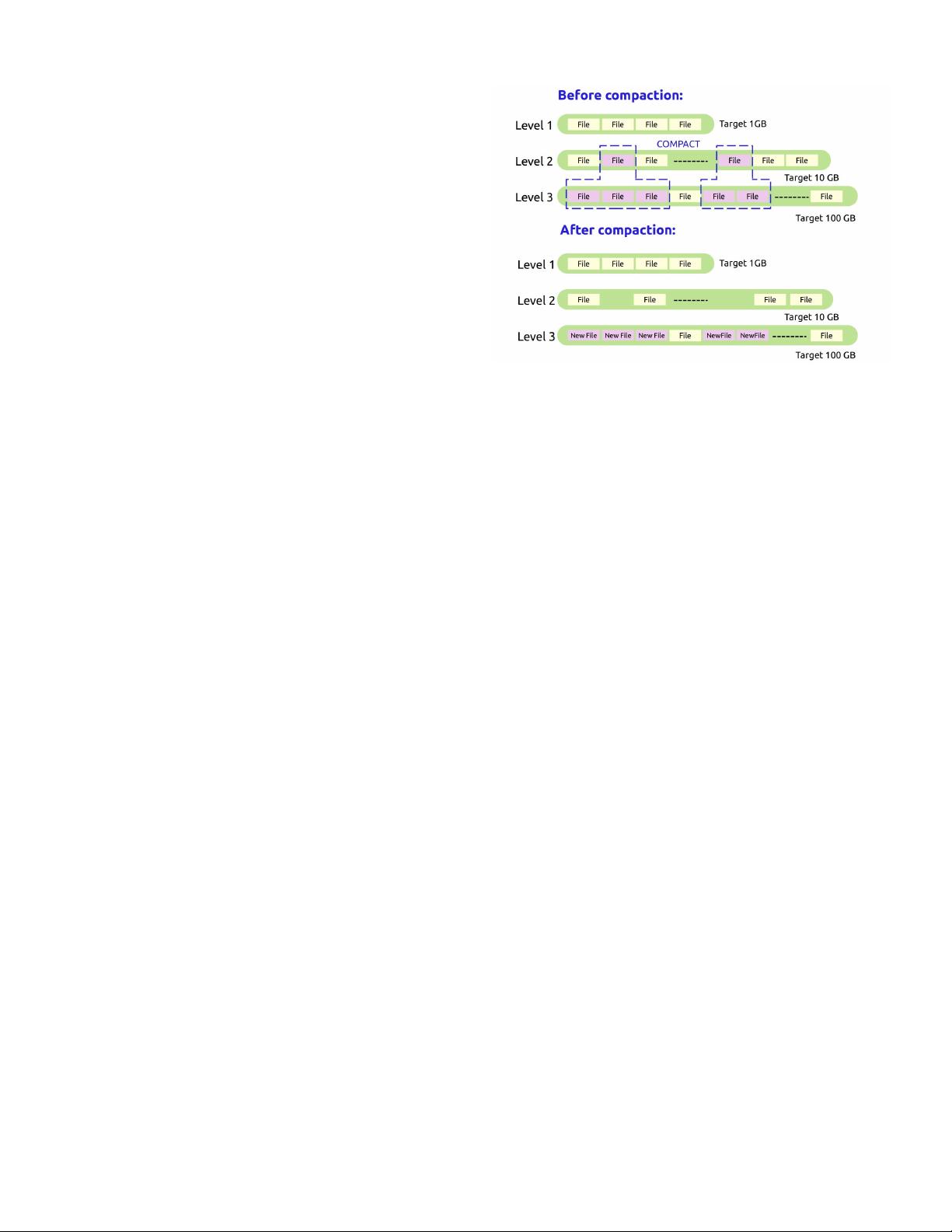

Figure 1: Illustration of the compaction process

done via RocksDB interface. When a client requests a cold value,

the request is temporally blocked while a designated RoF I/O thread

submits the I/O request to RocksDB. During this time, the main

Redis thread serves incoming requests from other clients.
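
The hot/cold split can be pictured with the following toy model. It is a sketch under stated assumptions, not RoF's implementation: the class name `TieredStore` is hypothetical, a plain dict stands in for RocksDB, least-recently-used eviction is our assumption (the text above does not say how cold values are chosen), and the dedicated I/O thread is omitted, so cold reads happen inline.

```python
from collections import OrderedDict


class TieredStore:
    """Toy hot/cold store: a bounded RAM tier over a dict standing in for flash."""

    def __init__(self, ram_capacity):
        self.ram_capacity = ram_capacity
        self.ram = OrderedDict()  # hot values, least recently used first
        self.flash = {}           # cold values (RocksDB in the real system)
        self.flash_reads = 0      # number of reads served from the cold tier

    def put(self, key, value):
        self.flash.pop(key, None)  # any cold copy is now stale
        self.ram[key] = value
        self.ram.move_to_end(key)
        self._evict()

    def get(self, key):
        if key in self.ram:
            self.ram.move_to_end(key)  # mark as recently used
            return self.ram[key]
        # Cold path: in RoF this read would be handed to an I/O thread.
        value = self.flash.pop(key)    # raises KeyError for unknown keys
        self.flash_reads += 1
        self.ram[key] = value          # promote the value back to RAM
        self._evict()
        return value

    def _evict(self):
        # Push the coldest values to flash until RAM is within its budget.
        while len(self.ram) > self.ram_capacity:
            cold_key, cold_value = self.ram.popitem(last=False)
            self.flash[cold_key] = cold_value
```

With a RAM capacity of two, writing three keys pushes the oldest one to the flash tier, and reading it back promotes it while evicting the next-coldest key.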

2.3 RocksDB

RocksDB [10] is an open source key-value store implemented in

C++. It supports operations such as get, put, delete, and scan

of key-values. RocksDB can ingest massive amounts of data. It

uses SST (sorted static tables) files to store the data on NVMes,

SATA SSDs, or spinning disks while aiming to minimize latency.

RocksDB uses Bloom filters to check whether a key may be present in

an SST file. It avoids the cost of random writes by accumulating data

in memtables in RAM and then flushing them to disk in bulk.

RocksDB files are immutable: once created, they are never overwritten.

Records are neither updated nor deleted in place; instead, new files

are created. This generates redundant data on disk and requires

regular database compaction. Compaction removes duplicate

keys and processes key deletions to free up space, as shown in

Figure 1.
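
To make the effect of compaction concrete, the sketch below merges a set of immutable sorted files into one. It is a simplified illustration rather than RocksDB's algorithm: the names `compact` and `TOMBSTONE` are hypothetical, files are modeled as in-memory lists, and deletion markers are dropped unconditionally, which is only safe when the merge covers every older version of the key.

```python
TOMBSTONE = object()  # marker written in place of a deleted value


def compact(files):
    """Merge immutable sorted files into one, given newest file first.

    Each file is a list of (key, value) pairs sorted by key. The newest
    version of each key wins; keys whose newest version is a tombstone
    are dropped from the output.
    """
    latest = {}
    for sst in files:  # iterate from newest to oldest
        for key, value in sst:
            latest.setdefault(key, value)  # keep the first (newest) version
    merged = sorted(latest.items(), key=lambda kv: kv[0])
    return [(k, v) for k, v in merged if v is not TOMBSTONE]
```

For example, merging a newer file that overwrites key `a` and deletes key `b` with an older file holding `a`, `b`, and `c` yields a single file with the new `a` and the untouched `c`.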

2.3.1 RocksDB Architecture

RocksDB organizes data in different sorted runs called levels. Each

level has a target size. The target size increases from one level to the

next by the same size multiplier (10x by default). Therefore, if the target size of

level 1 is 1GB, the target sizes of levels 2, 3, and 4 will be 10GB, 100GB,

and 1000GB, respectively. A key can appear on multiple levels, but the most

up-to-date value is located higher in the level hierarchy, as older versions

are pushed down during compaction.
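
The level sizing rule is a geometric progression, which the short sketch below reproduces. The function name and its parameters are ours, chosen for illustration only.

```python
def level_target_sizes(base_gb, multiplier=10, levels=4):
    """Target size in GB for levels 1..levels, where level 1 is `base_gb`."""
    return [base_gb * multiplier ** (n - 1) for n in range(1, levels + 1)]
```

With a 1GB level 1 and the default multiplier, `level_target_sizes(1)` gives the 1GB, 10GB, 100GB, and 1000GB targets from the example above.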

RocksDB initially stores new writes in RAM using memtables.

When a memtable fills up, it is converted to an immutable

memtable and inserted into the flush pipeline, at which point a new

memtable is allocated for subsequent writes. Level 0 is an exact

copy of the memtables. When level 0 fills up, the data is compacted,

i.e., pushed down to the deeper levels. The compaction process applies

to all levels and merges files together from level N to level

N+1 as shown in Figure 1.
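
The write path described above (active memtable, immutable memtables, level 0) can be sketched as follows. This is a toy model with hypothetical names: the memtable limit counts entries rather than bytes, flushing is triggered manually instead of by background threads, and compaction out of level 0 is left out.

```python
class WriteBuffer:
    """Toy memtable pipeline: active memtable -> immutable queue -> level 0."""

    def __init__(self, memtable_limit):
        self.memtable_limit = memtable_limit  # max entries per memtable
        self.active = {}      # memtable currently receiving writes
        self.immutable = []   # sealed memtables waiting to be flushed
        self.level0 = []      # flushed files, each a sorted list of pairs

    def put(self, key, value):
        self.active[key] = value
        if len(self.active) >= self.memtable_limit:
            # Seal the full memtable and allocate a fresh one for new writes.
            self.immutable.append(self.active)
            self.active = {}

    def flush(self):
        """Write every sealed memtable to level 0 as one sorted file each."""
        while self.immutable:
            memtable = self.immutable.pop(0)  # oldest sealed memtable first
            self.level0.append(sorted(memtable.items(), key=lambda kv: kv[0]))
```

Each flushed file holds exactly the contents of one memtable, which is why level 0 files can overlap in key range while deeper levels, produced by merging, do not need to.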

2.3.2 Amplification factors

We measured the impact of the optimizations by monitoring the

throughput and duration of the experiments under various work-
