
Design and Performance Evaluation of the Efficient Attention Pyramid Transformer (EAPT) for Vision Tasks


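Summary: This paper proposes a new vision transformer architecture, the Efficient Attention Pyramid Transformer (EAPT). Existing patch-based methods cannot cover multi-scale vision elements and are limited to local communication. To address this, EAPT introduces Deformable Attention, the Encode-Decode Communication module (En-DeC module), and the Multi-dimensional Continuous Mixture Descriptor (MCMD). Specifically, EAPT uses Deformable Attention to better capture attention for vision elements of different shapes, uses En-DeC to exchange global information among all patches, and replaces low-dimensional position encoding with MCMD, a position encoding designed for high-dimensional data that significantly improves position guidance for sequences of various lengths.

Intended audience: researchers and practitioners in computer vision and image processing.

Use cases and goals: the work targets image classification, object detection, and semantic segmentation, where stronger visual feature extraction and better model efficiency are the key goals. Functional tests of EAPT and its components demonstrate its effectiveness and superiority across these vision tasks.

Other notes: the experimental results show that EAPT not only improves computational efficiency but also better captures relationships between vision elements of different sizes. Future work will explore neural architecture search, knowledge distillation, and other advanced techniques to further reduce complexity and improve performance.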


50 IEEE TRANSACTIONS ON MULTIMEDIA, VOL. 25, 2023

EAPT: Efficient Attention Pyramid Transformer for Image Processing

Xiao Lin, Shuzhou Sun, Wei Huang, Bin Sheng, Member, IEEE, Ping Li, Member, IEEE, and David Dagan Feng, Life Fellow, IEEE

Abstract: Recent transformer-based models, especially patch-based methods, have shown great potential in vision tasks. However, splitting the input features into fixed-size patches ignores the fact that vision elements vary in size and shape, and may therefore destroy semantic information. In addition, the vanilla patch-based transformer cannot guarantee information communication between patches, which prevents the extraction of attention information with a global view. To circumvent these problems, we propose an Efficient Attention Pyramid Transformer (EAPT). Specifically, we first propose Deformable Attention, which learns an offset for each position in patches. Thus, even with fixed-size patches, our method can still obtain non-fixed attention information that covers various vision elements. Then, we design the Encode-Decode Communication module (En-DeC module), which obtains communication information among all patches to get more complete global attention information. Finally, we propose a position encoding specifically for vision transformers, which can be used for patches of any dimension and any length. Extensive experiments on the vision tasks of image classification, object detection, and semantic segmentation demonstrate the effectiveness of our proposed model. Furthermore, we conduct rigorous ablation studies to evaluate the key components of the proposed structure.

Index Terms: Transformer, attention mechanism, pyramid, classification, object detection, semantic segmentation.

Manuscript received 1 June 2021; revised 9 September 2021; accepted 7 October 2021. Date of publication 19 October 2021; date of current version 13 January 2023. This work was supported in part by the National Natural Science Foundation of China under Grants 61872241 and 62077037, in part by Shanghai Municipal Science and Technology Major Project under Grant 2021SHZDZX0102, in part by the Science and Technology Commission of Shanghai Municipality under Grants 18410750700 and 17411952600, in part by Shanghai Lin-Gang Area Smart Manufacturing Special Project under Grant ZN2018020202-3, in part by Project of Shanghai Municipal Health Commission under Grant 2018ZHYL0230, and in part by the Hong Kong Polytechnic University under Grants P0030419, P0030929, and P0035358. The associate editor coordinating the review of this manuscript and approving it for publication was Professor Liqiang Nie. (Xiao Lin and Shuzhou Sun contributed equally to this work.) (Corresponding author: Bin Sheng.)

Xiao Lin and Shuzhou Sun are with the Department of Computer Science, Shanghai Normal University, Shanghai 200234, China, and also with the Shanghai Engineering Research Center of Intelligent Education and Bigdata, Shanghai 200240, China (e-mail: lin6008@shnu.edu.cn; 1000479143@smail.shnu.edu.cn).

Wei Huang is with the Department of Computer Science and Engineering, University of Shanghai for Science and Technology, Shanghai 200093, China (e-mail: 191380039@usst.edu.cn).

Bin Sheng is with the Department of Computer Science and Engineering, Shanghai Jiao Tong University, Shanghai 200240, China (e-mail: shengbin@cs.sjtu.edu.cn).

Ping Li is with the Department of Computing, The Hong Kong Polytechnic University, Kowloon 999077, Hong Kong (e-mail: p.li@polyu.edu.hk).

David Dagan Feng is with the Biomedical and Multimedia Information Technology Research Group, School of Information Technologies, The University of Sydney, Sydney, NSW 2006, Australia (e-mail: dagan.feng@sydney.edu.au).

Color versions of one or more figures in this article are available at https://doi.org/10.1109/TMM.2021.3120873.

Digital Object Identifier 10.1109/TMM.2021.3120873

I. INTRODUCTION

TRANSFORMER-BASED models have become the de facto approach in Natural Language Processing (NLP) because of their advantages in processing sequences [1]–[5]. More recently, transformers have also achieved competitive performance in many vision tasks, such as image classification [6], [7], object detection [8], [9], segmentation [10], image generation [11], and person re-identification [12]. Compared with language material, visual data has a higher resolution, so global pixel-level attention calculations incur an unbearable cost. To address this problem, DEtection TRansformer (DETR) [13], a milestone of the vision transformer, uses a Convolutional Neural Network (CNN) to reduce the resolution of the input. Furthermore, ViT [6] abandons the CNN feature extractor and directly splits the input into fixed-size patches to build a pure vision transformer architecture, and many recent works follow this way of splitting high-resolution input. Despite their success, these vanilla splitting-based methods still suffer from several thorny problems. The first is that splitting into fixed-size patches may destroy semantic information. Unlike language elements, vision elements differ in size and shape, so it is difficult for fixed-size patches to cover various vision elements. To address this issue, the Deformable Patch-based Transformer (DPT) [14] splits the patches in a data-specific way to obtain non-fixed-size patches, i.e., it adaptively learns the position and scale of each patch. However, because a patch-based vision transformer usually calculates attention information within each patch, DPT may introduce a lot of extra calculation, especially when facing data with larger vision elements. In this paper, we propose Deformable Attention, which combats the problem of fixed-size patches destroying semantic information without introducing additional attention calculations. Specifically, Deformable Attention provides a learnable offset for each position in the patch, so the calculation of attention information is no longer restricted by patches of fixed size and shape. Therefore, even with fixed-size patches, Deformable Attention can still capture the semantic information of various vision elements. In addition, Deformable Attention does not change the size of the original patches, so it does not introduce additional attention calculation costs.
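The cost argument above can be made concrete with a little arithmetic. The sketch below counts query-key pairs for global pixel-level attention and for attention confined to non-overlapping local windows. The function names and the 224×224 input with 7×7 windows are illustrative assumptions, not values taken from the paper.

```python
def attention_pairs_global(height, width):
    """Query-key pairs when every pixel attends to every other pixel."""
    tokens = height * width
    return tokens * tokens


def attention_pairs_windowed(height, width, window):
    """Query-key pairs when attention stays inside window x window blocks."""
    if height % window or width % window:
        raise ValueError("window must divide both height and width")
    num_windows = (height // window) * (width // window)
    tokens_per_window = window * window
    return num_windows * tokens_per_window ** 2


# Hypothetical sizes chosen only for illustration.
global_pairs = attention_pairs_global(224, 224)
local_pairs = attention_pairs_windowed(224, 224, 7)
# The saving factor equals the number of windows (32 * 32 here).
print(global_pairs, local_pairs, global_pairs // local_pairs)
```

The quadratic growth in the number of tokens is what makes the global variant impractical at image resolution, and it is also why restricting attention to local regions removes communication between them.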

1520-9210 © 2021 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission.

See https://www.ieee.org/publications/rights/index.html for more information.

Authorized licensed use limited to: Lanzhou University of Technology. Downloaded on October 04,2024 at 14:18:28 UTC from IEEE Xplore. Restrictions apply.

LIN et al.: EAPT: EFFICIENT ATTENTION PYRAMID TRANSFORMER FOR IMAGE PROCESSING 51

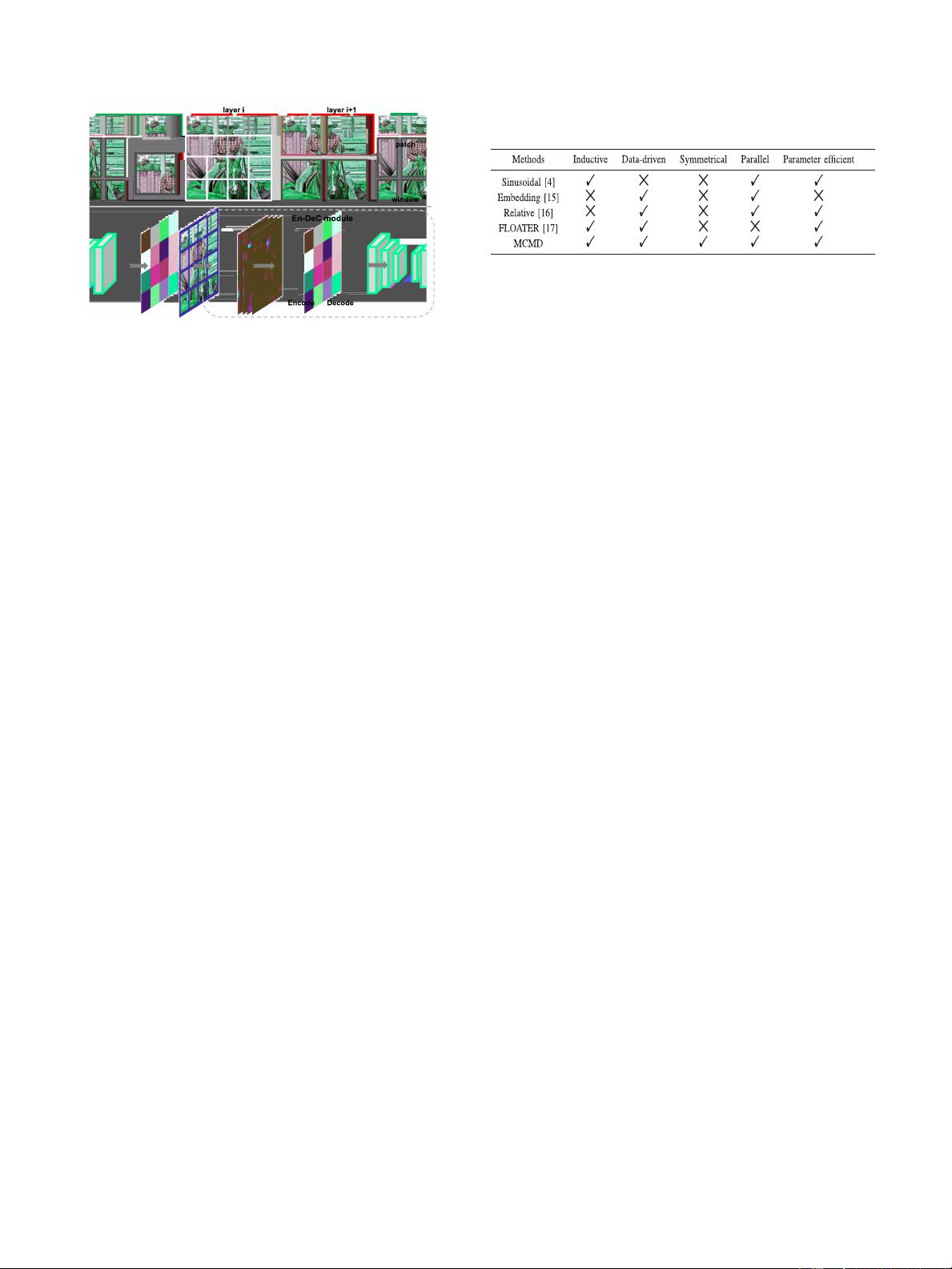

Fig. 1. The shifted window approach in Swin Transformer (this illustration follows the original paper) and our proposed En-DeC module. Swin Transformer (top) shifts the windows of the i-th layer to obtain the windows of the (i+1)-th layer. Because different windows cover different patches, communication among different patches can be guaranteed to a certain extent. To consider all the patches at once, our proposed En-DeC module (bottom) uses an encode-decode architecture to obtain communication information among all patches and thus more complete global attention information.

The other thorny problem is that although the vanilla splitting-based transformer avoids expensive global pixel-level attention calculations, it also limits the communication of attention information among different patches. This compromise, which trades global attention for local pixel-level attention to save computation, affects the performance of the model. To address this problem, Swin Transformer [8] calculates the attention information in non-overlapping local windows (each window covers multiple patches) instead of in a single patch. Meanwhile, Swin Transformer uses the Shifted Window mechanism to make the windows cover different patches in different attention calculation stages. Although Swin Transformer promotes communication among different patches, this is still local communication. In this work, we propose a global patch communication module based on an encode-decode architecture, dubbed the En-DeC module (Encode-Decode Communication module). The En-DeC module first uses an encode model to compress the information of all patches. Then, it recovers the compressed information with a decode model and adds the recovered results to the attention calculation results. Both our proposed En-DeC module and the Shifted Window mechanism aim to communicate information among different patches, but the former is global communication and the latter is local communication. We show the difference in Fig. 1.
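The compress, recover, and add steps described above can be pictured with a deliberately simplified sketch. Here the encoder is a plain mean over all patch vectors and the decoder is a copy back to every patch. Both are stand-in assumptions for the learned encode and decode models, and all function names are hypothetical.

```python
def encode(patch_features):
    """Compress all patch vectors into one global code (assumed: their mean)."""
    count = len(patch_features)
    dim = len(patch_features[0])
    return [sum(p[d] for p in patch_features) / count for d in range(dim)]


def decode(code, num_patches):
    """Expand the global code back to one vector per patch (assumed: a copy)."""
    return [list(code) for _ in range(num_patches)]


def en_dec_communicate(patch_features, local_attention_out):
    """Add the recovered global information to the per-patch attention results."""
    recovered = decode(encode(patch_features), len(patch_features))
    return [
        [a + r for a, r in zip(att, rec)]
        for att, rec in zip(local_attention_out, recovered)
    ]


# Two patches with two channels each; values are arbitrary.
patches = [[1.0, 2.0], [3.0, 4.0]]
local_out = [[0.0, 0.0], [10.0, 10.0]]
print(en_dec_communicate(patches, local_out))
```

Even in this toy form, every output vector depends on every patch, which is the property that distinguishes global communication from window-limited communication.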

In addition to the above two thorny problems, current position encoding approaches in vision transformers either directly adopt low-dimensional encoding techniques from NLP, which cannot capture multi-dimensional location information, or use learning-based encoding methods, which may increase the learning cost [4], [15]–[17]. These facts motivate our novel position encoding design tailored for vision transformers. To achieve this goal, we propose the Multi-dimensional Continuous Mixture Descriptor (MCMD) to describe position information. Specifically, we use a Gaussian to describe the position information of each dimension and then mix them. Benefiting from the symmetrical and continuous properties of the Gaussian, our descriptors can be of any length, and the changes of the descriptors are smooth as well as symmetrical. Additionally, our position encoding method can establish the correlation between the descriptors and the parameters to be learned by adjusting only a few hyperparameters of the Gaussian. Based on the above description, our proposed position encoding method has the following properties: 1) Inductive: it can handle sequences of any dimension and any length; 2) Data-driven: the encoding is affected by the data; 3) Symmetrical: the encoding of symmetrical position sequences is also symmetrical; 4) Parallel: the encoding does not affect the parallelization of the transformer; and 5) Parameter efficient: the encoding does not introduce too many additional parameters. We summarize the property comparison of typical position encoding methods in Table I.

TABLE I: PROPERTIES COMPARISON OF DIFFERENT POSITION ENCODING METHODS

Our work makes the following three main contributions:

- Deformable Attention: We propose Deformable Attention, which uses a learnable offset to remodel the rules for obtaining attention information. Compared with the vanilla fixed-size-patch-based transformer (e.g., ViT), Deformable Attention can better cover various vision elements. Our work also introduces less computational cost than similar deformable-based methods (e.g., DPT).

- Encode-Decode Communication module (En-DeC module): Although the vanilla splitting-based transformer can significantly reduce the computational cost, calculating attention information only within a single patch also limits the communication among different patches. Some designs (e.g., Shifted Window in Swin Transformer) can alleviate this problem, but they are still local communication. Different from the previous methods, our proposed En-DeC module uses an encode-decode architecture to implement communication among all patches.

- Multi-dimensional Continuous Mixture Descriptor (MCMD): We propose MCMD, a position encoding tailored for vision transformers, which encodes different dimensions independently and then mixes them. MCMD satisfies the properties that an excellent position encoding should have, i.e., it is inductive, data-driven, symmetrical, parallel, and parameter efficient.
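To make the idea of encoding each dimension with a Gaussian and then mixing the results more tangible, here is a minimal sketch. It is only one possible reading and not the authors' formulation. The evenly spaced centres, the shared `sigma`, the averaging used as the mixing step, and every function name are assumptions introduced for illustration.

```python
import math


def gaussian_descriptor(position, extent, length, sigma=1.0):
    """Describe one coordinate by its Gaussian response to `length`
    evenly spaced centres on [0, extent - 1] (assumed layout)."""
    if length == 1:
        centres = [(extent - 1) / 2]
    else:
        step = (extent - 1) / (length - 1)
        centres = [k * step for k in range(length)]
    return [math.exp(-((position - c) ** 2) / (2 * sigma ** 2)) for c in centres]


def mixed_descriptor(row, col, height, width, length, sigma=1.0):
    """Encode each dimension independently, then mix (assumed: average)."""
    d_row = gaussian_descriptor(row, height, length, sigma)
    d_col = gaussian_descriptor(col, width, length, sigma)
    return [(a + b) / 2 for a, b in zip(d_row, d_col)]


# Mirror-image positions on an 8-wide axis give mirror-image descriptors.
left = gaussian_descriptor(0, 8, 4)
right = gaussian_descriptor(7, 8, 4)
print(left)
print(list(reversed(right)))
```

In this sketch the descriptor length is a free argument and `sigma` is the only hyperparameter, which loosely mirrors the inductive, symmetrical, and parameter-efficient properties listed above.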

The rest of this paper is organized as follows. Section II discusses related work on transformers, especially vision transformers, and the position encoding used in them. The design details of the Efficient Attention Pyramid Transformer (EAPT) are given in Section III. Section IV covers the experimental results. Finally, Section V concludes this paper.


II. RELATED WORK

A. Vision Transformer

Owing to the strong representation ability of the transformer, transformer-based models have recently been applied to multiple vision tasks. From high-level vision tasks such as object detection [8], [9] and segmentation [10] to low-level tasks such as image enhancement [18] and image generation [11], vision transformer models have achieved competitive performance. The groundbreaking work DETR [13] introduces the transformer to vision tasks for the first time. It first extracts features with a convolutional neural network and then feeds those features to the transformer blocks to obtain the attention information. However, DETR [13] requires an immense amount of computation and performs poorly on small objects due to its coarse-grained attention information extraction. Then, ViT [6] splits the input into fixed-size patches to avoid the unbearable cost of global pixel-level attention calculation, and much current work follows this patch-based processing mechanism. Although widely used, patch-based methods still have several thorny problems. The first is that splitting into fixed-size patches may destroy the semantic information of vision elements. The Deformable Patch-based Transformer (DPT) [14] splits the patches in a data-specific way to obtain non-fixed-size patches to combat this problem. However, non-fixed-size patches may introduce a lot of additional attention calculation cost. The other is that patch-based transformers limit the communication of attention information among different patches. Although the non-overlapping local windows in Swin Transformer can promote communication among different patches, this is still local communication. To address these problems, we propose the Efficient Attention Pyramid Transformer (EAPT), which consists of Deformable Attention and the Encode-Decode Communication module (En-DeC module). The former learns an offset for each position in the patch, so that fixed-size patches can better cover various vision elements. The latter uses an encode-decode architecture to enable communication among all patches. Overall, EAPT is a patch-based transformer, but it alleviates the thorny problems of the vanilla fixed-size-patch-based transformer thanks to our proposed Deformable Attention and En-DeC module.
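For readers unfamiliar with the fixed-size splitting that ViT-style models rely on, the following sketch splits a small 2-D grid into non-overlapping square patches. It operates on nested lists to stay dependency-free. The function name and the 4×4 example are illustrative assumptions.

```python
def split_into_patches(image, patch):
    """Split a 2-D grid (list of rows) into non-overlapping
    patch x patch blocks, returned in row-major order."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("patch size must divide both height and width")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            block = [row[left:left + patch] for row in image[top:top + patch]]
            patches.append(block)
    return patches


# A 4x4 grid holding the values 0..15, split into four 2x2 patches.
grid = [[r * 4 + c for c in range(4)] for r in range(4)]
for block in split_into_patches(grid, 2):
    print(block)
```

Every patch boundary is fixed in advance and is independent of image content, so an object that straddles a boundary is cut across several patches. That is the semantic-destruction problem discussed above.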

B. Position Encoding in Transformer

Unlike the Recurrent Neural Network (RNN) [19]–[21], the transformer cannot distinguish the position information of tokens and thus requires position encoding of its inputs [22]. The vanilla transformer [4] adopts a sinusoidal position encoding, whose continuous and unbounded nature allows it to encode token sequences of any length. However, this method is not data-driven, i.e., it cannot adaptively change the position encoding according to the token itself, so it cannot guarantee the same position guidance for tokens with different weights. Given the central role of position encoding, many attempts in the field of language processing improve the sinusoidal encoding of the original transformer paper from multiple perspectives. Among these, absolute encoding [4], relative encoding [16], recursive encoding [17], and learning-based encoding [15] are typical approaches. Relative encoding [16] hypothesizes that precise relative position information is not useful beyond a certain distance and thus uses clipping to encode sequences of any length. Learning-based encoding [15] learns the position encoding through training, while recursive encoding [17] adopts a Neural ODE. Although these methods have been effective for transformer models in NLP, we argue that it is inappropriate to use them directly in vision transformers, because the data in vision tasks are high-dimensional and these methods cannot capture multi-dimensional position information. We analyze the limits of current position encoding methods in detail in Section III. To address this issue, we propose a novel position encoding method specifically for high-dimensional data. We encode different dimensions independently and then mix them to ensure that the encoding gives the same guidance to the position information of different dimensions.
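As a reference point for the discussion above, the sinusoidal encoding of the vanilla transformer can be sketched as follows. The formula is the standard one: sine on even indices, cosine on odd indices, with a base of 10000. The function name is an assumption made for this example.

```python
import math


def sinusoidal_encoding(position, d_model):
    """Standard sinusoidal encoding of a single 1-D position:
    index 2i holds sin(pos / 10000^(2i / d_model)),
    index 2i + 1 holds the matching cosine."""
    if d_model % 2:
        raise ValueError("d_model must be even")
    encoding = []
    for i in range(d_model // 2):
        angle = position / (10000 ** (2 * i / d_model))
        encoding.append(math.sin(angle))
        encoding.append(math.cos(angle))
    return encoding


# Position 0 always encodes to alternating 0.0 and 1.0.
print(sinusoidal_encoding(0, 4))
print(sinusoidal_encoding(5, 4))
```

The function accepts a single scalar position and no data-dependent input, which illustrates the two limitations raised above: it is defined for a one-dimensional index, and it is not data-driven.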

III. PROPOSED APPROACH

This section presents the implementation methods and details of our proposed approach. First, we introduce the Deformable Attention mechanism, which obtains richer attention information without increasing the computation cost. Then, we propose the Encode-Decode Communication module (En-DeC module), which uses an encode-decode architecture to obtain communication information among all patches. Finally, we design a novel position encoding specifically for vision transformers, named the Multi-dimensional Continuous Mixture Descriptor (MCMD), which can efficiently model the multi-dimensional position information of any number of patches. These three designs are shown in Fig. 2.

A. Deformable Attention

Following the recent Swin Transformer [8], we also compute self-attention in non-overlapping windows. Suppose there is a multi-dimensional feature $V \in \mathbb{R}^{h \times w \times c}$, which is divided into $h_p \times w_p$ patches and denoted as $V_p \in \mathbb{R}^{h_p \times w_p \times \frac{h \times w}{h_p \times w_p} \times c}$. We then further divide it into $h_w \times w_w$ windows, and the result can be denoted as $V_w \in \mathbb{R}^{h_w \times w_w \times \frac{h_p \times w_p}{h_w \times w_w} \times c}$. Without loss of generality, we only discuss the attention calculation of a single window here. For a single window $w_m$ among all windows $w = \{w_1, w_2, \ldots, w_n\}$, assume that its positions on the original feature are $(l^x_m, l^y_m)$. To give the current window a larger field of attention, we add a learnable offset $(o^x, o^y)$ to each position of this window, and the overall offset of $(l^x_m, l^y_m)$ is $(o^x_m, o^y_m)$. The offset $(o^x_m, o^y_m)$ is learned from Wf; specifically, $(o^x_m, o^y_m) = \mathrm{Dense}(\mathrm{Conv}(\mathit{Wf}))$, where $\mathrm{Dense}(\cdot)$ and $\mathrm{Conv}(\cdot)$ are a fully connected layer and a convolution layer, respectively. To reduce the learning difficulty of the offset, we constrain its value, that is, $|o^x_m| \le h_w$ and $|o^y_m| \le w_w$. We denote the position of window $(l^x_m, l^y_m)$ with the learned offset added as $(L^x_m, L^y_m)$, which is calculated as:

$$(L^x_m, L^y_m) = (l^x_m, l^y_m) + (o^x_m, o^y_m) \tag{1}$$
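Equation (1) and the value constraint on the offset can be illustrated with a short sketch. The text states only that the offset is bounded by the window size. Enforcing that bound with a hard clamp is an assumption made here, as are the function names. In the paper the raw offset comes from learned layers, whereas this example simply passes it in as a number.

```python
def clamp(value, limit):
    """Restrict value to the interval [-limit, limit]."""
    return max(-limit, min(limit, value))


def shifted_position(l_x, l_y, o_x, o_y, h_w, w_w):
    """Apply Eq. (1): add the constrained offset to the original position.
    The bounds |o_x| <= h_w and |o_y| <= w_w follow the text; using a hard
    clamp to enforce them is an assumption of this sketch."""
    o_x = clamp(o_x, h_w)
    o_y = clamp(o_y, w_w)
    return (l_x + o_x, l_y + o_y)


# A raw offset of (3.5, -9.0) with a 4 x 4 bound: the y offset is clipped to -4.
print(shifted_position(10, 20, 3.5, -9.0, 4, 4))
```

Because only the sampling positions move, the number of positions per window is unchanged. This is consistent with the claim that Deformable Attention adds no extra attention calculation.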
