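Summary: This paper proposes InvSR, a new image super-resolution (SR) method based on diffusion inversion. It introduces a noise prediction network that estimates the initial noise map, which is then used to construct an intermediate state of a pre-trained diffusion model as the starting point for sampling. Compared with existing methods, InvSR supports a flexible sampling mechanism with an arbitrary number of steps, improving efficiency and adaptability. Experiments further show that, even with a single sampling step, InvSR outperforms or matches existing one-step diffusion methods. Intended audience: researchers and developers interested in image processing, deep learning, and diffusion models. Use cases and goals: image SR tasks that call for efficiency and flexibility, especially when handling different types of image degradation; the method aims to generate high-resolution images via diffusion inversion, recovering detail and improving image quality. Additional notes: the paper also reports extensive experiments on synthetic and real-world datasets that validate InvSR's performance across a range of metrics. The code and models are open source for reproduction and use.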

Arbitrary-steps Image Super-resolution via Diffusion Inversion

Zongsheng Yue, Kang Liao, Chen Change Loy

S-Lab, Nanyang Technological University

{zongsheng.yue, kang.liao, ccloy}@ntu.edu.sg

[Figure 1 panels. Each example shows a zoomed LR input followed by results labeled “Method name-Steps”. Runtimes reported for the first example: StableSR-50 (3459 ms), DiffBIR-50 (7937 ms), ResShift-4 (319 ms), SinSR-1 (138 ms), OSEDiff-1 (176 ms), Ours-1 (117 ms), Ours-2 (149 ms), Ours-3 (176 ms), Ours-4 (207 ms), Ours-5 (244 ms).]

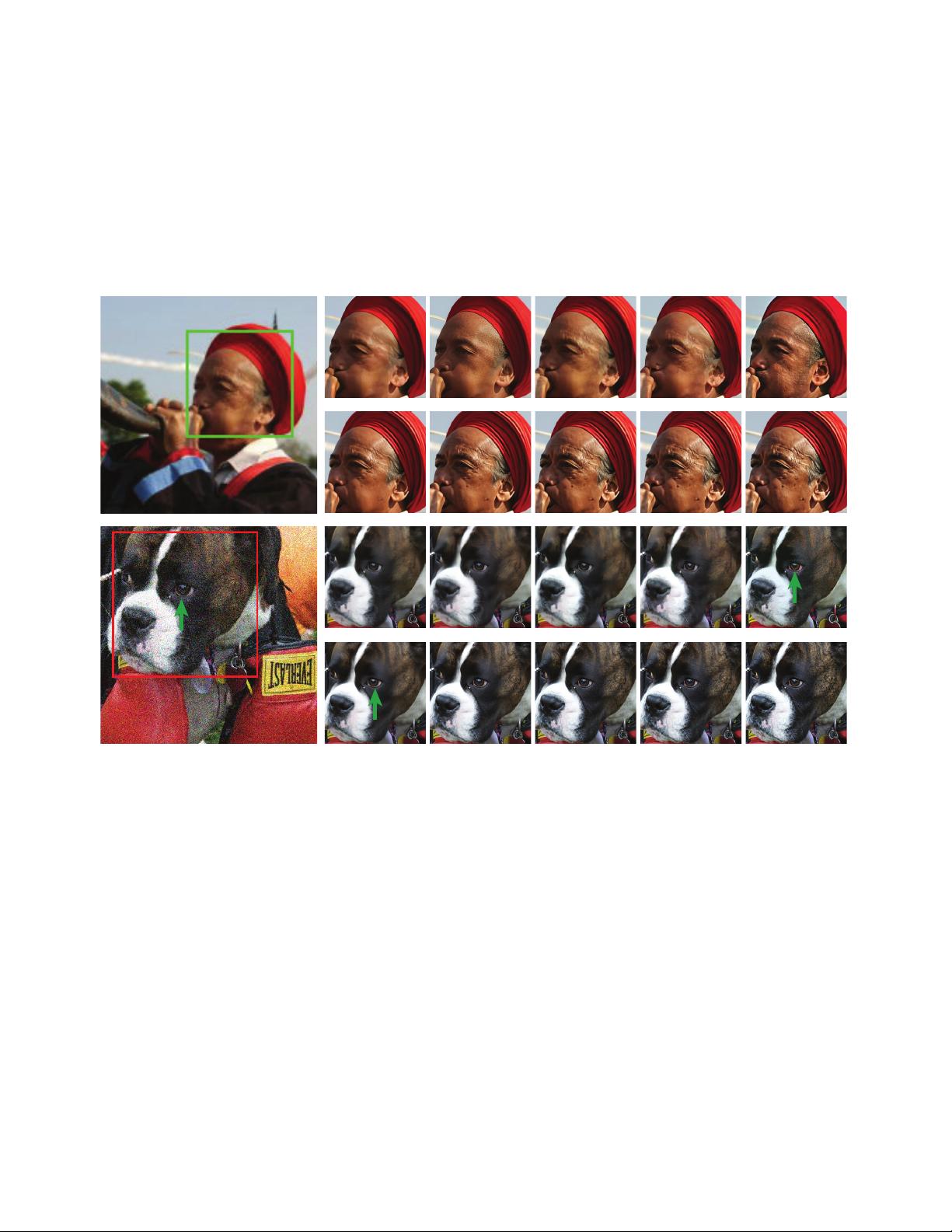

Figure 1. Qualitative comparisons of our proposed method to recent state-of-the-art diffusion-based approaches on two real-world examples, where the number of sampling steps is annotated in the format “Method name-Steps”. The runtime (in milliseconds), highlighted in red in the sub-captions of the first example, is measured on the ×4 (128 → 512) SR task on an A100 GPU. Our method offers an efficient and flexible sampling mechanism, allowing users to freely adjust the number of sampling steps based on the degradation type or their specific requirements. In the first example, mainly degraded by blurriness, multi-step sampling is preferable to single-step sampling as it progressively recovers finer details. Conversely, in the second example with severe noise, a single sampling step is sufficient to achieve satisfactory results, whereas additional steps may amplify the noise and introduce unwanted artifacts. (Zoom in for best view.)

Abstract

This study presents a new image super-resolution (SR) technique based on diffusion inversion, aiming at harnessing the rich image priors encapsulated in large pre-trained diffusion models to improve SR performance. We design a Partial noise Prediction strategy to construct an intermediate state of the diffusion model, which serves as the starting sampling point. Central to our approach is a deep noise predictor to estimate the optimal noise maps for the forward diffusion process. Once trained, this noise predictor can be used to initialize the sampling process partially along the diffusion trajectory, generating the desirable high-resolution result. Compared to existing approaches, our method offers a flexible and efficient sampling mechanism that supports an arbitrary number of sampling steps, ranging from one to five. Even with a single sampling step, our method demonstrates superior or comparable performance to recent state-of-the-art approaches. The code and model are publicly available at https://github.com/zsyOAOA/InvSR.

arXiv:2412.09013v1 [cs.CV] 12 Dec 2024

1. Introduction

Image super-resolution (SR) is a fundamental yet challenging problem in computer vision, aiming to restore a high-resolution (HR) image from a given low-resolution (LR) observation. The main challenge of SR arises from the complexity and often unknown nature of the degradation model in real-world scenarios, making SR an ill-posed problem. Recent breakthroughs in diffusion models [16, 45, 48], particularly large-scale text-to-image (T2I) models, have demonstrated remarkable success in generating high-quality images. Owing to the strong generative capability of these T2I models, recent studies have begun to use them as a reliable prior to alleviate the ill-posedness of SR. This work follows this research line, further exploring the potential of diffusion priors in SR.

The prevailing SR approaches leveraging diffusion priors usually attempt to modify the intermediate features of the diffusion network, either through optimization [7, 23, 56] or fine-tuning [30, 56, 60, 64], to better align them with the given LR observations. In this work, we propose a new technique based on diffusion inversion to harness diffusion priors. Unlike existing approaches, it attempts to find an optimal noise map as the input of the diffusion model, without any modification to the diffusion network itself, thereby maximizing the utility of the diffusion prior.

While considerable advances have been made in generative adversarial networks (GANs) [14] inversion for various applications [62, 75], including SR [4, 15, 41], extending these principles to diffusion models presents unique challenges, particularly for SR tasks that demand high fidelity preservation. In particular, the multi-step stochastic sampling process of diffusion models makes inversion non-trivial. The straightforward inversion approach to optimize the distinct noise maps at each diffusion step is expensive and complex. Additionally, the iterative inference mechanism would accumulate prediction errors and randomness at each step, which can significantly compromise fidelity. Therefore, recent diffusion inversion methods have mainly focused on tasks with lower fidelity requirements, such as image editing [13, 36].

In this work, we reformulate diffusion inversion for the more challenging task of SR. To enable diffusion inversion for SR, we introduce a deep neural network, called the noise predictor, to estimate the noise map from a given LR image. In addition, a Partial noise Prediction (PnP) strategy is devised to construct an intermediate state for the diffusion model, serving as the starting point for sampling. This is made possible by adding noise to the LR image according to the diffusion model’s forward process, where the noise predictor predicts the added noise instead of drawing it at random. This approach is driven by the following key motivations:

• Rationality. LR and HR images differ only in high-frequency details. With the addition of appropriate noise, the LR image becomes indistinguishable from its HR counterpart. Thus, the noisy LR can serve as a proxy for deriving the inversion trajectory during reverse diffusion.

• Complexity. Rather than predicting noise maps for all diffusion steps, the PnP strategy simplifies the inversion task by limiting predictions to the starting step, thereby reducing the overall complexity of the inversion process.

• Flexibility. The noise predictor can be trained to predict noise maps for multiple predefined starting steps. During inference, we can freely select a starting step from them and then use any existing sampling algorithm with an arbitrary number of steps, offering favorable flexibility in controlling the sampling process.

• Fidelity. The starting steps during training are carefully selected to have a high signal-to-noise ratio (SNR), ensuring robust fidelity preservation for SR. In practice, we enforce an SNR threshold of 1.44, corresponding to timestep 250 in Stable Diffusion [43].

• Efficiency. As the sampling process begins from a step no later than 250 (SNR of at least 1.44), the PnP strategy effectively reduces the number of sampling steps to no more than five when combined with off-the-shelf accelerated sampling algorithms [22, 46]. This addresses the common inefficiency issue in diffusion-based SR approaches.

Unlike most existing diffusion-based methods that rely on fixed sampling steps, our flexible sampling mechanism offers a versatile solution for handling varying degrees of degradation in SR. In SR, it is common to encounter different types and intensities of corruption. Intuitively, the number of sampling steps should adapt to the specific degradation conditions. For example, as shown in Fig. 1, multi-step sampling is preferable to single-step sampling in the first case, as it effectively reduces blurriness and restores finer details. In contrast, for the second example with severe noise, a single sampling step achieves satisfactory results, while additional steps may amplify the noise and introduce unwanted artifacts. Our method uniquely allows users to adjust sampling to suit different degradation types.

The main contributions of this work are twofold. First, we propose a novel SR approach based on diffusion inversion, which effectively leverages the diffusion prior by integrating an auxiliary noise predictor while keeping the entire diffusion backbone fixed. Second, our method introduces a flexible and efficient sampling mechanism that allows for arbitrary sampling steps, ranging from one to five. Remarkably, even when the steps are reduced to just one, our approach still achieves superior or comparable performance to recent dedicated one-step diffusion methods.

2. Related Work

Diffusion Prior for SR. Existing diffusion prior-based SR approaches can be broadly categorized into two classes. The first class of methods involves re-optimizing the intermediate results of the diffusion model to ensure consistency with the given LR images via pre-defined or estimated degradation models. Representative works include DDRM [23], CCDF [7], and DDNM [56], among others [6, 8, 11, 38, 47, 63, 67]. While effective, these methods are limited by their computational complexity, as they require solving an optimization problem at each diffusion step, leading to slow inference. Furthermore, they often rely on manually assumed degradation models and thus cannot handle the blind SR problem in real-world scenarios. The second class directly fine-tunes a pre-trained large T2I model for the SR task. StableSR [53] pioneers this paradigm by incorporating spatial feature transform layers [54] to guide the T2I model toward generating HR outputs. Subsequent works follow by proposing various fine-tuning strategies to exploit diffusion priors, including DiffBIR [30], SeeSR [60], PASD [64], S3Diff [71], and others [27, 40, 49, 59, 61, 66]. These methods have achieved impressive performance, validating the effectiveness of diffusion priors for SR.

Diffusion Inversion. Diffusion inversion focuses on determining the optimal noise map set that, when processed through the diffusion model, reconstructs a given image. DDIM [46] first addressed this by generalizing the diffusion model via a class of non-Markovian processes, thereby establishing a deterministic generation process. Subsequent approaches, such as those by Rinon et al. [12] and Mokady et al. [36], proposed optimizing the text embedding to better align with the desired textual guidance. Recent efforts have further refined the optimization strategies for both the textual and visual prompts [35, 39], as well as for intermediate noise maps [13, 19, 20, 33, 51, 72], leading to notable enhancements in inversion quality. Despite these advances, existing methods mainly focus on image editing and cannot meet the high-fidelity requirements of SR.

In this work, we tailor the diffusion inversion technique for SR. While Chihaoui et al. [5] have recently explored diffusion inversion for image restoration, their method relies on solving an optimization problem at each inversion step, significantly limiting its inference efficiency. In contrast, our approach introduces a noise prediction module that, once trained, enables efficient inversion without requiring iterative optimization during inference. This leads to substantial improvements in both the efficiency and practicality of diffusion inversion for SR tasks.

3. Methodology

In this section, we present the proposed diffusion inversion technique for SR. To maintain consistency with the notations used in diffusion models, we denote the LR image as $y_0$ and the corresponding HR image as $x_0$.

3.1. Motivation

The diffusion model [16, 45] was first introduced as a probabilistic generative model inspired by nonequilibrium thermodynamics. Subsequently, Song et al. [48] reformulated it within the framework of stochastic differential equations (SDEs). In this paper, we propose a general diffusion inversion technique that is applicable to both the probabilistic and SDE-based diffusion formulations. To facilitate understanding, we employ the probabilistic framework of the Denoising Diffusion Probabilistic Model (DDPM) [16] throughout our presentation.

The DDPM framework [16] is essentially a Markov chain of length $T$, where the forward process is characterized by a Gaussian transition kernel:

$$q(x_t \mid x_{t-1}) = \mathcal{N}\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t I\right), \tag{1}$$

where $\beta_t$ is a pre-defined hyper-parameter controlling the variance schedule. Notably, this transition kernel allows the derivation of the marginal distribution $q(x_t \mid x_0)$, i.e.,

$$q(x_t \mid x_0) = \mathcal{N}\left(x_t;\ \sqrt{\bar{\alpha}_t}\,x_0,\ (1-\bar{\alpha}_t) I\right), \tag{2}$$

where $\bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s$ and $\alpha_s = 1 - \beta_s$. The reverse process aims to generate a high-quality image from an initial random noise map $x_T \sim \mathcal{N}(0, I)$, which can be expressed as:

$$x_{t-1} = g_\theta(x_t, t) + \sigma_t z_{t-1}, \quad t = T, \cdots, 1, \tag{3}$$

where

$$g_\theta(x_t, t) = \frac{1}{\sqrt{\alpha_t}}\left(x_t - \frac{1-\alpha_t}{\sqrt{1-\bar{\alpha}_t}}\,\epsilon_\theta(x_t, t)\right), \tag{4}$$

and $\epsilon_\theta(x_t, t)$ is a pre-trained denoising network parameterized by $\theta$. The noise term $z_t$ satisfies $z_0 = 0$ and $z_t \sim \mathcal{N}(0, I)$ for $t = 1, \cdots, T-1$.
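The forward marginal of Eq. (2) and the reverse update of Eqs. (3) and (4) can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the linear β schedule, the function names (`make_schedule`, `q_sample`, `g_theta`, `reverse_step`), and passing the network output in as a plain array `eps_pred` are all hypothetical choices, not taken from the paper or its code release.

```python
import numpy as np

def make_schedule(T=1000, beta_start=1e-4, beta_end=2e-2):
    """Illustrative linear beta schedule (an assumption) with alpha_t and alpha_bar_t."""
    betas = np.linspace(beta_start, beta_end, T)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)  # alpha_bar_t = prod_{s<=t} alpha_s
    return betas, alphas, alpha_bars

def q_sample(x0, t, alpha_bars, noise):
    """Sample x_t from the marginal q(x_t | x_0) of Eq. (2); t is 1-based."""
    a_bar = alpha_bars[t - 1]
    return np.sqrt(a_bar) * x0 + np.sqrt(1.0 - a_bar) * noise

def g_theta(x_t, t, eps_pred, alphas, alpha_bars):
    """Deterministic part of the reverse step, Eq. (4), given the predicted noise."""
    a, a_bar = alphas[t - 1], alpha_bars[t - 1]
    return (x_t - (1.0 - a) / np.sqrt(1.0 - a_bar) * eps_pred) / np.sqrt(a)

def reverse_step(x_t, t, eps_pred, z, sigma_t, alphas, alpha_bars):
    """One reverse update x_{t-1} = g_theta(x_t, t) + sigma_t * z, as in Eq. (3)."""
    return g_theta(x_t, t, eps_pred, alphas, alpha_bars) + sigma_t * z
```

A quick sanity check of the algebra: at $t = 1$, where $\bar{\alpha}_1 = \alpha_1$, feeding the exact injected noise to `g_theta` returns the clean input.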

Equation (3) indicates that the synthesized image $x_0$ is fully determined by the set of noise maps $\mathcal{M} = x_T \cup \{z_t\}_{t=1}^{T-1}$. In the context of SR, our goal is to generate an HR image $x_0$ conditioned on an LR image $y_0$. To this end, we propose diffusion inversion to find an optimal set of noise maps $\mathcal{M}^*$ that reconstructs the target HR image $x_0$ via the reverse process of Eq. (3). In the following sections, we detail how to achieve this goal by training a noise predictor.

3.2. Diffusion Inversion

To achieve diffusion inversion, we introduce a noise prediction network with parameter $w$, denoted as $f_w$, which takes the LR image $y_0$ and the timestep $t$ as input and outputs the desired noise maps $\mathcal{M}^*$. Unlike the strategy [5] of directly optimizing $\mathcal{M}^*$ for each testing image, we train such a noise predictor to enable fast sampling during inference, thereby significantly improving the inference efficiency. To ensure the output of $f_w$ conforms to a Gaussian distribution, we adopt the reparameterization trick of VAE [25], which predicts the mean and variance parameters of the Gaussian distribution rather than directly estimating the noise map.
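As a minimal sketch of the reparameterization trick mentioned above, the predictor can emit a mean and a variance, and the noise map is then drawn as the mean plus the standard deviation times a standard normal sample. The log-variance parameterization and the function name `sample_noise_map` are assumptions made for illustration; the text only states that mean and variance parameters are predicted.

```python
import numpy as np

def sample_noise_map(mean, log_var, rng):
    """Draw mean + std * eps with eps ~ N(0, I) (reparameterization trick).

    `mean` and `log_var` stand in for the two outputs of a noise predictor;
    using log-variance is an assumption chosen for numerical convenience.
    """
    eps = rng.standard_normal(mean.shape)
    std = np.exp(0.5 * log_var)
    return mean + std * eps

# Usage: zero mean and unit variance reduce to a plain standard normal draw.
rng = np.random.default_rng(0)
noise = sample_noise_map(np.zeros((4, 4)), np.zeros((4, 4)), rng)
```

Because the randomness enters only through `eps`, gradients can flow to the mean and variance outputs during training, which is the usual reason for this construction.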

3.2.1. Problem Simplification

Training this noise predictor is inherently challenging. The noise map set $\mathcal{M}$ consists of $T$ noise maps (typically $T = 1000$ in most current diffusion models), corresponding to each step of the diffusion process. Naturally, it is non-trivial to simultaneously estimate such a large number of noise maps using a single, compact network. Worse, the iterative sampling paradigm of diffusion models can gradually accumulate prediction errors, which may adversely affect the final SR performance.

To address these challenges, we design a Partial Noise Prediction (PnP) strategy. Specifically, let’s consider diffusion inversion in the context of SR, where the observed LR image $y_0$ only slightly deviates from the target HR image $x_0$ in most cases, primarily in high-frequency components. This observation inspires us to initiate the sampling process from an intermediate timestep $N$ ($N < T$), effectively reducing the number of noise maps in $\mathcal{M}$ from $T$ to $N$, i.e., $\mathcal{M} = \{z_t\}_{t=1}^{N}$. Furthermore, given the high-fidelity requirements of SR, we constrain $x_N$ to have a relatively high SNR, implying mild noise corruption. This constraint encourages the selection of a smaller $N$, and in practice, we set $N \le 250$, corresponding to an SNR threshold of 1.44 in the widely used Stable Diffusion [43].
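To see how a start step maps to an SNR value, the sketch below computes $\bar{\alpha}_t$ for a noise schedule and reports the ratio $\sqrt{\bar{\alpha}_t}/\sqrt{1-\bar{\alpha}_t}$. Two assumptions are made here and are not stated in the text: that Stable Diffusion uses the "scaled linear" schedule with $\beta$ between 0.00085 and 0.012 over 1000 steps, and that the SNR is measured as this amplitude ratio rather than the power ratio. The function names are hypothetical.

```python
import numpy as np

def sd_alpha_bars(T=1000, beta_start=0.00085, beta_end=0.012):
    """alpha_bar_t for an assumed scaled-linear schedule (linear in sqrt(beta))."""
    betas = np.linspace(beta_start ** 0.5, beta_end ** 0.5, T) ** 2
    return np.cumprod(1.0 - betas)

def amplitude_snr(alpha_bars):
    """Assumed SNR definition: sqrt(alpha_bar_t) / sqrt(1 - alpha_bar_t) for every t."""
    return np.sqrt(alpha_bars / (1.0 - alpha_bars))

def max_start_step(alpha_bars, threshold):
    """Largest 1-based timestep whose SNR is still at least `threshold`.

    Relies on the SNR decreasing monotonically with t.
    """
    return int(np.sum(amplitude_snr(alpha_bars) >= threshold))

alpha_bars = sd_alpha_bars()
snr_at_250 = amplitude_snr(alpha_bars)[250 - 1]  # compare with the 1.44 quoted above
```

Under these assumptions, `snr_at_250` can be compared directly with the threshold of 1.44, and `max_start_step` gives the latest admissible start step for any other threshold.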

In addition, we further compress the set of noise maps $\mathcal{M} = \{z_t\}_{t=1}^{N}$ by integrating existing diffusion acceleration algorithms [22, 46]. The common idea of these algorithms is to skip certain steps during inference, which are selected based on specific rules [29], e.g., “linspace” and “trailing”. Combined with this skipping strategy, the noise map set is simplified as follows:

$$\mathcal{M} = \{z_{\kappa_i}\}_{i=1}^{M}, \tag{5}$$

where $\{\kappa_1, \cdots, \kappa_M\} \subseteq \{1, \cdots, N\}$. In practice, we set $M \le 5$, thus largely reducing the prediction burden on the noise predictor and improving the sampling efficiency.
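One way to picture the step-skipping rules is as two small functions that pick $M$ timesteps out of $\{1, \cdots, N\}$. The definitions below are hypothetical adaptations written for this illustration (start step $N$ always included, steps returned in descending order); they are not copied from [29] or from any particular library.

```python
def trailing_steps(N, M):
    """'Trailing'-style spacing: M steps spaced N/M apart, counted down from N."""
    return [round(N * (M - i) / M) for i in range(M)]

def linspace_steps(N, M):
    """'Linspace'-style spacing: M steps evenly spread between N and 1."""
    if M == 1:
        return [N]
    return [round(N - i * (N - 1) / (M - 1)) for i in range(M)]

# Usage: five sampling steps starting from timestep 250.
print(trailing_steps(250, 5))  # [250, 200, 150, 100, 50]
```

With a single step ($M = 1$), both rules reduce to sampling directly from the start step $N$.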

3.2.2. Inversion Trajectory

Given the set of noise maps $\mathcal{M} = \{z_{\kappa_i}\}_{i=1}^{M}$ and the noise prediction network $f_w$, our goal is to restore the HR image $x_0$ from a given LR observation $y_0$, following an inversion trajectory defined by:

$$x_{\kappa_{i-1}} = g_\theta(x_{\kappa_i}, \kappa_i) + \sigma_{\kappa_i} f_w(y_0, \kappa_{i-1}), \tag{6}$$

where $\kappa_0 = 0$, and $g_\theta(\cdot, \cdot)$ is defined in Eq. (4). The key to initiating this inversion trajectory is constructing the starting state $x_{\kappa_M}$ from the LR image $y_0$.

The marginal distribution $q(x_{\kappa_M} \mid x_0)$, as defined in Eq. (2), suggests constructing $x_{\kappa_M}$ as follows:

$$x_{\kappa_M} = \sqrt{\bar{\alpha}_{\kappa_M}}\,x_0 + \sqrt{1-\bar{\alpha}_{\kappa_M}}\,\xi, \quad \xi \sim \mathcal{N}(0, I). \tag{7}$$

In the context of SR, since the HR image $x_0$ is not accessible during testing, we construct an analogous formulation for $x_{\kappa_M}$ directly from the LR image $y_0$ using the noise predictor $f_w(\cdot)$, namely

$$x_{\kappa_M} = \sqrt{\bar{\alpha}_{\kappa_M}}\,y_0 + \sqrt{1-\bar{\alpha}_{\kappa_M}}\,f_w(y_0, \kappa_M). \tag{8}$$

This design is inspired by the observation that the LR image $y_0$ and the HR image $x_0$ become increasingly indistinguishable when perturbed by Gaussian noise with an appropriate magnitude. Therefore, we aim to seek an optimal noise map $f_w(y_0, \kappa_M)$ to perturb $y_0$ in such a way that the pre-trained diffusion model can generate the corresponding $x_0$ from $x_{\kappa_M}$ that is defined in Eq. (8).

To summarize, we establish an inversion trajectory by combining Eqs. (6) and (8), which can be used to solve the SR problem via iterative generation along this trajectory.
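The trajectory can be sketched as a short loop that builds the start state from Eq. (8) and then applies Eq. (6) until $\kappa_0 = 0$. This is a literal transcription of the equations on a toy setup, not the authors' implementation: `f_w` and `eps_theta` are placeholder callables, the `sigmas` array is supplied by the caller (its values are an assumption), and the last step adds no noise following $z_0 = 0$ in Eq. (3). A practical system would more likely hand the start state to an off-the-shelf accelerated sampler.

```python
import numpy as np

def invert_and_sample(y0, kappas, f_w, eps_theta, alphas, alpha_bars, sigmas):
    """Run the inversion trajectory for descending timesteps [kappa_M, ..., kappa_1].

    f_w(y0, k) and eps_theta(x, k) are stand-ins for the noise predictor and the
    frozen denoiser; all schedule arrays are indexed with 1-based timesteps.
    """
    k_start = kappas[0]
    a_bar = alpha_bars[k_start - 1]
    # Eq. (8): start state built from the LR image and the predicted noise map.
    x = np.sqrt(a_bar) * y0 + np.sqrt(1.0 - a_bar) * f_w(y0, k_start)

    next_steps = list(kappas[1:]) + [0]  # kappa_0 = 0 closes the trajectory
    for k, k_prev in zip(kappas, next_steps):
        a, a_bar = alphas[k - 1], alpha_bars[k - 1]
        # Eq. (4): deterministic part of the reverse step.
        mean = (x - (1.0 - a) / np.sqrt(1.0 - a_bar) * eps_theta(x, k)) / np.sqrt(a)
        # Eq. (6): predicted noise replaces random noise; none is added at k_prev = 0.
        noise = f_w(y0, k_prev) if k_prev > 0 else np.zeros_like(x)
        x = mean + sigmas[k - 1] * noise
    return x
```

In this sketch `y0` is assumed to already have the spatial size of the output (for example, an upsampled LR image or its latent code), since Eq. (8) mixes it elementwise with the noise map.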

3.2.3. Model Training

Given a pre-trained large-scale diffusion model $\epsilon_\theta(\cdot)$, an estimation of the HR image $x_0$ can be obtained from $x_{\kappa_i}$ by taking a reverse diffusion step:

$$\hat{x}_{0 \leftarrow \kappa_i} = \frac{1}{\sqrt{\bar{\alpha}_{\kappa_i}}}\left[x_{\kappa_i} - \sqrt{1-\bar{\alpha}_{\kappa_i}}\,\epsilon_\theta(x_{\kappa_i}, \kappa_i)\right], \tag{9}$$

where $x_{\kappa_i}$ is defined by Eq. (8) for $i = M$ and Eq. (6) for $i < M$. It is thus possible to train the noise predictor $f_w(\cdot)$ by minimizing the distance between $\hat{x}_{0 \leftarrow \kappa_i}$ and $x_0$.

However, directly training with this objective is computationally impractical. Specifically, as shown in Eq. (6), calculating $x_{\kappa_i}$ ($i < M$) necessitates recurrent application of the large-scale diffusion model $\epsilon_\theta$, which leads to prohibitive GPU memory usage. To circumvent this, we adopt an alternative version for $x_{\kappa_i}$ based on the marginal distribution in Eq. (2), i.e.,

$$x_{\kappa_i} = \sqrt{\bar{\alpha}_{\kappa_i}}\,x_0 + \sqrt{1-\bar{\alpha}_{\kappa_i}}\,f_w(y_0, \kappa_i), \quad i < M. \tag{10}$$

This modification also aligns better with the training process of the employed diffusion model, allowing for more effective leveraging of the prior knowledge embedded in it.
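A single training-time evaluation can be sketched as follows: build the noisy state with Eq. (8) at the start step or Eq. (10) at the other steps, recover $\hat{x}_{0 \leftarrow \kappa_i}$ with Eq. (9), and measure its distance to $x_0$. The mean squared error used here is an assumption, since the text only speaks of "the distance"; `f_w`, `eps_theta`, and the function name are placeholders, and `y0` is assumed to have the same shape as `x0`.

```python
import numpy as np

def x0_distance(x0, y0, kappa, is_start, f_w, eps_theta, alpha_bars):
    """Distance between the one-step estimate of Eq. (9) and the HR target x0.

    is_start=True uses Eq. (8) (state built from y0, i = M);
    is_start=False uses Eq. (10) (state built from x0, i < M).
    """
    a_bar = alpha_bars[kappa - 1]
    base = y0 if is_start else x0
    x_k = np.sqrt(a_bar) * base + np.sqrt(1.0 - a_bar) * f_w(y0, kappa)
    # Eq. (9): one reverse step straight to an estimate of x0.
    x0_hat = (x_k - np.sqrt(1.0 - a_bar) * eps_theta(x_k, kappa)) / np.sqrt(a_bar)
    return float(np.mean((x0_hat - x0) ** 2))  # MSE is an assumed choice of distance
```

Each evaluation calls the frozen denoiser only once, which is the point of replacing the recurrent construction in Eq. (6) with Eq. (10).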

We now detail the training procedure step by step:

Gaussian Constraint. The pre-trained diffusion model is a powerful denoiser tailored for Gaussian noise with zero mean and varying variances. Hence, it is reasonable to enforce the noise map predicted by $f_w$ to obey a Gaussian distribution. For the initial state $x_{\kappa_M}$, it is observed that the predicted noise map $f_w(y_0, \kappa_M)$ exhibits a mean shift, which is evident when comparing Eqs. (7) and (8), due to the substitution of $y_0$ for $x_0$. Moreover, the visualization presented in Figs. 2 and 3 further validates this observation, illustrating that the predicted noise map is clearly correlated with the LR image. Therefore, we do not consider the Gaussian constraint for $x_{\kappa_M}$.
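The mean shift can be made explicit with a short calculation. As an illustration only (this is not stated as the training objective), suppose the state built from $y_0$ in Eq. (8) is asked to coincide with the state built from $x_0$ in Eq. (7) for a given draw $\xi \sim \mathcal{N}(0, I)$:

$$\sqrt{\bar{\alpha}_{\kappa_M}}\,y_0 + \sqrt{1-\bar{\alpha}_{\kappa_M}}\,f_w(y_0, \kappa_M) = \sqrt{\bar{\alpha}_{\kappa_M}}\,x_0 + \sqrt{1-\bar{\alpha}_{\kappa_M}}\,\xi \;\;\Longrightarrow\;\; f_w(y_0, \kappa_M) = \xi + \sqrt{\frac{\bar{\alpha}_{\kappa_M}}{1-\bar{\alpha}_{\kappa_M}}}\,(x_0 - y_0).$$

Under this reading, the required noise map has mean $\sqrt{\bar{\alpha}_{\kappa_M}/(1-\bar{\alpha}_{\kappa_M})}\,(x_0 - y_0)$ rather than zero, and the offset depends on the image content, which is consistent with dropping the Gaussian constraint at the starting state.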
