ing developed in machine learning and data mining areas.

There has been a large amount of work on transfer learning for reinforcement learning in the machine learning literature (e.g., [9], [10]). However, in this paper, we focus only on transfer learning for classification, regression, and clustering problems, which are more closely related to data mining tasks. Through this survey, we hope to provide a useful resource for the data mining and machine learning community.

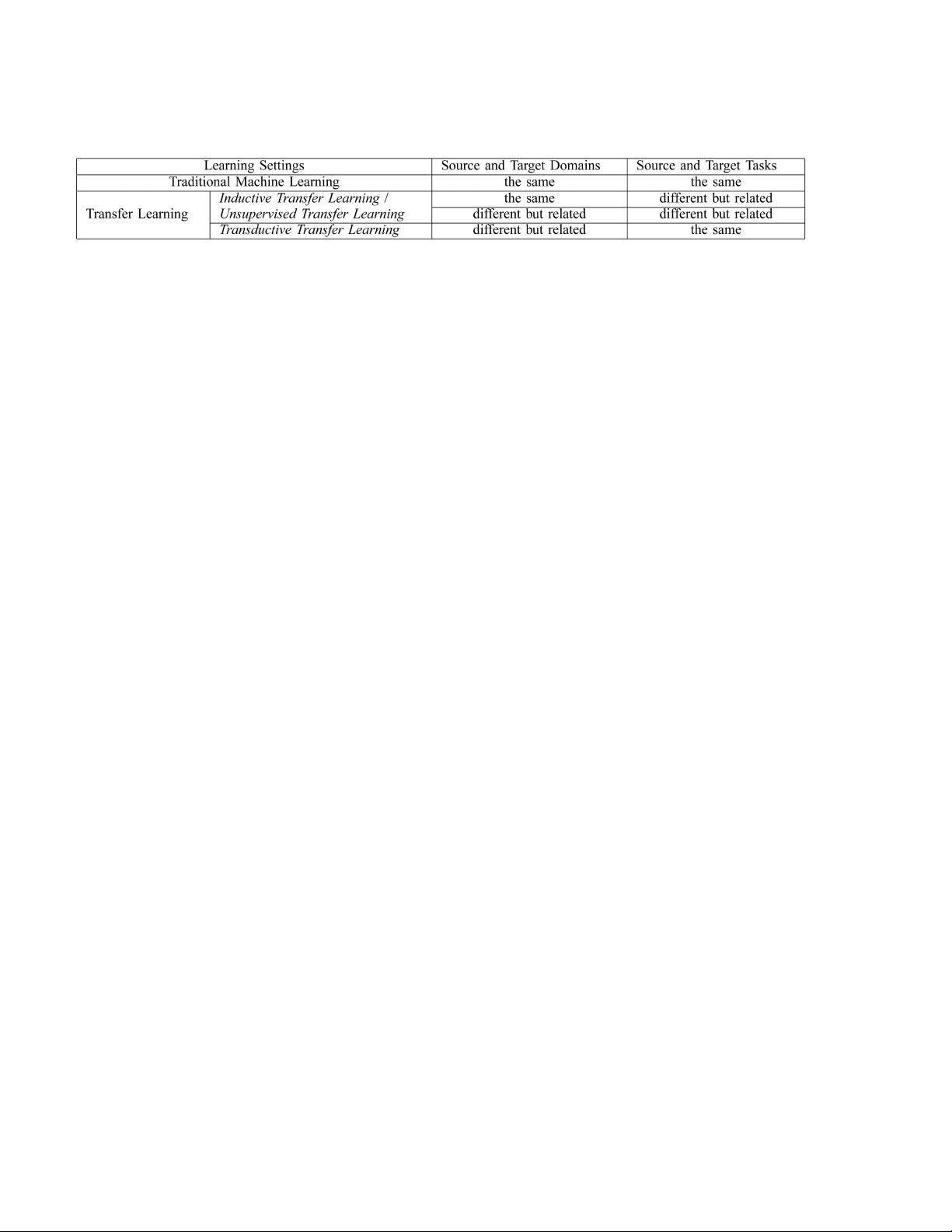

The rest of the survey is organized as follows. In the next four sections, we first give a general overview and define some notations we will use later. We then briefly survey the history of transfer learning, give a unified definition of transfer learning, and categorize transfer learning into three different settings (given in Table 2 and Fig. 2). For each setting, we review the different approaches in detail; these are summarized in Table 3. After that, in Section 6, we review some current research on the topic of “negative transfer,” which happens when knowledge transfer has a negative impact on target learning. In Section 7, we introduce some successful applications of transfer learning and list some published data sets and software toolkits for transfer learning research. Finally, we conclude the paper with a discussion of future work in Section 8.

2 OVERVIEW

2.1 A Brief History of Transfer Learning

Traditional data mining and machine learning algorithms make predictions on future data using statistical models that are trained on previously collected labeled or unlabeled training data [11], [12], [13]. Semisupervised classification [14], [15], [16], [17] addresses the problem that there may be too few labeled data to build a good classifier by making use of a large amount of unlabeled data together with a small amount of labeled data. Variations of supervised and semisupervised learning for imperfect data sets have also been studied; for example, Zhu and Wu [18] have studied how to deal with noisy class labels, and Yang et al. [19] considered cost-sensitive learning when additional tests can be made on future samples. Nevertheless, most of these methods assume that the labeled and unlabeled data are drawn from the same distribution. Transfer learning, in contrast, allows the domains, tasks, and distributions used in training and testing to be different. In the real world, we observe many examples of transfer learning. For example, learning to recognize apples may help us recognize pears; similarly, learning to play the electronic organ may facilitate learning the piano. The study of transfer learning is motivated by the fact that people can intelligently apply previously learned knowledge to solve new problems faster or with better solutions. The fundamental motivation for transfer learning in the field of machine learning was discussed in a NIPS-95 workshop on “Learning to Learn,”¹ which focused on the need for lifelong machine learning methods that retain and reuse previously learned knowledge.

Research on transfer learning has attracted increasing attention since 1995 under different names: learning to learn, life-long learning, knowledge transfer, inductive transfer, multitask learning, knowledge consolidation, context-sensitive learning, knowledge-based inductive bias, metalearning, and incremental/cumulative learning [20]. Among these, the learning technique most closely related to transfer learning is the multitask learning framework [21], which tries to learn multiple tasks simultaneously even when they are different. A typical approach for multitask learning is to uncover the common (latent) features that can benefit each individual task.
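To make the idea of shared latent features concrete, here is a minimal Python sketch under stated assumptions, not a method taken from this survey: every task t predicts y ≈ v_t · (U x), where the matrix U is shared by all tasks and each task owns only a small head v_t. The function names, the squared loss, plain stochastic gradient descent, and the toy data are all hypothetical, illustrative choices.

```python
import random


def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))


def train_multitask(tasks, n_features, n_latent=1, lr=0.02, epochs=1000, seed=0):
    """Jointly fit y ~= v_t . (U x) for every task t with stochastic gradient descent.

    U (n_latent x n_features) is shared by all tasks and plays the role of the
    common latent features; each task t owns only a small head v_t.
    (Hypothetical helper, written for illustration.)
    """
    rng = random.Random(seed)
    U = [[rng.uniform(-0.1, 0.1) for _ in range(n_features)] for _ in range(n_latent)]
    heads = {t: [rng.uniform(-0.1, 0.1) for _ in range(n_latent)] for t in tasks}
    for _ in range(epochs):
        for t, data in tasks.items():
            v = heads[t]
            for x, y in data:
                h = [dot(row, x) for row in U]   # shared latent features of x
                err = dot(v, h) - y              # residual of the squared loss
                for k in range(n_latent):
                    v_k = v[k]                   # head value before this step's update
                    v[k] -= lr * err * h[k]      # task-specific head update
                    for j in range(n_features):  # shared update, driven by every task
                        U[k][j] -= lr * err * v_k * x[j]
    return U, heads


def mse(U, v, data):
    """Mean squared error of task head v (on top of shared U) over (x, y) pairs."""
    return sum((dot(v, [dot(row, x) for row in U]) - y) ** 2 for x, y in data) / len(data)


# Hypothetical toy data: the true weights of the two tasks, (2, 2, 0) and (-1, -1, 0),
# are multiples of one shared direction, so a single latent feature suffices.
rng = random.Random(1)
xs = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(20)]
tasks = {
    "A": [(x, 2 * (x[0] + x[1])) for x in xs],
    "B": [(x, -(x[0] + x[1])) for x in xs],
}
U, heads = train_multitask(tasks, n_features=3)
for t, data in tasks.items():
    print(t, mse(U, heads[t], data))
```

Because both toy tasks depend on the same direction of the input, one shared latent feature is enough: after training, each task's error should be close to zero even though neither task learns its own full weight vector.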

In 2005, the Broad Agency Announcement (BAA) 05-29 of the Information Processing Technology Office (IPTO) of the Defense Advanced Research Projects Agency (DARPA)² set out a new mission for transfer learning: the ability of a system to recognize and apply knowledge and skills learned in previous tasks to novel tasks. In this definition, transfer learning aims to extract knowledge from one or more source tasks and apply that knowledge to a target task. In contrast to multitask learning, rather than learning all of the source and target tasks simultaneously, transfer learning cares most about the target task. The roles of the source and target tasks are therefore no longer symmetric in transfer learning.

Fig. 1 shows the difference between the learning processes of traditional and transfer learning techniques. As we can see, traditional machine learning techniques try to learn each task from scratch, while transfer learning techniques try to transfer knowledge from some previous tasks to a target task when the latter has less high-quality training data.
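The asymmetry between source and target can be illustrated with a small sketch. As an assumption chosen only for illustration, knowledge is transferred here by biased regularization: a linear model is first fit on plentiful source data, and the target model, fit on only three examples, is penalized for moving away from the source weights rather than away from zero. This is one simple transfer scheme, not the survey's method; the helper names (`solve`, `fit_ridge`, `make_task`) and the toy tasks are hypothetical.

```python
import random


def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting (small dense A)."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix [A | b]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    w = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        w[i] = (M[i][n] - sum(M[i][k] * w[k] for k in range(i + 1, n))) / M[i][i]
    return w


def fit_ridge(data, lam, prior):
    """Minimize sum_i (w.x_i - y_i)^2 + lam * ||w - prior||^2 in closed form.

    Setting the gradient to zero gives (X^T X + lam I) w = X^T y + lam * prior.
    With prior = 0 this is ordinary ridge regression; with prior = source
    weights, the target model is pulled toward what was learned on the source.
    """
    d = len(prior)
    A = [[lam * (i == j) + sum(x[i] * x[j] for x, _ in data) for j in range(d)] for i in range(d)]
    b = [lam * prior[i] + sum(x[i] * y for x, y in data) for i in range(d)]
    return solve(A, b)


def make_task(w_true, n, noise, rng):
    """Hypothetical linear task: y = w_true . x + Gaussian noise, x ~ U(-1, 1)^d."""
    data = []
    for _ in range(n):
        x = [rng.uniform(-1, 1) for _ in w_true]
        data.append((x, sum(a * b for a, b in zip(w_true, x)) + rng.gauss(0, noise)))
    return data


def weight_error(w, w_true):
    """Euclidean distance between estimated and true weights."""
    return sum((a - b) ** 2 for a, b in zip(w, w_true)) ** 0.5


rng = random.Random(0)
w_src_true, w_tgt_true = [1.0, -2.0], [1.2, -1.8]  # related, but not identical, tasks
source = make_task(w_src_true, n=200, noise=0.1, rng=rng)  # plentiful source data
target = make_task(w_tgt_true, n=3, noise=0.05, rng=rng)   # scarce target data

w_src = fit_ridge(source, lam=1e-3, prior=[0.0, 0.0])  # learn on the source task
w_plain = fit_ridge(target, lam=5.0, prior=[0.0, 0.0])  # target only, no transfer
w_transfer = fit_ridge(target, lam=5.0, prior=w_src)    # target, biased toward w_src
print(weight_error(w_plain, w_tgt_true), weight_error(w_transfer, w_tgt_true))
```

With only a few target examples, the zero-prior fit is shrunk toward the origin, while the transfer fit is pulled toward the related source solution and so lands closer to the true target weights. If the source and target tasks were unrelated, the same prior could hurt instead, which is the “negative transfer” issue mentioned for Section 6.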

Today, transfer learning methods appear in several top venues, most notably in data mining (ACM KDD, IEEE ICDM, and PKDD, for example), machine learning (ICML, NIPS, ECML, AAAI, and IJCAI, for example), and applications of machine learning and data mining (ACM SIGIR, WWW, and ACL, for example).³ Before we give different categorizations of transfer learning, we first describe the notations used in this paper.

2.2 Notations and Definitions

In this section, we introduce some notations and definitions that are used in this survey. First of all, we give the definitions of a “domain” and a “task,” respectively.

In this survey, a domain $\mathcal{D}$ consists of two components: a feature space $\mathcal{X}$ and a marginal probability distribution $P(X)$, where $X = \{x_1, \ldots, x_n\} \in \mathcal{X}$. For example, if our learning task

1346 IEEE TRANSACTIONS ON KNOWLEDGE AND DATA ENGINEERING, VOL. 22, NO. 10, OCTOBER 2010

Fig. 1. Different learning processes between (a) traditional machine learning and (b) transfer learning.

1. http://socrates.acadiau.ca/courses/comp/dsilver/NIPS95_LTL/transfer.workshop.1995.html.

2. http://www.darpa.mil/ipto/programs/tl/tl.asp.

3. We summarize a list of conferences and workshops where transfer learning papers have appeared in recent years on the following webpage for reference: http://www.cse.ust.hk/~sinnopan/conferenceTL.htm.
