Get PDF for Microsoft DP-203 Exam

Topic 1 - Question Set 1

Topic 1

Question #1

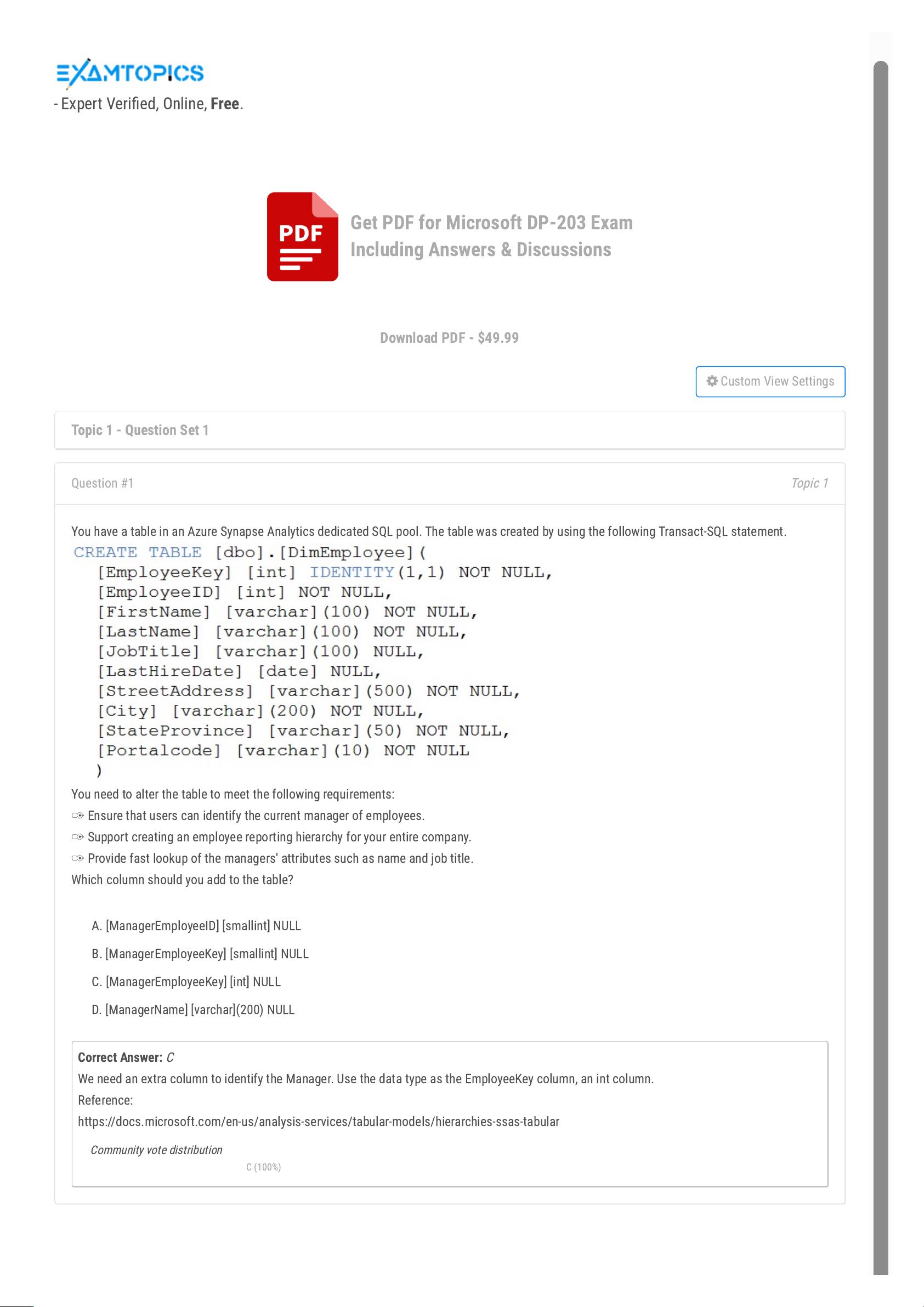

You have a table in an Azure Synapse Analytics dedicated SQL pool. The table was created by using the following Transact-SQL statement.

You need to alter the table to meet the following requirements:

- Ensure that users can identify the current manager of employees.
- Support creating an employee reporting hierarchy for your entire company.
- Provide fast lookup of the managers' attributes such as name and job title.

Which column should you add to the table?

A. [ManagerEmployeeID] [smallint] NULL

B. [ManagerEmployeeKey] [smallint] NULL

C. [ManagerEmployeeKey] [int] NULL

D. [ManagerName] [varchar](200) NULL

Correct Answer:

C

We need an extra column that identifies each employee's manager. It should use the same data type as the EmployeeKey column, which is int.

Reference:

https://docs.microsoft.com/en-us/analysis-services/tabular-models/hierarchies-ssas-tabular

Community vote distribution

C (100%)
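As a rough illustration of why a nullable, same-typed manager key covers all three requirements, here is a minimal sketch that uses Python's built-in `sqlite3` as a stand-in. It is not Synapse T-SQL: the table name `DimEmployee`, the `EmployeeName` and `JobTitle` columns, and the sample rows are all assumptions, because the original table definition is only available as an image. The recursive query is SQLite syntax and is meant to show the data model, not a statement that runs in a dedicated SQL pool.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE DimEmployee (
    EmployeeKey        INTEGER PRIMARY KEY,  -- int key, per the explanation above
    EmployeeName       TEXT NOT NULL,        -- hypothetical attribute
    JobTitle           TEXT,                 -- hypothetical attribute
    ManagerEmployeeKey INTEGER NULL          -- option C: same type as EmployeeKey
);
INSERT INTO DimEmployee VALUES
    (1, 'Ana',  'CEO',      NULL),  -- top of the hierarchy has no manager
    (2, 'Bo',   'Director', 1),
    (3, 'Chen', 'Engineer', 2);
""")

# Current manager and the manager's attributes: a self-join on the key.
manager_rows = conn.execute("""
    SELECT e.EmployeeName, m.EmployeeName, m.JobTitle
    FROM DimEmployee AS e
    LEFT JOIN DimEmployee AS m
        ON m.EmployeeKey = e.ManagerEmployeeKey
    ORDER BY e.EmployeeKey
""").fetchall()

# Reporting hierarchy: walk from the root down, tracking the depth.
hierarchy_rows = conn.execute("""
    WITH RECURSIVE chain(EmployeeKey, EmployeeName, Depth) AS (
        SELECT EmployeeKey, EmployeeName, 0
        FROM DimEmployee
        WHERE ManagerEmployeeKey IS NULL
        UNION ALL
        SELECT e.EmployeeKey, e.EmployeeName, c.Depth + 1
        FROM DimEmployee AS e
        JOIN chain AS c ON e.ManagerEmployeeKey = c.EmployeeKey
    )
    SELECT EmployeeName, Depth FROM chain ORDER BY Depth
""").fetchall()

print(manager_rows)
print(hierarchy_rows)
```

Storing the manager's name instead (option D) would force a string match to find the manager's other attributes, which is why the key column is the more useful design in this sketch.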

Topic 1

Question #2

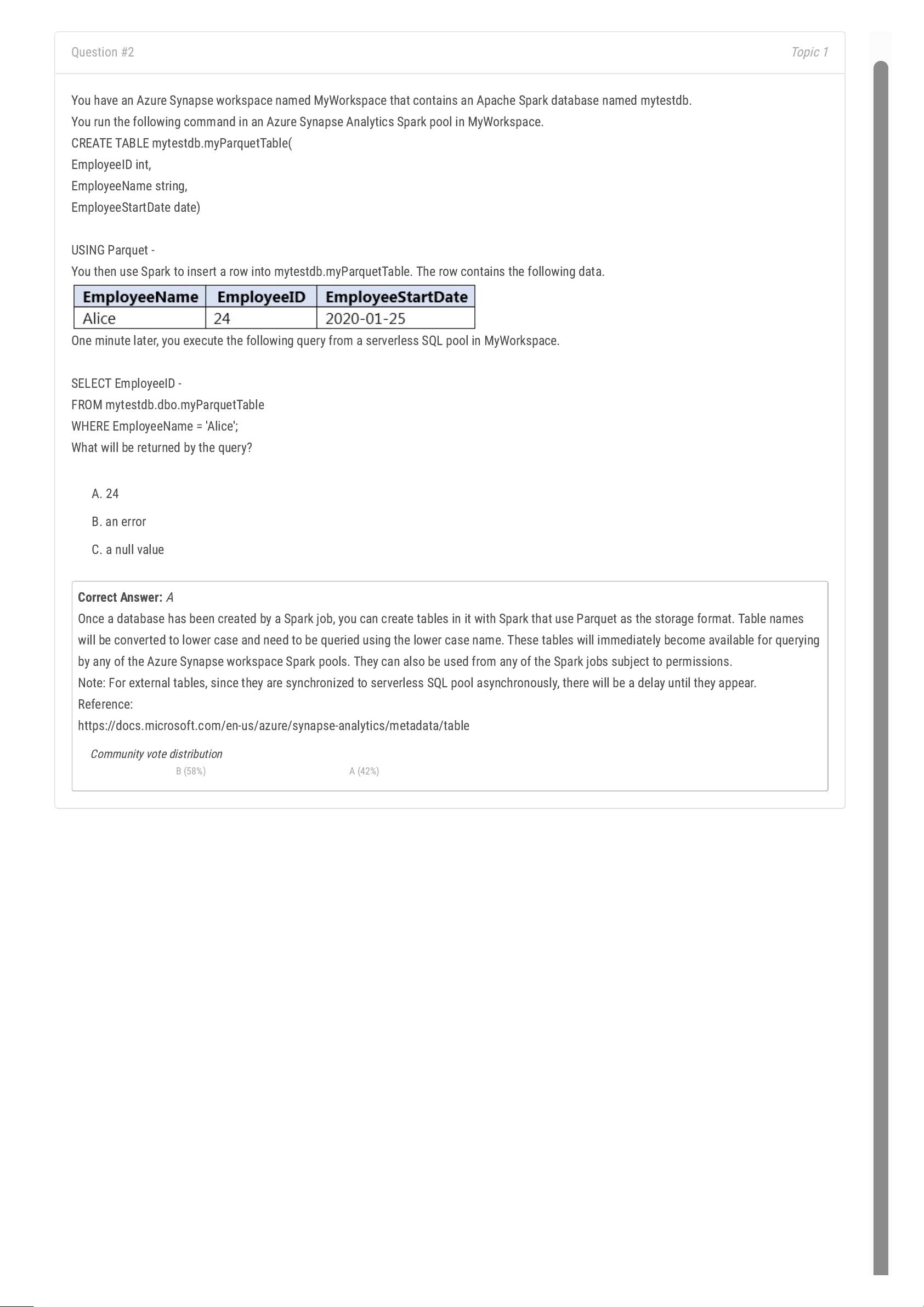

You have an Azure Synapse workspace named MyWorkspace that contains an Apache Spark database named mytestdb.

You run the following command in an Azure Synapse Analytics Spark pool in MyWorkspace.

CREATE TABLE mytestdb.myParquetTable(

EmployeeID int,

EmployeeName string,

EmployeeStartDate date)

USING Parquet

You then use Spark to insert a row into mytestdb.myParquetTable. The row contains the following data.

One minute later, you execute the following query from a serverless SQL pool in MyWorkspace.

SELECT EmployeeID

FROM mytestdb.dbo.myParquetTable

WHERE EmployeeName = 'Alice';

What will be returned by the query?

A. 24

B. an error

C. a null value

Correct Answer:

A

Once a database has been created by a Spark job, you can create tables in it with Spark that use Parquet as the storage format. Table names will be converted to lower case and need to be queried using the lower case name. These tables will immediately become available for querying by any of the Azure Synapse workspace Spark pools. They can also be used from any of the Spark jobs subject to permissions.

Note: For external tables, since they are synchronized to serverless SQL pool asynchronously, there will be a delay until they appear.

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/metadata/table

Community vote distribution

B (58%) A (42%)

Topic 1

Question #3

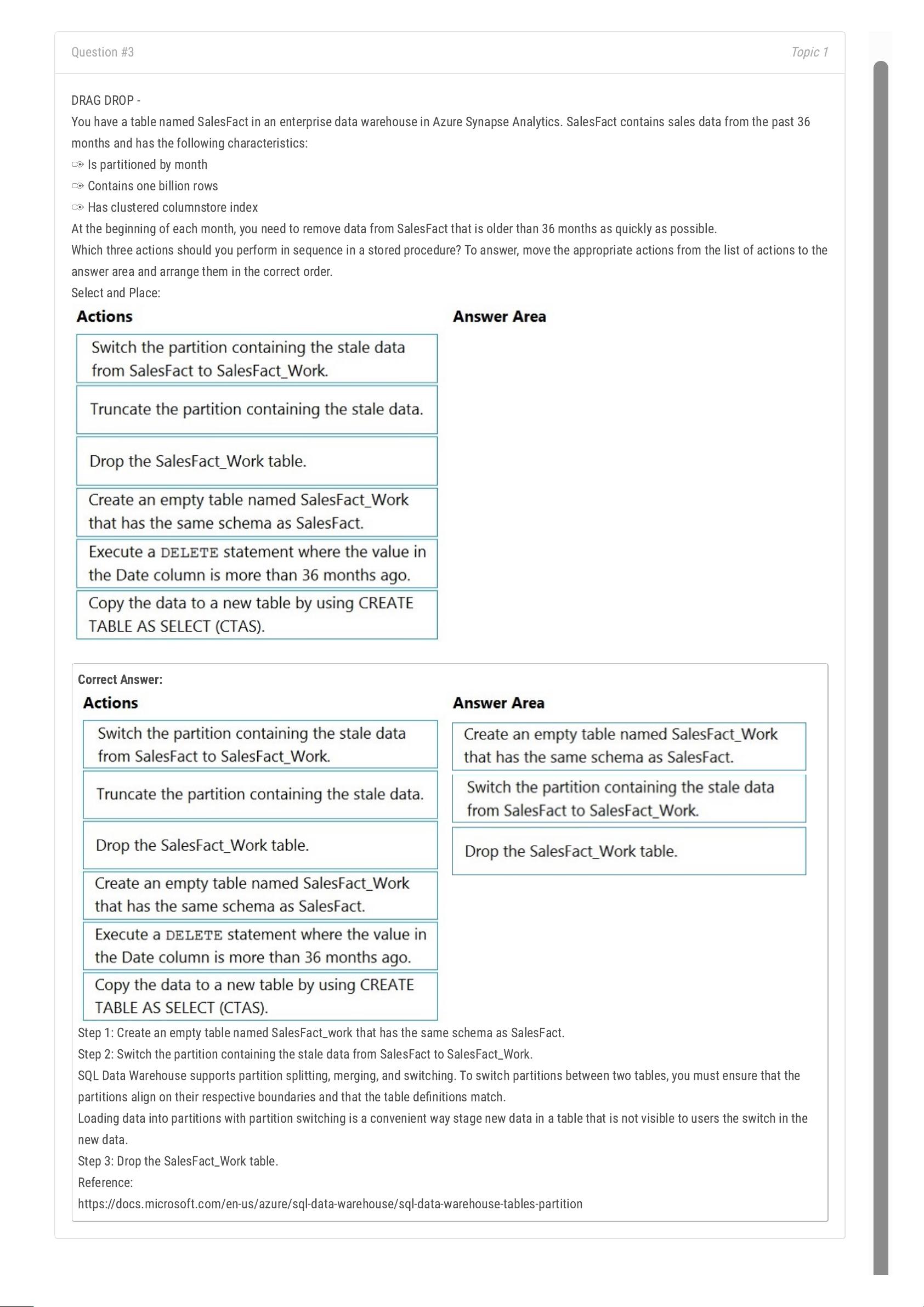

DRAG DROP

You have a table named SalesFact in an enterprise data warehouse in Azure Synapse Analytics. SalesFact contains sales data from the past 36 months and has the following characteristics:

- Is partitioned by month
- Contains one billion rows
- Has a clustered columnstore index

At the beginning of each month, you need to remove data from SalesFact that is older than 36 months as quickly as possible.

Which three actions should you perform in sequence in a stored procedure? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:

Step 1: Create an empty table named SalesFact_Work that has the same schema as SalesFact.

Step 2: Switch the partition containing the stale data from SalesFact to SalesFact_Work.

SQL Data Warehouse supports partition splitting, merging, and switching. To switch partitions between two tables, you must ensure that the partitions align on their respective boundaries and that the table definitions match.

Loading data into partitions with partition switching is a convenient way to stage new data in a table that is not visible to users and then switch in the new data.

Step 3: Drop the SalesFact_Work table.

Reference:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-tables-partition
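The three steps could be sketched as the T-SQL statements a stored procedure might run. The small Python helper below only assembles those statements as strings; it does not connect to any database. Everything not named in the question is an assumption: the helper name `build_purge_statements`, the `dbo` schema, hash distribution, `RANGE RIGHT` partitioning, the column names `ProductKey` and `OrderDateKey`, and the boundary values. In a real table the work table's distribution and partition boundaries would have to match SalesFact exactly for the switch to succeed.

```python
def build_purge_statements(partition_number, distribution_column,
                           partition_column, boundaries):
    """Return the three T-SQL statements for the purge, in order.

    All arguments are hypothetical placeholders for values that would
    come from the real SalesFact definition.
    """
    boundary_list = ", ".join(str(b) for b in boundaries)

    # Step 1: empty work table with the same schema and partition layout.
    # WHERE 1 = 2 copies the column definitions but no rows.
    create_work = (
        "CREATE TABLE dbo.SalesFact_Work\n"
        "WITH (\n"
        f"    DISTRIBUTION = HASH({distribution_column}),\n"
        "    CLUSTERED COLUMNSTORE INDEX,\n"
        f"    PARTITION ({partition_column} RANGE RIGHT "
        f"FOR VALUES ({boundary_list}))\n"
        ")\n"
        "AS SELECT * FROM dbo.SalesFact WHERE 1 = 2;"
    )

    # Step 2: move the stale partition out of SalesFact (metadata operation).
    switch_out = (
        f"ALTER TABLE dbo.SalesFact SWITCH PARTITION {partition_number} "
        f"TO dbo.SalesFact_Work PARTITION {partition_number};"
    )

    # Step 3: discard the work table together with the stale rows.
    drop_work = "DROP TABLE dbo.SalesFact_Work;"

    return [create_work, switch_out, drop_work]


statements = build_purge_statements(
    partition_number=1,
    distribution_column="ProductKey",   # assumed column
    partition_column="OrderDateKey",    # assumed column
    boundaries=[20220101, 20220201],    # assumed monthly boundaries
)
for statement in statements:
    print(statement, end="\n\n")
```

The point of this ordering is that the switch avoids a row-by-row `DELETE` against a one-billion-row columnstore table; the stale rows leave SalesFact in a single step and are dropped with the work table.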

Topic 1

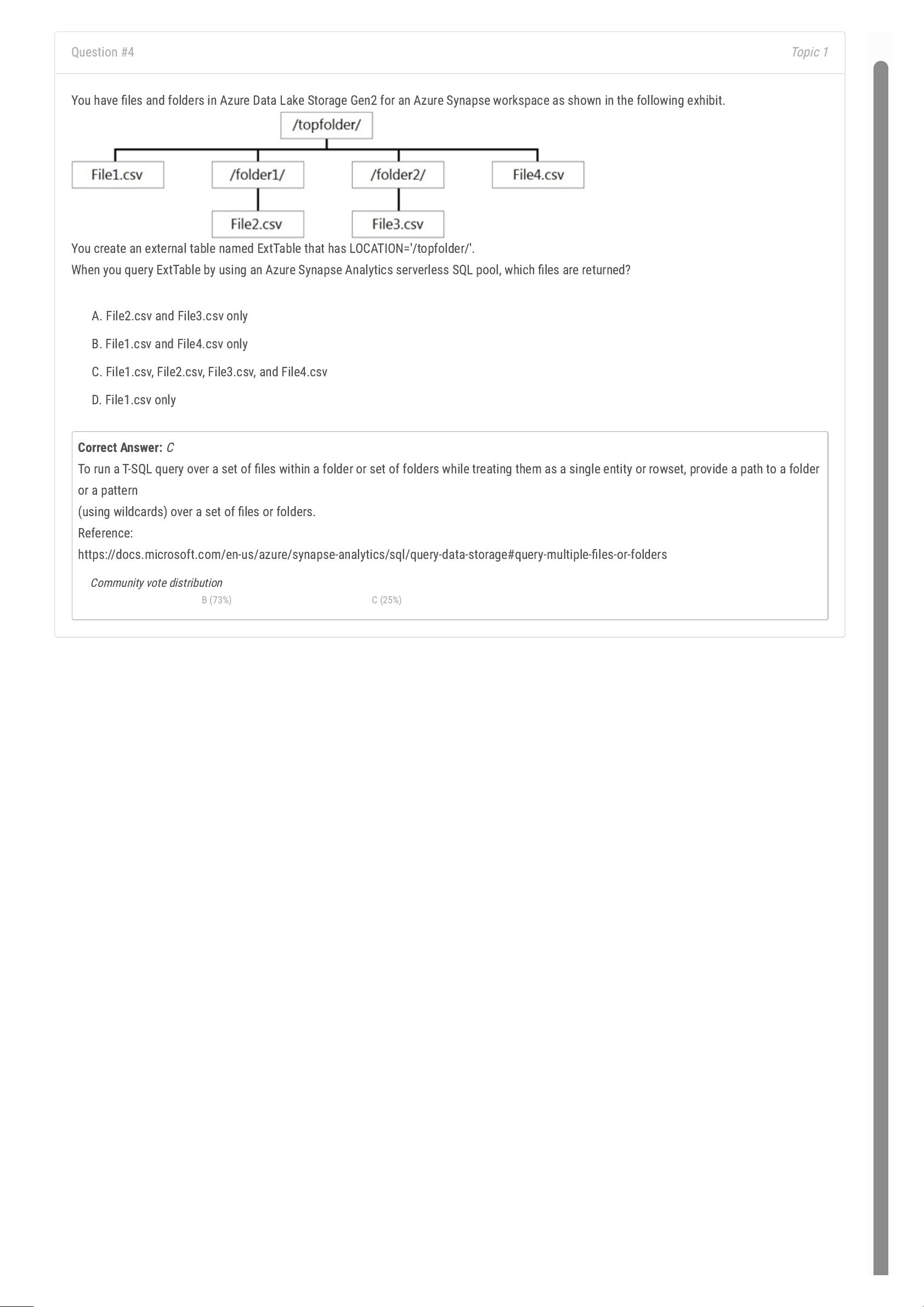

Question #4

You have files and folders in Azure Data Lake Storage Gen2 for an Azure Synapse workspace as shown in the following exhibit.

You create an external table named ExtTable that has LOCATION='/topfolder/'.

When you query ExtTable by using an Azure Synapse Analytics serverless SQL pool, which files are returned?

A. File2.csv and File3.csv only

B. File1.csv and File4.csv only

C. File1.csv, File2.csv, File3.csv, and File4.csv

D. File1.csv only

Correct Answer:

C

To run a T-SQL query over a set of files within a folder or set of folders while treating them as a single entity or rowset, provide a path to a folder or a pattern (using wildcards) over a set of files or folders.

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/query-data-storage#query-multiple-files-or-folders

Community vote distribution

B (73%) C (25%)
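The split between answers B and C comes down to whether a folder path is read recursively. The sketch below shows only that distinction, using a local temporary directory; it does not settle which behavior a serverless SQL pool applies. The folder layout is an assumption inferred from the answer options (the exhibit itself is an image), and `folder1` and `folder2` are hypothetical names.

```python
import tempfile
from pathlib import Path


def build_tree(root):
    """Create an assumed layout: two files at the top, two in subfolders."""
    top = root / "topfolder"
    layout = [
        "File1.csv",
        "folder1/File2.csv",  # hypothetical subfolder name
        "folder2/File3.csv",  # hypothetical subfolder name
        "File4.csv",
    ]
    for relative in layout:
        path = top / relative
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text("id\n1\n")
    return top


with tempfile.TemporaryDirectory() as tmp:
    top = build_tree(Path(tmp))

    # Non-recursive: only files directly inside topfolder (matches answer B).
    direct_only = sorted(p.name for p in top.glob("*.csv"))

    # Recursive: files in topfolder and every subfolder (matches answer C).
    recursive = sorted(p.name for p in top.rglob("*.csv"))

print(direct_only)
print(recursive)
```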

Topic 1

Question #5

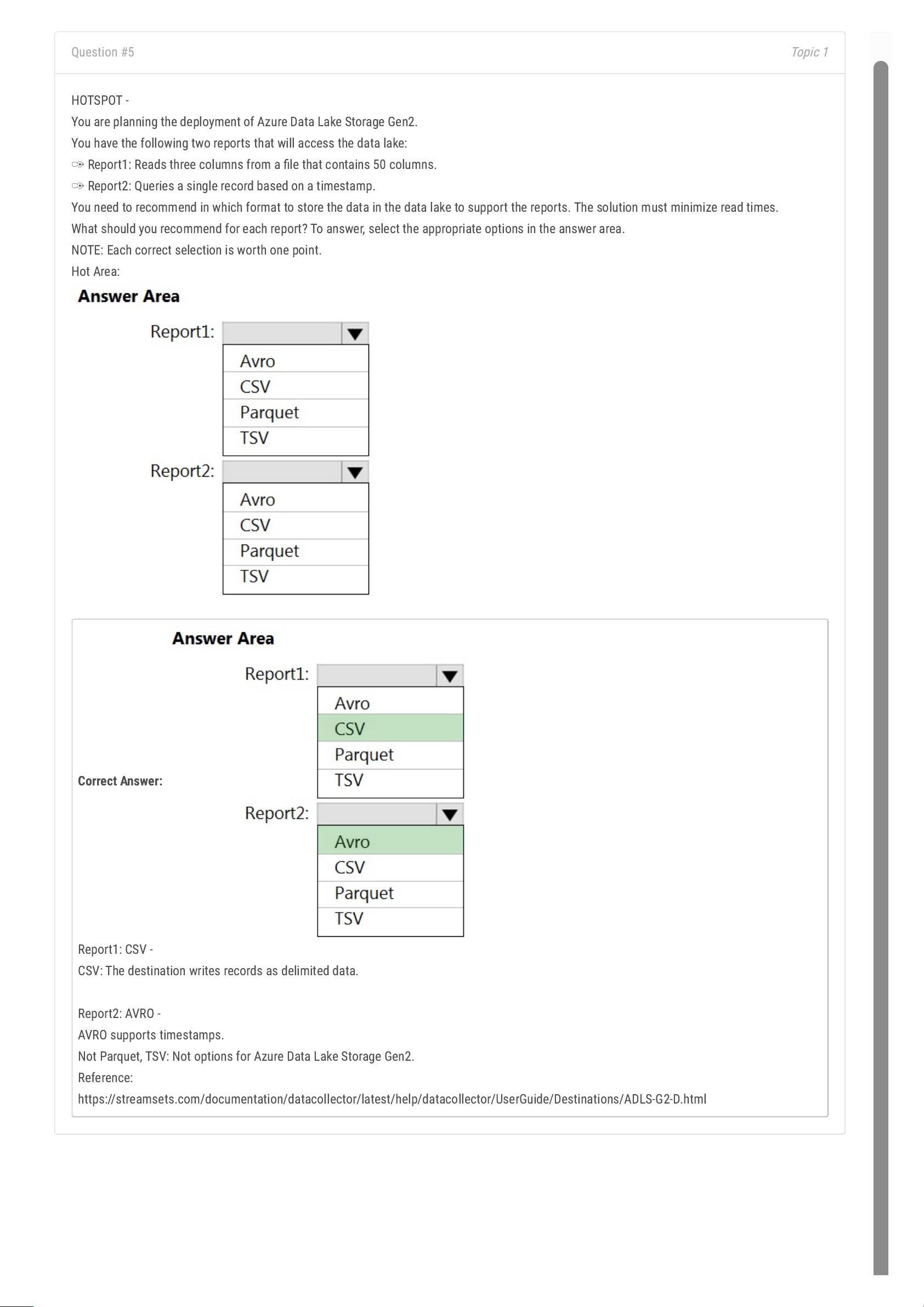

HOTSPOT

You are planning the deployment of Azure Data Lake Storage Gen2.

You have the following two reports that will access the data lake:

- Report1: Reads three columns from a file that contains 50 columns.
- Report2: Queries a single record based on a timestamp.

You need to recommend in which format to store the data in the data lake to support the reports. The solution must minimize read times.

What should you recommend for each report? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

Report1: CSV

CSV: The destination writes records as delimited data.

Report2: AVRO

AVRO supports timestamps.

Not Parquet, TSV: Not options for Azure Data Lake Storage Gen2.

Reference:

https://streamsets.com/documentation/datacollector/latest/help/datacollector/UserGuide/Destinations/ADLS-G2-D.html
