•

workpath-sensitive tempo control: thread tempo is set

based on control flow, with threads tackling “immediate

work” [7] executing at a faster tempo. This design ap-

proach corresponds to a key design principle in work-

stealing algorithms: the work-first principle.

•

workload-sensitive tempo control: thread tempo is set

based on the number of work items a thread needs to

tackle, as indicated by the size of the deque in work-

stealing runtimes. Threads with a longer deque execute

at a faster tempo.

HERMES unifies the two tempo control strategies in one.

Our experiments show that the two strategies are highly com-

plementary. For instance, on a 32-core machine, each strat-

egy can contribute to 6% and 7% energy savings respec-

tively, whereas the unified algorithm can yield 11% energy

savings. In the same setting, each strategy incurs 6% and 5%

performance loss respectively, whereas the unified algorithm

incurs 3% loss.

This paper makes the following contributions:

1. The first framework, to the best of our knowledge, ad-

dressing energy efficiency in work-stealing systems. The

framework achieves energy efficiency through thread

tempo control.

2. Two novel, complementary tempo control strategies: one

workpath-sensitive and one workload-sensitive.

3. A prototyped implementation and experimental evalua-

tion demonstrating an average of 11-12% energy savings

with 3-4% performance loss over work-stealing bench-

marks. The results are stable throughout comprehensive

design space exploration.

2. Background: Work Stealing

Work stealing was originally developed in Cilk [6, 16], a C-

like language designed for parallel programming. The main

appeal of work stealing is its synergic solution spanning the

compute stack, bridging the gap between abstraction layers

such as architectures, operating systems, compilers, program

runtimes, and programming models.

Work stealing is a load balancing scheduler for multi-

threaded programs over parallel architectures. The program

runtime consists of multiple threads called workers, each

executing on a host CPU core (or hardware parallel unit in

general). Each worker maintains a queue-like data structure

– called a double-ended queue or deque – each item of which

is a task to be processed by the worker. When a worker

finishes processing a task, it picks up next one from its deque

and continues the execution of that task. When the deque is

empty (we say the worker or its host core is idle), the worker

steals a task from the deque of another worker. In this case

we call the stealing worker a thief whereas the worker whose

task was stolen a victim. The selection of victims follows

the principles observed by load balancing and may vary in

different implementations of work stealing.

What sets work stealing apart from standard load balanc-

ing techniques is how the runtime structure described above

corresponds to program structures and compilation units.

Each task on the deque is a block of executable code – or

more strictly, a program counter pointing to the executable

code – demarcated by the programmer and optimized by the

compiler. In that sense, to have a worker “pick up a task” is

indeed to have the worker continue its execution over the ex-

ecutable code embodied in the task. To describe the process

in more detail, let us use the following Cilk example:

L1 cilk int f ( )

L2 { int n1 = spawn f 1 ( ) ;

L3 . . . / / o t h e r s t a t e m e n t s

L4 }

L5 cilk int f 1 ( ) {

L6 int n2 = spawn f 2 ( ) ;

L7 . . . / / o t h e r s t a t e m e n t s

L8 }

L9 cilk int f 2 ( ) {

L10 . . . / / o t h e r s t a t e m e n t s

L11 }

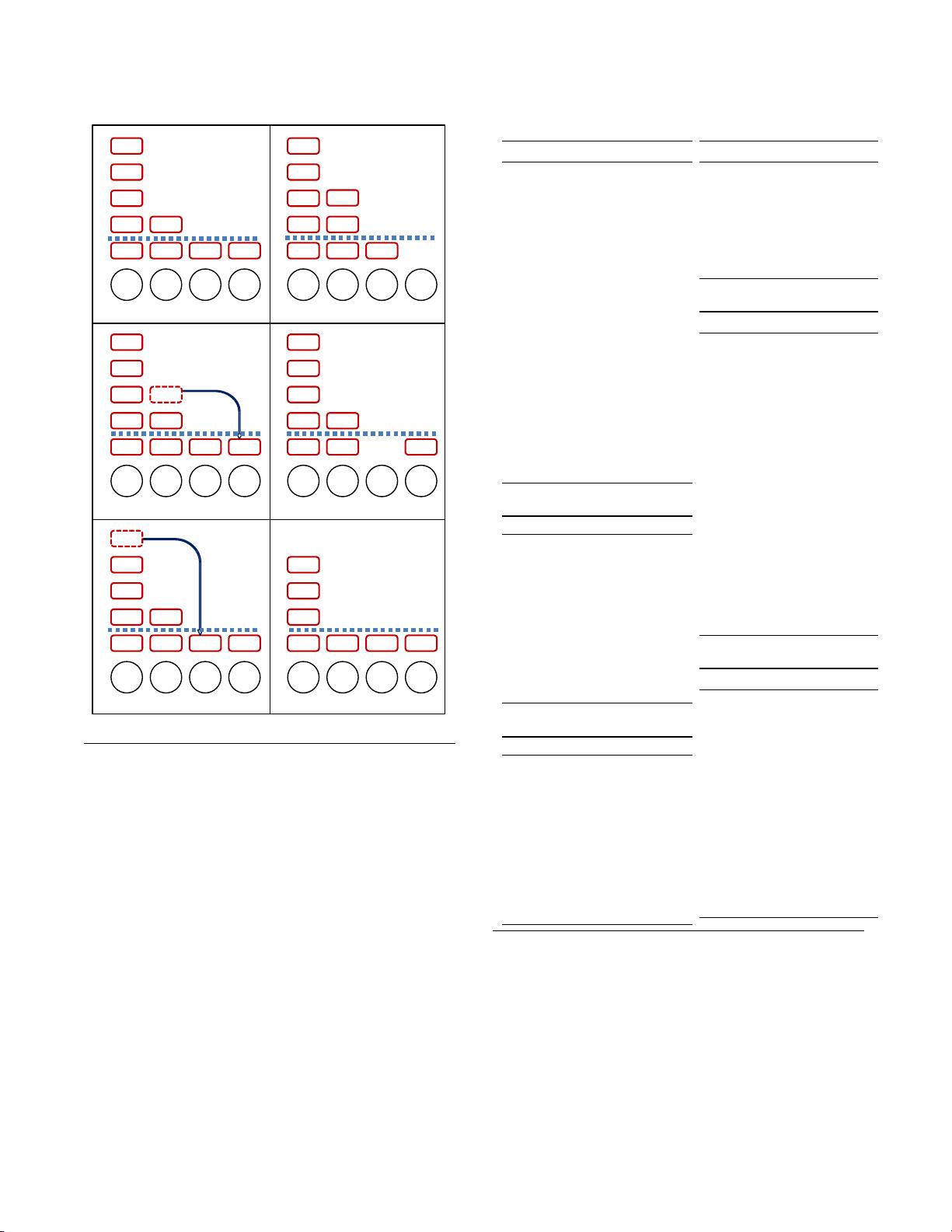

Logically, each spawn can be viewed as a thread creation.

On the implementation level however, a work-stealing run-

time adopts Lazy Task Creation [31], where for each spawn,

the executing worker simply puts a task onto its own deque,

either to pick it up later or to be stolen by some other worker.

This strategy aligns thread management with the underly-

ing parallel architecture: a program that invokes f above 20

times but runs on a dual-core CPU can operate only with 2

threads (workers) instead of 40.

Work-First Principle The question here is what the item

placed on the deque should embody. For instance, when

L2 is executed, one tempting design would be to consider

f1 as the task placed on the deque. The Cilk-like work-

stealing algorithm takes the opposite approach: it places the

continuation of the current spawn statement onto the deque.

In the example above, it is the program counter pointing to

L3. The current worker continues to invoke f1 as if spawn

were elided.

This design reflects a fundamental principle well articu-

lated in Cilk: the work-first principle. The principle concerns

the relationship between the parallel execution of a program

and its corresponding serial execution. (A logically equiva-

lent view for the latter would be to have the parallel program

execute on a single-core machine.) Let us revisit the exam-

ple above. If it is executed on a single-core machine, f1 is

the “immediate” work when L2 is reached, and hence carries

more urgency. For that reason, f1 should be immediately ex-

ecuted by the current worker, whereas the continuation is not

as urgent and is hence placed on the deque.

Work-first principle plays a pivotal role in the design of

work-stealing systems. In Cilk, it further leads to a compila-

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

前往需求广场,查看用户热搜

前往需求广场,查看用户热搜

信息提交成功

信息提交成功