
A disciplined approach to neural network hyper-parameters Part 1 -- learning rate, batch size, momentum, and weight decay, by Leslie N. Smith


US Naval Research Laboratory Technical Report 5510-026

A DISCIPLINED APPROACH TO NEURAL NETWORK HYPER-PARAMETERS: PART 1 – LEARNING RATE, BATCH SIZE, MOMENTUM, AND WEIGHT DECAY

Leslie N. Smith

US Naval Research Laboratory

Washington, DC, USA

leslie.smith@nrl.navy.mil

ABSTRACT

Although deep learning has produced dazzling successes for applications of image, speech, and video processing in the past few years, most training runs use suboptimal hyper-parameters, which require unnecessarily long training times. Setting the hyper-parameters remains a black art that takes years of experience to acquire. This report proposes several efficient ways to set the hyper-parameters that significantly reduce training time and improve performance. Specifically, this report shows how to examine the training's validation/test loss function for subtle clues of underfitting and overfitting and suggests guidelines for moving toward the optimal balance point. Then it discusses how to increase/decrease the learning rate/momentum to speed up training. Our experiments show that it is crucial to balance every manner of regularization for each dataset and architecture. Weight decay is used as a sample regularizer to show how its optimal value is tightly coupled with the learning rates and momentum. Files to help replicate the results reported here are available at https://github.com/lnsmith54/hyperParam1.

1 INTRODUCTION

The rise of deep learning (DL) has the potential to transform our future as a human race even more than it already has, and perhaps more than any other technology. Deep learning has already produced significant improvements in computer vision, speech recognition, and natural language processing, which have made deep-learning-based commercial products ubiquitous in our society and in our lives.

In spite of this success, the application of deep neural networks remains a black art, often requiring years of experience to effectively choose optimal hyper-parameters, regularization, and network architecture, which are all tightly coupled. Currently, the process of setting the hyper-parameters, including designing the network architecture, requires expertise and extensive trial and error, and is based more on serendipity than science. On the other hand, there is a recognized need to make the application of deep learning as easy as possible.

Currently there are no simple and easy ways to set hyper-parameters, specifically learning rate, batch size, momentum, and weight decay. A grid search or random search (Bergstra & Bengio, 2012) of the hyper-parameter space is computationally expensive and time-consuming. Yet training time and final performance are highly dependent on good choices. In addition, practitioners often choose one of the standard architectures (such as residual networks (He et al., 2016)) and the hyper-parameter files that are freely available in a deep learning framework's "model zoo" or from github.com, but these are often sub-optimal for the practitioner's data.

This report proposes several methodologies for finding optimal settings for several hyper-parameters. A comprehensive approach to all hyper-parameters is valuable because of the interdependence of all of these factors. Part 1 of this report examines learning rate, batch size, momentum, and weight decay, and Part 2 will examine the architecture, regularization, dataset, and task. The goal is to provide the practitioner with practical advice that saves time and effort, yet improves performance.

arXiv:1803.09820v2 [cs.LG] 24 Apr 2018

This approach is based on the well-known concept of the balance between underfitting and overfitting. Specifically, it consists of examining the training's test/validation loss for clues of underfitting and overfitting in order to strive for the optimal set of hyper-parameters (this report uses "test loss" and "validation loss" interchangeably; both refer to using validation data to find the error or accuracy produced by the network during training). This report also suggests paying close attention to these clues while using cyclical learning rates (Smith, 2017) and cyclical momentum.

The experiments discussed herein indicate that the learning rate, momentum, and regularization are tightly coupled and that their optimal values must be determined together.
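The cyclical learning rates mentioned above (Smith, 2017) take, in their simplest form, a triangular schedule: the learning rate ramps linearly from a lower bound to an upper bound over a fixed number of iterations and then ramps back down. The sketch below assumes that triangular policy and pairs it with a cyclical momentum that moves in the opposite direction (momentum falls while the learning rate rises). That pairing, the helper names `triangular_lr` and `cyclical_momentum`, and every numeric bound are illustrative assumptions, not values prescribed by this report.

```python
import math


def _triangle(iteration: int, step_size: int) -> float:
    """Position within the current cycle: 0 at the cycle ends, 1 at the midpoint."""
    if step_size <= 0:
        raise ValueError("step_size must be positive")
    cycle = math.floor(1 + iteration / (2 * step_size))
    x = abs(iteration / step_size - 2 * cycle + 1)
    return max(0.0, 1.0 - x)


def triangular_lr(iteration: int, step_size: int, base_lr: float, max_lr: float) -> float:
    """Rises linearly from base_lr to max_lr over step_size iterations, then falls back."""
    return base_lr + (max_lr - base_lr) * _triangle(iteration, step_size)


def cyclical_momentum(iteration: int, step_size: int, min_m: float, max_m: float) -> float:
    """Assumed to mirror the learning rate: highest when the learning rate is lowest."""
    return max_m - (max_m - min_m) * _triangle(iteration, step_size)


if __name__ == "__main__":
    # Hypothetical bounds, chosen only to show the shape of one cycle.
    for it in (0, 50, 100, 150, 200):
        print(it, triangular_lr(it, 100, 0.1, 1.0), cyclical_momentum(it, 100, 0.85, 0.95))
```

One full cycle spans `2 * step_size` iterations; the learning rate peaks at iteration `step_size`, exactly where the momentum reaches its minimum.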

Since this report is long, readers who want only the highlights can (1) look at every Figure and caption, (2) read the paragraphs that start with Remark, and (3) review the hyper-parameter checklist at the beginning of Section 5.

2 RELATED WORK

The topics discussed in this report are related to a great deal of the deep learning literature. See Goodfellow et al. (2016) for an introductory text on the field. Perhaps most related to this work is the book "Neural networks: tricks of the trade" (Orr & Müller, 2003), which contains several chapters with practical advice on hyper-parameters, such as Bengio (2012). Hence, this section only discusses a few of the most relevant papers.

This work builds on earlier work by the author. In particular, cyclical learning rates were introduced by Smith (2015) and later updated in Smith (2017). Section 4.1 provides updated experiments on super-convergence (Smith & Topin, 2017). The literature also discusses modifying the batch size instead of the learning rate, for example Smith et al. (2017).

Several recent papers discuss the use of large learning rates and small batch sizes, such as Jastrzebski et al. (2017a;b) and Xing et al. (2018). They demonstrate that the ratio of the learning rate to the batch size guides training. The recommendations in this report on the optimal setting of learning rates and batch sizes differ from those papers.

Smith & Le (2017) explore batch sizes and correlate the optimal batch size with the learning rate, the size of the dataset, and momentum. This report is more comprehensive and more practical in its focus. In addition, Section 4.2 recommends a larger batch size than that paper does.
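The coupling between learning rate, batch size, dataset size, and momentum can be made concrete with the SGD noise scale associated with Smith & Le (2017), g = ε/(1 − m) · (N/B − 1) ≈ εN/(B(1 − m)), where ε is the learning rate, N the number of training examples, B the batch size, and m the momentum coefficient. For fixed N and m, the approximation depends only on ε/B, which is the learning-rate-to-batch-size ratio discussed in the previous paragraph. The helpers below are a minimal sketch that assumes this form of the noise scale; the names `sgd_noise_scale` and `batch_size_for_noise_scale` are hypothetical.

```python
def sgd_noise_scale(lr: float, dataset_size: int, batch_size: int, momentum: float = 0.0) -> float:
    """Assumed noise scale g = lr / (1 - m) * (N / B - 1)."""
    if not 0 < batch_size <= dataset_size:
        raise ValueError("batch_size must be in (0, dataset_size]")
    if not 0.0 <= momentum < 1.0:
        raise ValueError("momentum must be in [0, 1)")
    return lr / (1.0 - momentum) * (dataset_size / batch_size - 1.0)


def batch_size_for_noise_scale(target: float, lr: float, dataset_size: int, momentum: float = 0.0) -> float:
    """Invert the formula above: the batch size that yields noise scale `target`."""
    if target <= 0 or lr <= 0:
        raise ValueError("target and lr must be positive")
    if not 0.0 <= momentum < 1.0:
        raise ValueError("momentum must be in [0, 1)")
    # N / B - 1 = g * (1 - m) / lr  =>  B = N / (1 + g * (1 - m) / lr)
    return dataset_size / (1.0 + target * (1.0 - momentum) / lr)


if __name__ == "__main__":
    # Doubling both the learning rate and the batch size keeps g roughly constant.
    print(sgd_noise_scale(0.1, 50000, 128, momentum=0.9))
    print(sgd_noise_scale(0.2, 50000, 256, momentum=0.9))
```

Under this model, raising the momentum from 0.9 to 0.99 multiplies the noise scale by ten, so a much larger batch would be needed to return to the same g.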

A recent paper questions the use of regularization by weight decay and dropout (Hernández-García & König, 2018). One of the findings of this report is that the total regularization needs to be in balance for a given dataset and architecture. Our experiments suggest that their perspective on regularization is limited: they only add regularization by data augmentation to replace the regularization by weight decay and dropout, without a full study of regularization.

There also exist approaches to learn optimal hyper-parameters by differentiating the gradient with respect to the hyper-parameters (for example, see Lorraine & Duvenaud (2018)). The approach in this report is simpler for the practitioner to perform.

3 THE UNREASONABLE EFFECTIVENESS OF VALIDATION/TEST LOSS

“Well begun is half done.” Aristotle

A good detective observes subtle clues that the less observant miss. The purpose of this Section is to draw your attention to the clues in the training process and provide guidance as to their meaning. Often-overlooked elements of the training process tell a story. By observing and understanding the clues available early during training, we can tune our architecture and hyper-parameters with short runs of a few epochs (an epoch is defined as one pass through the entire training data). In particular, by monitoring the validation/test loss early in training, enough information is available to tune the architecture and hyper-parameters, which eliminates the need to run complete grid or random searches.

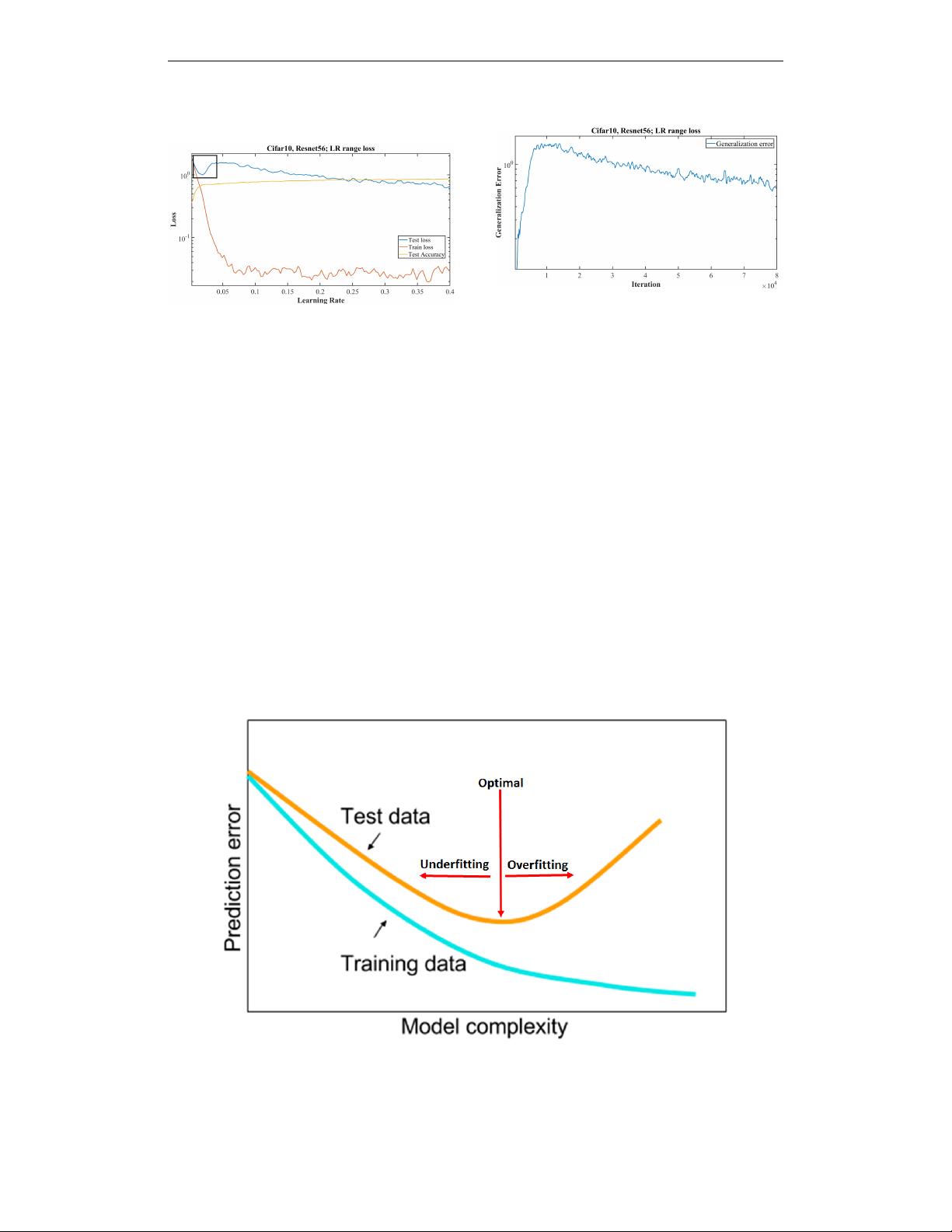

(a) Characteristic plot of training loss, validation accuracy, and validation loss.

(b) Characteristic plot of the generalization error, which is the validation/test loss minus the training loss.

Figure 1: Comparison of the training loss, validation accuracy, validation loss, and generalization error, illustrating that the test/validation loss contains additional information about the training process that is not visible in the test accuracy or training loss and is less clear in the generalization error. These runs are a learning rate range test with the resnet-56 architecture and Cifar-10 dataset.

Figure 1a shows plots of the training loss, validation accuracy, and validation loss for a learning rate range test of a residual network on the Cifar-10 dataset, used to find reasonable learning rates for training. In this situation, the test loss within the black box indicates signs of overfitting at learning rates of 0.01–0.04. This information is not present in the test accuracy or in the training loss curves. If we were to subtract the training loss from the test/validation loss (i.e., compute the generalization error), the information would also be present in the generalization error, but the generalization error is often less clear than the validation loss. This is an example where the test loss provides valuable information. We know that this architecture has the capacity to overfit and that, early in training, too small a learning rate will create overfitting.
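A learning rate range test like the one in Figure 1 can be sketched as a short run in which the learning rate increases linearly from a small value to a large one while the training and test losses are recorded; the generalization error is then the test loss minus the training loss, point by point. In this sketch the linear ramp is an assumption about the schedule, the helper names are hypothetical, and the loss values must come from your own training loop.

```python
import math
from typing import List, Sequence


def iterations_for_epochs(num_epochs: int, dataset_size: int, batch_size: int) -> int:
    """Iterations in num_epochs passes over the data (a final partial batch counts)."""
    return num_epochs * math.ceil(dataset_size / batch_size)


def range_test_lrs(min_lr: float, max_lr: float, num_iters: int) -> List[float]:
    """Learning rate for each iteration of a linear range test from min_lr to max_lr."""
    if num_iters < 2 or not 0 < min_lr < max_lr:
        raise ValueError("need num_iters >= 2 and 0 < min_lr < max_lr")
    step = (max_lr - min_lr) / (num_iters - 1)
    return [min_lr + i * step for i in range(num_iters)]


def generalization_error(test_losses: Sequence[float], train_losses: Sequence[float]) -> List[float]:
    """Test/validation loss minus training loss at each recorded point."""
    if len(test_losses) != len(train_losses):
        raise ValueError("loss curves must have the same length")
    return [te - tr for te, tr in zip(test_losses, train_losses)]
```

For example, a four-epoch range test on a 50,000-example training set with batch size 128 would call `range_test_lrs(min_lr, max_lr, iterations_for_epochs(4, 50000, 128))`, which yields 1,564 learning-rate values. The bounds are yours to choose.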

Remark 1. The test/validation loss is a good indicator of the network's convergence and should be examined for clues. In this report, the test/validation loss is used to provide insights on the training process and the final test accuracy is used for comparing performance.

Section 3.1 begins with a brief review of the underfitting and overfitting trade-off and demonstrates that the test loss early in training provides information on how to modify the hyper-parameters.

Figure 2: Pictorial explanation of the trade-off between underfitting and overfitting. Model complexity (the x axis) refers to the capacity or expressive power of the machine learning model. The figure shows the optimal capacity that falls between underfitting and overfitting.


3.1 A REVIEW OF THE UNDERFITTING AND OVERFITTING TRADE-OFF

Underfitting is when the machine learning model is unable to reduce the error for either the test or training set. The cause of underfitting is insufficient capacity of the machine learning model; that is, it is not powerful enough to fit the underlying complexities of the data distributions. Overfitting happens when the machine learning model is so powerful that it fits the training set too well and the generalization error increases. This underfitting and overfitting trade-off is represented in Figure 2, which implies that achieving a horizontal test loss can point the way to the optimal balance point. Similarly, examining the test loss during the training of a network can also point to the optimal balance of the hyper-parameters.
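Whether a test loss curve has reached its horizontal part can also be checked numerically. The sketch below fits a least-squares slope to the last few test-loss values and labels the tail as decreasing (a possible sign of underfitting), horizontal, or increasing (a possible sign of overfitting). The window size, the relative tolerance, and the function names are arbitrary assumptions. This is a heuristic aid for reading the curve, not a procedure from this report, and it is only meaningful after the initial rapid drop in loss.

```python
from statistics import mean
from typing import Sequence


def tail_slope(losses: Sequence[float], window: int) -> float:
    """Least-squares slope of the last `window` loss values, per evaluation step."""
    ys = list(losses[-window:])
    xs = list(range(len(ys)))
    x_bar, y_bar = mean(xs), mean(ys)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


def classify_test_loss(losses: Sequence[float], window: int = 5, rel_tol: float = 0.01) -> str:
    """Label the tail of a test-loss curve as 'decreasing', 'horizontal', or 'increasing'."""
    if window < 2 or len(losses) < window:
        raise ValueError("need window >= 2 and at least `window` loss values")
    slope = tail_slope(losses, window)
    scale = abs(mean(losses[-window:])) or 1.0  # guard against a zero mean
    relative = slope / scale
    if relative < -rel_tol:
        return "decreasing"  # still falling: possible underfitting
    if relative > rel_tol:
        return "increasing"  # rising: possible overfitting
    return "horizontal"      # flat: near the balance point
```

Normalizing the slope by the mean loss keeps the tolerance comparable across losses of different magnitudes.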

Remark 2. The takeaway is that achieving the horizontal part of the test loss is the goal of hyper-parameter tuning. Achieving this balance can be difficult with deep neural networks. Deep networks are very powerful, and they become more powerful with greater depth (i.e., more layers), greater width (i.e., more neurons or filters per layer), and the addition of skip connections to the architecture. Also, there are various forms of regularization, such as weight decay or dropout (Srivastava et al., 2014). One needs to vary important hyper-parameters and can use a variety of optimization methods, such as Nesterov or Adam (Kingma & Ba, 2014). It is well known that optimizing all of these elements to achieve the best performance on a given dataset is a challenge.
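To see where weight decay and momentum enter training, the sketch below writes out one step of SGD with momentum and L2 weight decay. It assumes the coupled formulation documented for PyTorch's `torch.optim.SGD` with zero dampening: weight decay is added to the gradient, the result feeds a momentum buffer, and an optional Nesterov look-ahead is applied. Other frameworks, and decoupled weight decay, differ. The pure-Python helper `sgd_momentum_step` is hypothetical, and its default values are illustrative only.

```python
from typing import List, Tuple


def sgd_momentum_step(
    params: List[float],
    grads: List[float],
    velocity: List[float],
    lr: float,
    momentum: float = 0.9,
    weight_decay: float = 5e-4,
    nesterov: bool = False,
) -> Tuple[List[float], List[float]]:
    """One SGD step with L2 weight decay and (optionally Nesterov) momentum.

    Returns the updated (params, velocity); the input lists are not modified.
    """
    if not (len(params) == len(grads) == len(velocity)):
        raise ValueError("params, grads, and velocity must have the same length")
    new_params, new_velocity = [], []
    for p, g, v in zip(params, grads, velocity):
        d = g + weight_decay * p  # weight decay folded into the gradient
        v = momentum * v + d      # momentum buffer (dampening = 0)
        step = d + momentum * v if nesterov else v
        new_params.append(p - lr * step)
        new_velocity.append(v)
    return new_params, new_velocity
```

Because the weight-decay term passes through the same learning rate and momentum buffer as the gradient, changing the learning rate or the momentum also changes the effective strength of weight decay. This is one way to see why these values are tightly coupled.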

An insight that inspired this Section is that signs of underfitting or overfitting in the test or validation loss early in the training process are useful for tuning the hyper-parameters. This section started with the quote "Well begun is half done" because substantial time can be saved by attending to the test loss early in training. For example, Figure 1a shows some overfitting within the black square that indicates a sub-optimal choice of hyper-parameters. If the hyper-parameters are set well at the beginning, they will perform well through the entire training process. In addition, if the hyper-parameters are set using only a few epochs, significant time savings are possible in the search for hyper-parameters. The test loss during the training process can be used to find the optimal network architecture and hyper-parameters without performing full training runs to compare final performance results.
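The idea of comparing hyper-parameter settings after only a few epochs can be sketched as follows. Given the per-epoch test losses of several short runs, rank the settings by the lowest test loss reached within the first few epochs, and flag runs whose loss was still dropping at the last epoch. Reducing a curve to one score plus one flag is a simplifying assumption: the report's approach is to inspect the shape of the test loss, which a single number cannot fully capture. The function name `rank_short_runs` and the configuration names in the test are placeholders.

```python
from typing import Dict, List, Tuple


def rank_short_runs(
    test_losses: Dict[str, List[float]],
    num_epochs: int,
    rel_tol: float = 0.01,
) -> List[Tuple[str, float, bool]]:
    """Rank configurations by the lowest test loss seen in the first num_epochs epochs.

    Each result is (name, best_loss, still_decreasing). still_decreasing is True when
    the last epoch improved on the previous one by more than rel_tol (relative),
    a possible early sign of underfitting.
    """
    if num_epochs < 2:
        raise ValueError("need at least two epochs to judge the trend")
    results = []
    for name, curve in test_losses.items():
        if len(curve) < num_epochs:
            raise ValueError(f"{name}: only {len(curve)} epochs recorded")
        window = curve[:num_epochs]
        prev, last = window[-2], window[-1]
        still_decreasing = (prev - last) > rel_tol * abs(prev)
        results.append((name, min(window), still_decreasing))
    return sorted(results, key=lambda r: r[1])
```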

The rest of this report discusses the early signs of underfitting and overfitting that are visible in the test loss. In addition, it discusses how adjustments to the hyper-parameters affect underfitting and overfitting, which is necessary in order to know how to adjust the hyper-parameters.

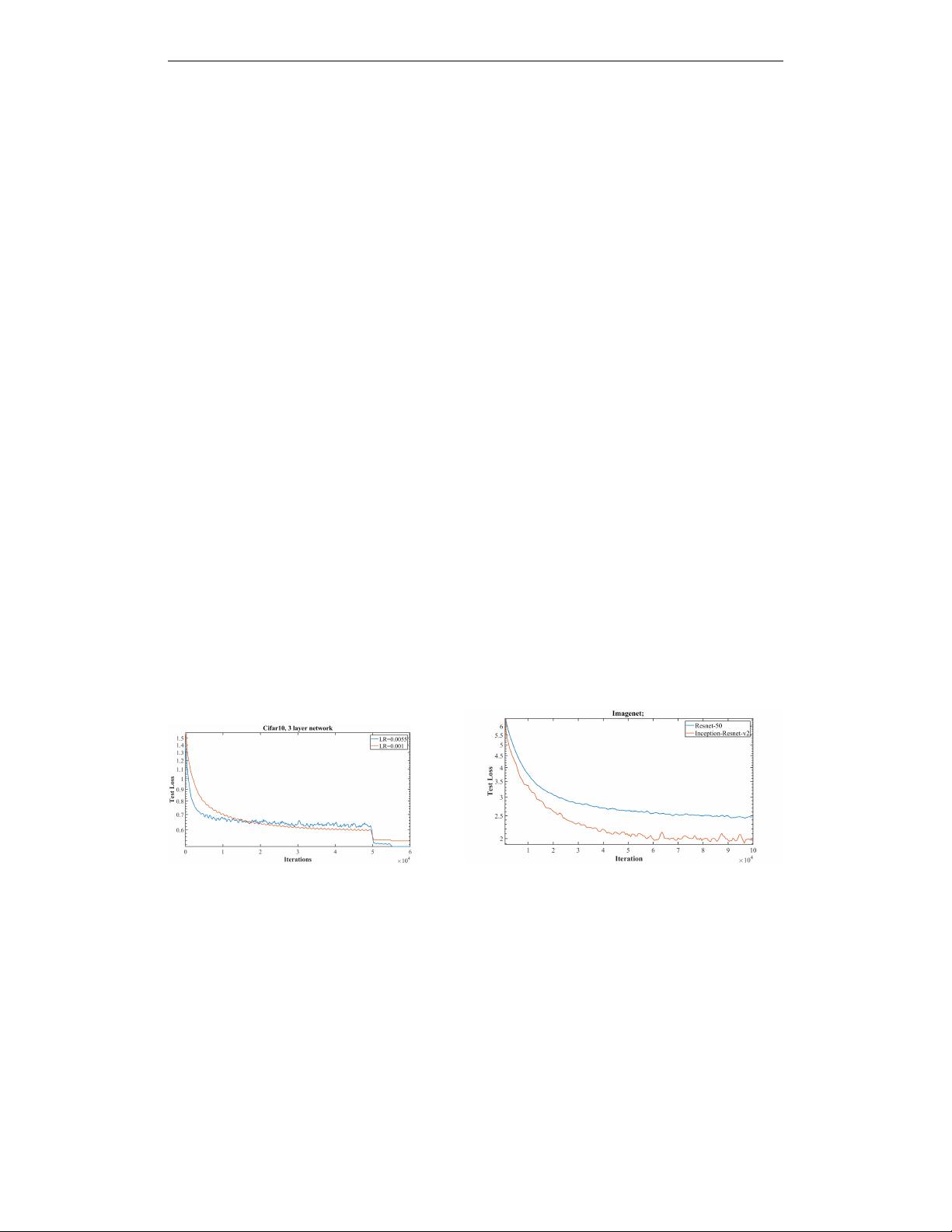

(a) Test loss for the Cifar-10 dataset with a shallow 3-layer network.

(b) Test loss for Imagenet with two networks: resnet-50 and inception-resnet-v2.

Figure 3: Underfitting is characterized by a continuously decreasing test loss, rather than a horizontal plateau. Underfitting is visible during training on two different datasets, Cifar-10 and Imagenet.

3.2 UNDERFITTING

Our first example is a shallow, 3-layer network on the Cifar-10 dataset. The red curve in Figure 3a, with a learning rate of 0.001, shows a decreasing test loss. This curve indicates underfitting because it continues to decrease, like the left side of the test loss curve in Figure 2. Increasing the learning rate moves the training from underfitting towards overfitting. The blue curve shows the test loss with a learning rate of 0.004. Note that the test loss decreases more rapidly during the initial iterations and is then horizontal. This is one of the early positive clues indicating that this curve's configuration will produce a better final accuracy than the other configuration, which it does.
