W. Zhao, Z. Zhang and L. Wang Engineering Applications of Artificial Intelligence 87 (2020) 103300

EAs have been presented recently, including biogeography-based optimization (BBO) (Simon, 2009), bacterial foraging optimization (BFO) (Passino, 2002), artificial algae algorithm (AAA) (Uymaz et al., 2015), bat algorithm (BA) (Yang and Hossein Gandomi, 2012), monkey king evolution (MKE) (Meng and Pan, 2016), and others (Punnathanam and Kotecha, 2016; Pan, 2012; Mehrabian and Lucas, 2006; Civicioglu, 2013).

Physics-based algorithms mimic physical laws of the universe. Simulated annealing (SA) (Kirkpatrick et al., 1983) is one of the best-known physics-based techniques. SA draws an analogy with annealing in thermodynamics, where a heated metal is cooled in a controlled way so that it crystallizes into a low-energy state. Recently, multiple new physics-inspired techniques have been developed, including gravitational search algorithm (GSA) (Rashedi et al., 2009), electromagnetism-like mechanism (EM) algorithm (Birbil and Fang, 2003), particle collision algorithm (PCA) (Sacco and De Oliveira, 2005), vortex search algorithm (VSA) (Doğan and Ölmez, 2015), water evaporation optimization (WEO) (Kaveh and Bakhshpoori, 2016), atom search optimization (ASO) (Zhao et al., 2019a), big bang–big crunch algorithm (BB-BC) (Genç et al., 2010), and others (Shah-Hosseini, 2011; Chuang and Jiang, 2007; Shah-Hosseini, 2009; Kaveh and Dadras, 2017; Kaveh and Talatahari, 2010; Zheng et al., 2010; Javidy et al., 2015; Mirjalili and Hashim, 2012; Zheng, 2015; Flores et al., 2011; Tamura and Yasuda, 2011; Rao et al., 2012; Zarand et al., 2002; Shen and Li, 2009; Kripka and Kripka, 2008; Patel and Savsani, 2015; Eskandar et al., 2012; Moghaddam et al., 2012).
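The annealing analogy can be made concrete with a minimal SA loop. The sketch below is illustrative only and is not taken from this paper: the Metropolis-style acceptance rule, the geometric cooling schedule, and the parameter names and defaults (`t0`, `cooling`, `steps`, `step_size`) are assumptions chosen for a one-dimensional toy problem.

```python
import math
import random


def simulated_annealing(f, x0, t0=1.0, cooling=0.95, steps=2000, step_size=0.5, seed=0):
    """Minimize a 1-D function f starting from x0 (illustrative sketch)."""
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best_x, best_f = x, fx
    t = t0
    for _ in range(steps):
        # Propose a random neighbor of the current solution.
        cand = x + rng.uniform(-step_size, step_size)
        fc = f(cand)
        delta = fc - fx
        # Metropolis-style rule: always accept improvements; accept a worse
        # move with probability exp(-delta / t), which shrinks as t cools.
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x, fx = cand, fc
            if fx < best_f:
                best_x, best_f = x, fx
        # Geometric cooling, floored so that t never reaches zero.
        t = max(t * cooling, 1e-12)
    return best_x, best_f


best_x, best_f = simulated_annealing(lambda x: (x - 3.0) ** 2, x0=-5.0)
```

Accepting some uphill moves while the temperature is high lets the search leave poor regions; as the temperature falls, the loop behaves more and more like greedy descent.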

Swarm-based algorithms simulate the social behaviors of species, such as self-organization and division of labor (Beni and Wang, 1993; Ab Wahab et al., 2015). Two outstanding examples are particle swarm optimization (PSO) (Kennedy and Eberhart, 1995) and ant colony optimization (ACO) (Dorigo et al., 1996). PSO, inspired by bird flocking, updates each agent in the population using the best position that agent has found so far and the best position found by the whole swarm. ACO is inspired by the foraging behavior of ant colonies: ants search for the most effective route from their nest to a food source by following the intensity of pheromones, which evaporate over time. Other examples of swarm-inspired algorithms include glowworm swarm optimization (GSO) (Krishnanand and Ghose, 2009), grey wolf optimization (GWO) (Mirjalili et al., 2014), artificial ecosystem-based optimization (AEO) (Zhao et al., 2019b), shark smell optimization (SSO) (Abedinia et al., 2014), firefly algorithm (FA) (Yang, 2010), supply–demand-based optimization (SDO) (Zhao et al., 2019c), spotted hyena optimization (SHO) (Gaurav and Vijay, 2017), and so on (Yang and Deb, 2009; Oftadeh et al., 2010; Kiran, 2015; Mohamed et al., 2017; Cuevas et al., 2013; Askarzadeh, 2014; Saremi et al., 2017; Akay and Karaboga, 2012a,b; Mirjalili, 2016; Kaveh and Farhoudi, 2013; Yang, 2010; Mucherino and Seref, 2007; Gandomi and Alavi, 2012).
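The PSO update described above, in which each agent is pulled toward its own best position and toward the swarm's best position, can be sketched as follows. This is an illustration under stated assumptions, not code from this paper: it uses the common inertia-weight form of the velocity update (the inertia weight `w` was introduced after the 1995 paper), and the coefficients `w`, `c1`, `c2` and the clipping to the search box are illustrative choices.

```python
import numpy as np


def pso(f, dim, bounds, n_particles=30, iters=200, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize f over a box with a basic global-best PSO (inertia-weight form)."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    x = rng.uniform(lo, hi, size=(n_particles, dim))  # particle positions
    v = np.zeros_like(x)                              # particle velocities
    pbest = x.copy()                                  # each particle's best position
    pbest_f = np.apply_along_axis(f, 1, x)            # fitness at those positions
    g = pbest[np.argmin(pbest_f)].copy()              # best position of the swarm
    for _ in range(iters):
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        # Inertia + pull toward personal best + pull toward global best.
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (g - x)
        x = np.clip(x + v, lo, hi)
        fx = np.apply_along_axis(f, 1, x)
        # Update personal bests where the new position is better.
        improved = fx < pbest_f
        pbest[improved] = x[improved]
        pbest_f[improved] = fx[improved]
        g = pbest[np.argmin(pbest_f)].copy()
    return g, pbest_f.min()


# Usage: minimize the 5-D sphere function sum(z_i^2).
best_pos, best_val = pso(lambda z: float(np.sum(z ** 2)), dim=5, bounds=(-10.0, 10.0))
```

The personal-best term keeps each agent anchored to what it has already found, while the global-best term spreads the swarm's best discovery to every agent.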

It is worth mentioning that other recently developed metaheuristics are motivated by human social behavior and ideology. Some of the best-known ones include parliamentary optimization algorithm (POA) (Borji, 2007), artificial human optimization (AHO) (Gajawada, 2016), continuous opinion dynamics optimization (CODO) (Kaur et al., 2013), league championship algorithm (LCA) (Kashan, 2009), social group optimization (SGO) (Satapathy and Naik, 2016), ideology algorithm (IA) (Huan et al., 2016), and so on (Kuo and Lin, 2013; Moosavian and Roodsari, 2014; Kumar et al., 2018; Xu et al., 2010; Ray and Liew, 2003).

Swarm-based algorithms have some unique features. Historical information about the swarm is stored over subsequent iterations, giving every agent a basis for updating its position through interaction rules. In general, swarm-based techniques share two specific behaviors, exploration and exploitation (Alba and Dorronsoro, 2005; Lynn and Suganthan, 2015). Exploration searches a wide region of the variable space for promising solutions that are not neighbors of the current solution, and this search should be as extensive and random as possible. This behavior generally helps the algorithm escape local optima. Exploitation confines the search to a small region found during exploration in order to refine the solution; essentially, it implements a local search in a promising area. Owing to these two behaviors, swarm-based algorithms have advantages over the other two types of algorithms (evolutionary and physics-based).
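The division of labor between exploration and exploitation can be illustrated with a two-phase random search on a multimodal function. This hypothetical sketch is neither a swarm algorithm nor part of MRFO; the Rastrigin test function, the sample counts, and the neighborhood radius are assumptions chosen only to make the two behaviors visible.

```python
import math
import random


def rastrigin(x):
    """1-D Rastrigin function: global minimum 0 at x = 0, many local minima."""
    return 10.0 + x * x - 10.0 * math.cos(2.0 * math.pi * x)


def explore(f, lo, hi, n, rng):
    """Exploration: sample the whole interval at random, keep the best point."""
    samples = [rng.uniform(lo, hi) for _ in range(n)]
    return min(samples, key=f)


def exploit(f, x, radius, n, rng):
    """Exploitation: greedy local search confined to a small neighborhood."""
    best, best_f = x, f(x)
    for _ in range(n):
        cand = best + rng.uniform(-radius, radius)
        fc = f(cand)
        if fc < best_f:  # only improvements are accepted
            best, best_f = cand, fc
    return best


rng = random.Random(1)
x_explore = explore(rastrigin, -5.12, 5.12, 1000, rng)     # find a promising basin
x_exploit = exploit(rastrigin, x_explore, 0.05, 500, rng)  # refine inside it
```

The uniform samples provide the wide, random coverage needed to land in the basin of the global minimum, while the small-radius search only refines whichever basin exploration handed it; on its own it cannot escape a local optimum.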

Some might ask why new optimization algorithms are still being developed despite the many existing ones. The answer lies in the No Free Lunch theorem for optimization (Wolpert and Macready, 1997), which states that no optimizer performs best on all optimization problems. Developing an effective swarm-inspired optimizer to solve specific real-world problems therefore motivates this work.

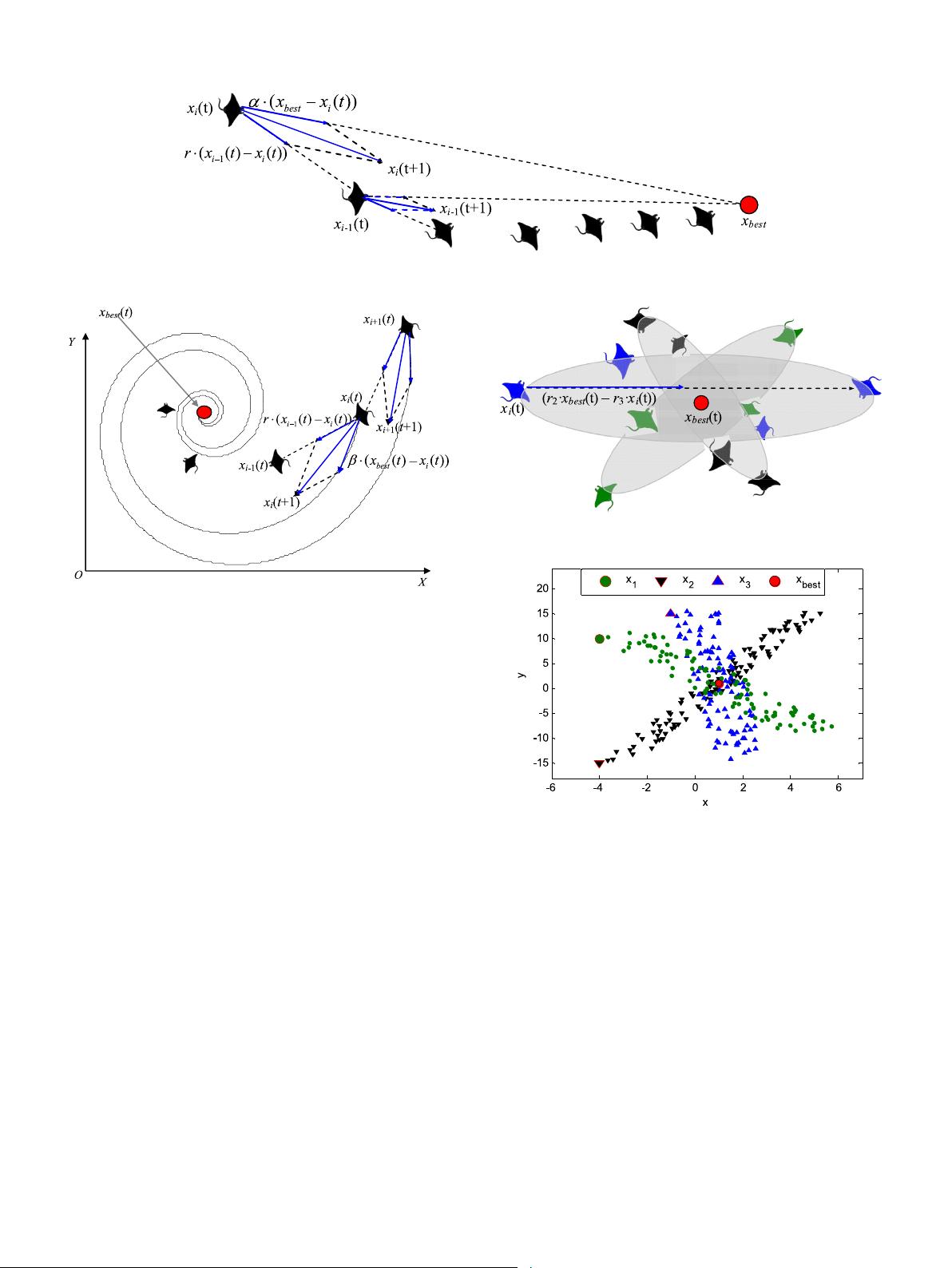

This paper proposes a new metaheuristic algorithm, named manta ray foraging optimization (MRFO), which simulates the foraging behaviors of manta rays. The algorithm has three foraging operators: chain foraging, cyclone foraging, and somersault foraging. The performance of MRFO is tested on 31 test functions and 8 engineering problems. The test results show that the proposed method significantly outperforms the well-known metaheuristics it is compared with.

This study is structured as follows. Section 2 introduces the MRFO algorithm and the concepts behind it in detail. Sections 3 and 4 employ 31 mathematical optimization problems and 8 real-world engineering problems, respectively, to check the validity of MRFO. Finally, Section 5 gives conclusions and suggests future research directions.

2. Manta Ray Foraging Optimization (MRFO)

2.1. Inspiration

Manta rays are remarkable creatures, although they appear fearsome. They are among the largest known marine creatures. Manta rays have a body flattened from top to bottom and a pair of pectoral fins, with which they swim as elegantly as birds fly. They also have a pair of cephalic lobes that extend in front of their large, terminal mouths. Fig. 1(A), provided by Swanson Chan on Unsplash, depicts a foraging manta ray, and Fig. 1(B) shows the structure of a manta ray. Lacking sharp teeth, manta rays feed on plankton, made up mostly of microscopic animals, filtered from the water. When foraging, they funnel water and prey into their mouths using their horn-shaped cephalic lobes; the prey is then filtered from the water by modified gill rakers (Dewar et al., 2008). Manta rays can be divided into two distinct species. One is the reef manta ray (Manta alfredi), living in the Indian Ocean and the western and South Pacific, which can reach 5.5 m in width. The other is the giant manta ray (Manta birostris), found throughout tropical, subtropical, and warm temperate oceans, which can reach 7 m in width. Manta rays have existed for about 5 million years. Their average life span is 20 years, but many never reach this age because they are hunted by fishers (Miller and Klimovich, 2016).

Manta rays eat a large amount of plankton every day; an adult manta ray can eat 5 kg of plankton daily. Oceans are believed to be the richest source of plankton. However, plankton is neither evenly dispersed nor regularly concentrated in fixed areas; its concentrations form and shift with the ebb and flow of tides and the change of seasons. Interestingly, manta rays are consistently good at finding abundant plankton. The most intriguing thing about manta rays is their foraging behavior. They may travel alone or in groups of up to 50, but foraging is often observed in groups. These creatures have evolved a variety of remarkable and intelligent foraging strategies.

The first foraging strategy is chain foraging (Johnna, 2016). When 50 or more manta rays start foraging, they line up one behind another, forming an orderly line. Smaller male manta rays piggyback on females, swimming on top of their backs to match the beats of the female's pectoral fins. Consequently, plankton missed by the manta rays in front is scooped up by those behind them. By cooperating in this way, they can funnel the greatest amount of plankton into their gills and increase their food rewards.
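The line formation in chain foraging, where the leading ray heads for the food and each following ray tracks the one in front of it, can be pictured with a toy one-dimensional model. This is a hypothetical simplification for intuition only, not MRFO's chain-foraging operator; the function name `chain_step`, the single random step fraction `r`, and the fixed food position are all assumptions.

```python
import random


def chain_step(positions, food, rng):
    """One step of a toy 1-D 'chain' model (hypothetical simplification).

    The first ray moves toward the food position; every later ray moves
    toward the position the ray in front of it held at the start of the step.
    """
    new_positions = []
    for i, x in enumerate(positions):
        target = food if i == 0 else positions[i - 1]
        r = rng.random()  # random step fraction in [0, 1)
        new_positions.append(x + r * (target - x))
    return new_positions


rng = random.Random(42)
food = 2.0
rays = [rng.uniform(-10.0, 10.0) for _ in range(5)]
for _ in range(200):
    rays = chain_step(rays, food, rng)
```

Because each follower only moves toward its predecessor, the whole line converges on the food source one link at a time rather than all at once.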
