Point-Based Multi-View Stereo Network

Rui Chen$^{1,3*}$, Songfang Han$^{2,3*}$, Jing Xu$^{1}$, Hao Su$^{3}$

$^{1}$Tsinghua University

$^{2}$The Hong Kong University of Science and Technology

$^{3}$University of California, San Diego

chenr17@mails.tsinghua.edu.cn, shanaf@connect.ust.hk, jingxu@tsinghua.edu.cn, haosu@eng.ucsd.edu

[Figure 1 panels: coarse prediction, refined prediction, and final prediction, each drawn against the GT surface; PointFlow with dynamic feature fetching, shown before flow and after flow.]

Figure 1: Point-MVSNet performs multi-view stereo reconstruction in a coarse-to-fine fashion, learning to predict the 3D flow of each point to the ground-truth surface based on geometry priors and 2D image appearance cues dynamically fetched from multi-view images and regress accurate and dense point clouds iteratively.

Abstract

We introduce Point-MVSNet, a novel point-based deep framework for multi-view stereo (MVS). Distinct from existing cost volume approaches, our method directly processes the target scene as point clouds. More specifically, our method predicts the depth in a coarse-to-fine manner. We first generate a coarse depth map, convert it into a point cloud and refine the point cloud iteratively by estimating the residual between the depth of the current iteration and that of the ground truth. Our network leverages 3D geometry priors and 2D texture information jointly and effectively by fusing them into a feature-augmented point cloud, and processes the point cloud to estimate the 3D flow for each point. This point-based architecture allows higher accuracy, more computational efficiency and more flexibility than cost-volume-based counterparts. Experimental results show that our approach achieves a significant improvement in reconstruction quality compared with state-of-the-art methods on the DTU and the Tanks and Temples datasets. Our source code and trained models are available at https://github.com/callmeray/PointMVSNet.

$^{*}$Equal contribution.

1. Introduction

Recent learning-based multi-view stereo (MVS) methods [12, 29, 10] have shown great success compared with their traditional counterparts, as learning-based approaches are able to learn to take advantage of scene global semantic information, including object materials, specularity, and environmental illumination, to get more robust matching and more complete reconstruction. All these approaches apply dense multi-scale 3D CNNs to predict the depth map or voxel occupancy. However, 3D CNNs require memory that grows cubically with the model resolution, which can be prohibitive to achieving optimal performance. While Maxim et al. [24] addressed this problem by progressively generating an Octree structure, the quantization artifacts brought by grid partitioning still remain, and errors may accumulate since the tree is generated layer by layer.
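To make the cubic growth concrete, the following is a minimal sketch that estimates the memory of a dense cubic feature volume. The channel count (32), the float32 storage, and the function name `dense_volume_bytes` are illustrative assumptions, not values taken from any of the cited networks.

```python
def dense_volume_bytes(resolution: int, channels: int = 32,
                       bytes_per_value: int = 4) -> int:
    """Memory of a dense cubic feature volume with `resolution` cells per side.

    `channels` and `bytes_per_value` are hypothetical defaults (32 float32
    channels), chosen only to illustrate the scaling behaviour.
    """
    return resolution ** 3 * channels * bytes_per_value


for r in (64, 128, 256, 512):
    gib = dense_volume_bytes(r) / 2 ** 30
    print(f"{r:>4}^3 cells: {gib:8.3f} GiB")
```

Doubling the resolution along each axis multiplies the footprint by eight, regardless of the assumed channel count.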

arXiv:1908.04422v1 [cs.CV] 12 Aug 2019

In this work, we propose a novel point cloud multi-view stereo network, where the target scene is directly processed as a point cloud, a more efficient representation, particularly when the 3D resolution is high. Our framework is composed of two steps: first, in order to carve out the approximate object surface from the whole scene, an initial coarse depth map is generated by a relatively small 3D cost volume and then converted to a point cloud. Subsequently, our novel PointFlow module is applied to iteratively regress accurate and dense point clouds from the initial point cloud. Similar to ResNet [8], we explicitly formulate the PointFlow to predict the residual between the depth of the current iteration and that of the ground truth. The 3D flow is estimated based on geometry priors inferred from the predicted point cloud and the 2D image appearance cues dynamically fetched from multi-view input images (Figure 1).
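The residual formulation can be sketched as a simple update loop: each iteration predicts a correction and adds it to the current depth. This is only an illustration of the control flow, not the PointFlow network itself. The names `refine_depth` and `toy_predictor` are hypothetical, and the toy predictor reads the ground truth directly, which a real network cannot do at inference time.

```python
from typing import Callable, List


def refine_depth(coarse: List[float],
                 predict_residual: Callable[[List[float]], List[float]],
                 num_iterations: int) -> List[float]:
    """Iteratively add a predicted residual to the current depth estimate."""
    depth = list(coarse)
    for _ in range(num_iterations):
        residual = predict_residual(depth)
        depth = [d + r for d, r in zip(depth, residual)]
    return depth


# Toy stand-in for a learned module (assumption): it recovers half of the
# remaining error per call by peeking at a made-up ground truth.
ground_truth = [2.0, 3.5, 5.0]


def toy_predictor(depth: List[float]) -> List[float]:
    return [0.5 * (g - d) for g, d in zip(ground_truth, depth)]


coarse = [1.0, 4.5, 5.0]
refined = refine_depth(coarse, toy_predictor, num_iterations=3)
print(refined)
```

Because each step only has to explain the remaining error, the estimate moves progressively closer to the target, which is the motivation for predicting residuals rather than absolute depth.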

We find that our Point-based Multi-view Stereo Network (Point-MVSNet) framework enjoys advantages in accuracy, efficiency, and flexibility when compared with previous MVS methods that are built upon a predefined 3D volume with a fixed resolution to aggregate information from views. Our method adaptively samples potential surface points in the 3D space. It keeps the continuity of the surface structure naturally, which is necessary for high-precision reconstruction. Furthermore, because our network only processes valid information near the object surface instead of the whole 3D space, as is the case in 3D CNNs, the computation is much more efficient. Lastly, the adaptive refinement scheme allows us to first peek at the scene at coarse resolution and then densify the reconstructed point cloud only in the region of interest. For scenarios such as interaction-oriented robot vision, this flexibility would save computational power.

Our method achieves state-of-the-art performance on standard multi-view stereo benchmarks among learning-based methods, including DTU [1] and Tanks and Temples [15]. Compared with previous state-of-the-art, our method produces better results in terms of both completeness and overall quality. Besides, we show potential applications of our proposed method, such as foveated depth inference.

2. Related work

Multi-view Stereo Reconstruction

MVS is a classical problem that had been extensively studied before the rise of deep learning. A number of 3D representations are adopted, including volumes [26, 9], deformation models [3, 31], and patches [5], which are iteratively updated through multi-view photometric consistency and regularization optimization. Our iterative refinement procedure shares a similar idea with these classical solutions by updating the depth map iteratively. However, our learning-based algorithm achieves improved robustness to input image corruption and avoids tedious manual hyper-parameter tuning.

Learning-based MVS

Inspired by the recent success of deep learning in image recognition tasks, researchers began to apply learning techniques to stereo reconstruction tasks for better patch representation and matching [7, 22, 16]. Although these methods, in which only 2D networks are used, have made a great improvement on stereo tasks, it is difficult to extend them to multi-view stereo tasks, and their performance is limited in challenging scenes due to the lack of contextual geometry knowledge. Concurrently, 3D cost volume regularization approaches have been proposed [14, 12, 13], where a 3D cost volume is built either in the camera frustum or the scene. Next, the 2D image features of multi-views are warped in the cost volume, so that 3D CNNs can be applied to it. The key advantage of 3D cost volume is that the 3D geometry of the scene can be captured by the network explicitly, and the photometric matching can be performed in 3D space, alleviating the influence of image distortion caused by perspective transformation and potential occlusions, which makes these methods achieve better results than 2D learning-based methods. Instead of using voxel grids, in this paper we propose to use a point-based network for MVS tasks to take advantage of 3D geometry learning without being burdened by the inefficiency found in 3D CNN computation.

High-Resolution MVS

High-resolution MVS is critical to real applications such as robot manipulation and augmented reality. Traditional methods [17, 5, 18] generate dense 3D patches by expanding from confident matching key-points repeatedly, which is potentially time-consuming. These methods are also sensitive to noise and change of viewpoint owing to the usage of hand-crafted features. Recent learning methods try to ease memory consumption by advanced space partitioning [21, 27, 24]. However, most of these methods construct a fixed cost volume representation for the whole scene, lacking flexibility. In our work, we use point clouds as the representation of the scene, which is more flexible and enables us to approach the accurate position progressively.

Point-based 3D Learning

Recently, a new type of deep network architecture has been proposed in [19, 20], which is able to process point clouds directly without converting them to volumetric grids. Compared with voxel-based methods, this kind of architecture concentrates on the point cloud data and saves unnecessary computation. Also, the continuity of space is preserved during the process. While PointNets have shown significant performance and efficiency improvement in various 3D understanding tasks, such as object classification and detection [20], it remains underexplored how this architecture can be used for the MVS task, where the 3D scene is unknown to the network. In this paper, we propose the PointFlow module, which estimates the 3D flow based on joint 2D-3D features of point hypotheses.

3. Method

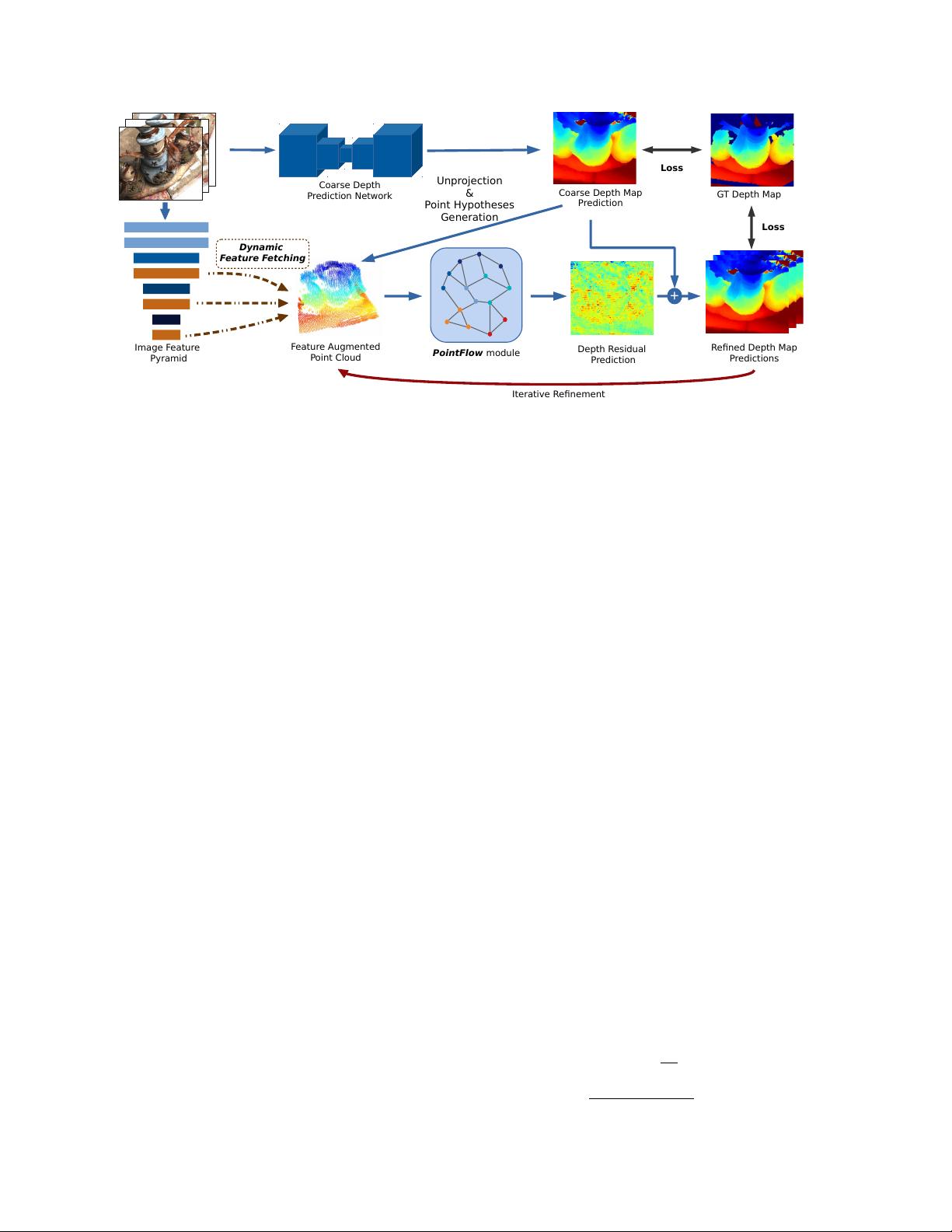

Figure 2: Overview of Point-MVSNet architecture. A coarse depth map is first predicted with low GPU memory and computation cost and then unprojected to a point cloud along with hypothesized points. For each point, the feature is fetched from the multi-view image feature pyramid dynamically. The PointFlow module uses the feature augmented point cloud for depth residual prediction, and the depth map is refined iteratively.

[Figure 2 diagram labels: Image Feature Pyramid; Coarse Depth Prediction Network; Coarse Depth Map Prediction; Unprojection & Point Hypotheses Generation; Dynamic Feature Fetching; Feature Augmented Point Cloud; PointFlow module; Depth Residual Prediction; Iterative Refinement; Refined Depth Map Predictions; GT Depth Map; Loss.]

This section describes the detailed network architecture of Point-MVSNet (Figure 2). Our method can be divided into two steps: coarse depth prediction and iterative depth refinement. Let $I_0$ denote the reference image and $\{I_i\}_{i=1}^{N}$ denote a set of its neighbouring source images. We first generate a coarse depth map for $I_0$ (subsection 3.1). Since the resolution is low, the existing volumetric MVS method has sufficient efficiency and can be used. Second, we introduce the 2D-3D feature lifting (subsection 3.2), which associates the 2D image information with 3D geometry priors. Then we propose our novel PointFlow module (subsection 3.3) to iteratively refine the input depth map to higher resolution with improved accuracy.
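The unprojection step named in the Figure 2 overview, turning a depth map into a point cloud, can be sketched as follows. This is a minimal illustration that assumes a pinhole camera without skew and returns points in the camera frame; moving them to world space would additionally need the camera extrinsics. The function name and the toy intrinsics are hypothetical, and point hypothesis generation is not covered.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def unproject_depth_map(depth: List[List[float]],
                        fx: float, fy: float,
                        cx: float, cy: float) -> List[Point3D]:
    """Lift each pixel (u, v) with depth d to a camera-frame 3D point.

    Uses the pinhole relation X = d * K^{-1} [u, v, 1]^T. Pixels with a
    non-positive depth are treated as invalid and skipped (an assumption).
    """
    points = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if d <= 0.0:
                continue
            points.append(((u - cx) * d / fx, (v - cy) * d / fy, d))
    return points


# Toy 2x2 depth map with one invalid pixel and made-up intrinsics.
toy_depth = [[2.0, 2.0],
             [0.0, 4.0]]
cloud = unproject_depth_map(toy_depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(cloud)
```

Each valid pixel yields exactly one 3D point, so the density of the resulting cloud follows the resolution of the depth map it came from.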

3.1. Coarse depth prediction

Recent learning-based MVS methods [12, 29, 11] achieve state-of-the-art performance using multi-scale 3D CNNs for cost volume regularization. However, this step can be extremely memory-intensive, since the memory requirement grows cubically with the cost volume resolution. Taking memory and time into consideration, we use the recently proposed MVSNet [29] to predict a relatively low-resolution cost volume.

Given the images and corresponding camera parameters, MVSNet [29] builds a 3D cost volume upon the reference camera frustum. Then the initial depth map for the reference view is regressed through multi-scale 3D CNNs and the soft argmin [15] operation. In MVSNet, feature maps are downsampled to $1/4$ of the original input image in each dimension and the number of virtual depth planes is $256$ for both training and evaluation. On the other hand, in our coarse depth estimation network, the cost volume is constructed with feature maps of $1/8$ the size of the reference image, containing $48$ or $96$ virtual depth planes for training and evaluation, respectively. Therefore, our memory usage of this 3D feature volume is about $1/20$ of that in MVSNet.
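The stated ratio can be checked with a few lines of arithmetic. The sketch below counts cost-volume cells per input-image pixel from the downsampling factors and depth-plane counts given above. It assumes, which the text does not state, that both volumes carry the same number of feature channels; `relative_volume_size` is an illustrative name.

```python
from fractions import Fraction


def relative_volume_size(downsample: int, depth_planes: int) -> Fraction:
    """Cost-volume cells per input pixel: depth planes / (downsample^2)."""
    return Fraction(depth_planes, downsample * downsample)


mvsnet = relative_volume_size(downsample=4, depth_planes=256)
coarse_train = relative_volume_size(downsample=8, depth_planes=48)
coarse_eval = relative_volume_size(downsample=8, depth_planes=96)

print("training ratio:  ", coarse_train / mvsnet)
print("evaluation ratio:", coarse_eval / mvsnet)
```

Under that assumption, the 48-plane training setting gives 3/64 (roughly 1/21), in line with the "about 1/20" figure, while the 96-plane evaluation setting gives 3/32 (roughly 1/11).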

3.2. 2D-3D feature lifting

Image Feature Pyramid

Learning-based image features have been shown to be critical to boosting dense pixel correspondence quality [29, 23]. In order to endow points with a larger receptive field of contextual information at multiple scales, we construct a 3-scale feature pyramid. 2D convolutional networks with stride $2$ are applied to downsample the feature map, and the last layer before each downsampling is extracted to construct the final feature pyramid $F_i = [F_i^1, F_i^2, F_i^3]$ for image $I_i$. Similar to common MVS methods [29, 11], feature pyramids are shared among all input images.

Dynamic Feature Fetching

The point feature used in our network is composed of the fetched multi-view image feature variance together with the normalized 3D coordinates in world space $X_p$. We will introduce them separately.

Image appearance features for each 3D point can be fetched from the multi-view feature maps using a differentiable unprojection given corresponding camera parameters. Note that features $F_i^1, F_i^2, F_i^3$ are at different image resolutions, thus the camera intrinsic matrix should be scaled at each level of the feature maps for correct feature warping. Similar to MVSNet [29], we keep a variance-based cost metric, i.e. the feature variance among different views, to aggregate features warped from an arbitrary number of views. For pyramid feature at level $j$, the variance metric for $N$ views is defined as below:

$$C^j = \frac{\sum_{i=1}^{N} \left( F_i^j - \overline{F^j} \right)^2}{N}, \quad (j = 1, 2, 3) \tag{1}$$
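The fetching and aggregation steps can be sketched in plain Python: scale the intrinsics to a pyramid level, project a 3D point into that feature map, sample it, and take the per-channel variance across views as in Eq. (1). This is an illustrative sketch under several assumptions: a pinhole camera with points already in the camera frame, intrinsics that scale linearly with resolution (ignoring pixel-centre offsets), border clamping in the sampler, and made-up intrinsics and scale factors. All function names are hypothetical and not taken from the released code.

```python
from typing import List, Sequence, Tuple

Intrinsics = Tuple[float, float, float, float]  # (fx, fy, cx, cy)


def scale_intrinsics(k: Intrinsics, scale: float) -> Intrinsics:
    """Rescale intrinsics for a feature map `scale` times the image size."""
    fx, fy, cx, cy = k
    return fx * scale, fy * scale, cx * scale, cy * scale


def project(point: Sequence[float], k: Intrinsics) -> Tuple[float, float]:
    """Project a camera-frame point (x, y, z) to pixel coordinates (u, v)."""
    x, y, z = point
    fx, fy, cx, cy = k
    return fx * x / z + cx, fy * y / z + cy


def bilinear_fetch(feature_map: List[List[float]], u: float, v: float) -> float:
    """Sample a single-channel map at (u, v), clamping to the border."""
    h, w = len(feature_map), len(feature_map[0])
    u = min(max(u, 0.0), w - 1.0)
    v = min(max(v, 0.0), h - 1.0)
    u0, v0 = int(u), int(v)
    u1, v1 = min(u0 + 1, w - 1), min(v0 + 1, h - 1)
    au, av = u - u0, v - v0
    top = (1 - au) * feature_map[v0][u0] + au * feature_map[v0][u1]
    bottom = (1 - au) * feature_map[v1][u0] + au * feature_map[v1][u1]
    return (1 - av) * top + av * bottom


def variance_cost(view_features: List[List[float]]) -> List[float]:
    """Eq. (1): per-channel variance of one point's features over N views."""
    n = len(view_features)
    channels = len(view_features[0])
    mean = [sum(f[c] for f in view_features) / n for c in range(channels)]
    return [sum((f[c] - mean[c]) ** 2 for f in view_features) / n
            for c in range(channels)]


full_res = (800.0, 800.0, 320.0, 240.0)      # hypothetical intrinsics
half_res = scale_intrinsics(full_res, 0.5)   # hypothetical pyramid level
print(project((0.5, -0.25, 2.0), half_res))
print(bilinear_fetch([[0.0, 1.0], [2.0, 3.0]], 0.5, 0.5))
print(variance_cost([[1.0, 2.0], [3.0, 2.0], [5.0, 2.0], [7.0, 2.0]]))
```

Because the variance is computed per channel over however many views are supplied, the aggregated feature has the same size for any number of input views, which is what lets the metric handle an arbitrary view count.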
