Deep Image Retrieval: Learning global representations for image search

Albert Gordo, Jon Almazán, Jerome Revaud, and Diane Larlus

Computer Vision Group, Xerox Research Center Europe

firstname.lastname@xrce.xerox.com

Abstract. We propose a novel approach for instance-level image retrieval. It produces a global and compact fixed-length representation for each image by aggregating many region-wise descriptors. In contrast to previous works employing pre-trained deep networks as a black box to produce features, our method leverages a deep architecture trained for the specific task of image retrieval. Our contribution is twofold: (i) we leverage a ranking framework to learn convolution and projection weights that are used to build the region features; and (ii) we employ a region proposal network to learn which regions should be pooled to form the final global descriptor. We show that using clean training data is key to the success of our approach. To that aim, we use a large scale but noisy landmark dataset and develop an automatic cleaning approach. The proposed architecture produces a global image representation in a single forward pass. Our approach significantly outperforms previous approaches based on global descriptors on standard datasets. It even surpasses most prior works based on costly local descriptor indexing and spatial verification¹.

Keywords: deep learning, instance-level retrieval

1 Introduction

Since their ground-breaking results on image classification in recent ImageNet challenges [1,2], deep learning based methods have shined in many other computer vision tasks, including object detection [3] and semantic segmentation [4]. Recently, they also rekindled highly semantic tasks such as image captioning [5,6] and visual question answering [7]. However, for some problems such as instance-level image retrieval, deep learning methods have led to rather underwhelming results. In fact, for most image retrieval benchmarks, the state of the art is currently held by conventional methods relying on local descriptor matching and re-ranking with elaborate spatial verification [8,9,10,11].

Recent works leveraging deep architectures for image retrieval are mostly limited to using a pre-trained network as local feature extractor. Most efforts have been devoted towards designing image representations suitable for image retrieval on top of those features. This is challenging because representations for retrieval need to be compact while retaining most of the fine details of the images. Contributions have been made to allow deep architectures to accurately represent input images of different sizes and aspect ratios [12,13,14] or to address the lack of geometric invariance of convolutional neural network (CNN) features [15,16].

¹ Additional material available at www.xrce.xerox.com/Deep-Image-Retrieval

arXiv:1604.01325v2 [cs.CV] 28 Jul 2016

In this paper, we focus on learning these representations. We argue that one of the main reasons for the deep methods lagging behind the state of the art is the lack of supervised learning for the specific task of instance-level image retrieval. At the core of their architecture, CNN-based retrieval methods often use local features extracted using networks pre-trained on ImageNet for a classification task. These features are learned to distinguish between different semantic categories, but, as a side effect, are quite robust to intra-class variability. This is an undesirable property for instance retrieval, where we are interested in distinguishing between particular objects – even if they belong to the same semantic category. Therefore, learning features for the specific task of instance-level retrieval seems of paramount importance to achieve competitive results.

To this end, we build upon a recent deep representation for retrieval, the re-

gional maximum activations of convolutions (R-MAC) [14]. It aggregates several

image regions into a compact feature vector of fixed length and is thus robust to

scale and translation. This representation can deal with high resolution images of

different aspect ratios and obtains a competitive accuracy. We note that all the

steps involved to build the R-MAC representation are differentiable, and so its

weights can be learned in an end-to-end manner. Our first contribution is thus

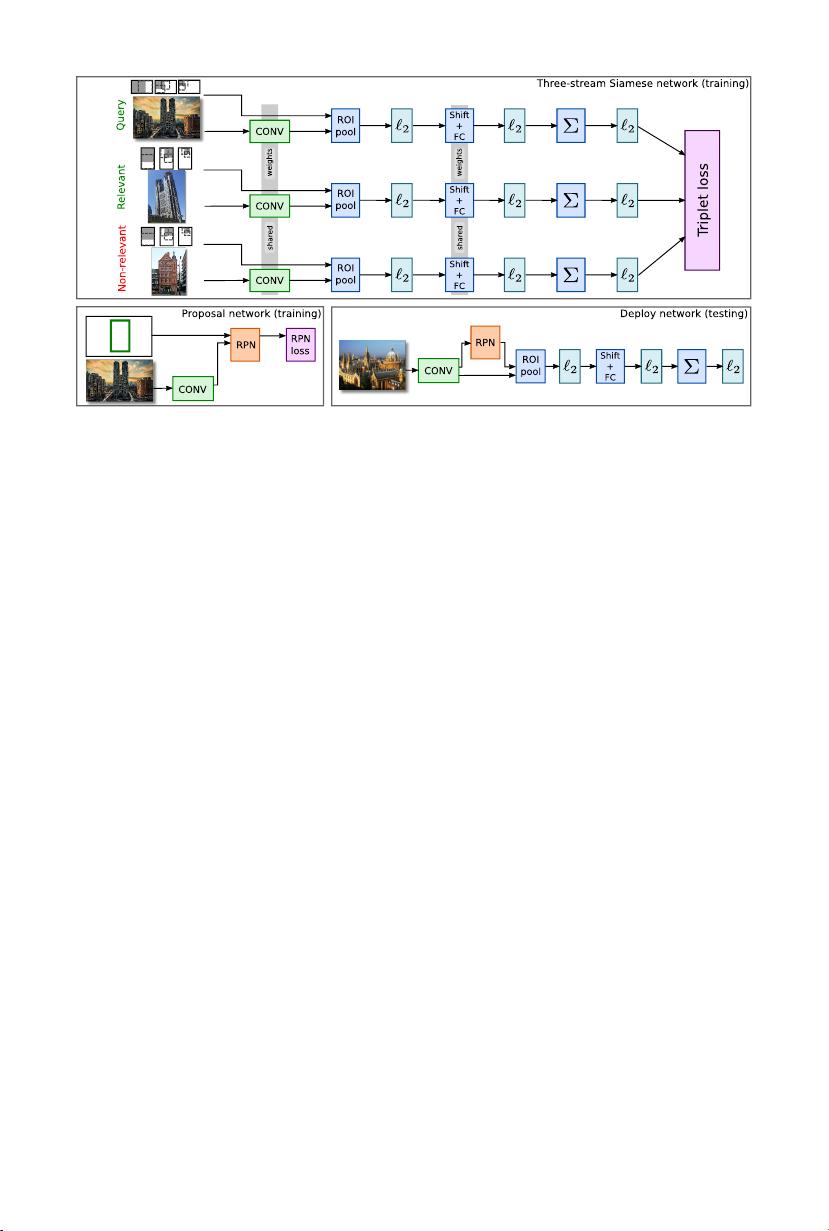

to use a three-stream Siamese network that explicitly optimizes the weights of

the R-MAC representation for the image retrieval task by using a triplet ranking

loss (Fig. 1).
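To make the idea of a triplet ranking loss concrete, the following is a minimal sketch rather than the formulation used in this paper, which is not given in this part of the text. It assumes a hinge on squared Euclidean distances between L2-normalized descriptors. The function names and the margin value of 0.1 are illustrative assumptions.

```python
import numpy as np

def l2_normalize(v, eps=1e-12):
    """Scale a vector to (approximately) unit Euclidean norm."""
    return v / (np.linalg.norm(v) + eps)

def triplet_ranking_loss(query, positive, negative, margin=0.1):
    """Hinge loss over a (query, relevant, non-relevant) descriptor triplet.

    Assumed form: 0.5 * max(0, margin + ||q - p||^2 - ||q - n||^2).
    The loss is zero once the non-relevant descriptor is farther from the
    query than the relevant one by at least `margin`.
    """
    q, p, n = (l2_normalize(np.asarray(x, dtype=float))
               for x in (query, positive, negative))
    d_pos = np.sum((q - p) ** 2)  # squared distance to the relevant image
    d_neg = np.sum((q - n) ** 2)  # squared distance to the non-relevant image
    return 0.5 * max(0.0, margin + d_pos - d_neg)
```

In a three-stream setup, the three descriptors would come from the same network with shared weights, and only triplets with a non-zero loss would contribute a gradient.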

To train this network, we leverage the public Landmarks dataset [17]. This dataset was constructed by querying image search engines with names of different landmarks and, as such, exhibits a very large amount of mislabeled and false positive images. This prevents the network from learning a good representation. We propose an automatic cleaning process, and show that on the cleaned data learning significantly improves.

Our second contribution consists in learning the pooling mechanism of the R-MAC descriptor. In the original architecture of [14], a rigid grid determines the location of regions that are pooled together. Here we propose to predict the location of these regions given the image content. We train a region proposal network with bounding boxes that are estimated for the Landmarks images as a by-product of the cleaning process. We show quantitative and qualitative evidence that region proposals significantly outperform the rigid grid.

The combination of our two contributions produces a novel architecture that is able to encode one image into a compact fixed-length vector in a single forward pass. Representations of different images can then be compared using the dot-product. Our method significantly outperforms previous approaches based on global descriptors. It even outperforms more complex approaches that involve keypoint matching and spatial verification at test time.
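As an illustration of comparing fixed-length descriptors with a dot-product, here is a minimal sketch. It assumes every descriptor has already been L2-normalized, so the dot-product equals the cosine similarity. The function name and the random descriptors are hypothetical stand-ins for real network outputs.

```python
import numpy as np

def rank_by_dot_product(query_desc, database_descs):
    """Rank database images by similarity to the query.

    query_desc: array of shape (D,); database_descs: array of shape (N, D).
    Returns the database indices from most to least similar, together
    with the corresponding similarity scores.
    """
    scores = database_descs @ query_desc  # one dot-product per database image
    order = np.argsort(-scores)           # sort by descending score
    return order, scores[order]

# Toy usage with random unit-norm vectors standing in for image descriptors.
rng = np.random.default_rng(0)
database = rng.normal(size=(5, 8))
database /= np.linalg.norm(database, axis=1, keepdims=True)
query = database[3] + 0.01 * rng.normal(size=8)  # slightly perturbed copy
query /= np.linalg.norm(query)
order, scores = rank_by_dot_product(query, database)
```

Because the whole database reduces to one matrix-vector product, no keypoint matching or geometric verification is needed at query time.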

Fig. 1. Summary of the proposed CNN-based representation tailored for retrieval. At training time, image triplets are sampled and simultaneously considered by a triplet-loss that is well-suited for the task (top). A region proposal network (RPN) learns which image regions should be pooled (bottom left). At test time (bottom right), the query image is fed to the learned architecture to efficiently produce a compact global image representation that can be compared with the dataset image representations with a simple dot-product.

Finally, we would like to refer the reader to the recent work of Radenović et al. [18], concurrent to ours and published in these same proceedings, that also proposes to learn representations for retrieval using a Siamese network on a geometrically-verified landmark dataset.

The rest of the paper is organized as follows. Section 2 discusses related works. Sections 3 and 4 present our contributions. Section 5 validates them on five different datasets. Finally, Section 6 concludes the paper.

2 Related Work

We now describe previous works most related to our approach.

Conventional image retrieval. Early techniques for instance-level retrieval are based on bag-of-features representations with large vocabularies and inverted files [19,20]. Numerous methods to better approximate the matching of the descriptors have been proposed, see e.g. [21,22]. An advantage of these techniques is that spatial verification can be employed to re-rank a short-list of results [20,23], yielding a significant improvement despite a significant cost. Concurrently, methods that aggregate the local image patches have been considered. Encoding techniques, such as the Fisher Vector [24], or VLAD [25], combined with compression [26,27,28] produce global descriptors that scale to larger databases at the cost of reduced accuracy. All these methods can be combined with other post-processing techniques such as query expansion [29,30,31].

CNN-based retrieval. After their success in classification [1], CNN features were used as off-the-shelf features for image retrieval [16,17]. Although they outperform other standard global descriptors, their performance is significantly below the state of the art. Several improvements were proposed to overcome their lack of robustness to scaling, cropping and image clutter. [16] performs region cross-matching and accumulates the maximum similarity per query region. [12] applies sum-pooling to whitened region descriptors. [13] extends [12] by allowing cross-dimensional weighting and aggregation of neural codes. Other approaches proposed hybrid models involving an encoding technique such as FV [32] or VLAD [15,33], potentially learnt as well [34] as one of their components.

Tolias et al. [14] propose R-MAC, an approach that produces a global image representation by aggregating the activation features of a CNN in a fixed layout of spatial regions. The result is a fixed-length vector representation that, when combined with re-ranking and query expansion, achieves results close to the state of the art. Our work extends this architecture by discriminatively learning the representation parameters and by improving the region pooling mechanism.
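The aggregation of CNN activations over a fixed layout of regions can be sketched as follows. This is a simplified illustration and not the exact R-MAC pipeline of [14]. It assumes a non-overlapping grid in place of the layout used there, and it omits any learned projection or whitening of the region vectors. The function names are hypothetical.

```python
import numpy as np

def l2_normalize(v, eps=1e-12):
    """Scale a vector to (approximately) unit Euclidean norm."""
    return v / (np.linalg.norm(v) + eps)

def grid_regions(height, width, rows=2, cols=2):
    """Hypothetical fixed layout: a rows x cols grid of half-open boxes
    (y0, x0, y1, x1) in feature-map coordinates."""
    ys = np.linspace(0, height, rows + 1).astype(int)
    xs = np.linspace(0, width, cols + 1).astype(int)
    return [(ys[i], xs[j], ys[i + 1], xs[j + 1])
            for i in range(rows) for j in range(cols)]

def rmac_like_descriptor(feature_map, regions):
    """Aggregate a (C, H, W) activation map into one C-dimensional vector.

    Each region is max-pooled per channel and L2-normalized; the region
    vectors are then summed and the sum is L2-normalized again.
    """
    total = np.zeros(feature_map.shape[0])
    for y0, x0, y1, x1 in regions:
        region_vec = feature_map[:, y0:y1, x0:x1].max(axis=(1, 2))
        total += l2_normalize(region_vec)
    return l2_normalize(total)

# Feature maps of different spatial sizes yield descriptors of equal length.
rng = np.random.default_rng(0)
desc_a = rmac_like_descriptor(rng.random((4, 6, 8)), grid_regions(6, 8))
desc_b = rmac_like_descriptor(rng.random((4, 10, 5)), grid_regions(10, 5))
```

Every step here is a max, a sum, or a normalization, which is why such a representation can in principle be trained end to end.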

Fine-tuning for retrieval. Babenko et al. [17] showed that models pre-trained on ImageNet for object classification could be improved by fine-tuning them on an external set of Landmarks images. In this paper we confirm that fine-tuning the pre-trained models for the retrieval task is indeed crucial, but argue that one should use a good image representation (R-MAC) and a ranking loss instead of a classification loss as used in [17].

Localization/Region pooling. Retrieval methods that ground their descriptors in regions typically consider random regions [16] or a rigid grid of regions [14]. Some works exploit the center bias that benchmarks usually exhibit to weight their regions accordingly [12]. The spatial transformer network of [35] can be inserted in CNN architectures to transform input images appropriately, including by selecting the most relevant region for the task. In this paper, we would like to bias our descriptor towards interesting regions without paying an extra cost or relying on a central bias. We achieve this by using a proposal network similar in essence to the Faster R-CNN detection method [36].

Siamese networks and metric learning. Siamese networks have commonly been used for metric learning [37], dimensionality reduction [38], learning image descriptors [39], and performing face identification [40,41,42]. Recently triplet networks (i.e. three stream Siamese networks) have been considered for metric learning [43,44] and face identification [45]. However, these Siamese networks usually rely on simpler network architectures than the one we use here, which involves pooling and aggregation of several regions.

3 Method

This section introduces our method for retrieving images in large collections. We first revisit the R-MAC representation (Section 3.1) showing that, despite its handcrafted nature, all of its components consist of differentiable operations. From this it follows that one can learn the weights of the R-MAC representa-
