Analysis of variance

Analysis of variance (ANOVA) is a collection of statistical models and their associated estimation procedures (such as the "variation" among and between groups) used to analyze the differences among means in a sample. ANOVA was developed by the statistician Ronald Fisher. ANOVA is based on the law of total variance, where the observed variance in a particular variable is partitioned into components attributable to different sources of variation. In its simplest form, ANOVA provides a statistical test of whether two or more population means are equal, and therefore generalizes the t-test beyond two means.
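The partition of variation and the link to the t-test can be checked numerically. The Python sketch below uses made-up numbers and hypothetical helper names (`sums_of_squares`, `f_statistic`, `pooled_t`). It splits the total sum of squares of two small groups into a between-group part and a within-group part, and confirms that for exactly two groups the one-way F statistic equals the square of the pooled-variance two-sample t statistic. It assumes Python 3.8 or later for `statistics.fmean`.

```python
from statistics import fmean

def sums_of_squares(groups):
    """Return (SS_total, SS_between, SS_within) for a list of groups of numbers."""
    values = [y for g in groups for y in g]
    grand = fmean(values)
    ss_total = sum((y - grand) ** 2 for y in values)
    # Between: each group mean's squared distance from the grand mean, weighted by group size
    ss_between = sum(len(g) * (fmean(g) - grand) ** 2 for g in groups)
    # Within: each observation's squared distance from its own group mean
    ss_within = sum((y - fmean(g)) ** 2 for g in groups for y in g)
    return ss_total, ss_between, ss_within

def f_statistic(groups):
    """One-way ANOVA F: between-group mean square divided by within-group mean square."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    _, ss_between, ss_within = sums_of_squares(groups)
    return (ss_between / (k - 1)) / (ss_within / (n - k))

def pooled_t(a, b):
    """Student's two-sample t statistic using a pooled variance estimate."""
    na, nb = len(a), len(b)
    ma, mb = fmean(a), fmean(b)
    sp2 = (sum((y - ma) ** 2 for y in a) + sum((y - mb) ** 2 for y in b)) / (na + nb - 2)
    return (ma - mb) / (sp2 * (1 / na + 1 / nb)) ** 0.5

# Made-up measurements for two hypothetical groups
a = [4.1, 5.0, 5.6, 4.8, 5.3]
b = [6.2, 5.9, 7.1, 6.6, 6.0]

sst, ssb, ssw = sums_of_squares([a, b])
print(f"SS_total = {sst:.4f}, SS_between + SS_within = {ssb + ssw:.4f}")
print(f"F = {f_statistic([a, b]):.4f}, t^2 = {pooled_t(a, b) ** 2:.4f}")
```

The F = t² agreement holds only for two groups; with three or more groups there is no single t statistic, which is where the F-test takes over.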

History

Example

Background and terminology

Design-of-experiments terms

Classes of models

Fixed-effects models

Random-effects models

Mixed-effects models

Assumptions

Textbook analysis using a normal distribution

Randomization-based analysis

Summary of assumptions

Characteristics

Logic

Partitioning of the sum of squares

The F-test

Extended logic

For a single factor

For multiple factors

Associated analysis

Preparatory analysis

Study designs

Cautions

Generalizations

Connection to linear regression

See also

Footnotes

Notes

Contents

No fit: Young vs old, and short-haired

vs long-haired

References

Further reading

External links

While the analysis of variance reached fruition in the 20th century, antecedents extend centuries into the past

according to Stigler.

[1]

These include hypothesis testing, the partitioning of sums of squares, experimental

techniques and the additive model. Laplace was performing hypothesis testing in the 1770s.

[2]

Around 1800,

Laplace and Gauss developed the least-squares method for combining observations, which improved upon

methods then used in astronomy and geodesy. It also initiated much study of the contributions to sums of

squares. Laplace knew how to estimate a variance from a residual (rather than a total) sum of squares.

[3]

By

1827, Laplace was using least squares methods to address ANOVA problems regarding measurements of

atmospheric tides.

[4]

Before 1800, astronomers had isolated observational errors resulting from reaction times

(the "personal equation") and had developed methods of reducing the errors.

[5]

The experimental methods

used in the study of the personal equation were later accepted by the emerging field of psychology

[6]

which

developed strong (full factorial) experimental methods to which randomization and blinding were soon

added.

[7]

An eloquent non-mathematical explanation of the additive effects model was available in 1885.

[8]

Ronald Fisher introduced the term variance and proposed its formal analysis in a 1918 article The Correlation

Between Relatives on the Supposition of Mendelian Inheritance.

[9]

His first application of the analysis of

variance was published in 1921.

[10]

Analysis of variance became widely known after being included in

Fisher's 1925 book Statistical Methods for Research Workers.

Randomization models were developed by several researchers. The first was published in Polish by Jerzy

Neyman in 1923.

[11]

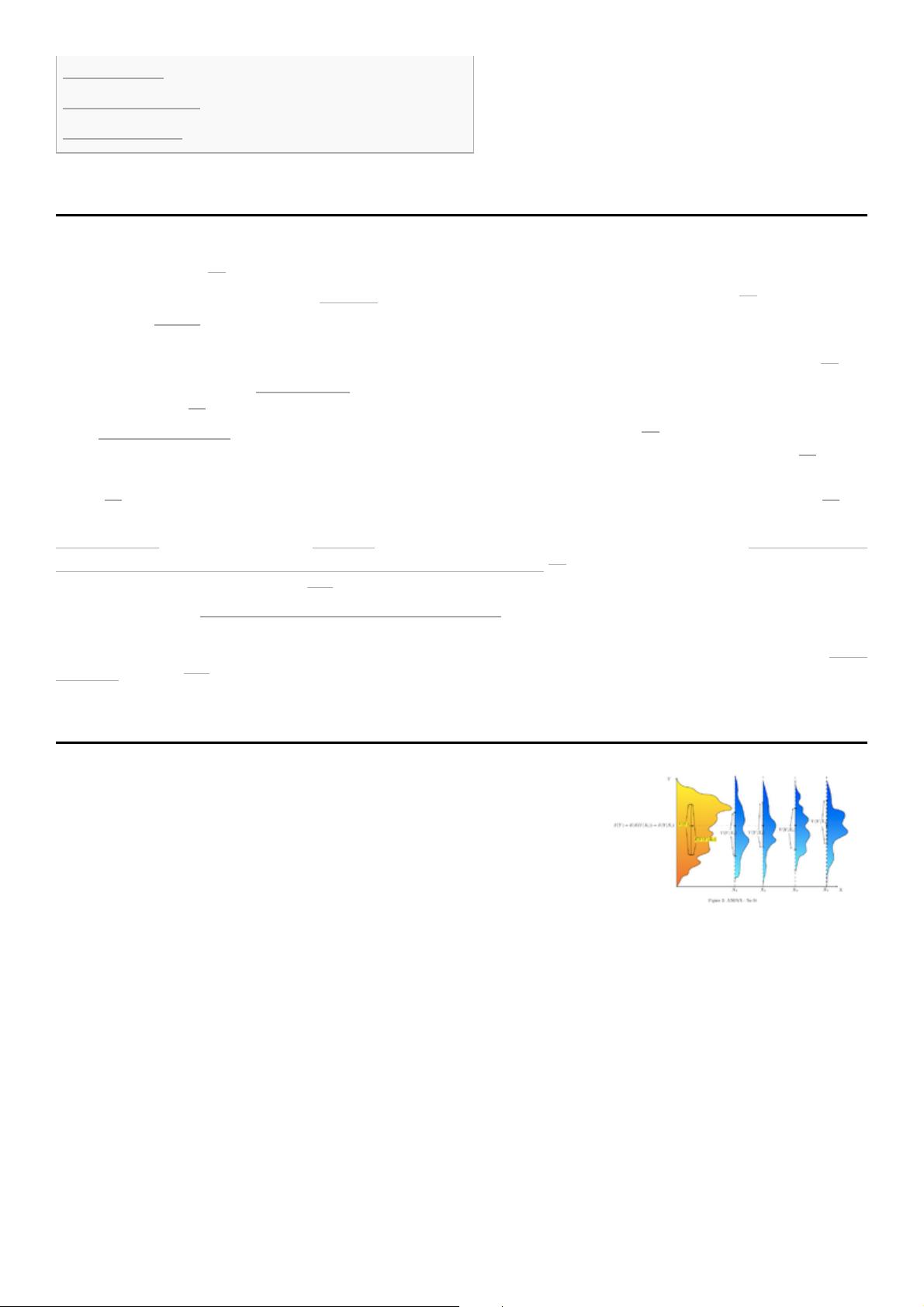

The analysis of variance can be used to describe otherwise complex relations among variables. A dog show provides an example. A dog show is not a random sampling of the breed: it is typically limited to dogs that are adult, pure-bred, and exemplary. A histogram of dog weights from a show might plausibly be rather complex, like the yellow-orange distribution shown in the illustrations. Suppose we wanted to predict the weight of a dog based on a certain set of characteristics of each dog. One way to do that is to explain the distribution of weights by dividing the dog population into groups based on those characteristics. A successful grouping will split dogs such that (a) each group has a low variance of dog weights (meaning the group is relatively homogeneous) and (b) the mean of each group is distinct (if two groups have the same mean, then it isn't reasonable to conclude that the groups are, in fact, separate in any meaningful way).
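The two criteria for a successful grouping can be illustrated with a small Python sketch. The weights below are invented for illustration (they are not show data), and the helper names `group_by` and `share_explained` are hypothetical. For each grouping the sketch reports every group's mean and variance, plus the share of the total sum of squares accounted for by the group means (often called eta squared).

```python
from statistics import fmean, pvariance

# Hypothetical show-dog records (made-up numbers): (weight in kg, breed, coat length)
dogs = [
    (2.5, "chihuahua", "short"),
    (3.0, "chihuahua", "long"),
    (2.8, "chihuahua", "short"),
    (30.0, "pointer", "short"),
    (28.5, "pointer", "long"),
    (31.0, "pointer", "long"),
    (70.0, "st_bernard", "long"),
    (75.5, "st_bernard", "short"),
    (68.0, "st_bernard", "long"),
]

def group_by(records, key_index):
    """Collect weights into a dict keyed by the characteristic at key_index."""
    groups = {}
    for rec in records:
        groups.setdefault(rec[key_index], []).append(rec[0])
    return groups

def share_explained(groups):
    """Fraction of the total sum of squares explained by the group means (eta squared)."""
    values = [w for g in groups.values() for w in g]
    grand = fmean(values)
    ss_total = sum((w - grand) ** 2 for w in values)
    ss_between = sum(len(g) * (fmean(g) - grand) ** 2 for g in groups.values())
    return ss_between / ss_total

for label, idx in [("breed", 1), ("coat", 2)]:
    groups = group_by(dogs, idx)
    for name, weights in sorted(groups.items()):
        print(f"{label}={name}: mean={fmean(weights):.1f}, variance={pvariance(weights):.1f}")
    print(f"share of variation explained by {label}: {share_explained(groups):.2f}")
```

With these made-up numbers the breed grouping has small within-group variances and well-separated means, so it accounts for almost all of the variation, while grouping by coat leaves large within-group variances and similar means and accounts for very little.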

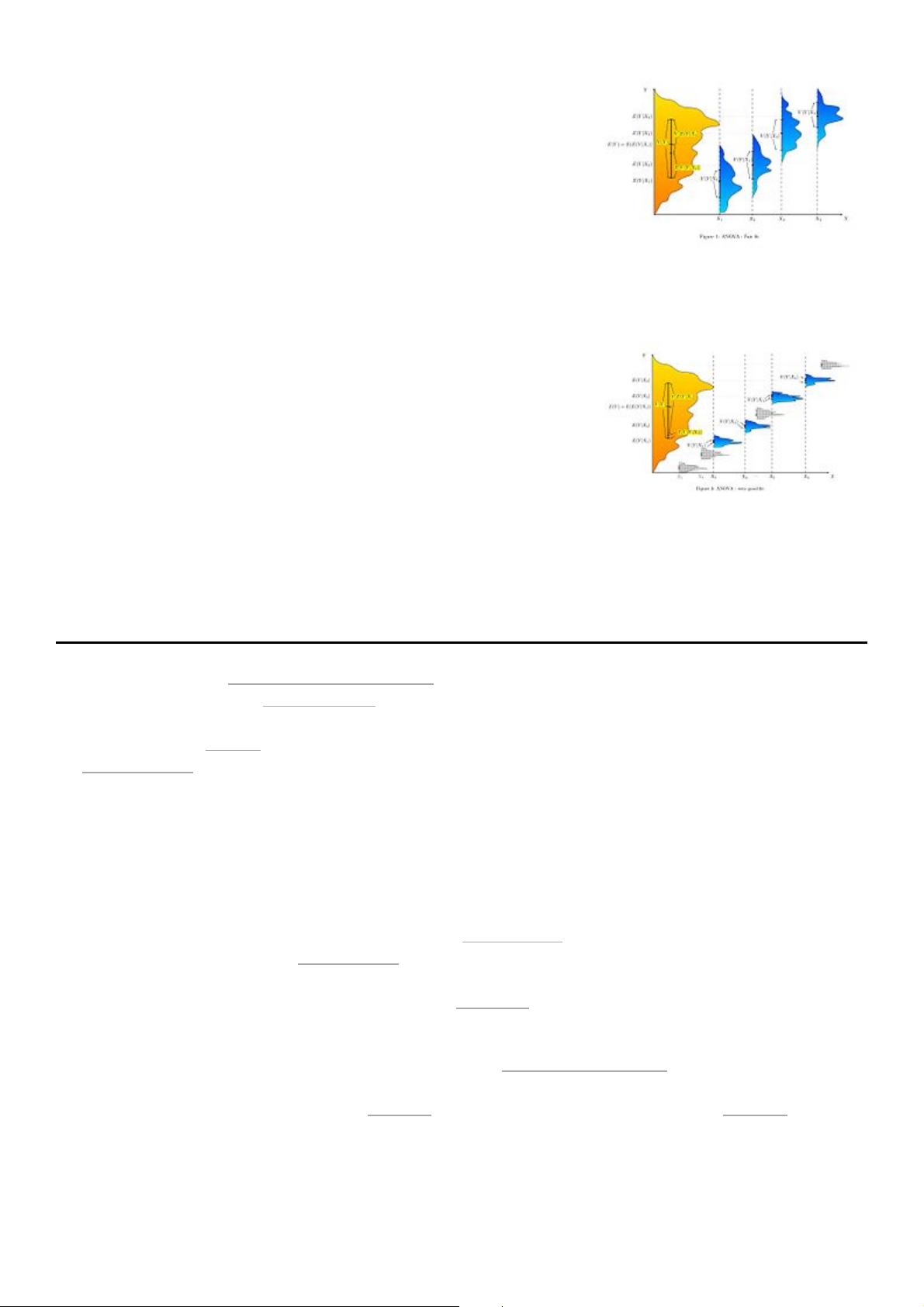

In the illustrations to the right, groups are identified as X₁, X₂, etc. In the first illustration, the dogs are divided according to the product (interaction) of two binary groupings: young vs old, and short-haired vs long-haired (e.g., group 1 is young, short-haired dogs, group 2 is young, long-haired dogs, etc.). Since the distributions of dog weight within each of the groups (shown in blue) have a relatively large variance, and since the means are very similar across groups, grouping dogs by these characteristics does not produce an effective way to explain the variation in dog weights: knowing which group a dog is in doesn't allow us to predict its weight much better than simply knowing the dog is in a dog show. Thus, this grouping fails to explain the variation in the overall distribution (yellow-orange).

Fair fit: Pet vs Working breed and less athletic vs more athletic

An attempt to explain the weight distribution by grouping dogs as pet vs working breed and less athletic vs more athletic would probably be somewhat more successful (fair fit). The heaviest show dogs are likely to be big, strong, working breeds, while breeds kept as pets tend to be smaller and thus lighter. As shown by the second illustration, the distributions have variances that are considerably smaller than in the first case, and the means are more distinguishable. However, the significant overlap of distributions, for example, means that we cannot distinguish X₁ and X₂ reliably. Grouping dogs according to a coin flip might produce distributions that look similar.

Very good fit: Weight by breed

An attempt to explain weight by breed is likely to produce a very good fit. All Chihuahuas are light and all St Bernards are heavy. The difference in weights between Setters and Pointers does not justify separate breeds. The analysis of variance provides the formal tools to justify these intuitive judgments. A common use of the method is the analysis of experimental data or the development of models. The method has some advantages over correlation: not all of the data must be numeric, and one result of the method is a judgment of the confidence in an explanatory relationship.

Background and terminology

ANOVA is a form of statistical hypothesis testing heavily used in the analysis of experimental data. A test result (calculated from the null hypothesis and the sample) is called statistically significant if it is deemed unlikely to have occurred by chance, assuming the truth of the null hypothesis. A statistically significant result, when a probability (p-value) is less than a pre-specified threshold (significance level), justifies the rejection of the null hypothesis, but only if the a priori probability of the null hypothesis is not high.

In the typical application of ANOVA, the null hypothesis is that all groups are random samples from the same population. For example, when studying the effect of different treatments on similar samples of patients, the null hypothesis would be that all treatments have the same effect (perhaps none). Rejecting the null hypothesis is taken to mean that the differences in observed effects between treatment groups are unlikely to be due to random chance.

By construction, hypothesis testing limits the rate of Type I errors (false positives) to a significance level. Experimenters also wish to limit Type II errors (false negatives). The rate of Type II errors depends largely on sample size (the rate is larger for smaller samples), significance level (when the standard of proof is high, the chances of overlooking a discovery are also high) and effect size (a smaller effect size is more prone to Type II error).

The terminology of ANOVA is largely from the statistical design of experiments. The experimenter adjusts factors and measures responses in an attempt to determine an effect. Factors are assigned to experimental units by a combination of randomization and blocking to ensure the validity of the results. Blinding keeps the weighing impartial. Responses show a variability that is partially the result of the effect and is partially random error.
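One way to make the null hypothesis concrete is a randomization (permutation) test, in the spirit of the randomization models mentioned in the history. If all groups really are random samples from the same population, the group labels are exchangeable, so repeatedly shuffling them shows how large the F statistic tends to be by chance alone. The Python sketch below is illustrative only: the data, the 5000-shuffle count, the seed and the 0.05 significance level are assumptions, and the helper names are hypothetical. The textbook F-test would instead compare the observed statistic with an F distribution.

```python
import random
from statistics import fmean

def f_statistic(groups):
    """One-way ANOVA F: between-group mean square over within-group mean square."""
    values = [y for g in groups for y in g]
    grand = fmean(values)
    k, n = len(groups), len(values)
    ss_between = sum(len(g) * (fmean(g) - grand) ** 2 for g in groups)
    ss_within = sum((y - fmean(g)) ** 2 for g in groups for y in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))

def permutation_p_value(groups, n_perm=5000, seed=0):
    """Share of label shufflings whose F is at least the observed F (add-one corrected)."""
    rng = random.Random(seed)
    sizes = [len(g) for g in groups]
    pooled = [y for g in groups for y in g]  # a fresh list, so the inputs are not modified
    observed = f_statistic(groups)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        # Re-split the shuffled values into groups of the original sizes.
        regrouped, start = [], 0
        for size in sizes:
            regrouped.append(pooled[start:start + size])
            start += size
        if f_statistic(regrouped) >= observed:
            hits += 1
    # The add-one correction keeps the estimated p-value strictly above zero.
    return (hits + 1) / (n_perm + 1)

# Made-up responses for three hypothetical treatment groups
control = [5.1, 4.9, 5.3, 5.0, 5.2]
treatment_a = [5.8, 6.1, 5.9, 6.3, 6.0]
treatment_b = [5.0, 5.4, 5.1, 4.8, 5.2]

alpha = 0.05  # significance level, fixed before looking at the data
p = permutation_p_value([control, treatment_a, treatment_b])
print(f"F = {f_statistic([control, treatment_a, treatment_b]):.2f}, permutation p ~ {p:.4f}")
print("reject the null hypothesis" if p < alpha else "do not reject the null hypothesis")
```

Because the shuffling is done under the null hypothesis, the share of shuffles that reach the observed F is an estimate of the p-value; repeating the whole procedure on data where the null is true would reject at roughly the rate alpha, which is the Type I error control described above.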

ANOVA is the synthesis of several ideas and it is used for multiple purposes. As a consequence, it is difficult to define concisely or precisely.

"Classical" ANOVA for balanced data does three things at once:

1. As exploratory data analysis, an ANOVA employs an additive data decomposition, and its sums of squares indicate the variance of each component of the decomposition (or, equivalently, each set of terms of a linear model).

2. Comparisons of mean squares, along with an F-test ... allow testing of a nested sequence of models.

3. Closely related to the ANOVA is a linear model fit with coefficient estimates and standard errors.[12]
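The additive decomposition in item 1 can be sketched for the simplest balanced case, a two-way table with one observation per cell. Each value is split into grand mean + row effect + column effect + residual, and because the design is balanced the sums of squares of these components add up exactly to the total sum of squares. The table below is made up, and the F ratios assume the additive (no-interaction) model, with the residual serving as the error term; that choice is an assumption of this sketch.

```python
from statistics import fmean

# Hypothetical balanced two-way layout (made-up numbers): 3 levels of factor A (rows)
# by 4 levels of factor B (columns), one observation per cell.
y = [
    [12.0, 14.0, 11.0, 15.0],
    [10.0, 13.0, 9.0, 12.0],
    [16.0, 17.0, 15.0, 18.0],
]
r, c = len(y), len(y[0])

# Additive decomposition: y[i][j] = grand + row_eff[i] + col_eff[j] + resid[i][j]
grand = fmean([v for row in y for v in row])
row_eff = [fmean(row) - grand for row in y]
col_eff = [fmean([y[i][j] for i in range(r)]) - grand for j in range(c)]
resid = [[y[i][j] - grand - row_eff[i] - col_eff[j] for j in range(c)] for i in range(r)]

# Sum of squares of each component of the decomposition
ss_total = sum((v - grand) ** 2 for row in y for v in row)
ss_rows = c * sum(a ** 2 for a in row_eff)
ss_cols = r * sum(b ** 2 for b in col_eff)
ss_resid = sum(e ** 2 for row in resid for e in row)

# Mean squares and F ratios, using the residual mean square as the error term
df_rows, df_cols, df_resid = r - 1, c - 1, (r - 1) * (c - 1)
ms_resid = ss_resid / df_resid
f_rows = (ss_rows / df_rows) / ms_resid
f_cols = (ss_cols / df_cols) / ms_resid

print(f"SS total {ss_total:.3f} = rows {ss_rows:.3f} + columns {ss_cols:.3f} + residual {ss_resid:.3f}")
print(f"F(rows) = {f_rows:.2f} on ({df_rows}, {df_resid}) df")
print(f"F(columns) = {f_cols:.2f} on ({df_cols}, {df_resid}) df")
```

With unbalanced cell counts the row and column components are no longer orthogonal, and this simple additivity of sums of squares generally fails.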

In short, ANOVA is a statistical tool used in several ways to develop and confirm an explanation for the observed data.

Additionally:

4. It is computationally elegant and relatively robust against violations of its assumptions.

5. ANOVA provides strong (multiple sample comparison) statistical analysis.

6. It has been adapted to the analysis of a variety of experimental designs.

As a result, ANOVA "has long enjoyed the status of being the most used (some would say abused) statistical technique in psychological research."[13] ANOVA "is probably the most useful technique in the field of statistical inference."[14]

ANOVA is difficult to teach, particularly for complex experiments, with split-plot designs being notorious.[15] In some cases the proper application of the method is best determined by problem pattern recognition followed by the consultation of a classic authoritative text.[16]

Design-of-experiments terms

(Condensed from the "NIST Engineering Statistics Handbook": Section 5.7. A Glossary of DOE Terminology.)[17]

Balanced design

An experimental design where all cells (i.e. treatment combinations) have the same number of observations.

Blocking

A schedule for conducting treatment combinations in an experimental study such that any effects on the experimental results due to a known change in raw materials, operators, machines, etc., become concentrated in the levels of the blocking variable. The reason for blocking is to isolate a systematic effect and prevent it from obscuring the main effects. Blocking is achieved by restricting randomization.

Design

A set of experimental runs which allows the fit of a particular model and the estimate of effects.

DOE

Design of experiments. An approach to problem solving involving collection of data that will support valid, defensible, and supportable conclusions.[18]

Effect

How changing the settings of a factor changes the response. The effect of a single factor is also called a main effect.

Error

Unexplained variation in a collection of observations. DOEs typically require understanding of both random error and lack-of-fit error.

Experimental unit

The entity to which a specific treatment combination is applied.

Factors

Process inputs that an investigator manipulates to cause a change in the output.

Lack-of-fit error

Error that occurs when the analysis omits one or more important terms or factors from the process model. Including replication in a DOE allows separation of experimental error into its components: lack of fit and random (pure) error.

Model

A mathematical relationship that relates changes in a given response to changes in one or more factors.

Random error

Error that occurs due to natural variation in the process. Random error is typically assumed to be normally distributed with zero mean and a constant variance. Random error is also called experimental error.

Randomization

A schedule for allocating treatment material and for conducting treatment combinations in a DOE such that the conditions in one run neither depend on the conditions of the previous run nor predict the conditions in the subsequent runs.[nb 1]

Replication

Performing the same treatment combination more than once. Including replication allows an estimate of the random error independent of any lack-of-fit error.

Responses

The output(s) of a process. Sometimes called dependent variable(s).

Treatment

A treatment is a specific combination of factor levels whose effect is to be compared with other treatments.
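The replication and lack-of-fit entries can be illustrated with a small sketch. A straight line is fitted by least squares to hypothetical runs in which each factor setting was replicated three times. The residual sum of squares then splits exactly into a pure-error part (scatter of replicates about their own setting's mean) and a lack-of-fit part (distance of each setting's mean from the fitted line). The data, the straight-line model and the variable names are assumptions made for illustration.

```python
from statistics import fmean

# Hypothetical replicated experiment (made-up numbers): each setting of one factor x
# was run three times and the response recorded.
runs = {
    1.0: [2.1, 2.4, 1.9],
    2.0: [3.9, 4.3, 4.0],
    3.0: [5.2, 5.0, 5.5],
    4.0: [5.8, 6.1, 5.9],
}
pairs = [(x, y) for x, reps in runs.items() for y in reps]

# Ordinary least-squares straight line y = b0 + b1 * x
x_bar = fmean([x for x, _ in pairs])
y_bar = fmean([y for _, y in pairs])
b1 = sum((x - x_bar) * (y - y_bar) for x, y in pairs) / sum((x - x_bar) ** 2 for x, _ in pairs)
b0 = y_bar - b1 * x_bar

def fitted(x):
    return b0 + b1 * x

ss_resid = sum((y - fitted(x)) ** 2 for x, y in pairs)
# Pure (random) error: replicates around the mean of their own setting
ss_pure = sum((y - fmean(reps)) ** 2 for reps in runs.values() for y in reps)
# Lack of fit: setting means around the fitted line, weighted by the number of replicates
ss_lof = sum(len(reps) * (fmean(reps) - fitted(x)) ** 2 for x, reps in runs.items())

n, m, p = len(pairs), len(runs), 2  # observations, distinct settings, line parameters
f_lof = (ss_lof / (m - p)) / (ss_pure / (n - m))
print(f"residual {ss_resid:.4f} = pure error {ss_pure:.4f} + lack of fit {ss_lof:.4f}")
print(f"lack-of-fit F = {f_lof:.2f} on ({m - p}, {n - m}) df")
```

Without replication, ss_pure could not be computed, and the residual would mix random error with any systematic misfit of the model, which is the point made in the Replication entry.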

Classes of models

There are three classes of models used in the analysis of variance, and these are outlined here.

Fixed-effects models

The fixed-effects model (class I) of analysis of variance applies to situations in which the experimenter applies one or more treatments to the subjects of the experiment to see whether the response variable values change. This allows the experimenter to estimate the ranges of response variable values that the treatment would generate in the population as a whole.

Random-effects models

The random-effects model (class II) is used when the treatments are not fixed. This occurs when the various factor levels are sampled from a larger population. Because the levels themselves are random variables, some assumptions and the method of contrasting the treatments (a multi-variable generalization of simple differences) differ from the fixed-effects model.[19]
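When the levels are a random sample, interest often shifts from the individual level means to the variance of the level effects. For a balanced one-way random-effects model, one common approach (the method-of-moments, or "ANOVA", estimator; named here as an illustration, not as the only or prescribed method) uses the fact that the expected between-group mean square is sigma^2 + n * sigma_a^2 while the within-group mean square estimates sigma^2. The Python sketch below applies this to simulated data; the helper name `variance_components`, the number of levels, the effect sizes and the seed are all assumptions.

```python
import random
from statistics import fmean

def variance_components(groups):
    """Method-of-moments estimates for a balanced one-way random-effects model.

    Returns (sigma2_between, sigma2_within)."""
    a = len(groups)
    n = len(groups[0])
    if any(len(g) != n for g in groups):
        raise ValueError("this sketch assumes a balanced design")
    grand = fmean([y for g in groups for y in g])
    msb = n * sum((fmean(g) - grand) ** 2 for g in groups) / (a - 1)
    msw = sum((y - fmean(g)) ** 2 for g in groups for y in g) / (a * (n - 1))
    # E[MSB] = sigma2_within + n * sigma2_between and E[MSW] = sigma2_within,
    # so (MSB - MSW) / n estimates the between-level component (truncated at zero).
    return max(0.0, (msb - msw) / n), msw

# Simulated example: 300 levels drawn at random (level effects with SD 2),
# 5 observations per level with error SD 1, so the true components are 4.0 and 1.0.
rng = random.Random(42)
groups = []
for _ in range(300):
    effect = rng.gauss(0.0, 2.0)
    groups.append([10.0 + effect + rng.gauss(0.0, 1.0) for _ in range(5)])

s2_between, s2_within = variance_components(groups)
print(f"between-level variance ~ {s2_between:.2f}, within-level variance ~ {s2_within:.2f}")
```

Truncating a negative estimate at zero is one common convention; other estimators (for example, restricted maximum likelihood) handle that case differently.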

Mixed-effects models