pso.rar_PSO


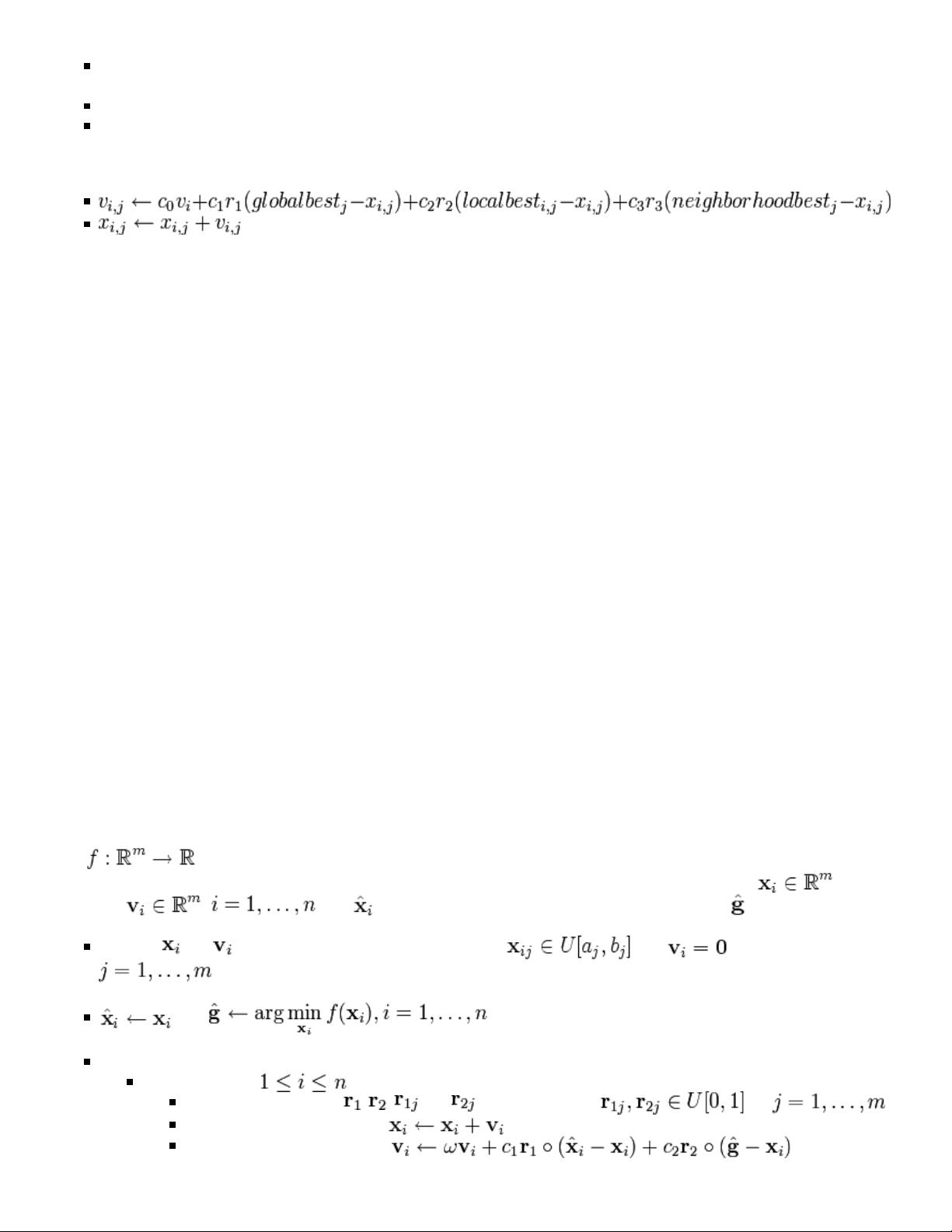

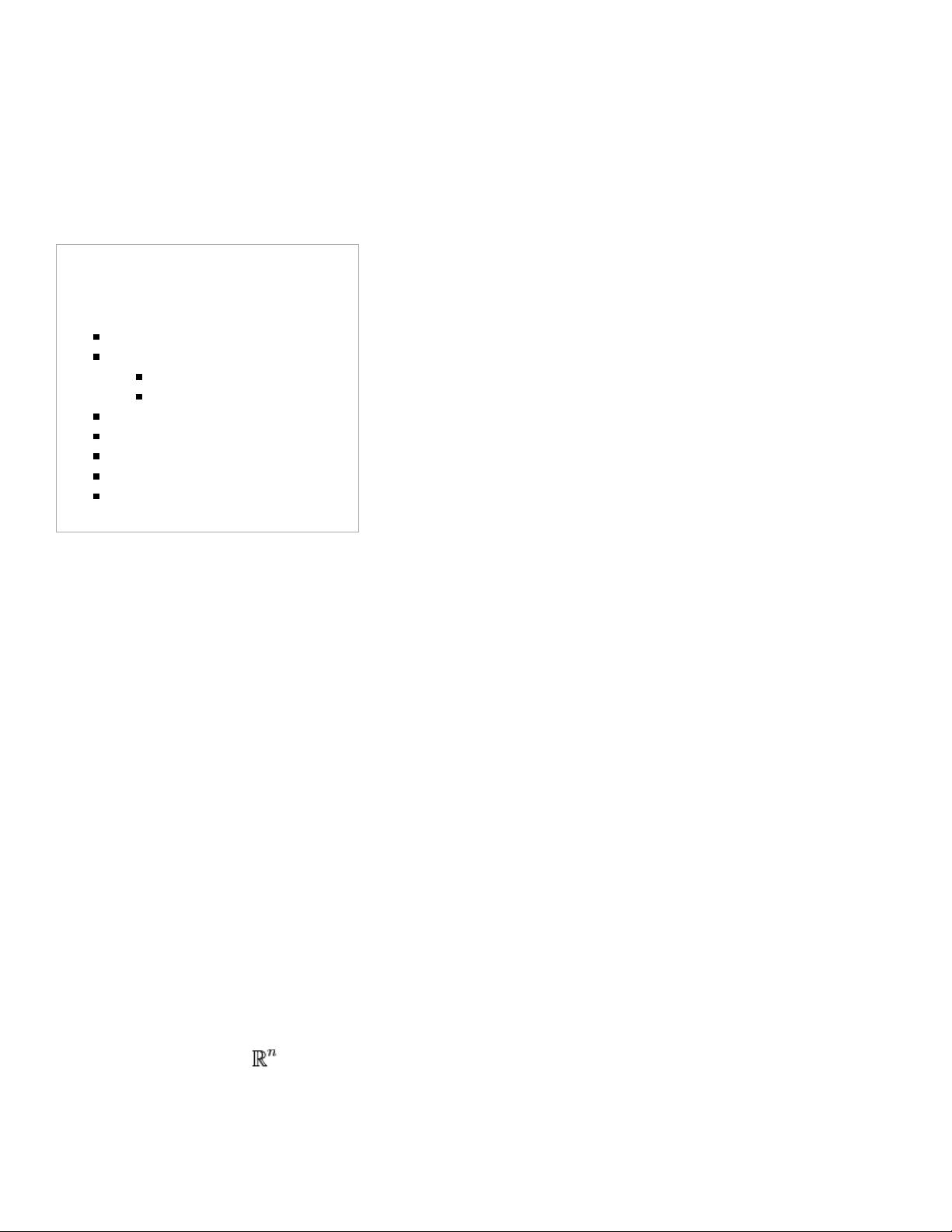

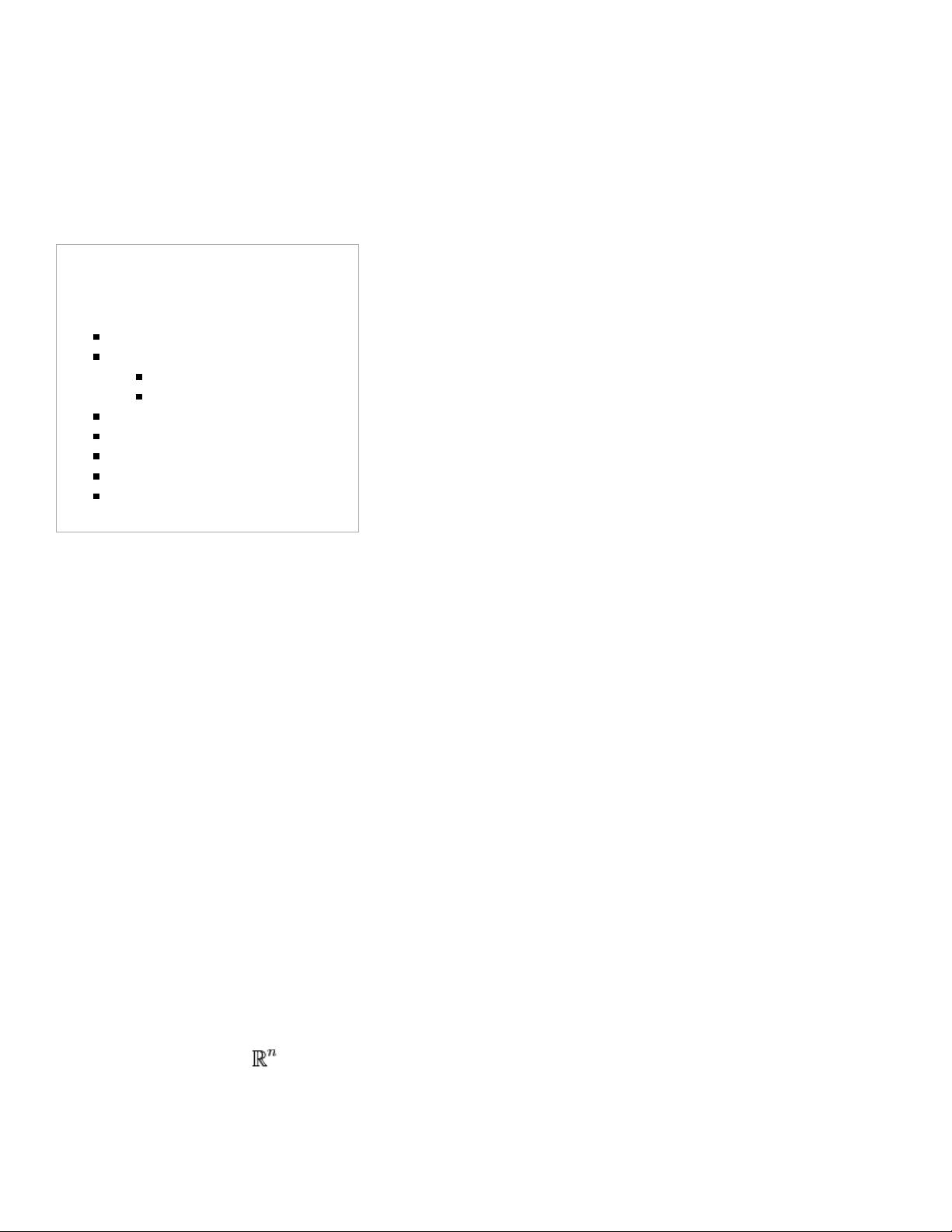

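Particle Swarm Optimization (PSO) is a population-based global search algorithm that is widely applied to complex optimization problems, including multi-objective ones. It grew out of studies of the collective behavior of bird flocks and fish schools. PSO was proposed by James Kennedy and Russell Eberhart in 1995, and it searches for an optimum by simulating swarm intelligence.

In PSO, each candidate solution is called a "particle". Particles move through the solution space, and their motion is guided by two positions: the personal best (pBest), which is the best position that particle has visited so far, and the global best (gBest), which is the best position found by the whole swarm so far. A particle's velocity determines the direction and distance of its next move, and the velocity update depends on the particle's current position, its pBest, and gBest.

The basic steps of the PSO algorithm are:

1. Initialization: randomly generate a swarm of particles and assign each one an initial position and velocity, usually inside the bounds of the search space.
2. Fitness evaluation: compute each particle's fitness from the objective function. The fitness value measures how good the corresponding solution is.
3. Update pBest: if a particle's new position is better than its current pBest (a higher fitness value, when fitness is being maximized), replace pBest with the new position.
4. Update gBest: compare the pBest values of all particles and take the one with the highest fitness as the new gBest.
5. Update velocity: V(t+1) = w * V(t) + c1 * r1 * (pBest - X(t)) + c2 * r2 * (gBest - X(t)), where V(t) is the current velocity, X(t) is the current position, w is the inertia weight, c1 and c2 are the learning factors, and r1 and r2 are random numbers, typically drawn uniformly from [0, 1].
6. Update position: the new position is the old position plus the updated velocity, X(t+1) = X(t) + V(t+1).
7. Repeat steps 2-6 until a stopping condition is met, such as reaching the maximum number of iterations or the fitness value converging.

PSO is simple to implement and has good global search ability, which makes it well suited to nonlinear, multimodal optimization problems. It also has drawbacks: the swarm can still converge prematurely to a local optimum, convergence can be slow, and results are sensitive to the parameters w, c1, and c2. To address these problems, researchers have proposed many variants, for example by introducing chaos, adapting the parameters during the run, or adding memory mechanisms.

In practice, PSO has been applied successfully to engineering optimization, machine learning, neural network training, image processing, data mining, and other fields. In a neural network, for example, PSO can be used to optimize the weights and thresholds; in data mining, it can be used to search for association rules or for the best partition in clustering.

"Particle swarm optimization.pdf" probably contains an in-depth theoretical analysis of PSO, its mathematical model, and detailed application examples. "www.pudn.com.txt" is probably link text for a forum or resource site that may offer further PSO-related resources and discussion.

PSO is a powerful global search tool that has solved many complex optimization problems by simulating swarm intelligence. Understanding its basic principles and applications is of real practical value for solving optimization problems in IT.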
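The seven steps and the two update formulas described above can be sketched in a few lines of Python. This is only a minimal illustration, not the code shipped in the archive: the function name `pso`, the test function `neg_sphere`, the parameter values (w = 0.7, c1 = c2 = 1.5, 30 particles, 200 iterations), and the choice to clamp positions to the search bounds are all assumptions made for the example. Fitness is maximized, so a higher value is better.

```python
import random


def pso(fitness, dim, bounds, n_particles=30, n_iters=200,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Maximize `fitness` over a box; returns (gbest_position, gbest_fitness).

    All default parameter values are illustrative assumptions.
    """
    rng = random.Random(seed)
    lo, hi = bounds
    span = hi - lo

    # Step 1: random positions inside the bounds, small random velocities.
    X = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    V = [[0.1 * rng.uniform(-span, span) for _ in range(dim)]
         for _ in range(n_particles)]

    # Steps 2-4 for the initial swarm: evaluate, then set pBest and gBest.
    pbest = [x[:] for x in X]
    pbest_fit = [fitness(x) for x in X]
    g = max(range(n_particles), key=lambda i: pbest_fit[i])
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]

    for _ in range(n_iters):  # Step 7: fixed iteration budget as stop rule
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Step 5: inertia + pull toward pBest + pull toward gBest.
                V[i][d] = (w * V[i][d]
                           + c1 * r1 * (pbest[i][d] - X[i][d])
                           + c2 * r2 * (gbest[d] - X[i][d]))
                # Step 6: move, then clamp to the box (an assumption here).
                X[i][d] = min(hi, max(lo, X[i][d] + V[i][d]))
            # Steps 2-3: re-evaluate and update this particle's pBest.
            f = fitness(X[i])
            if f > pbest_fit[i]:
                pbest[i], pbest_fit[i] = X[i][:], f
        # Step 4: gBest is the best pBest in the swarm.
        g = max(range(n_particles), key=lambda i: pbest_fit[i])
        if pbest_fit[g] > gbest_fit:
            gbest, gbest_fit = pbest[g][:], pbest_fit[g]

    return gbest, gbest_fit


def neg_sphere(x):
    """Hypothetical test objective: maximum value 0 at the origin."""
    return -sum(v * v for v in x)


best_x, best_f = pso(neg_sphere, dim=2, bounds=(-5.0, 5.0))
print(best_x, best_f)
```

Because gBest is refreshed once per iteration, after every particle has moved, all particles in one iteration are pulled toward the same global best. Updating gBest inside the particle loop is a common alternative and usually behaves similarly on simple problems.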

pso.rar (2 files)

- www.pudn.com.txt (218 B)
- Particle swarm optimization...pdf (165 KB)
