
Introduction to OpenMP*

Tim Mattson

Principal Engineer

Intel Corporation

timothy.g.mattson@intel.com

Acknowledgements:

Barbara Chapman of the University of Houston, Rudi Eigenmann of Purdue, Sanjiv Shah of Intel, and others too numerous to name have contributed to this tutorial.

* The name “OpenMP” is the property of the OpenMP Architecture Review Board.


Agenda

• Parallel computing, threads, and OpenMP

• The core elements of OpenMP

– Thread creation

– Workshare constructs

– Managing the data environment

– Synchronization

– The runtime library and environment variables

• Case Studies and Examples

• Background information and extra details


Parallel Computing: a few definitions

• Concurrency: when multiple tasks are active at the same time.

– Concurrency is a general idea; it is present even on single-processor systems, for example inside the OS.

• Parallel computing: when concurrency is used with multiple processors to:

– Make a single job run faster (turnaround time, or capability computing).

– Decrease the time required to complete a collection of jobs (throughput, or capacity computing).
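To make the turnaround-time idea concrete, here is a minimal C sketch, assuming a compiler with OpenMP support (for example `gcc -fopenmp`). The function names `sum_of_squares`, `wall_seconds`, and `time_job` are illustrative and do not come from the tutorial, and the job itself is an arbitrary stand-in. When OpenMP is not enabled, the pragma is ignored and the same code runs serially.

```c
#include <assert.h>
#include <time.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* One "job": add up the squares 1*1 + 2*2 + ... + n*n.
   With OpenMP enabled, the loop iterations are divided among threads and
   the per-thread partial sums are combined by the reduction clause. */
double sum_of_squares(long n)
{
    assert(n >= 0);
    double total = 0.0;
    #pragma omp parallel for reduction(+:total)
    for (long i = 1; i <= n; i++)
        total += (double)i * (double)i;
    return total;
}

/* Wall-clock time in seconds. Uses the OpenMP timer when available,
   otherwise falls back to the C11 timespec_get function. */
double wall_seconds(void)
{
#ifdef _OPENMP
    return omp_get_wtime();
#else
    struct timespec ts;
    timespec_get(&ts, TIME_UTC);
    return (double)ts.tv_sec + 1e-9 * (double)ts.tv_nsec;
#endif
}

/* Run the job once; return its turnaround (elapsed wall-clock) time
   and store the computed sum in *result. */
double time_job(long n, double *result)
{
    double start = wall_seconds();
    *result = sum_of_squares(n);
    return wall_seconds() - start;
}
```

Calling `time_job` from a `main` with the environment variable `OMP_NUM_THREADS` set to 1 and then to a larger value gives a rough view of whether the single job's turnaround time improved. For small `n`, thread start-up overhead may outweigh any gain.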


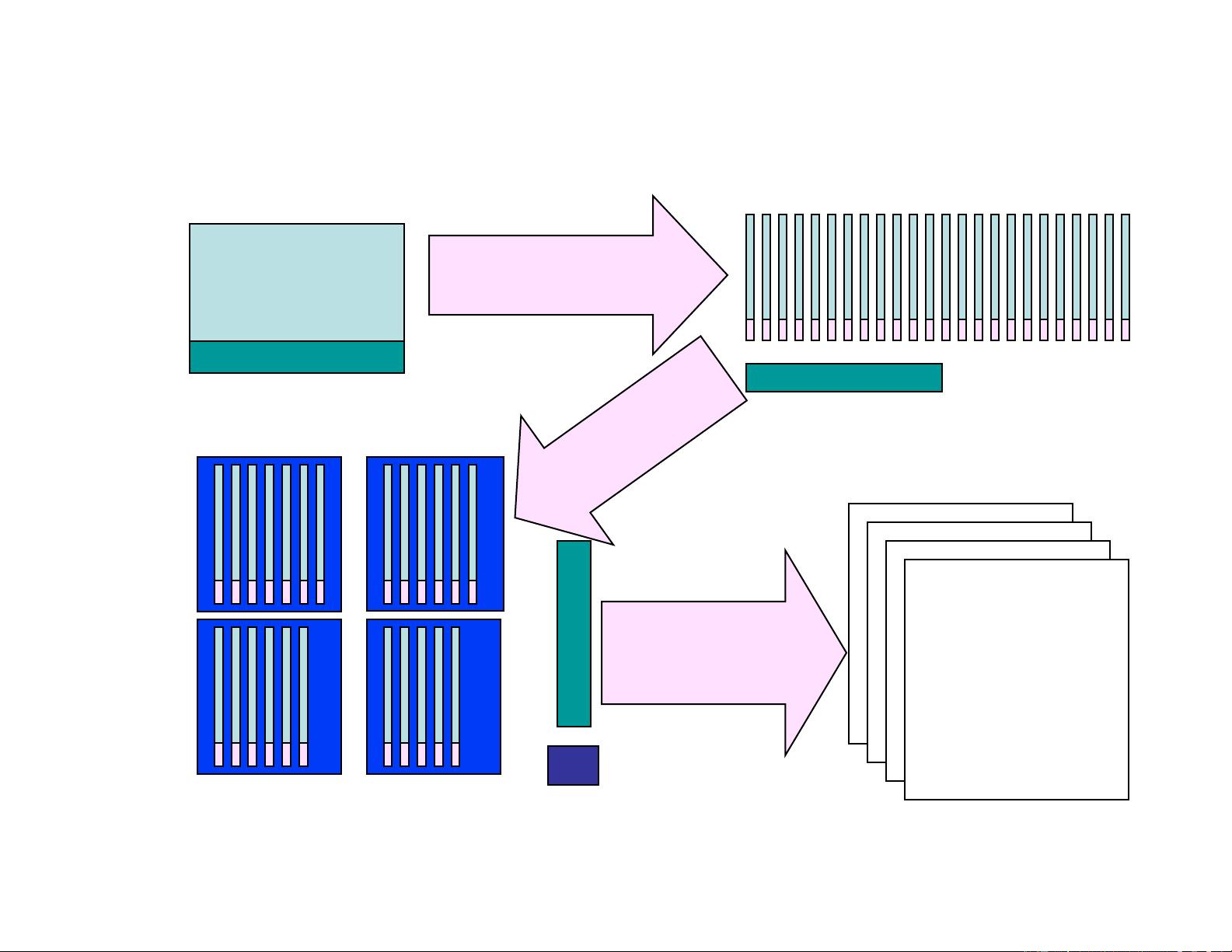

Parallel algorithms: the high level view

Original Problem

Tasks, shared and local data

Concurrent tasks & decompose data

Put it in the source code

Corresponding source code


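    Program SPMD_Emb_Par ()
    {
        // Pseudocode: every unit of execution runs this same program.
        TYPE *tmp, *func();
        global_array Data(TYPE);
        global_array Res(TYPE);
        int Num = get_num_procs();              // number of units of execution
        int id  = get_proc_id();                // this unit's rank, 0 .. Num-1
        if (id == 0) setup_problem(N, Data);    // N is the problem size
        for (int I = id; I < N; I = I + Num) {  // cyclic distribution of iterations
            tmp = func(I, Data);
            Res.accumulate(tmp);
        }
    }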

Organize tasks for execution

Units of execution + new shared data for extracted dependencies
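The SPMD pseudocode on this slide can be sketched in C with OpenMP roughly as follows. This is one possible translation, not the tutorial's own code: `spmd_accumulate` is a hypothetical name, `func` is a placeholder that simply doubles its input, and a plain `double` accumulator protected by a critical section stands in for the `Res` global array. Without OpenMP enabled, the code falls back to a single unit of execution.

```c
#include <assert.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical stand-in for func(I, Data) in the pseudocode. */
static double func(int i, const double *data)
{
    return 2.0 * data[i];
}

/* Each thread runs the same code (SPMD) and uses its own id to pick
   iterations id, id+num, id+2*num, ... (a cyclic distribution). */
double spmd_accumulate(const double *data, int n)
{
    assert(n >= 0);
    double res = 0.0;                 /* shared result, like Res */

    #pragma omp parallel
    {
        int id = 0;                   /* this thread's rank */
        int num = 1;                  /* number of threads in the team */
#ifdef _OPENMP
        id = omp_get_thread_num();
        num = omp_get_num_threads();
#endif
        double local = 0.0;           /* private partial result */
        for (int i = id; i < n; i += num)
            local += func(i, data);

        /* Only one thread at a time updates the shared result. */
        #pragma omp critical
        res += local;
    }
    return res;
}
```

Accumulating into a private `local` first and entering the critical section once per thread keeps contention on the shared result low; a `reduction` clause would be a more compact alternative.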


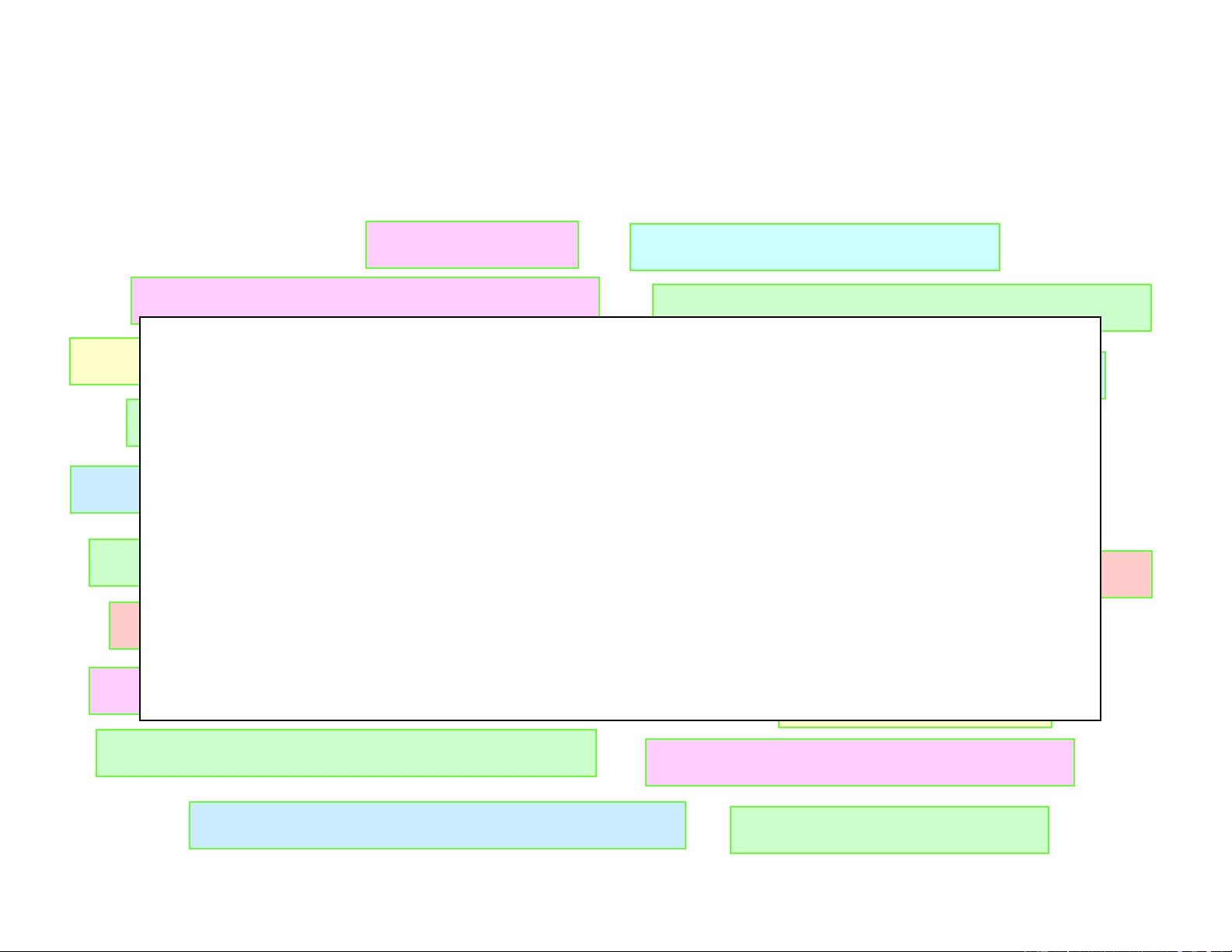

OpenMP* Overview:

omp_set_lock(lck)

#pragma omp parallel for private(A, B)

#pragma omp critical

C$OMP parallel do shared(a, b, c)

C$OMP PARALLEL REDUCTION (+: A, B)

call OMP_INIT_LOCK (ilok)

call omp_test_lock(jlok)

setenv OMP_SCHEDULE "dynamic"

CALL OMP_SET_NUM_THREADS(10)

C$OMP DO lastprivate(XX)

C$OMP ORDERED

C$OMP SINGLE PRIVATE(X)

C$OMP SECTIONS

C$OMP MASTER

C$OMP ATOMIC

C$OMP FLUSH

C$OMP PARALLEL DO ORDERED PRIVATE (A, B, C)

C$OMP THREADPRIVATE(/ABC/)

C$OMP PARALLEL COPYIN(/blk/)

Nthrds = OMP_GET_NUM_PROCS()

!$OMP BARRIER

OpenMP: An API for Writing Multithreaded Applications

• A set of compiler directives and library routines for parallel application programmers

• Greatly simplifies writing multi-threaded (MT) programs in Fortran, C and C++

• Standardizes the last 20 years of SMP practice
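As a small illustration of how directives and library routines work together, the following C sketch assumes an OpenMP-capable compiler (for example `gcc -fopenmp`). The function names `team_size` and `vector_add` are made up for this example. If OpenMP is not enabled, the pragmas are ignored and the code runs on one thread.

```c
#include <assert.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Library routine used inside a directive-created parallel region:
   report how many threads the runtime gave us. */
int team_size(void)
{
    int n = 1;                        /* serial fallback */
    #pragma omp parallel
    {
        /* One thread records the team size; the others wait at the
           implicit barrier that ends the single construct. */
        #pragma omp single
        {
#ifdef _OPENMP
            n = omp_get_num_threads();
#endif
        }
    }
    return n;
}

/* Compiler directive on an ordinary loop: the iterations are split
   among the threads, and each element of c is written by one thread. */
void vector_add(const double *a, const double *b, double *c, int n)
{
    assert(n >= 0);
    #pragma omp parallel for
    for (int i = 0; i < n; i++)
        c[i] = a[i] + b[i];
}
```

The number of threads can be chosen without recompiling by setting the `OMP_NUM_THREADS` environment variable before running a program that calls these functions.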
