A Method for Controlling Mouse Movement using a Real-Time Camera

Hojoon Park

Department of Computer Science

Brown University, Providence, RI, USA

hojoon@cs.brown.edu

Abstract

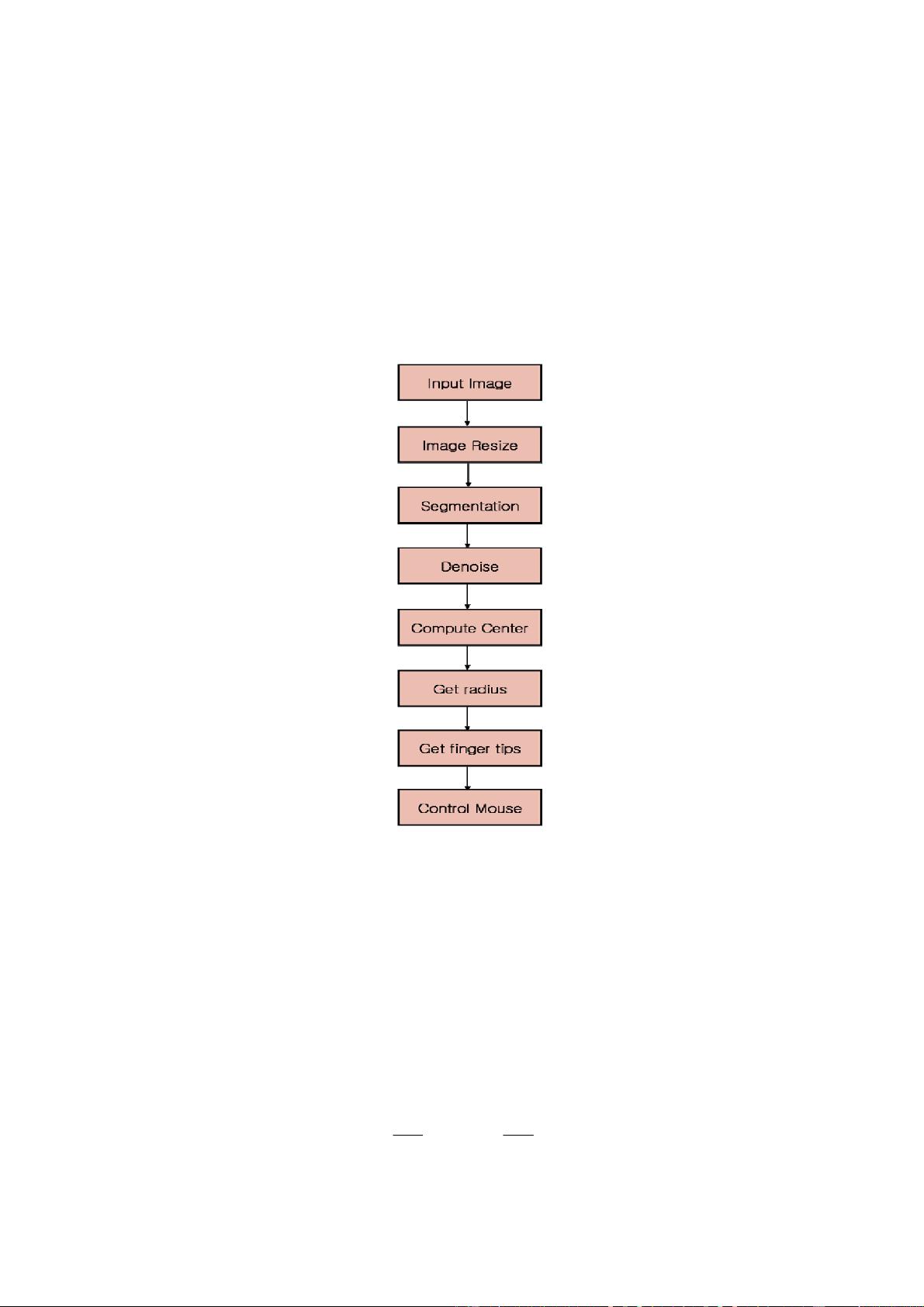

This paper presents a new approach for controlling mouse movement using a real-time camera. Most existing approaches involve changing mouse parts, such as adding more buttons or changing the position of the tracking ball. Instead, we propose to change the hardware design. Our method uses a camera and computer vision techniques, such as image segmentation and gesture recognition, to control mouse tasks (left and right clicking, double-clicking, and scrolling), and we show how it can perform everything current mouse devices can. This paper shows how to build this mouse control system.

1. Introduction

As computer technology continues to develop, people have smaller and smaller electronic devices and want to use them ubiquitously. There is a need for new interfaces designed specifically for use with these smaller devices. Increasingly, we are recognizing the importance of human-computer interaction (HCI), and in particular vision-based gesture and object recognition. Simple interfaces already exist, such as embedded-keyboard, folder-keyboard, and mini-keyboard. However, these interfaces need some amount of space to use and cannot be used while moving. Touch screens are also a good control interface and are now used globally in many applications. However, touch screens cannot be applied to desktop systems because of cost and other hardware limitations. By applying vision technology and controlling the mouse with natural hand gestures, we can reduce the work space required. In this paper, we propose a novel approach that uses a video device to control the mouse system. This mouse system can control all mouse tasks, such as clicking (right and left), double-clicking, and scrolling. We employ several image processing algorithms to implement this.

2. Related Work

Many researchers in the human-computer interaction and robotics fields have tried to control mouse movement using video devices. However, they all used different methods to generate a clicking event. One approach, by Erdem et al., used fingertip tracking to control the motion of the mouse. A click of the mouse button was implemented by defining a screen region such that a click occurred when the user’s hand passed over it [1, 3]. Another approach was developed by Chu-Feng Lien [4]. He used only the fingertips to control the mouse cursor and click. His clicking method was based on image density, and required the user to hold the mouse cursor on the desired spot for a short period of time. Paul et al. used yet another method to click. They used the motion of the thumb (from a ‘thumbs-up’ position to a fist) to mark a clicking event. Movement of the hand while making a special hand sign moved the mouse pointer.
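The hold-to-click idea mentioned above can be illustrated with a simple timer-based sketch. This is not Lien's image-density method; it only shows the general "dwell" behavior, in which a click fires once the cursor has stayed near one spot long enough. The function name `dwell_clicks`, the `(t, x, y)` sample format, and the `radius` and `dwell_time` thresholds are assumptions made for illustration.

```python
import math
from typing import Iterable, List, Tuple

Sample = Tuple[float, float, float]  # (timestamp in seconds, x, y); assumed format


def dwell_clicks(samples: Iterable[Sample],
                 radius: float = 10.0,
                 dwell_time: float = 1.0) -> List[Sample]:
    """Return one click per dwell: the cursor stayed within `radius` pixels
    of where it first settled for at least `dwell_time` seconds."""
    clicks: List[Sample] = []
    anchor = None   # sample at which the current dwell started
    fired = False   # whether this dwell has already produced a click
    for t, x, y in samples:
        moved_away = (anchor is None or
                      math.hypot(x - anchor[1], y - anchor[2]) > radius)
        if moved_away:
            # Cursor left the dwell area: restart the timer at this position.
            anchor = (t, x, y)
            fired = False
        elif not fired and t - anchor[0] >= dwell_time:
            # Held still long enough: report a click at the anchor position.
            clicks.append((t, anchor[1], anchor[2]))
            fired = True
    return clicks
```

With hypothetical cursor samples, holding the cursor near (100, 100) for one second yields a single click, and a later brief stop elsewhere yields none.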

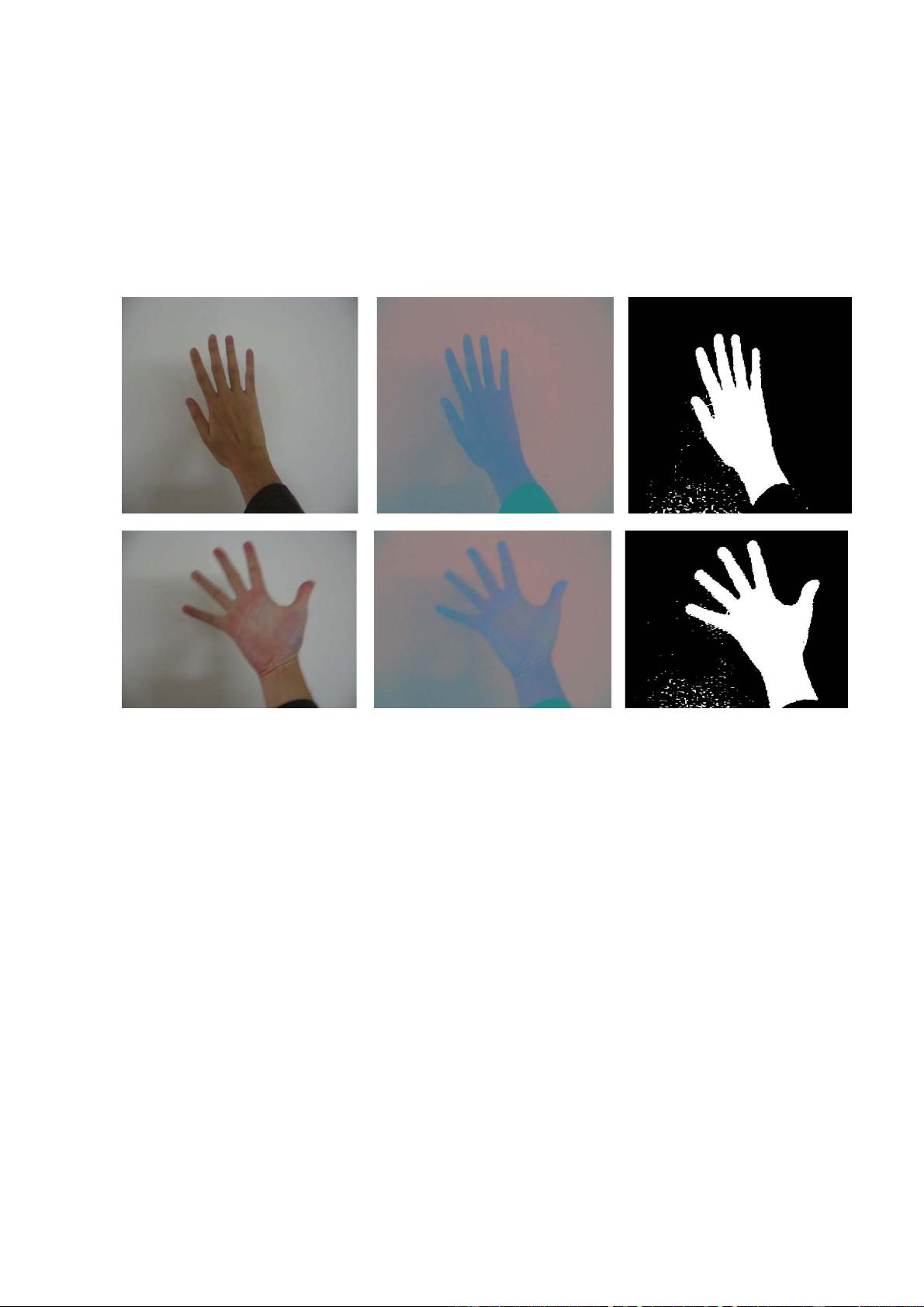

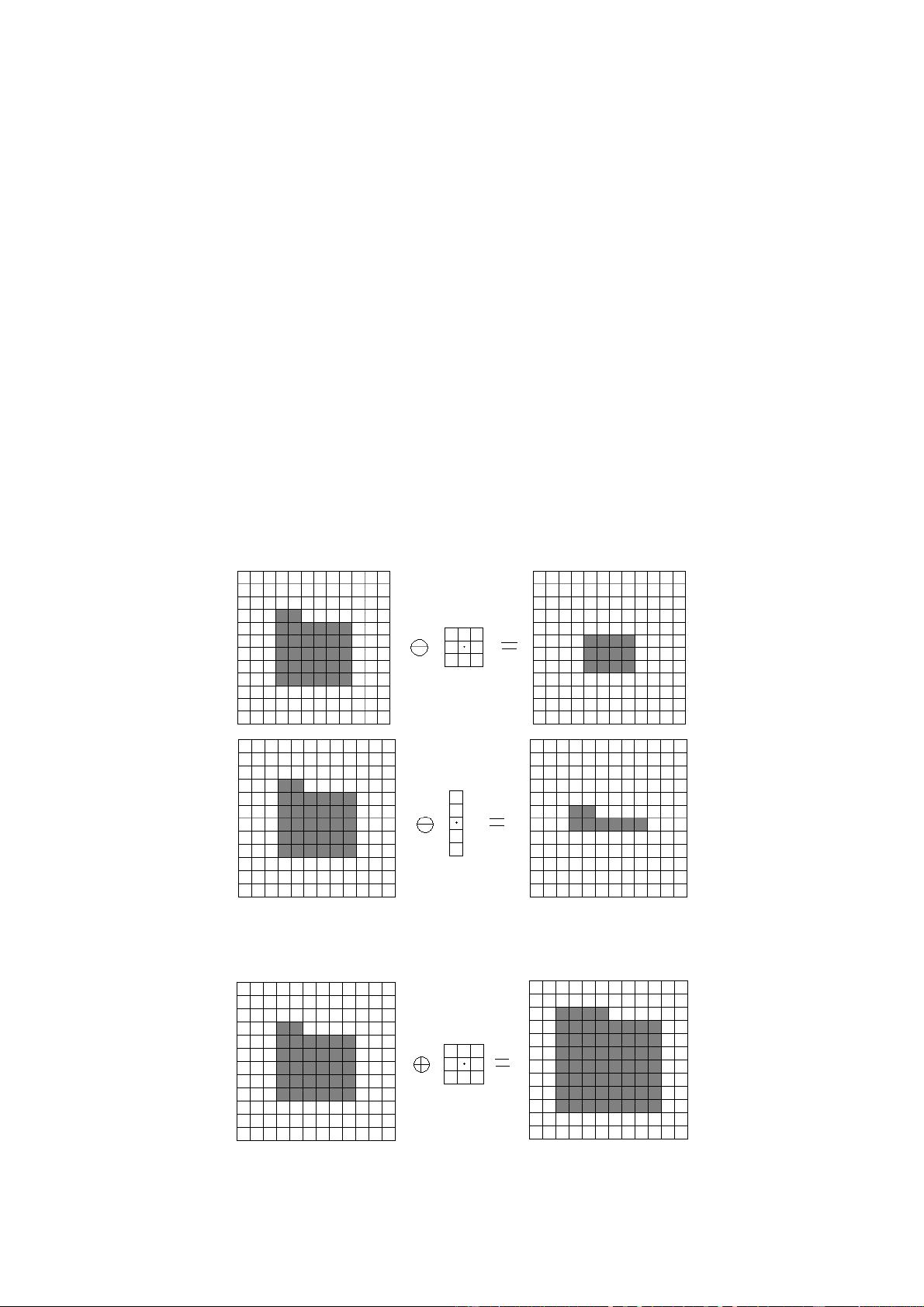

Our project was inspired by a paper by Asanterabi Malima et al. [8], who developed a finger-counting system to control the behavior of a robot. We used their algorithm to estimate the radius of the hand region, together with other algorithms in our image segmentation stage, to improve our results. Segmentation is the most important part of this project. Our system uses a color calibration method to segment the hand region and a convex hull algorithm to find fingertip positions [7].
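As a rough illustration of how a convex hull can expose fingertip candidates, the sketch below computes the hull of a set of hand-contour points with Andrew's monotone chain algorithm and keeps the hull vertices that lie far from the hand center. This is a minimal sketch under stated assumptions, not the implementation used in this system: the choice of hull algorithm, the helper `fingertip_candidates`, and the `min_dist` threshold are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[int, int]


def cross(o: Point, a: Point, b: Point) -> int:
    """Z-component of (a - o) x (b - o); positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: List[Point]) -> List[Point]:
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: List[Point] = []
    for p in pts:
        # Drop the last vertex while it would make a clockwise or straight turn.
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other chain.
    return lower[:-1] + upper[:-1]


def fingertip_candidates(hull: List[Point], center: Point,
                         min_dist: float) -> List[Point]:
    """Hypothetical filter: keep hull vertices at least `min_dist` from the center."""
    cx, cy = center
    return [p for p in hull
            if (p[0] - cx) ** 2 + (p[1] - cy) ** 2 >= min_dist ** 2]
```

In a toy contour where a single point sticks out from a square "palm", the hull drops the interior point and the distance filter keeps only the protruding vertex. In practice the contour would come from the segmented hand region, and the threshold could be tied to the estimated hand radius.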
