First International Workshop on

Data Mining in Sensor Networks

Chairs: Rasmus Pedersen & Eric Jul

Keynote by Samuel Madden, MIT

Table of Content

An Adaptive Modular Approach to the Mining of Sensor Network Data,

Gianluca Bontempi and Yann-Aël Le Borgne……………………………………….………………3

Data Mining in Wireless Sensor Networks Based on Artificial Neural-Networks Algorithms,

Andrea Kulakov and Danco Davcev………………………………………………………………..10

Distributed Pre-Processing of Data on Networks of Berkeley Motes Using Non-Parametric EM,

Ian Davidson and S. S. Ravi………………………………………………………………………...17

A Distributed Approach for Prediction in Sensor Networks,

Sabine M. McConnell and David B. Skillicorn……………………………………………………..28

Local Hill Climbing in Sensor Networks

Denis Krivitski, Assaf Schustery, and Ran Wolff……………………………………………...…...38

An adaptive modular approach to the mining of sensor network data.

Gianluca Bontempi, Yann-A¨el Le Borgne

∗

.

ULB Machine Learning Group

Universit´e Libre de Bruxelles, Belgium

email: {gbonte,yleborgn}@ulb.ac.be

Abstract

This paper proposes a two-layer modular architecture

to adaptively perform data mining tasks in large sensor

networks. The architecture consists in a lower layer

which performs data aggregation in a modular fashion

and in an upper layer which employs an adaptive

local learning technique to extract a prediction model

from the aggregated information. The rationale of the

approach is that a modular aggregation of sensor data

can serve jointly two purposes: first, the organization

of sensors in clusters, then reducing the communication

effort, second, the dimensionality reduction of the data

mining task, then improving the accuracy of the sensing

task.

1 Introduction.

There are plenty of potential applications for intelli-

gent sensor networks: distributed information gathering

and processing, monitoring, supervision of hazardous

environments, intrusion detection, cooperative sensing,

tracking.

The ever-increasing use of sensing units asks for

the development of specific data mining architectures.

What is expected from these architectures is not only

accurate modeling of high dimensional streams of data

but also a minimization of the communication and

computational effort demanded to each single sensor

unit.

The simplest approach to the analysis of sensor

network data makes use of a centralized architecture

where a central server maintains a database of readings

from all the sensors. The whole analysis effort is

localized in the server, whose mission is to extract from

the flow of data the high-level information expected to

be returned by the monitoring system. If we assume

that reasonable-size sensor networks will be made of

thousands of nodes, the limitation of this approach is

strikingly evident: the number of messages sent in the

∗

Supported by the COMP

2

SYS project, sponsored by the

HRM program of the European Community (MEST-CT-2004-

505079)

system as well as the number of variables of the data

mining task are too large to be managed efficiently.

It has been suggested in literature that alternative

architectures are to be preferred in applications where

neighboring sensors are likely to have correlated read-

ings [6]. This is the case of aggregating systems which,

according to the definition of [10], are systems where the

data obtained from the different source nodes can be ag-

gregated before being transmitted along the network. In

these systems, we can imagine the existence of interme-

diary nodes (aggregators) having the capability to fuse

the information from different sources. Sensor networks

for weather forecasting and monitoring are examples of

aggregating systems. The authors of [6] showed that a

compression of the sensor information can be performed

at local level then reducing the amount of communica-

tion and the bandwidth required for the functioning of

the system.

Atthesametimetechniquesofdatacompression,

like Principal Component analysis (PCA) or Indepen-

dent Component Analysis (ICA) [8], are often used in

data mining to reduce the complexity of modeling tasks

with a very large number of variables. It is well known

in the data mining literature that methods for reducing

complexity are beneficial for several reasons: improve-

ment of the accuracy and intelligibility of the model,

reduced storage and time requirements.

The rationale of the paper is that a modular orga-

nization of the sensor network can be used to jointly

address the two main issues in mining sensor network

data: the minimization of the communication effort and

the accurate extraction of high-level information from

massive and streaming datasets.

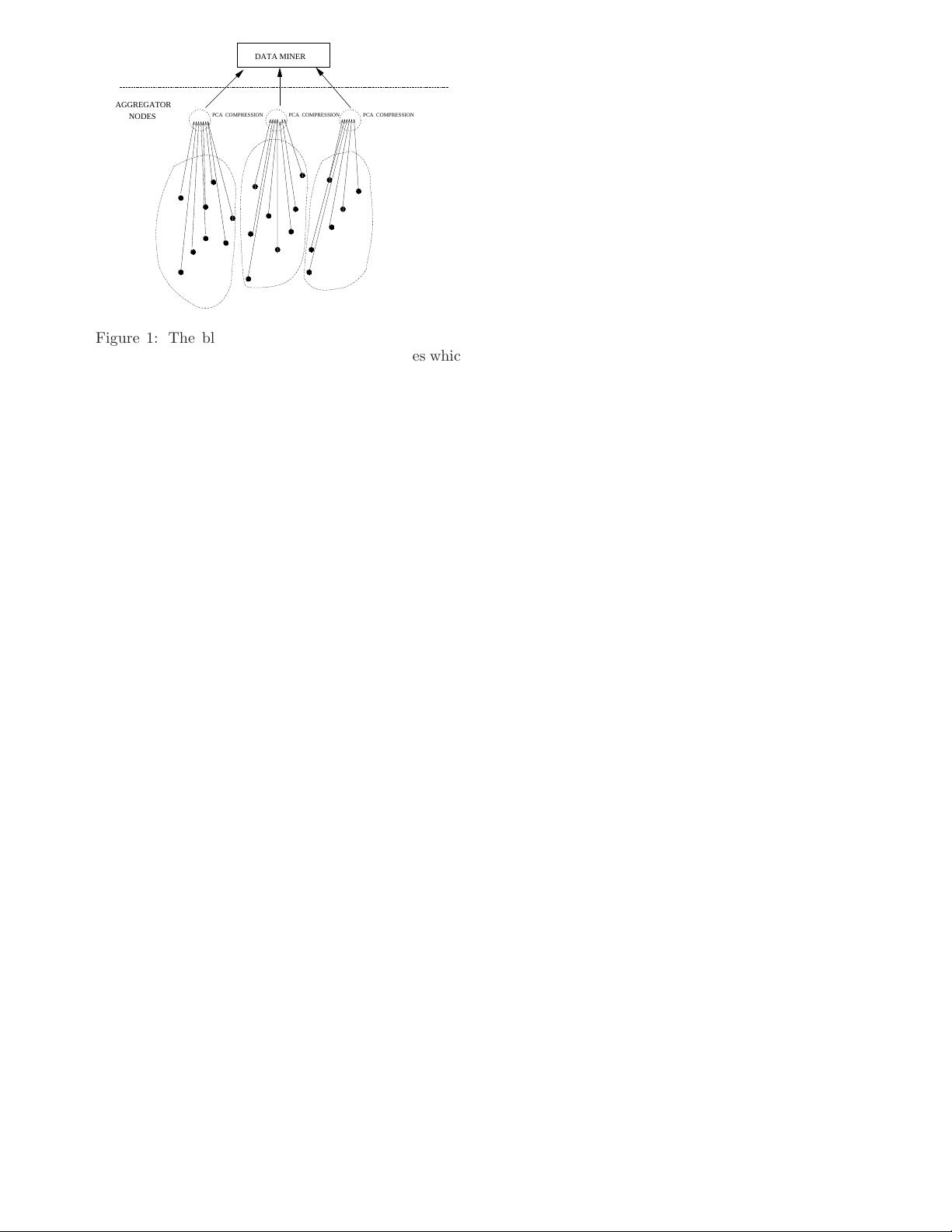

In particular this paper proposes a data driven

procedure to configure a two-layer topology of a sensor

network (Figure 1) made of

1. a lower level whose task is to organize the sensors

in clusters, compress their signals and transmit the

aggregate information to the upper level,

2. an upper level playing the role of a data mining

server which uses the aggregate information to

3

SENSING UNITS

0011

0011

0011

0011

0011

0011

0011

0011

0011

0011

0011

0011

0011

0011

0011

0011

0011

0011

000111

0011

0011

PCA COMPRESSIONPCA COMPRESSION PCA COMPRESSION

DATA MINER

A

GGREGATOR

NODES

000111

Figure 1: The black dots represent the sensing units.

The dotted circles represent the aggregator nodes which

carry out the fusion of data coming from neighboring

sensors before sending the aggregated signals up to the

data mining server.

carry out the required sensing task.

We focus here on problems where the sensors are used

to perform a supervised learning (e.g. classification,

regression or prediction) task: examples could be the

classification of traffic fluidity on the basis of route

sensing units or the prediction of a wave intensity on the

basis of sensors scattered in the ocean. Our approach

consists in using a historical data set to find the best

way to combine the measures of neighboring sensors

such that the accuracy of the prediction model based

on such aggregate measures is optimized. The design

procedure relies on an iterative optimization procedure

which loops over five steps: (i) a partition of the sensing

units in proximity clusters, (ii) the compression of the

signals of each cluster of sensors, (iii) the aggregation

and transmission of the compressed signals to the upper

data mining server, (iv) the training of the prediction

model in the data mining server, and (v) the assessment

of the partition according to multiple criteria, like the

prediction accuracy of the data mining model and the

energy and transmission requirements of the resulting

network. The best partition which is returned by this

multi-criteria optimization procedure can be used as a

template for the topological organization of sensors.

An important issue in mining sensor network data

concerns the streaming and possibly non stationary

nature of data. Non stationarity may be due to changes

in the phenomenon underlying the measures as well

to sensor malfunctioning and/or modifications of their

geographical location. In order to address this aspect

we have recourse to adaptive features at both levels

of our architecture. At the lower sensor integration

level we use an effective sequential implementation of

the Principal Component Analysis (PCA) technique:

the PAST algorithm [11]. The upper data mining

module uses an adaptive lazy learning (LL) technique [1]

characterized by a fast training phase and an effective

treatment of non stationarity.

The experimental section of the paper presents

some preliminary results obtained by adopting the

proposed two-layer architecture in the context of a

simulated monitoring task: measuring the wavelength

of a two dimensional wave in situation of scattering.

2 The problem

Consider a sensor network S made of S sensors where

P is a [S, 3] matrix containing the three-dimensional

coordinates of the S sensors and

x(t)={s

1

(t),s

2

(t),...,s

S

(t)}(2.1)

is the state (or snapshot) of the sensor network at time t.

Suppose we intend to employ S to perform a supervised

learning task, for example a regression problem

y(t)=f(x(t)) + ε(t)(2.2)

where y is the variable to be predicted at time t on

the basis of the state x(t) of the network S and ε is

usually thought as the term including modeling error,

disturbances and noise.

If we have available a finite dataset D

N

=

{x(t

i

),y(t

i

),i =1,...,N} of N input-output obser-

vations, this problem can be tackled as a conventional

regression problem, by first estimating an approximator

of f on the basis of D

N

and then using this estimator

as a predictor of y.

However, if, like in the case of sensor networks, the

number S is huge, the mapping f is non-stationary

and the data are collected sequentially, conventional

techniques reach rapidly their limits. In particular, the

large dimensionality of the problem asks for feature

selection problem as well as the streaming aspect of

the problem requires sequential (also called recursive)

estimation approaches.

This paper proposes an approach to the problem of

data mining in sensor networks which tries to concili-

ate the needs for an accurate prediction of the output y

with the constraints related to energy reserves, commu-

nication bandwidth and sensor computational power.

The following subsections will rapidly sketch the

two computational modules used in our approach: the

recursive PCA and the adaptive Lazy Learning. Sec-

tion 5 will describe how these modules are combined in

our architecture for mining sensor networks.

4

3 PCA compression techniques

As discussed above, each data mining problem in the

context of sensor network data with large S has to face

the problem of reducing dimensionality. Existing tech-

niques for feature selection (for an up-to-date state of

the art on feature selection see [7]) can be grouped into

two main approaches: the wrapper and the filter ap-

proach. In the wrapper approach [9] the feature sub-

set selection algorithm exists as a wrapper around the

learning algorithm, which is often considered as a black

box able to return (e.g. via cross-validation) an evalua-

tion of the quality of a feature subset. On the contrary,

the filter approach selects features using a preprocessing

step independently of the learning algorithm.

In this paper we will adopt the Principal Compo-

nent analysis (PCA) technique, an instance of the filter

approach. PCA is a classic technique in statistical data

analysis, feature extraction and data compression [8].

Given a set of multivariate measurements, its goal is

to find a smaller set of variables with less redundancy,

that would give as good a representation as possible. In

PCA the redundancy is measured by computing linear

correlations between variables. PCA entails transform-

ing the n original variables x

1

,...,x

n

into m new vari-

ables z

1

,...,z

m

(called principal components) such that

the new variables are uncorrelated with each other and

account for decreasing portions of the variance of the

original variables. Consider a vector x of size n and a

matrix X containing N measures of the vector x.The

m principal components

z

k

=

n

j=1

w

jk

x

j

= w

T

k

x, k =1,...,m(3.3)

are defined as weighted sums of the elements of x

with maximal variance, under the constraints that the

weights are normalized and the principal components

are uncorrelated with each other. It is well-known

from basic linear algebra that the solution to the PCA

problem is given in terms of the unit-length eigenvectors

e

1

,...,e

n

of the correlation matrix of x. Once ordered

the eigenvectors such that the corresponding eigenvalues

λ

1

,...,λ

n

satisfy λ

1

≥ λ

2

≥ ... ≥ λ

n

, the principal

component z

k

is given by z

k

= e

T

k

x.Itcanbeshown

that the PCA problem can be also put in the form

of a minimum mean-square error compression of x.

This means that the computation of the w

k

for the

first m principal components is equivalent to find the

orthonormal basis w

1

,...,w

m

that minimizes

J

PCA

=

1

N

N

t=1

x(t) −

m

k=1

(w

T

k

x(t))w

k

2

(3.4)

If we denote W =(w

1

,...,w

m

)

T

where W is a matrix

of size [m, n]wehave

J

PCA

=

1

N

N

t=1

x(t) − W

T

Wx(t)

2

(3.5)

What is appealing in this formulation is that a recur-

sive formulation of this least-squares problem is pro-

vided by the Projection Approximation Subspace Track-

ing (PAST) algorithm proposed by [11]. This algorithm,

based on the recursive formulation of the least squares

problem, has low computational cost and makes pos-

sible an updating of the principal components as new

observations become available.

Once the matrix W is computed a reduction of the

input dimensionality is obtained by transforming the

input matrix X into the matrix Z = XW

T

and by

transforming the regression problem of dimensionality

n into a problem of dimensionality m in the space of

principal components.

At this step the question arises of how to choose m.

The techniques more commonly used rely either on the

absolute values of the eigenvalues or on procedures of

cross-validation [8].

4 The Lazy Learning algorithm

In supervised learning literature a possible way to

classify learning techniques relies on the dichotomy:

local memory-based versus global methods. Global

modeling builds a single functional model of the dataset.

This has traditionally been the approach taken in neural

networks [2] and other form of non-linear statistical

regression. The available dataset is used by a learning

algorithm to produce a model of the mapping and then

the dataset is discarded and only the model is kept.

Local algorithms defer processing of the dataset until

they receive request for information (e.g. prediction or

local modeling). The classical nearest neighbor method

is the original approach to local modeling. A database

of observed input-output data is always kept and the

estimate for a new operating point is derived from an

interpolation based on a neighborhood of the query

point.

The data mining architecture proposed in this paper

adopts the Lazy Learning (LL) algorithm proposed

by [1], an instance of the local modeling approach

that, on a query-by-query basis, tunes the number of

neighbors using a local cross-validation criterion. For

a detailed description of the approach see also [5] et

references therein. The LL algorithm, publicly available

in a MATLAB and R implementation

1

,provedtobe

successful in many problems of non-linear data analysis

and time series prediction [5, 3, 4].

1

http://iridia.ulb.ac.be/∼lazy

5