The community has already taken significant steps in this direction, with convolutional neural nets (CNNs) becoming the common workhorse behind a wide variety of image prediction problems. CNNs learn to minimize a loss function (an objective that scores the quality of results), and although the learning process is automatic, a lot of manual effort still goes into designing effective losses. In other words, we still have to tell the CNN what we wish it to minimize. But, just like Midas, we must be careful what we wish for! If we take a naive approach and ask the CNN to minimize the Euclidean distance between predicted and ground-truth pixels, it will tend to produce blurry results [29, 46]. This is because Euclidean distance is minimized by averaging all plausible outputs, which causes blurring. Coming up with loss functions that force the CNN to do what we really want (e.g., output sharp, realistic images) is an open problem and generally requires expert knowledge.
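
The averaging argument can be checked numerically. This is a minimal sketch that uses made-up data, not results from any experiment: two hypothetical 1-D "images" of a sharp step edge at different positions stand in for two equally plausible outputs. The prediction with the lowest expected squared error is their pixelwise mean.

```python
import numpy as np

# Two equally plausible 1-D "images": the same sharp step edge at two positions.
# These arrays are hypothetical toy data chosen only for illustration.
edge_a = np.array([0., 0., 1., 1., 1., 1.])
edge_b = np.array([0., 0., 0., 0., 1., 1.])
outcomes = np.stack([edge_a, edge_b])

def expected_l2(pred):
    """Mean squared error of `pred`, averaged over both equally likely outcomes."""
    return np.mean((outcomes - pred) ** 2)

# The minimizer of expected squared error is the mean of the plausible outputs.
mean_pred = outcomes.mean(axis=0)

print(mean_pred)                # intermediate 0.5 values where the edges disagree
print(expected_l2(mean_pred))   # lower loss...
print(expected_l2(edge_a))      # ...than committing to either sharp edge
```

The loss-minimizing output is a soft ramp that matches neither sharp edge. This is the blur described above, produced here by the loss rather than by any limitation of the model.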

It would be highly desirable if we could instead specify only a high-level goal, like “make the output indistinguishable from reality”, and then automatically learn a loss function appropriate for satisfying this goal. Fortunately, this is exactly what is done by the recently proposed Generative Adversarial Networks (GANs) [14, 5, 30, 36, 47]. GANs learn a loss that tries to classify whether the output image is real or fake, while simultaneously training a generative model to minimize this loss. Blurry images will not be tolerated, since they look obviously fake. Because GANs learn a loss that adapts to the data, they can be applied to a multitude of tasks that traditionally would require very different kinds of loss functions.

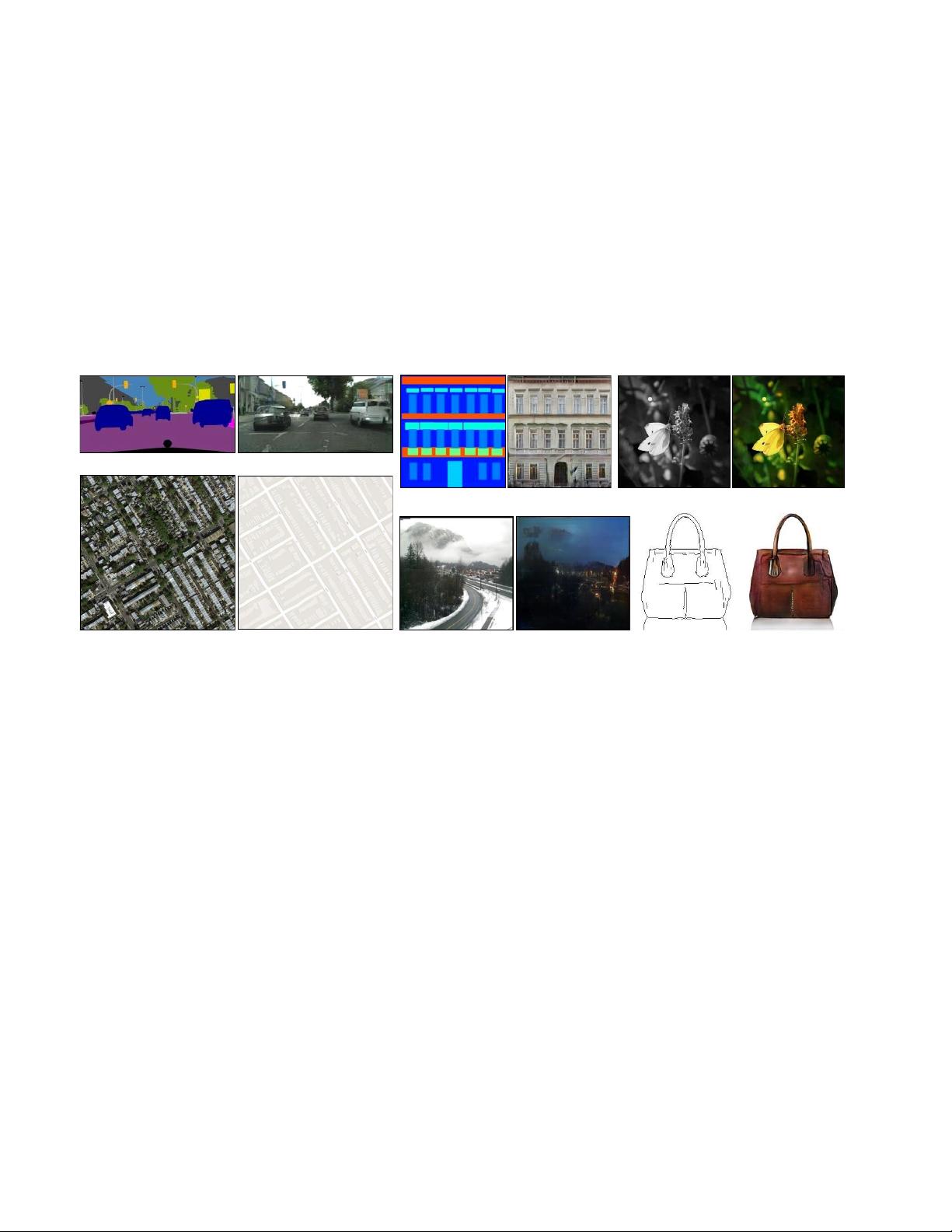

In this paper, we explore GANs in the conditional setting. Just as GANs learn a generative model of data, conditional GANs (cGANs) learn a conditional generative model [14]. This makes cGANs suitable for image-to-image translation tasks, where we condition on an input image and generate a corresponding output image.

GANs have been vigorously studied in the last two years, and many of the techniques we explore in this paper have been previously proposed. Nonetheless, earlier papers have focused on specific applications, and it has remained unclear how effective image-conditional GANs can be as a general-purpose solution for image-to-image translation. Our primary contribution is to demonstrate that on a wide variety of problems, conditional GANs produce reasonable results. Our second contribution is to present a simple framework sufficient to achieve good results, and to analyze the effects of several important architectural choices. Code is available at https://github.com/phillipi/pix2pix.

1. Related work

Structured losses for image modeling. Image-to-image translation problems are often formulated as per-pixel classification or regression [26, 42, 17, 23, 46]. These formulations treat the output space as “unstructured” in the sense that each output pixel is considered conditionally independent from all others given the input image. Conditional GANs instead learn a structured loss. Structured losses penalize the joint configuration of the output. A large body of literature has considered losses of this kind, with popular methods including conditional random fields [2], the SSIM metric [40], feature matching [6], nonparametric losses [24], the convolutional pseudo-prior [41], and losses based on matching covariance statistics [19]. Our conditional GAN is different in that the loss is learned, and can, in theory, penalize any possible structure that differs between output and target.
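
The snippet below is a minimal sketch of what "unstructured" means in practice. It assumes a mean absolute error as the per-pixel loss and uses toy 4x4 arrays; both choices are for illustration only. Two hypothetical predictions get exactly the same loss. One has four isolated wrong pixels and the other has a single coherent 2x2 blob. The loss is also unchanged when the pixel positions are shuffled, so it carries no information about spatial arrangement.

```python
import numpy as np

target = np.zeros((4, 4))

# Two hypothetical predictions, each with 4 of 16 pixels wrong.
scattered = target.copy()
scattered[[0, 1, 2, 3], [0, 2, 1, 3]] = 1.0  # isolated "salt" noise
block = target.copy()
block[1:3, 1:3] = 1.0                         # one coherent 2x2 blob

def per_pixel_l1(pred, tgt):
    """Mean absolute error: an average of independent per-pixel terms."""
    return np.mean(np.abs(pred - tgt))

# Shuffling pixel positions (jointly in prediction and target) leaves the loss unchanged.
rng = np.random.default_rng(0)
perm = rng.permutation(target.size)
shuffled = per_pixel_l1(block.ravel()[perm], target.ravel()[perm])

print(per_pixel_l1(scattered, target), per_pixel_l1(block, target), shuffled)
```

A structured loss, such as the learned cGAN loss discussed here, can in principle score these two error patterns differently. A per-pixel loss cannot.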

Conditional GANs. We are not the first to apply GANs in the conditional setting. Previous works have conditioned GANs on discrete labels [28], text [32], and, indeed, images. The image-conditional models have tackled inpainting [29], image prediction from a normal map [39], image manipulation guided by user constraints [49], future frame prediction [27], future state prediction [48], product photo generation [43], and style transfer [25]. Each of these methods was tailored for a specific application. Our framework differs in that nothing is application-specific. This makes our setup considerably simpler than most others.

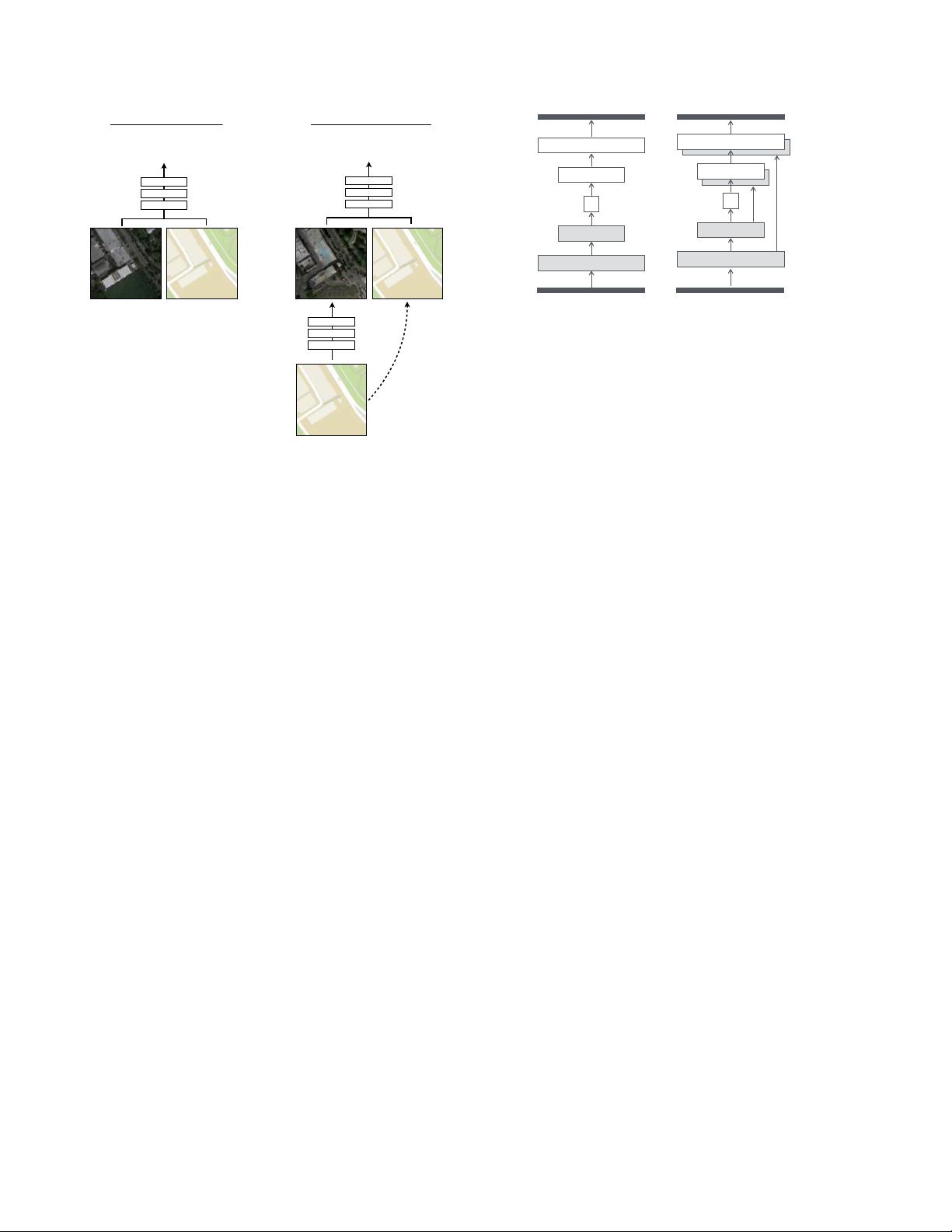

Our method also differs from these prior works in several architectural choices for the generator and discriminator. Unlike past work, for our generator we use a “U-Net”-based architecture [34], and for our discriminator we use a convolutional “PatchGAN” classifier, which only penalizes structure at the scale of image patches. A similar PatchGAN architecture was previously proposed in [25] for the purpose of capturing local style statistics. Here we show that this approach is effective on a wider range of problems, and we investigate the effect of changing the patch size.
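
Each output unit of a fully convolutional discriminator sees only a bounded window of its input. The "patch size" that a PatchGAN penalizes is therefore that unit's receptive field. The sketch below computes this size for a plain stack of convolutions without dilation. The layer configurations are hypothetical examples chosen for illustration, not the exact architecture described in this paper.

```python
def receptive_field(layers):
    """Receptive field (in input pixels) of one output unit of a conv stack.

    `layers` is a list of (kernel_size, stride) pairs ordered from input to output.
    Walking backwards from a single output unit, each layer maps a window of
    r units to r * stride + (kernel_size - stride) units of its own input.
    """
    r = 1
    for kernel, stride in reversed(layers):
        r = r * stride + (kernel - stride)
    return r

# Hypothetical discriminators: 4x4 kernels, n_down stride-2 layers,
# followed by two stride-1 layers.
for n_down in (1, 2, 3):
    stack = [(4, 2)] * n_down + [(4, 1), (4, 1)]
    print(n_down, receptive_field(stack))  # -> 16, 34, 70 for n_down = 1, 2, 3
```

Under these assumptions, each extra stride-2 layer roughly doubles the patch. Changing depth is therefore one simple way to vary the patch size that the discriminator penalizes.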

2. Method

GANs are generative models that learn a mapping from a random noise vector $z$ to an output image $y$, $G: z \rightarrow y$ [14]. In contrast, conditional GANs learn a mapping from an observed image $x$ and a random noise vector $z$ to $y$, $G: \{x, z\} \rightarrow y$. The generator $G$ is trained to produce outputs that cannot be distinguished from “real” images by an adversarially trained discriminator, $D$, which is trained to do as well as possible at detecting the generator’s “fakes”. This training procedure is diagrammed in Figure 2.

2.1. Objective

The objective of a conditional GAN can be expressed as

$$\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y \sim p_{data}(x,y)}\left[\log D(x, y)\right] + \mathbb{E}_{x \sim p_{data}(x),\, z \sim p_z(z)}\left[\log\left(1 - D(x, G(x, z))\right)\right], \tag{1}$$
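
Eq. (1) can be estimated from a batch of discriminator outputs by replacing each expectation with a sample mean. The function below is a minimal sketch of that estimate. It assumes $D$ outputs probabilities in $(0, 1)$ and clips them with a small `eps` for numerical safety. The clipping and the function name are illustrative assumptions, not part of Eq. (1).

```python
import numpy as np

def cgan_objective(d_real, d_fake, eps=1e-12):
    """Monte Carlo estimate of L_cGAN(G, D) in Eq. (1).

    d_real: discriminator outputs D(x, y) on real (input, target) pairs.
    d_fake: discriminator outputs D(x, G(x, z)) on generated pairs.
    D is trained to maximize this value and G to minimize it.
    The eps clipping only guards against log(0); it is not part of Eq. (1).
    """
    d_real = np.clip(np.asarray(d_real, dtype=float), eps, 1.0 - eps)
    d_fake = np.clip(np.asarray(d_fake, dtype=float), eps, 1.0 - eps)
    return np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake))

# A maximally confused discriminator (D = 0.5 everywhere) gives -log 4 (about -1.386).
confused = cgan_objective([0.5, 0.5], [0.5, 0.5])
# A discriminator that separates real from fake pushes the value toward 0.
confident = cgan_objective([0.9, 0.95], [0.1, 0.05])
print(confused, confident)
```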
