Journal of Communication and Computer 15 (2019) 1-13

doi: 10.17265/1548-7709/2019.01.001

DAVID PUBLISHING

Artificial Intelligence Driven Resiliency with Machine Learning and Deep Learning Components

Bahman Zohuri¹ and Farhang Mossavar Rahmani²

1. Research Associate Professor, University of New Mexico, Electrical Engineering and Computer Science Department, Albuquerque, New Mexico, USA

2. Professor of Finance and Director of MBA School of Business and Management, San Diego, California, USA

Abstract: The future of any business, from banking, e-commerce, real estate, homeland security, healthcare, marketing, the stock market, manufacturing, education, and retail to government organizations, depends on the data and analytics capabilities that are built and scaled. The speed of technological change in recent years has been a real challenge for all businesses. To manage it, a significant number of organizations are exploring Big Data (BD) infrastructure that helps them take advantage of new opportunities while saving costs. Timely transformation of information is also critical to the survivability of an organization. Having the right information at the right time not only enhances the knowledge of stakeholders within an organization but also provides them with a tool to make the right decision at the right moment. It is no longer enough to rely on a sampling of information about an organization's customers. Decision-makers need vital insights into customers' actual behavior, which requires enormous volumes of data to be processed. We believe that Big Data infrastructure is the key to successful Artificial Intelligence (AI) deployments and to accurate, unbiased real-time insights. Big Data solutions have a direct impact on, and are changing, the way organizations need to work, with help from AI and its components, Machine Learning (ML) and Deep Learning (DL). In this article, we discuss these topics.

Key words: Artificial intelligence, resilience system, machine learning, deep learning, big data.

1. Introduction

With today's growth of modern technology and the world of robotics, significant momentum is driving the next generation of these robots, which we now know as Artificial Intelligence (AI). This new generation is attracting tremendous attention from scientists and engineers, who are eager to move it on to a generation that is smarter and more cognitive, which we now call Super Artificial Intelligence (SAI).

Every day, businesses face vast volumes of data. Unlike before, processing this amount of data is beyond Master Data Management (MDM); it has reached a level that we know as Big Data (BD), which moves around at the speed of the Internet. Since the daily operations of any organization or enterprise are expanding with the Internet of Things (IoT), the data they deal with, whether structured or unstructured, is growing at the same speed, and the processing needed to extract the right information for the proper knowledge is growing accordingly.

Corresponding author: Bahman Zohuri, Ph.D., Associate Research Professor, research fields: electrical and computer engineering. E-mail: zohurib@unm.edu.

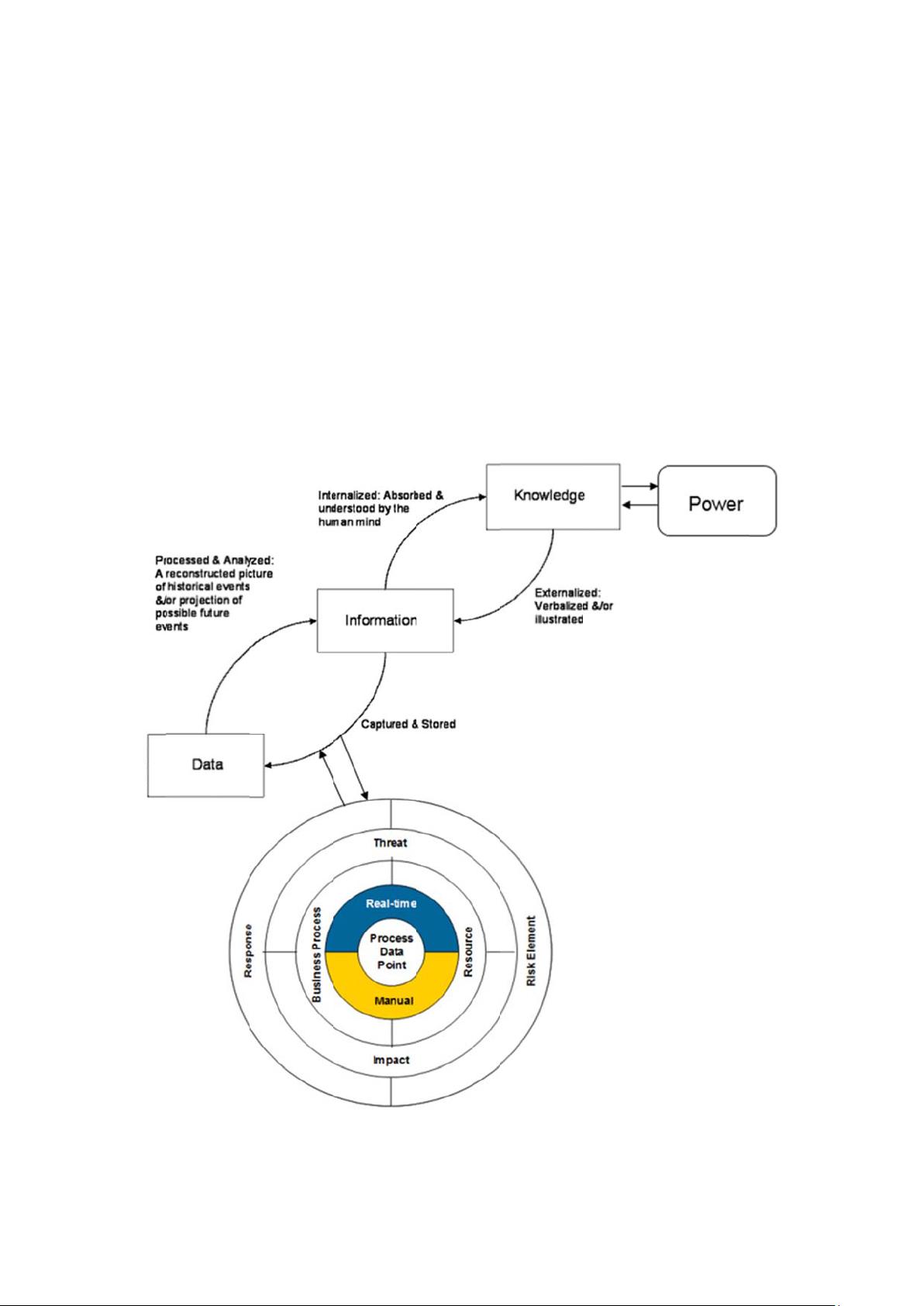

Given the demand for the power to make decisions with minimum risk, based on knowledge of information drawn from data accumulated in a Big Data repository (see Fig. 1) [1], we need real-time processing of the data coming to us from every direction. These data are centered on the BD repository and are deposited at the speed of the Internet, driven mainly by the IoT.

Thus, at this stage, we need to understand what Big Data (BD) is and why it matters when we bring AI into play.

Before turning to real-time processing of data and the infrastructure around it, we note that Big Data is a term that describes the large volume of data, both structured and unstructured, that inundates a business on a day-to-day basis. Big data usually has three characteristics: volume (the amount of data), velocity (the rate at which the data is received), and variety (the types of data).

Each of these three characteristics is briefly defined here:

1) Volume: Organizations collect data from a variety of sources, including business transactions, social media, and information from sensor or machine-to-machine data. In the past, storing it would have been a problem, but new technologies (such as Hadoop) have eased the burden.

2) Velocity: Data streams in at an unprecedented speed and must be dealt with in a timely manner. RFID tags, sensors, and smart metering are driving the need to deal with torrents of data in near-real-time.

3) Variety: Data comes in all types of formats, from structured, numeric data in traditional databases to unstructured text documents, email, video, audio, stock ticker data, and financial transactions.

Some companies, such as SAS, consider two additional dimensions when it comes to big data:

1) Variability: In addition to the increasing velocities and varieties of data, data flows can be highly inconsistent, with periodic peaks. Is something trending in social media? Daily, seasonal, and event-triggered peak data loads can be challenging to manage, even more so with unstructured data.

2) Complexity: Today's data comes from multiple sources, which makes it difficult to link, match, cleanse, and transform data across systems. However, it is necessary to connect and correlate relationships, hierarchies, and multiple data linkages, or your data can quickly spiral out of control.
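To make the dimensions above concrete, the following minimal Python sketch summarizes a batch of incoming records by volume, velocity, variety, and variability. It is only an illustration: the `Record` fields, the `profile` function, the one-second window, and the peak-to-mean ratio used as a variability measure are all assumptions introduced here, not definitions taken from SAS or from this paper. Complexity is left out because it does not reduce to a single number.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Record:
    """One incoming data item; the fields are illustrative assumptions."""
    timestamp: float   # arrival time in seconds
    fmt: str           # e.g. "sql_row", "email", "video"
    size_bytes: int


def profile(records, window_s=1.0):
    """Summarise a batch by volume, velocity, variety, and variability."""
    if not records:
        raise ValueError("need at least one record")
    times = [r.timestamp for r in records]
    start = min(times)
    span = max(times) - start
    # Bucket arrivals into fixed windows so that periodic peaks show up.
    per_window = Counter(int((t - start) // window_s) for t in times)
    n_windows = int(span // window_s) + 1
    mean_rate = len(records) / n_windows
    return {
        "volume_bytes": sum(r.size_bytes for r in records),   # volume
        "velocity_per_window": mean_rate,                     # velocity
        "variety": sorted({r.fmt for r in records}),          # variety
        "peak_to_mean": max(per_window.values()) / mean_rate, # variability
    }


if __name__ == "__main__":
    batch = [
        Record(0.0, "sql_row", 100),
        Record(0.2, "email", 2_000),
        Record(0.4, "email", 1_500),
        Record(2.5, "video", 1_000_000),
    ]
    print(profile(batch))
```

A peak-to-mean ratio well above 1 indicates the bursty, event-triggered loads described under Variability, while a ratio close to 1 indicates a steady stream.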

In a nutshell, Big Data is one of the essential concepts of our time. It is a phenomenon that has taken the world by storm. Data is growing at a compound annual growth rate of almost 60%. According to studies, 70% of that vast data is unstructured; examples of unstructured data are video files and data related to social media. A look at the diversity of the data itself tells the story and the challenges associated with it. Big Data is a burning topic in IT and the business world. Different people have different views and opinions about it. However, everyone agrees that [2]:

- Big Data refers to a huge amount of unstructured or semi-structured data.

- Storing this mammoth detail is beyond the capacity of typical traditional database systems (relational databases).

- Legacy software tools are unable to capture, manage, and process Big Data.
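The growth figure quoted above can be turned into simple arithmetic. The sketch below takes the "almost 60%" compound annual growth rate at face value and assumes it stays constant, which is a simplification made here for illustration. The function names are hypothetical.

```python
import math


def growth_factor(cagr, years):
    """Multiplier on the starting data volume after `years` of compound growth."""
    return (1.0 + cagr) ** years


def doubling_time(cagr):
    """Years needed for the volume to double at a constant annual growth rate."""
    return math.log(2.0) / math.log(1.0 + cagr)


if __name__ == "__main__":
    cagr = 0.60  # the "almost 60%" figure from the text, assumed constant
    print(f"growth after 5 years: x{growth_factor(cagr, 5):.2f}")
    print(f"doubling time: {doubling_time(cagr):.2f} years")
```

Under that assumption, the volume of data grows a little more than tenfold in five years and doubles roughly every year and a half, which helps explain why storage sized for today's load is quickly outgrown.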

Big Data is a relative term, which depends on an organization's size. Big Data does not refer only to traditional data warehouses; it also includes operational data stores that can be used for real-time applications. Big Data is also about finding value in the available data, which is the key to success for a business. Companies try to understand their customers better so that they can come up with more targeted products and services for them.

To do this, they are increasingly adopting analytical techniques that utilize Artificial Intelligence (AI), driven by Machine Learning (ML), which in turn is driven by Deep Learning (DL), to analyze larger sets of data.

AI, ML, and DL are defined in short form further down in this paper; their details are beyond its scope.

To perform operations on data effectively, efficiently, and accurately, they are expanding their traditional data sets by integrating them with social media data, text analytics, and sensor data to get a complete understanding of customers' behavior.
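As a rough illustration of the integration described above, the following Python sketch groups records from three sources into one view per customer. It assumes that every record carries a shared `customer_id` key. That key, the source names, and the `integrate` function are hypothetical and are not taken from the paper; real integration would also need the matching and cleansing steps mentioned under Complexity.

```python
from collections import defaultdict


def integrate(transactions, social_posts, sensor_readings):
    """Group records from three sources under a shared customer id (assumed key)."""
    view = defaultdict(lambda: {"transactions": [], "social": [], "sensor": []})
    sources = (
        ("transactions", transactions),
        ("social", social_posts),
        ("sensor", sensor_readings),
    )
    for source, rows in sources:
        for row in rows:
            # Each row is a dict; rows without the assumed key raise KeyError.
            view[row["customer_id"]][source].append(row)
    return dict(view)


if __name__ == "__main__":
    merged = integrate(
        transactions=[{"customer_id": 7, "amount": 42.0}],
        social_posts=[{"customer_id": 7, "text": "great service"}],
        sensor_readings=[{"customer_id": 9, "store_visits": 3}],
    )
    for customer, data in merged.items():
        print(customer, data)
```

Keeping the sources in separate lists under each customer preserves where each record came from, so later analysis can still tell structured transaction rows apart from unstructured social media text.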

However, it is not the amount of data that is important. It is what organizations do with the data that matters. Big data can be analyzed for insights that lead to better decisions and strategic business moves. The fact is that in today's volatile environment, every major organization needs the ability to adapt quickly to disruption while maintaining its operation. That is why collecting, housing, and analyzing data, and using the results of that analysis, can give us the power to make a more informed decision and implement it in a timely manner.

The above statement, holistically presented in Fig. 1, was partially originated by Anthony Liew [1] of Walden University in his article entitled “Understanding Data, Information, Knowledge and Their Inter-Relationships” and was completed by the first two authors of this article in volume I of their book, titled “A Model to Forecast Future Paradigm, Knowledge Is Power in Four Dimensions” [2].

In contrast, handling the sheer volume of data at the level of Big Data, and being able to process these data for the right information in near real-time if not real-time, requires a smart tool that is known as Artificial Intelligence (AI).

Artificial intelligence (AI) is a set of technologies and building blocks, all utilizing data to unlock intelligent value across industries and businesses. It is being scaled across sectors at an enterprise level. By gathering and learning from data, cognitive systems can spot trends and provide insights to enhance workflows, response times, and client experiences and to reduce the cost of operation.

Fig. 1 Depiction of data, information, and knowledge is power in four dimensions [1].
